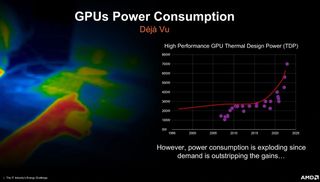

AMD Foresees GPUs With TDPs up to 700W by 2025

The company still aims to achieve its 30x25 goal by that time.

Sam Naffziger, AMD senior vice president, corporate fellow and product technology architect, recently took part in a wide-ranging interview with VentureBeat. Interestingly, AMD expects high-performance GPUs with TDPs as high as 700W to emerge before 2025. Naffziger's specialty, however, is leading efficiency technologies. With the success of AMD's 25x20 goal (a 25-fold improvement in mobile processor energy efficiency between 2014 and 2020) behind him, he is now working on the ambitious 30x25 project, which targets a 30-fold gain in energy efficiency for AMD's EPYC CPUs and Instinct accelerators in AI training and HPC between 2020 and 2025.

Naffziger has been at AMD for 16 years, and has been embedded within the graphics division since 2017. He had some very interesting things to say about boosting the efficiency of Radeon GPUs via developments in the RDNA architecture, as well as the hand-in-glove development of the CDNA architecture for the data center.

The above chart isn’t specifically discussed in the interview, but making GPUs more efficient is. Given this lack of context, we read the chart as AMD’s warning about where the industry is heading, rather than a prediction for its own GPUs. Naffziger reveals some of the innovative ways in which AMD has kept its GPU TDPs from ballooning, and from the interview it sounds like AMD still has some tricks up its sleeve for RDNA 3 and RDNA 4.

Much has been written about AMD’s transition from RDNA 1 to RDNA 2, which arrived with the Radeon RX 6000 series. In the VentureBeat interview, Naffziger provides some useful background on this generational change. He says that the “doubling of performance and a 50% gain in performance-per-watt” came largely from applying lessons learned in CPU design to reach clocks above 2.5GHz at modest voltages. AMD also bet on narrower memory buses paired with a large cache, specifically Infinity Cache, which turned out to be “a great fit for graphics.”

For RDNA 3, Naffziger asserts that “We’re not going to let our momentum slow at all in the efficiency gains.” AMD is again targeting a 50% performance-per-watt improvement. The company will continue to apply its CPU know-how to its GPUs, and this includes chiplet designs: RDNA 3 GPUs built on 5nm chiplets are due later this year. Naffziger notes that Intel is already heavily invested in chiplet-based GPUs, as Ponte Vecchio shows, but that Nvidia has shown no sign of making the jump yet. On competitors, the AMD fellow added: “Our competitors either have good CPUs or good GPUs, but nobody has both, at least not yet.”

For more information about the upcoming Radeon RX 7000 series GPUs, please check our RDNA 3 deep dive article based on information from the AMD financial analyst day this June.


Mark Tyson is a Freelance News Writer at Tom's Hardware US. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


-Fran- Well, AMD is clearly wrong.

nVidia is rumored to release a 600W card already, so 100W in 3 more years looks rather small. Plus, I'm sure they'll feel compelled to increase the power if nVidia increases the power just to get a slight advantage.

Overall, this "MOAR power" tendency from all camps is just bad for everyone. Ugh...

Regards.

thestryker Power consumption is certainly out of hand in the GPU space, and I think Ampere is the worst example I've seen. The architecture is very efficient at lower clock speeds, but to maintain margins they've clocked the chips so high that all of those gains are thrown away. We need a generation like the GTX 600 series, which basically served as a reset on power consumption.

King_V Part of me is giggling at the cartoonish thought of AMD saying "yeah, let's just say we expect our GPUs to hit outrageous power consumption too. Then Nvidia will keep going that route while we make ours more efficient, and they'll have no idea what we're up to."

Realistic? Probably not. But sometimes the cartoonish-absurdity interpretation is fun.

saunupe1911 GPUs will be bundled with their own generators by 2030 while Nvidia patents small nuclear batteries.

salgado18 What worries me is game developers creating content for these power-hungry GPUs, then forcing all of us to buy them to play their games. If the top GPUs double their consumption, the lower-tier ones will increase too, and suddenly more modest cards won't handle the extra quality of future games.

I hope it's not a trend, and that users feel constrained by cooling, PSU wattage and cost, energy, heat, etc., and don't feed this nonsense.

PiranhaTech Add a higher-end CPU and that will be like running a small toaster oven while gaming.

I prefer PC gaming, but man, this is making me glad that game consoles often have that 150-300W limit. Hopefully that can help keep games playable on a GPU that draws 200W or less.

DougMcC
> -Fran- said: Well, AMD is clearly wrong.
>
> nVidia is rumored to release a 600W card already, so 100W in 3 more years looks rather small. Plus, I'm sure they'll feel compelled to increase the power if nVidia increases the power just to get a slight advantage.
>
> Overall, this "MOAR power" tendency from all camps is just bad for everyone. Ugh...
>
> Regards.

They are rapidly closing in on the maximum power a US wall outlet can deliver. If the CPU and board take 300W, the GPU takes 700W and losses take 100W, you're left with only 400W of headroom.

artk2219
> saunupe1911 said: GPUs will be bundled with their own generators by 2030 while Nvidia patents small nuclear batteries.

If I were a company and had to choose between making GPUs or developing a safe, clean, small nuclear battery that could fit inside a computer without being a hazard, I would go for the nuclear battery every time. Not everyone needs a GPU, but holy cow, a safe nuclear battery would revolutionize so many fields. Need 3kW of continuous power from a device roughly the size of a piece of toast? We've got you covered. Please make your checks payable to Jensen Hua... umm, ahem, I mean Nvidia Corporation.

artk2219
> DougMcC said: They are rapidly closing in on the maximum power a US wall outlet can deliver. If the CPU and board take 300W, the GPU takes 700W and losses take 100W, you're left with only 400W of headroom.

Eh, a standard NEMA 5-15 outlet can handle 1,875 watts (125V x 15A), so there's still plenty of headroom as long as you don't have a bunch of things on the same circuit. Either way, you're not wrong that that's a lot of power for a high-end desktop; it's pulling into straight-up toaster or electric space heater territory.
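As a rough check on the wall-outlet arithmetic in this exchange, here is a minimal Python sketch. The component loads are DougMcC's hypothetical figures and the 125V x 15A rating is the one artk2219 cites; the 120V nominal voltage and the 80% continuous-load factor are assumptions added for illustration, not figures from the thread.

```python
# Rough desktop power budget on a single US 15 A branch circuit.
# Component loads are the hypothetical figures quoted in the thread;
# the nominal voltage and 80% continuous-load factor are assumptions.

BREAKER_AMPS = 15
RATED_VOLTS = 125          # NEMA 5-15 receptacle rating, as cited in the thread
NOMINAL_VOLTS = 120        # typical US line voltage (assumption)
CONTINUOUS_FACTOR = 0.8    # common 80% guideline for continuous loads (assumption)

loads_w = {"cpu_and_board": 300, "gpu": 700, "psu_losses": 100}


def headroom(limit_w: float, loads: dict) -> float:
    """Return the watts left on the circuit after the listed loads."""
    return limit_w - sum(loads.values())


limits_w = {
    "receptacle rating (125 V x 15 A)": RATED_VOLTS * BREAKER_AMPS,
    "nominal (120 V x 15 A)": NOMINAL_VOLTS * BREAKER_AMPS,
    "continuous (80% of nominal)": CONTINUOUS_FACTOR * NOMINAL_VOLTS * BREAKER_AMPS,
}

for name, limit in limits_w.items():
    print(f"{name}: {limit:.0f} W limit, {headroom(limit, loads_w):.0f} W headroom")
```

DougMcC's 400W figure corresponds to a ceiling of about 1,500W (`headroom(1500, loads_w)` returns 400 with these loads), so how much room is left depends mostly on which limit you treat as the ceiling.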

King_V
> artk2219 said: If I were a company and had to choose between making GPUs or developing a safe, clean, small nuclear battery that could fit inside a computer without being a hazard, I would go for the nuclear battery every time. Not everyone needs a GPU, but holy cow, a safe nuclear battery would revolutionize so many fields. Need 3kW of continuous power from a device roughly the size of a piece of toast? We've got you covered. Please make your checks payable to Jensen Hua... umm, ahem, I mean Nvidia Corporation.

I mean, of course the first thing that came to mind was the Fallout universe. And now I'm picturing Vault Boy working away at a nuclear-powered PC and starting to glow as he gets into his gaming.
