Intel's 1500W TDP for Falcon Shores AI processor confirmed — next-gen AI chip consumes more power than Nvidia's B200

It's getting hot in here.

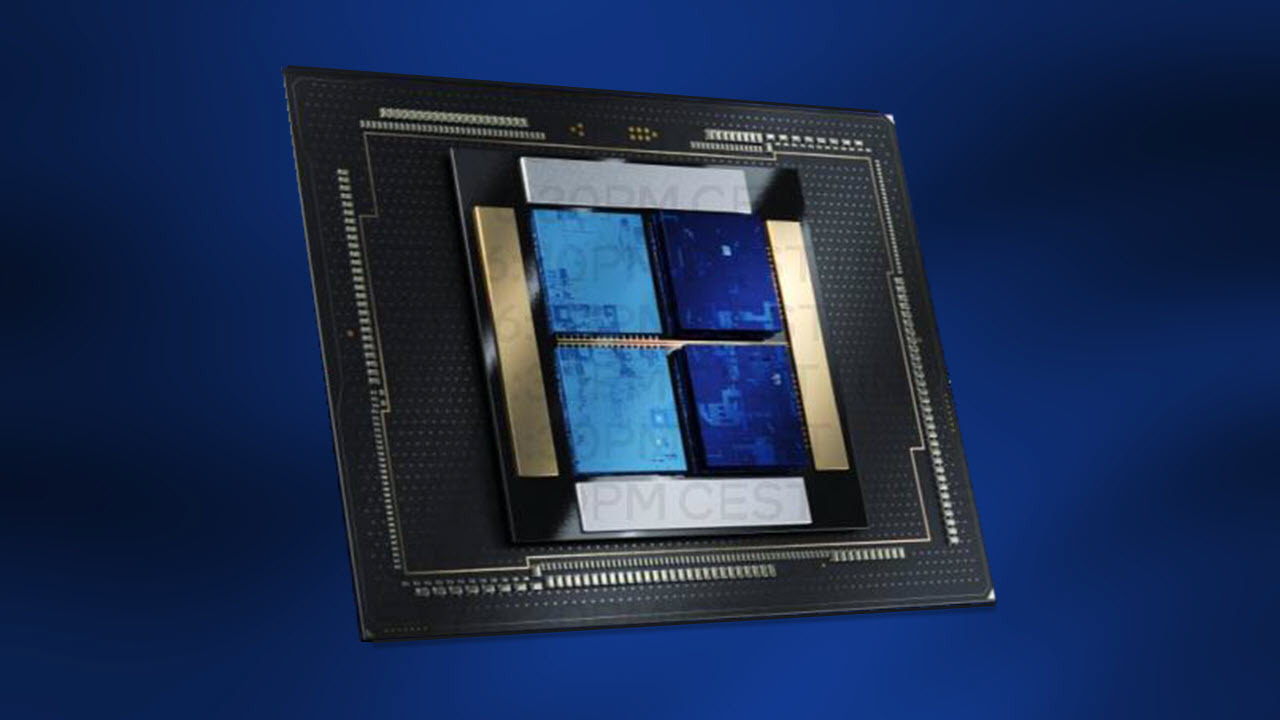

Intel's codenamed Falcon Shores hybrid processor combines x86 and Xe GPU cores to offer formidable performance for AI and HPC workloads, but it will consume an extreme 1500W of power, reports ComputerBase.de, citing a comment by Intel. Such extreme power consumption will require Intel to use advanced cooling methods.

Intel's Falcon Shores will be a multi-tile processor featuring both x86 cores (tiles) for general-purpose processing and Xe cores (tiles) for highly parallel AI and HPC workloads. Intel itself once said that it would offer five times higher performance per watt and five times higher memory capacity and bandwidth compared to its 2022 products while also offering a 'simplified' programming model.

The company still hasn't revealed detailed performance expectations for its Falcon Shores processors. To feed Falcon Shores, Intel will probably have to use proprietary modules (or promote a new OAM specification), as even the latest OAM specification (OAM 2.0), which features a new high-power connector, can only support power levels of around 1,000W. Even the power consumption of Nvidia's B200 won't exceed 1,200W.

Cooling a 1,500W processor is another matter. Some of Intel's partners may use conventional liquid cooling, while others may opt for liquid immersion cooling, a technology that Intel has been promoting for several years now.

Intel seems to be pinning a lot of hope on its Falcon Shores processor. Although Intel's Gaudi 3 is significantly more powerful than its predecessors, and Intel expects the industry to adopt this accelerator for various uses, the company seems somewhat cautious about the product's success. This is perhaps because its expectations for its Falcon Shores processor are considerably higher.

Considering that by the time Falcon Shores arrives in 2025, there will be more software developers familiar with Xe architecture for supercomputers, the adoption of Falcon Shores will likely be relatively smooth for a new product.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

Metal Messiah.

Intel's codenamed Falcon Shores hybrid processor combines x86 and Xe GPU cores to offer formidable performance for AI and HPC workloads,

Intel's Falcon Shores will be a multi-tile processor featuring both x86 cores (tiles) for general-purpose processing and Xe cores (tiles) for highly parallel AI and HPC workloads.

Uh ?

Didn't Intel later downgrade and redesign the product as a GPU-only design? So it can now take the best of Intel's Gaudi AI accelerators, combined with the next-gen Xe graphics architecture, for HPC and other compute-heavy workloads.

Instead of CPU, GPU, and CPU+GPU configurations, we're just getting a GPU.

Metal Messiah.

But anyway, Intel hasn't fully confirmed whether we'll see x86 cores implemented in the future, though. Plans might change, but for now we can only expect a GPU-only design IMO.

But if we look at the current market trend, most customers are now interested in discrete GPUs and AI chips, which is why the company has also prioritized the Gaudi AI processor. This whole "generative AI" hype/trend has also dried up demand for the original Falcon Shores, which was supposed to integrate both GPU and CPU on a single chip.

Also, the current computing environment is not yet mature enough to achieve the initial goal of mixing CPU and GPU cores in the same Falcon Shores package. The "decoupling" of CPU and GPU could also provide more options for customers with different workloads.

I mean the move might allow more customers to logically use a variety of different CPUs, including AMD's x86 and Nvidia's Arm chips, as well as their GPU designs, and therefore does not limit them to only Intel's x86 cores.

That's unlike AMD's Instinct MI300 and NVIDIA's Grace Hopper offerings, which might tie customers' product designs to vendor solution configurations to a greater degree. But these have their own advantages and disadvantages.

Btw, that's some insane power consumption figure, 1500+ watts! Someone needs to regulate and stop this.

CmdrShepard

I think this is the point where environmental agencies and regulatory bodies should intervene and set a power cap so they are all forced to start innovating on power efficiency again.

I know this is for datacenters / HPC, but consumer desktop stuff is dangerously edging towards this sort of environmentally unfriendly power consumption and dissipation. The reason is exactly that it is too costly for AMD / Intel / NVIDIA to make two entirely different products for those two market segments, so the HPC market is now negatively impacting the consumer market.

jp7189

Metal Messiah. said: Btw, that's some insane power consumption figure, 1500+ Watt ! Someone needs to regulate and stop this.

Let's hope regulators don't get involved. This is an area that will regulate itself. Generally, the bigger and more power hungry a single module, the more energy efficient the overall system will be. What's better, a 1500W module in 1 server or 5 servers with 300W modules? Yes, that simplistic example ignores a lot of nuance, but the general point is sound.
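jp7189's comparison of one 1500W module against five 300W modules can be made concrete with a rough tally. This is only an illustrative sketch: the 200 W per-server overhead (host CPU, fans, power-supply losses) is an invented figure, not something from the article or the thread, and `total_power` is a hypothetical helper.

```python
def total_power(modules: int, module_watts: float,
                servers: int, overhead_watts_per_server: float) -> float:
    """Accelerator power plus a fixed overhead for every server chassis."""
    return modules * module_watts + servers * overhead_watts_per_server


# ASSUMPTION: hypothetical per-server overhead, chosen only for illustration.
ASSUMED_OVERHEAD_W = 200.0

# Same 1500 W of accelerator power, packaged two different ways.
one_big = total_power(modules=1, module_watts=1500, servers=1,
                      overhead_watts_per_server=ASSUMED_OVERHEAD_W)
five_small = total_power(modules=5, module_watts=300, servers=5,
                         overhead_watts_per_server=ASSUMED_OVERHEAD_W)

print(f"1 server  x 1500 W module: {one_big:.0f} W total")    # 1700 W total
print(f"5 servers x  300 W module: {five_small:.0f} W total")  # 2500 W total
```

With any nonzero per-server overhead the consolidated setup draws less in total, which is the point being argued. The tally says nothing about how much work each configuration gets done per watt.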

bit_user

Metal Messiah. said: Instead of CPU, GPU, and CPU+GPU configurations, we're just getting a GPU.

Yup. That's what I was going to say. The heterogeneous version was either delayed or canceled.

bit_user

Metal Messiah. said: Also, current computing environment is not yet mature enough to achieve the initial goal of mixing CPU and GPU cores into the same Falcon Shores package. The "decoupling" of CPU and GPU could also provide more options for customers with different workloads.

Well, AMD and Nvidia are both doing it. AMD has the MI300A, while Nvidia has Grace + Hopper "superchips", which involves putting one of each on the same SXM board.

Metal Messiah. said: Btw, that's some insane power consumption figure, 1500+ Watt ! Someone needs to regulate and stop this.

The total power consumption doesn't tell you how efficient it is, and inefficiency is one major problem.

I think a better approach is to increase incentives for datacenters to reduce power consumption. That will encourage them to run power-hungry chips at lower clockspeeds, where they tend to be more efficient, and probably focus on buying more energy-efficient hardware than just the cheapest stuff.

Datacenter power consumption and cooling are already on track to become such big problems that governments probably won't even need to impose extra taxes or fines.

bit_user

CmdrShepard said: I know this is for datacenters / HPC, but consumer desktop stuff is dangerously edging towards this sort of environmentally unfriendly power consumption and dissipation and the reason is exactly because it is too costly for AMD / Intel / NVIDIA to make two entirely different products for those two market segments -- HPC market is now negatively impacting the consumer market.

I'm not sure you've thought that through sufficiently. AMD's EPYC Genoa uses like 380 W for 96 cores. That works out to about 4 W per core, which should see the 7950X pulling only 64 W.
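The per-core estimate in bit_user's reply is simple enough to check directly. The sketch below uses only the figures quoted in that comment (380 W, 96 cores) plus the 7950X's 16 cores; the function names are made up for illustration. It assumes power scales linearly with core count at server clocks and ignores I/O-die and other shared power.

```python
# Figures as quoted in the comment (approximate, not measured here).
EPYC_GENOA_WATTS = 380    # package power cited for a 96-core EPYC Genoa
EPYC_GENOA_CORES = 96
RYZEN_7950X_CORES = 16    # core count of the Ryzen 9 7950X


def watts_per_core(package_watts: float, cores: int) -> float:
    """Naive average: package power divided evenly across cores."""
    return package_watts / cores


def scaled_package_watts(per_core_watts: float, cores: int) -> float:
    """Scale a per-core figure to a chip with a different core count."""
    return per_core_watts * cores


per_core = watts_per_core(EPYC_GENOA_WATTS, EPYC_GENOA_CORES)
estimate = scaled_package_watts(per_core, RYZEN_7950X_CORES)

print(f"{per_core:.2f} W per core")       # 3.96 W per core
print(f"{estimate:.1f} W for 16 cores")   # 63.3 W for 16 cores
```

Rounding 3.96 W up to 4 W per core gives the 64 W mentioned in the comment. The estimate only holds if the desktop part runs at server-class clocks.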

Metal Messiah.

bit_user said: Well, AMD and Nvidia are both doing it. AMD has the MI300A, while Nvidia has Grace + Hopper "superchips", which involves putting one of each on the same SXM board. The total power consumption doesn't tell you how efficient it is, and inefficiency is one major problem.

Of course, we know that. The point was to mention why Intel is currently putting more effort into a GPU-only solution, despite AMD and Nvidia going the other route (and Intel not jumping on the bandwagon).

That's why "decoupling" of CPU and GPU might help in specific use cases.

Metal Messiah. said: Unlike AMD's Instinct MI300 and NVIDIA's Grace Hopper offerings, which might tie customers' product design to vendor solution configurations to a greater extent/degree though. But these have their own benefits and (dis)advantages.

Btw, I will talk about the power "efficiency" topic in a different comment. I knew efficiency was a factor, but I didn't mention it in my OP.

TerryLaze

bit_user said: I'm not sure you've thought that through sufficiently. AMD's EPYC Genoa uses like 380 W for 96 cores. That works out to about 4 W per core, which should see the 7950X pulling only 64 W.

It probably does when it runs at the same clocks that the server runs at.

"Overclocking" the 7950X to 5.1 GHz all core, down from the dynamic ~5.3-5.4 GHz that AMD is trying to get them to run at, already gets it down to 95 W average.

https://www.techpowerup.com/review/amd-ryzen-9-7950x/24.html

CmdrShepard

jp7189 said: This is an area that will regulate itself.

Please stop with this capitalism myth about greedy corporations regulating themselves -- it has never worked so far and it will never, ever work as long as greed is a factor.

bit_user said: I'm not sure you've thought that through sufficiently. AMD's EPYC Genoa uses like 380 W for 96 cores. That works out to about 4 W per core, which should see the 7950X pulling only 64 W.

I don't see how that's relevant when those cores run at 2.4 GHz (max all-core turbo 3.55 GHz), which is like ~0.6x of what desktop cores are clocked at.