AMD GPU Roadmap: RDNA 3 With 5nm GPU Chiplets Coming This Year

But how will they fit together and perform?

In its financial analyst briefing today, AMD shared its GPU roadmap along with some additional details on its upcoming RDNA 3 architecture. While the company unsurprisingly didn't go into a lot of detail, what it shared provides plenty of food for thought. AMD says its RDNA 3 chips will debut before the end of the year, at which point at least some of the new cards will likely find a spot on our best graphics cards list and help restructure the top of our GPU benchmarks hierarchy. Let's dig into the information AMD provided.

- AMD CPU Core Roadmap, 3nm Zen 5 by 2024, 4th-Gen Infinity Architecture

- AMD Zen 4 Ryzen 7000 Has 8–10% IPC Uplift, 35% Overall Performance Gain

- AMD CDNA 3 Roadmap: MI300 APU With 5X Performance/Watt

- AMD’s Data Center Roadmap: EPYC Genoa-X, Siena, and Turin

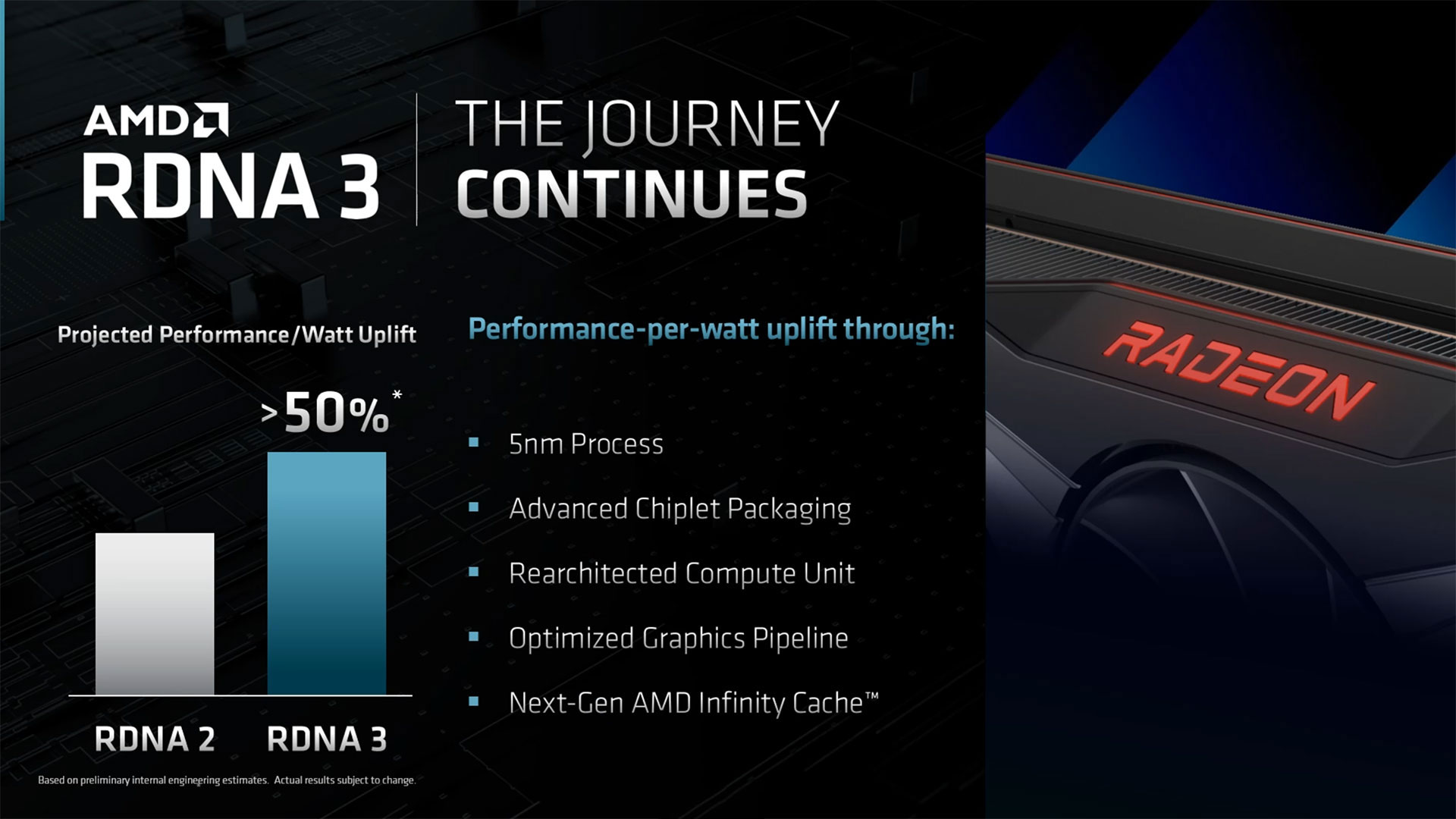

AMD says RDNA 3 is projected to provide more than a 50% uplift in performance per watt. As usual, that has to be taken with a large dose of skepticism: performance per watt varies along a chip's voltage and frequency curve, so the result depends heavily on which operating points and which products are being compared. AMD's RDNA 2 architecture was also supposedly a 50% improvement in performance per watt, and Nvidia claimed its Ampere architecture delivered 50% more performance per watt than its previous Turing architecture. While both claims were certainly true in some cases, many comparisons showed far slimmer gains.

For example, based on our performance and power testing, the RX 6900 XT is up to 112% faster than the RX 5700 XT and uses 44% more power. That represents a 48% increase in performance per watt (perf/watt), which is close enough to 50% that we won't argue the point. But other comparisons yield different results. The RX 6700 XT is about 32% faster than the RX 5700 XT while using basically the same amount of power, or, if you want a really bad example, the RX 6500 XT is 22% slower than the RX 5500 XT 8GB while using 29% less power. That's a net perf/watt improvement of just 10%.

The story is the same for Nvidia. The RTX 3080 is about 35% faster than an RTX 2080 Ti (at 4K) but uses 28% more power. That means raw perf/watt only improved by 5%. Alternatively, the RTX 3070 is about 23% faster than an RTX 2080 Super, and it uses 11% less power, nearly a 40% improvement in performance per watt. And to give one final example, the RTX 3060 is 33% faster than the RTX 2060 while using 7% more power, giving an overall 24% increase in performance per watt.
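The perf/watt figures in the last two paragraphs all come from one simple calculation: divide the relative performance by the relative power draw. Here's a minimal sketch of that arithmetic using the rounded percentages quoted above. The function name is our own, and because the inputs are rounded, the RX 6900 XT result lands at about 47% rather than the 48% we derived from the unrounded test data.

```python
def perf_per_watt_change(perf_ratio: float, power_ratio: float) -> float:
    """Return the percent change in performance per watt.

    perf_ratio:  new card's performance relative to the old card (1.32 = 32% faster)
    power_ratio: new card's power draw relative to the old card (0.89 = 11% less power)
    """
    return (perf_ratio / power_ratio - 1.0) * 100.0


# (comparison, relative performance, relative power), using the rounded figures above
comparisons = [
    ("RX 6900 XT vs RX 5700 XT", 2.12, 1.44),
    ("RX 6700 XT vs RX 5700 XT", 1.32, 1.00),
    ("RX 6500 XT vs RX 5500 XT 8GB", 0.78, 0.71),
    ("RTX 3080 vs RTX 2080 Ti", 1.35, 1.28),
    ("RTX 3070 vs RTX 2080 Super", 1.23, 0.89),
    ("RTX 3060 vs RTX 2060", 1.33, 1.07),
]

for name, perf, power in comparisons:
    # Print the perf/watt change rounded to the nearest percent
    print(f"{name}: {perf_per_watt_change(perf, power):+.0f}% perf/watt")
```

The takeaway is the spread: the same generational jump yields anything from about +5% to nearly +50% depending on which pair of cards you line up.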

The point is, AMD's claims of 50% higher performance per watt are a best-case scenario. It could mean RDNA 3 is 50% faster while using the same power as RDNA 2, or it could mean it's the same performance while using 33% less power. Generally speaking, actual products are likely to land between those two extremes, and it's a safe bet that not every comparison will show a 50% improvement in performance per watt.
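Those two extremes fall straight out of treating a 1.5x perf/watt gain as a ratio of relative performance to relative power. Writing $P$ for performance and $W$ for power draw (notation chosen here purely for illustration):

$$
\begin{aligned}
\frac{P_{\text{new}}/P_{\text{old}}}{W_{\text{new}}/W_{\text{old}}} &= 1.5 \\
\text{same power: } \frac{W_{\text{new}}}{W_{\text{old}}} = 1 \;&\Rightarrow\; \frac{P_{\text{new}}}{P_{\text{old}}} = 1.5 \\
\text{same performance: } \frac{P_{\text{new}}}{P_{\text{old}}} = 1 \;&\Rightarrow\; \frac{W_{\text{new}}}{W_{\text{old}}} = \frac{1}{1.5} \approx 0.667
\end{aligned}
$$

A power ratio of about 0.667 is the "33% less power" case; any real product that trades some of each sits on the curve between them.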

Other details for RDNA 3 consist of some things we've already assumed: It will use 5nm process technology (almost certainly TSMC N5 or N5P). It will also support "advanced multimedia capabilities," including AV1 encode/decode support. AMD said RDNA 3 includes DisplayPort 2.0 connectivity, which was already rumored.

The architecture also includes a reworked compute unit (CU), the main building block for AMD's RDNA GPUs, and we've heard quite a few rumors about what that entails. The most likely scenario is that AMD will do something similar to what Nvidia did with Turing and Ampere, adding more compute pipelines.

Turing has separate FP32 and INT32 pipelines, and Ampere added FP32 support to the INT32 pipeline, in effect potentially doubling the FP32 compute per streaming multiprocessor (SM), Nvidia's equivalent of the CU. If the rumors are correct and AMD takes a similar route, RDNA 3 will likely have a big jump in theoretical computational performance relative to RDNA 2. How that will affect actual performance remains to be seen.
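To see why an extra FP32-capable pipeline matters on paper, here's a rough sketch of how theoretical peak FP32 throughput is usually calculated: compute units × FP32 lanes per CU × 2 operations per fused multiply-add × clock speed. The RX 6900 XT inputs (80 CUs, 64 lanes per CU, roughly 2.25 GHz boost) are its published specs; the 128-lane CU is a purely hypothetical stand-in for an Ampere-style dual-FP32 design, not anything AMD has announced. Keep the caveat in mind: the RTX 3080 has more than double the paper FP32 rate of the RTX 2080 Ti, yet it's only about 35% faster at 4K in the figures above.

```python
def peak_fp32_tflops(compute_units: int, fp32_lanes_per_cu: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Each lane can issue one fused multiply-add (FMA) per clock,
    which counts as two floating-point operations.
    """
    flops_per_clock = compute_units * fp32_lanes_per_cu * 2
    return flops_per_clock * clock_ghz / 1000.0  # GFLOPS -> TFLOPS


# RX 6900 XT (RDNA 2): 80 CUs x 64 FP32 lanes = 5,120 shaders at ~2.25 GHz boost
rdna2 = peak_fp32_tflops(80, 64, 2.25)

# HYPOTHETICAL: a CU with twice the FP32 lanes (not an AMD spec), with the
# CU count and clock held constant to isolate the effect of the wider CU
doubled = peak_fp32_tflops(80, 128, 2.25)

print(f"RX 6900 XT (RDNA 2): {rdna2:.2f} TFLOPS")
print(f"Hypothetical dual-FP32 CU: {doubled:.2f} TFLOPS")
```

The paper number doubles, but real games also depend on scheduling, memory bandwidth, caches, and how often both pipelines can actually be kept busy.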

Not surprisingly, AMD also promises a next-generation Infinity Cache, which has proven to be quite effective in increasing overall performance on the various RDNA 2 GPUs. This could be a larger cache, or perhaps it will be optimized in other ways. Given other expected architectural updates, we do expect AMD will move its top-tier RDNA 3 GPU to a wider memory interface, probably 384-bit, but the company hasn't commented on this yet.

Building GPUs With Chiplets

Last and certainly not least is perhaps the most interesting aspect of RDNA 3. AMD says it will use advanced packaging technologies combined with a chiplet architecture. We've seen a somewhat similar move with the X670/X670E chipsets, and AMD has proven it can do some very interesting things with its CPU chiplet architecture. What this means for GPUs, however, isn't entirely clear.

One option would be to have a main hub with the memory interface, display and media capabilities, and other core hardware. Then the GPU chiplets would focus on raw compute and would link to the root hub through an Infinity Fabric. There could be one to perhaps four GPU chiplets, offering a great range of scalability. However, AMD would likely need multiple different root hubs with this approach, for the different product tiers, and that would increase cost and complexity.

What’s more likely is that AMD will have a base GPU chiplet that targets the midrange performance segment, and it will be able to link two and perhaps as many as four of these chiplets together via a high-speed interconnect. It would be sort of like SLI and CrossFire, but without having to do alternate frame rendering. The OS would only see one GPU, even if there are multiple chiplets, and the architecture would take care of the sharing of data between the chiplets.

AMD has already done something like this with its Aldebaran MI250X data center GPUs, and Intel seems to be taking a similar approach with its Xe-HPC designs. AMD also revealed details on its data center CDNA 3 GPUs that suggest those will have up to four GPU chiplets. However, those are compute-focused architectures rather than designs focused on real-time graphics, so there are different hurdles that will need to be overcome. Regardless, AMD has plenty of experience with chiplets, as it has been using multiple chips in a package since the first EPYC CPUs back in 2017, and chiplet designs since Zen 2 in 2019. It should have ample knowledge about how to make such a design work for GPUs as well.

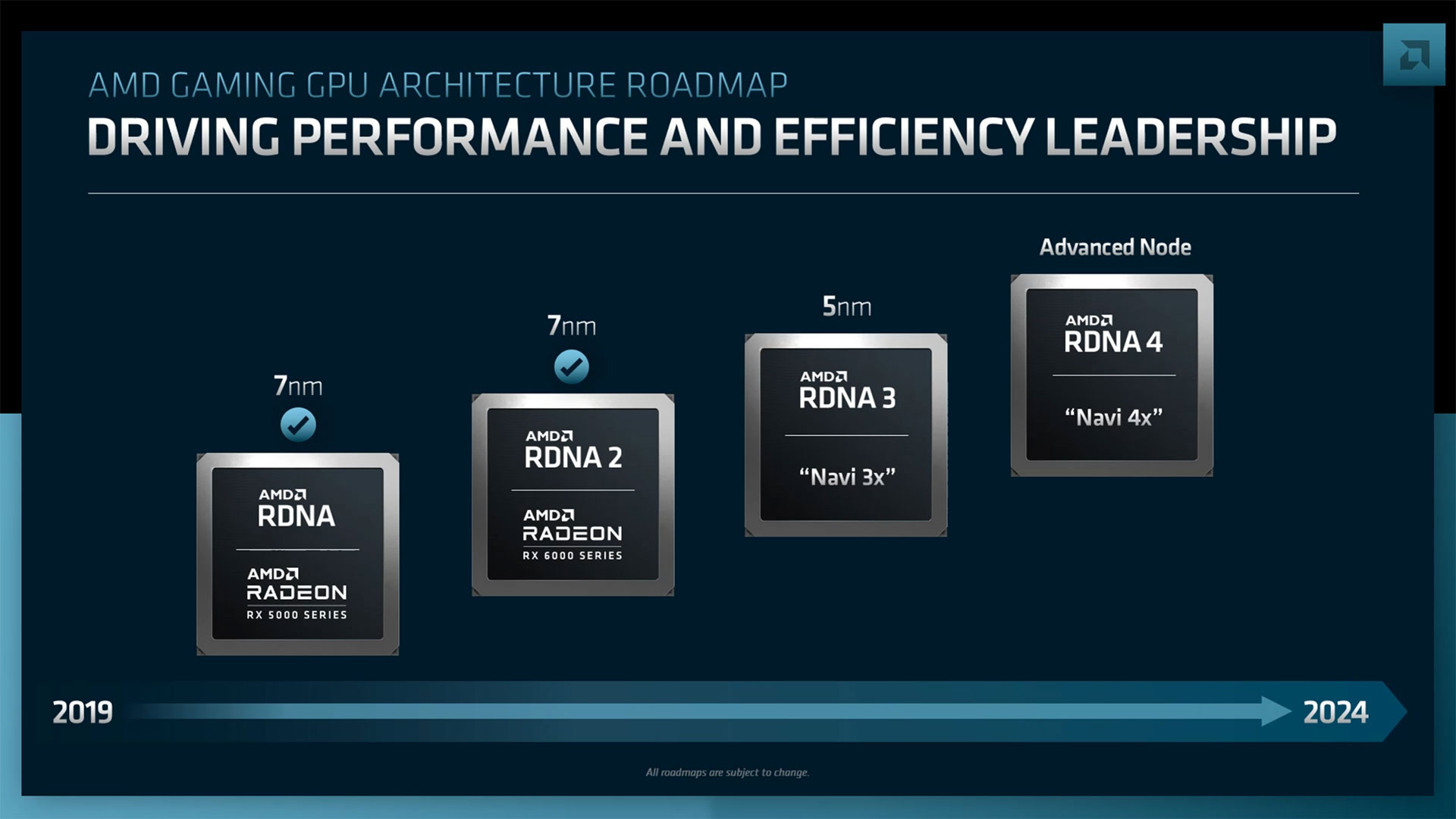

RDNA 4 in 2024, RDNA 3 This Year

Finally, AMD shared the above GPU roadmap, which officially shows RDNA 4 with a target release sometime before the end of 2024. As you can imagine, AMD didn't provide any other information on RDNA 4 beyond its existence on the roadmap and the "Navi 4x" naming scheme. It will likely use a 3nm process technology, or some equivalent with a different name. Based on previous launches, late 2024 is a safer bet than early 2024 for the actual launch.

AMD also reiterated its plans for a 2022 launch of RDNA 3, which is good to see, though it could be a very limited launch and still technically qualify. Hopefully AMD pushes out multiple graphics card tiers before the end of the year, but we'll have to wait and see. There's plenty we still don't know about RDNA 3, like core counts, clock speeds, and other architectural enhancements. AMD has another GPU family in its CDNA architecture, which we've covered separately, but so far there hasn't been a lot of overlap between RDNA and CDNA. The GPU cores might be similar, but RDNA has ray accelerators while CDNA includes matrix cores. RDNA 3 might follow the examples of Intel Arc and Nvidia RTX and include some form of tensor/matrix core, but AMD hasn't suggested any such changes so far.

We still have plenty of questions, chief among them being the actual launch date and actual performance for RDNA 3. We'd love to see AMD deliver a similar performance boost to what it brought with RDNA 2 (up to double the performance compared to RDNA), even if that means higher power consumption. Imagine if the base GPU chiplet could match the performance of an RX 6900 XT, and then AMD links two or more chiplets together to double or potentially quadruple performance. That would be awesome! But dreams are free, so until AMD details the exact architectural changes, we'll try to wait patiently.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

hotaru.hino My money's on them using the approach with Zen 3: one centralized I/O chip with as many chiplets as you need.

JarredWaltonGPU

I thought of this at first but then thought, no way, that would be a mess. Because then there would be an I/O chiplet for the high-end, mid-range, and low-end. Or maybe it's only on the high-end and might make more sense?

But I'm betting there will be a design where you get something like a 256-bit interface to GDDR6 from a single chip, with a big Infinity Fabric link to another chip. Or maybe 192-bit with a 128-bit wide interface to be used for interchip communications? I don't know, I suppose it depends on how far they scale. Will this be just a dual-chip solution, or will it scale up to three or four chips? MI250X and the images of MI300 make me think it will be up to 4-way, with each chip having some local memory and a wide link or two to another chip.

hotaru.hino

The only thing that keeps me from thinking they'll do something like glue two fully enabled GPUs together with a high-speed link to resolve the memory sharing issue is that rendering games is still a real-time application. Every other multi-chip setup I've seen runs tasks that aren't really real-time; it's just better if they run faster. Needing to marshal and share data tends to add latency, which will eat into the minimum frame time achievable.

I guess we'll just have to wait and see!

Bikki Apple is the first one on the scene with the M1 Ultra, gluing two GPUs together for everyday graphics. That and real-time ray tracing are the holy grail of the GPU world. If AMD can have 4x GPUs in one chip and 2x ray tracing, we may very well be living in the future this year.

eichwana Now to see if these will be scalped directly from AMD, and if they’ll actually be available.

We can hope for the best.

jp7189

I agree that a hub with a massive Infinity Cache that's shared by all GPU chiplets seems to make the most sense. I would also think the front end/scheduler would have to live there as well. The GPU chiplets would then just be much simpler things. There's also a lot of stuff you don't want to duplicate, like media decoders, display outputs, etc. I would expect all that to move to the hub.

On the flip side, I agree with what Jarred said. It might be hard to make it cost-effective on the low end to require a hub paired with a single GPU chiplet. If they do use identical chiplets without a hub, I think frame time consistency will be tough to manage, and the burden on the driver team would likely be much higher.

hotaru.hino

If we were to consider the chiplet strategy as using multiple chips to provide additional performance as a single product, then that honor goes to at least ATI with their Rage Fury MAXX. Though if you want to stretch this out further, a company did make Voodoo2 SLI on a single card.

Also, since you mentioned it, I did want to see how the M1 Ultra performs over the M1 Max. It looks like it has the same performance uplift as any other multi-GPU setup.

quilciri Devil's advocate regarding the 50% performance-per-watt improvement: AMD was really sandbagging a lot when presenting Zen 4 recently. How are you certain they're not doing the same here?

PiranhaTech GPU-wise, this might not do much and might actually lower the performance a bit. However, I could be wrong, since with the Ryzen 5000 series AMD got around the bottlenecks that earlier chiplet-based Ryzen CPUs had, so it could be a temporary hit. There's also a chance that it'll increase

Where it might matter more is the SoC game. This might make it easier to integrate Radeon into Ryzen. However, Ryzen isn't the only factor. What does this mean for ARM? There's an interconnect standard coming out, and if AMD can easily integrate Radeon into ARM, that can be a huge factor. Radeon graphics have already appeared in Samsung's ARM chips.

Okay, what if Microsoft wanted to do their own SoC for the next Xbox generation? AMD can still potentially offer Ryzen and Radeon.