AMD's Latest Patent Could be FidelityFX Super Resolution Blueprint

AMD's patent for super-resolution has been revealed

We might finally have an idea as to how AMD's FidelityFX Super Resolution (FSR) tech will work compared to Nvidia's competing DLSS solution. eternity@Beyond3D (via VideoCardz) has uncovered the patent filing for AMD's Gaming Super Resolution technology which shows details of how this specific tech is supposed to operate, and it looks like it could be the blueprint to AMD's long-awaited FidelityFX Super Resolution. Additionally, @Underfox3 grabbed a few images from Nvidia's DLSS patent for a head-to-head comparison, which you can see below.

Now that AMD's super resolution architecture patent has been published, it is possible to look into the work of making an architectural comparison with Nvidia DLSS and verify its different applicability and overall robustness. Image 1: AMD GSR. Image 2: Nvidia DLSS. pic.twitter.com/6N1XEvLVAt (May 20, 2021)

The premise of AMD's super-resolution technology is that it will produce a more accurate image than competing solutions (presumably DLSS) by combining linear and non-linear processing techniques.

The patent says that conventional super-resolution techniques that use deep learning, like Nvidia's DLSS, do not use non-linear information, which forces the AI network to make more educated guesses than necessary. This can result in reduced detail and lost color.

AMD claims that its Gaming Super Resolution (GSR), on the other hand, should preserve more of the original image's information while upscaling it and improving fidelity, thanks to linear and non-linear downsampling techniques, all without the need for deep learning.

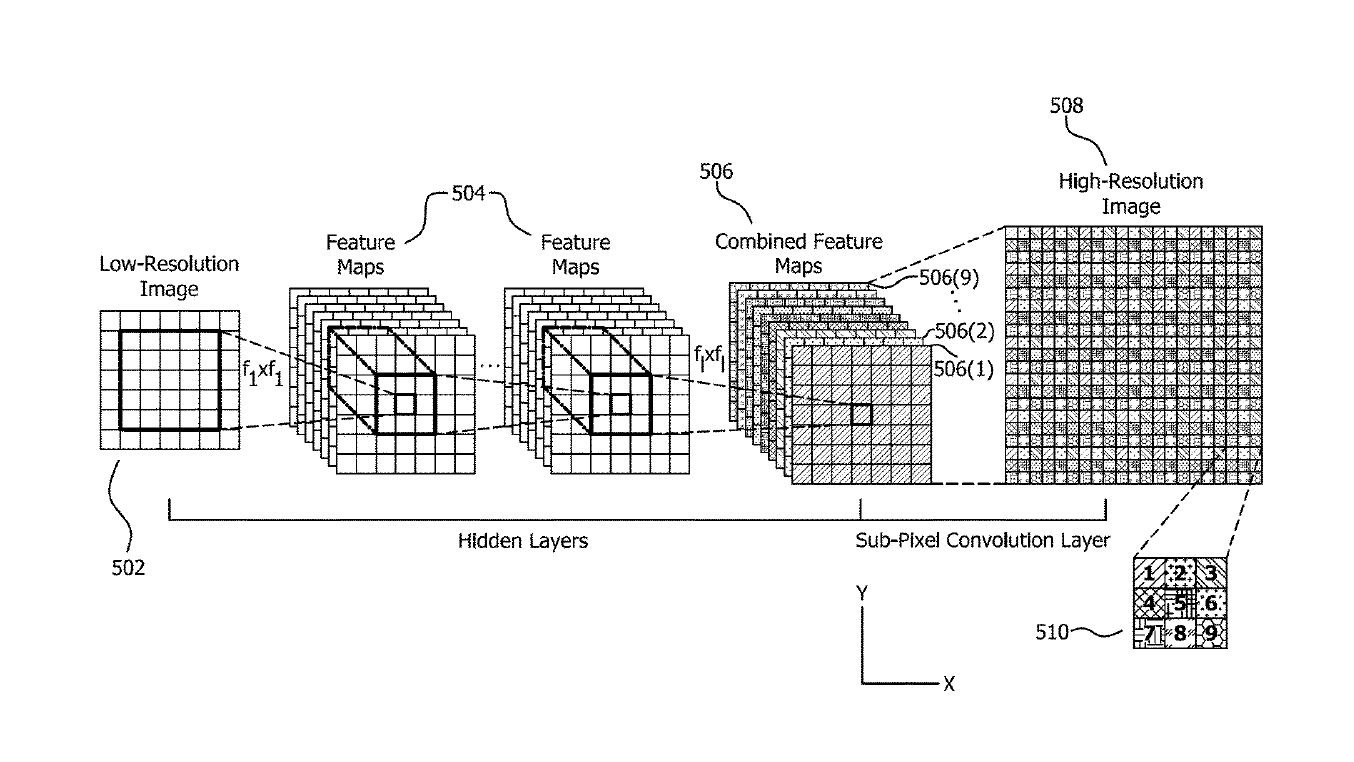

The process works by taking an input image, say at 1080p (1920 x 1080), and passing it through two downsampling layers: one is a linear downsampling network, and the other is a non-linear downsampling network. Once that is complete, the image (or images) gets chopped up into individual pixels and analyzed further, and GSR then reconstructs the image with extra detail and supersamples it beyond the original 1080p resolution.
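As a rough illustration of that flow, here is a toy sketch in Python. It assumes, beyond anything stated in the article, that the linear and non-linear paths each produce per-pixel feature maps and that the "chopping into pixels" step is a depth-to-space (pixel-shuffle) rearrangement. The function names, gains, and biases are hypothetical and are not taken from AMD's patent. For scale, a 2x factor per axis would turn 1920 x 1080 into 3840 x 2160, which needs four feature values per input pixel.

```python
from typing import List

Image = List[List[float]]  # grayscale image stored as rows of floats


def linear_feature(img: Image, gain: float, bias: float) -> Image:
    # Linear path (hypothetical): an affine map applied to every pixel.
    return [[gain * p + bias for p in row] for row in img]


def nonlinear_feature(img: Image, gain: float, bias: float) -> Image:
    # Non-linear path (hypothetical): the same affine map followed by a ReLU clamp.
    return [[max(0.0, gain * p + bias) for p in row] for row in img]


def pixel_shuffle(features: List[Image], r: int) -> Image:
    # Rearrange r*r feature maps of size H x W into one (H*r) x (W*r) image.
    if len(features) != r * r:
        raise ValueError("need exactly r*r feature maps")
    h, w = len(features[0]), len(features[0][0])
    out = [[0.0] * (w * r) for _ in range(h * r)]
    for y in range(h * r):
        for x in range(w * r):
            # The sub-pixel position (y % r, x % r) picks the feature map to read.
            c = (y % r) * r + (x % r)
            out[y][x] = features[c][y // r][x // r]
    return out


def toy_upscale(img: Image) -> Image:
    # Two linear and two non-linear maps give the four values needed for 2x.
    # All gains and biases are arbitrary illustration values, not learned weights.
    lin = [linear_feature(img, 1.0, 0.0), linear_feature(img, 0.5, 0.0)]
    nonlin = [nonlinear_feature(img, 1.0, -0.5), nonlinear_feature(img, -1.0, 0.5)]
    return pixel_shuffle(lin + nonlin, r=2)
```

Calling `toy_upscale` on an H x W image returns a 2H x 2W image. A real implementation would learn the weights and use spatial filters rather than per-pixel maps, so this only shows the shape of the data flow.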

Like DLSS, GSR can use multiple frames as a point of reference, further improving accuracy.
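To see why extra frames can help at all, consider the minimal sketch below. It is not the patent's method. It assumes an idealized static scene in which each low-resolution frame samples the scene at a known, distinct sub-pixel offset (jitter), and the function name and data layout are hypothetical.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]


def accumulate_jittered(
    frames: List[Image], offsets: List[Tuple[int, int]], r: int
) -> List[List[Optional[float]]]:
    # Scatter each low-res sample into an r-times larger grid at its jitter
    # offset (dy, dx), with 0 <= dy, dx < r, averaging samples that coincide.
    h, w = len(frames[0]), len(frames[0][0])
    total = [[0.0] * (w * r) for _ in range(h * r)]
    count = [[0] * (w * r) for _ in range(h * r)]
    for frame, (dy, dx) in zip(frames, offsets):
        for y in range(h):
            for x in range(w):
                total[y * r + dy][x * r + dx] += frame[y][x]
                count[y * r + dy][x * r + dx] += 1
    # Positions no frame ever sampled stay None; a real upscaler would fill them.
    return [
        [total[y][x] / count[y][x] if count[y][x] else None for x in range(w * r)]
        for y in range(h * r)
    ]
```

With four frames covering all four offsets of a 2x grid, every high-resolution pixel receives a real sample rather than a guess. Moving scenes would additionally require motion correction, which this sketch omits.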

What's great about this technology is that, if AMD's plans come to fruition, we could potentially see fidelity equal to or better than Nvidia's DLSS solution without requiring deep learning. This means that potentially any GPU, and even any CPU, could run GSR.


If all this is true, then AMD Gaming Super Resolution will be a serious competitor to Nvidia's DLSS solution, which only runs on RTX graphics cards.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

hotaru.hino: Curious, I decided to look up what "non-linear image scaling" means. I found a few hits, but this struck me the most: https://patents.google.com/patent/US6178272B1/en (titled: Non-linear and linear method of scale-up or scale-down image resolution conversion)

George421 (replying to hotaru.hino): Using multiple jittered (or consecutive motion-corrected) frames to create a higher-resolution image can work without any DL.

But upscaling a single frame? No matter how clever, in the end it will be just an interpolation.

Zero Silver: The issue I have with this is that AMD's GPUs, as of right now, don't have dedicated hardware for these calculations, so in the end you would have fewer cores rasterizing, since some of them would be running the calculations.

On CPUs, a user with an eight-core or larger CPU could potentially offload the calculations to cores not being used by the game, if that's possible, but those with six or fewer cores would be SOL.

That's my main concern with all of this: Nvidia has dedicated hardware to run the calculations, whereas this has to run on standard hardware that most likely won't be able to perform them as well. Time will prove my worries either right or wrong.