Hot Chips 2017: AMD Outlines Threadripper And EPYC's MCM Advantage, Claims 41% Cost Reduction

AMD Senior Fellow Kevin Lepak took to the stage at Hot Chips 2017 to explain the reasoning behind EPYC's MCM (Multi-Chip Module) design and to remind us that the company decided to use multiple die very early in the design process.

Intel presented the now-famous EPYC slide deck during its Purley server event. Intel claimed that AMD merely uses "glued together desktop die" for its EPYC data center chips, and that its multi-chip design, which also makes an appearance in the company's Threadripper models, suffers from poor latency and bandwidth that hamstring performance in critical workloads. Surprisingly, Barclays downgraded AMD's stock shortly thereafter, and AMD suffered a short-term stock slump as a result. Although Barclays didn't directly cite Intel's claims, the reasoning (and explanation) was eerily similar.

But AMD has tailored its design to address some of the challenges associated with an MCM architecture and claims that its design provides a 41% cost reduction compared to a single monolithic die. Let's dive in.

MCM Delivers A 41% Cost Reduction

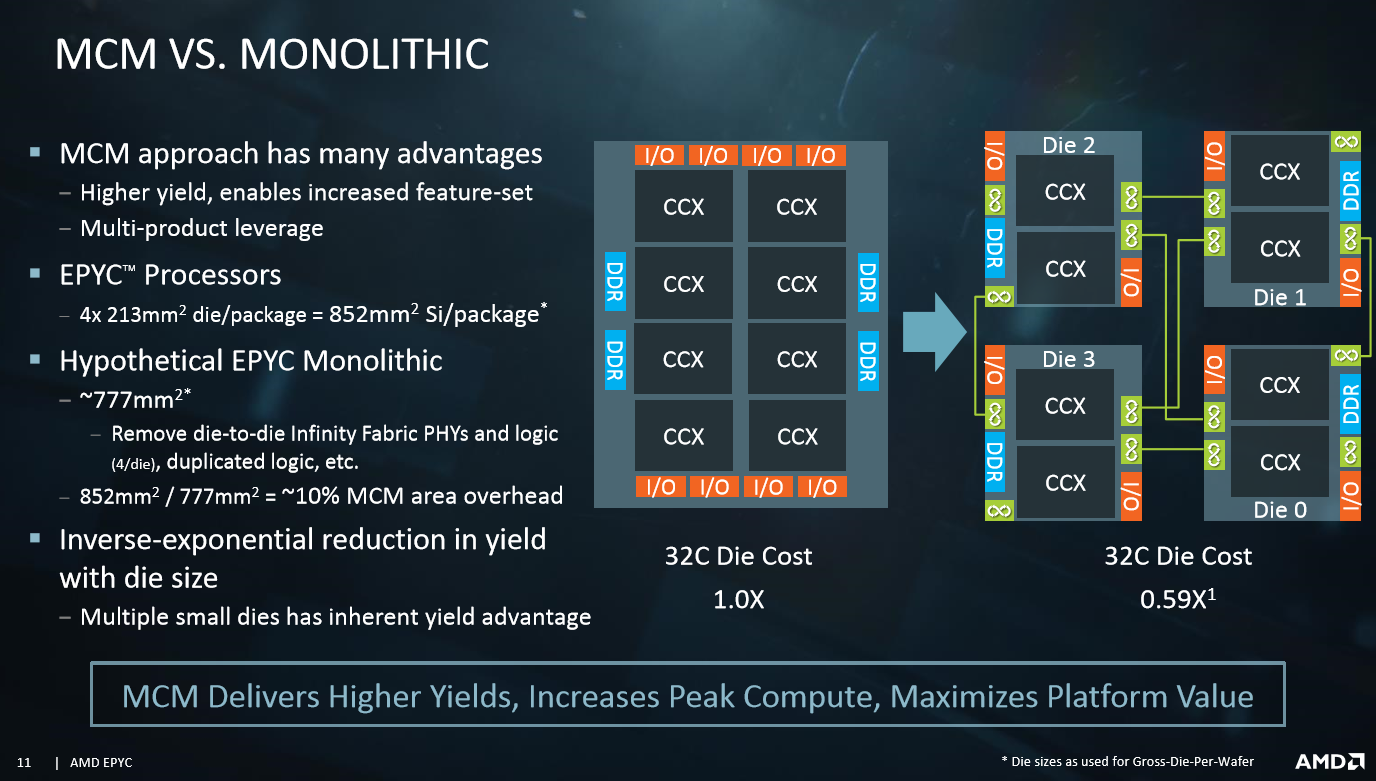

As we covered in our Threadripper 1950X review, AMD CEO Lisa Su tasked her team with developing a cutting-edge data center processor to challenge Intel's finest. The team realized early on that a single monolithic die couldn't deliver on the company's performance, memory, and I/O goals. Lepak revealed that the decision also stemmed from the company's cost projections. Lepak presented a mock-up of a monolithic EPYC processor and compared manufacturing costs between the two techniques.

AMD projects that a single monolithic EPYC die would weigh in at 777mm², whereas the four-die MCM requires 852mm² of total die area. AMD claims the roughly 10% die area overhead is relatively tame. The company specifically architected the Zeppelin die for an MCM arrangement, so it focused on reducing the die overhead of replicated components. For instance, each of the four Infinity Fabric links consumes only 2mm² of die area.
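To make the area comparison concrete, here is a minimal Python sketch of the arithmetic behind the overhead figure. The two area values are AMD's projections as quoted above; the function and variable names are our own, and the per-die figure assumes the MCM total is split evenly across four identical die.

```python
# Area figures quoted from AMD's Hot Chips projection.
MONOLITHIC_AREA_MM2 = 777   # hypothetical single-die EPYC
MCM_TOTAL_AREA_MM2 = 852    # four Zeppelin die combined
DIE_COUNT = 4


def area_overhead(mcm_area: float, monolithic_area: float) -> float:
    """Return the fractional extra silicon the MCM needs versus one big die."""
    return mcm_area / monolithic_area - 1.0


# Assumption: all four die are identical, so the total splits evenly.
per_die_area = MCM_TOTAL_AREA_MM2 / DIE_COUNT
overhead = area_overhead(MCM_TOTAL_AREA_MM2, MONOLITHIC_AREA_MM2)

print(f"Area per die: {per_die_area:.0f} mm²")   # 213 mm²
print(f"MCM area overhead: {overhead:.1%}")      # 9.7%
```

The result lands just under the 10% that AMD cites.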

Each of AMD's Zeppelin die contains memory, I/O, and SCH (Server Control Hub--similar to a Northbridge) controllers, but the company removed those redundant parts from its monolithic die cost projection. The company also removed the Infinity Fabric links, as those obviously aren't needed for a single chip.

It's rational to assume that the MCM's larger overall die area would result in higher costs, but AMD claims the design actually reduces cost by 41%. All die suffer from defects during manufacturing, but larger die are more susceptible. Smaller die produce better yields, thus reducing the costly impact of defects. AMD can configure around defects in the cores or cache by disabling the unit and using the die for lower-priced SKUs, but defects that fall into I/O lanes or other critical pathways are usually irreparable.
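The yield argument can be illustrated with a textbook Poisson defect model, in which the fraction of defect-free die falls exponentially with die area. This is only a sketch under stated assumptions: AMD did not disclose its yield model or defect density, so the `ASSUMED_DEFECT_DENSITY` value is hypothetical, and the model ignores die salvaging for lower-priced SKUs, wafer-edge losses, packaging, and test costs. It is not expected to reproduce AMD's 41% figure.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of die with zero defects under a simple Poisson model."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_package(die_area_mm2: float, die_count: int,
                             defects_per_cm2: float) -> float:
    """Wafer area (mm²) consumed, on average, per fully working package.

    Assumes each die is tested before packaging, so a defective die is
    discarded on its own instead of scrapping the whole package.
    """
    good_fraction = poisson_yield(die_area_mm2, defects_per_cm2)
    return die_count * die_area_mm2 / good_fraction


# Hypothetical value for illustration only; not an AMD or foundry figure.
ASSUMED_DEFECT_DENSITY = 0.1  # defects per cm²

monolithic = silicon_per_good_package(777, 1, ASSUMED_DEFECT_DENSITY)
mcm = silicon_per_good_package(852 / 4, 4, ASSUMED_DEFECT_DENSITY)
saving = 1.0 - mcm / monolithic

print(f"Monolithic: {monolithic:.0f} mm² of wafer per good part")
print(f"Four-die MCM: {mcm:.0f} mm² of wafer per good package")
print(f"Relative silicon saving: {saving:.0%}")
```

With a defect density of zero the MCM is strictly more expensive, because it uses more silicon. The advantage appears only once defects are in play and grows as the defect density rises.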


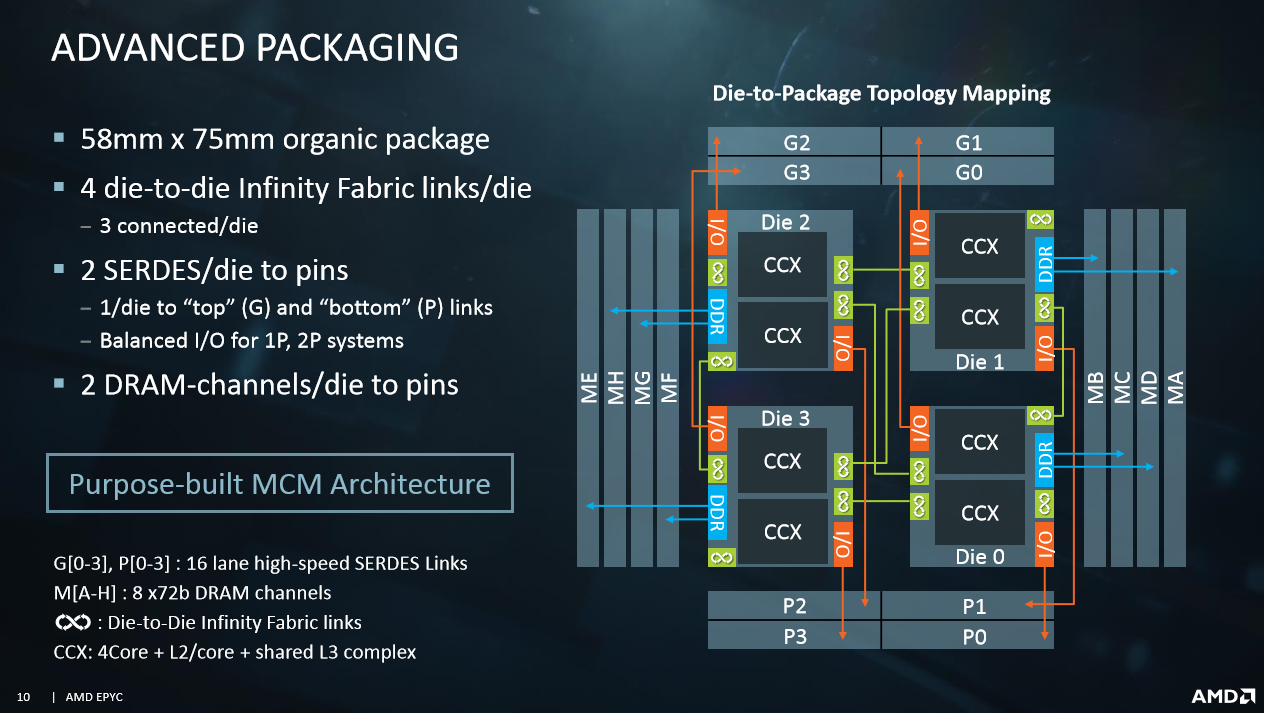

Each die wields four Infinity Fabric links. AMD uses only three links per die to minimize trace length, and thus latency. As you can see, the activated links vary based on the location of the die inside the MCM. Logically, the Threadripper models use only two Infinity Fabric links because they have only two die.
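The three-links-per-die figure is what you would expect if every die in the four-die package connects directly to every other die. The short Python sketch below enumerates that arrangement. The fully connected topology is our assumption based on the description above, and the die labels are arbitrary; the diagram shows which physical link each die actually activates.

```python
from itertools import combinations


def full_mesh(die_count: int):
    """Enumerate die-to-die connections when every pair is linked directly.

    Returns the list of (die_a, die_b) pairs and the number of links each
    die must activate to reach all of its peers in a single hop.
    """
    connections = list(combinations(range(die_count), 2))
    links_per_die = die_count - 1
    return connections, links_per_die


# Assumption: the four-die EPYC package is fully connected.
connections, links_per_die = full_mesh(4)

print(f"Die-to-die connections: {len(connections)}")  # 6
print(f"Active links per die: {links_per_die}")       # 3
for die_a, die_b in connections:
    print(f"  die {die_a} <-> die {die_b}")
```

Under that assumption, every die reaches every other die in one hop, which is consistent with leaving the fourth link on each die unused.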

Each die also has two I/O controllers to maximize bandwidth. One feeds into the "G" blocks at the top of the diagram for communication between processors, while the other feeds into the "P" banks at the bottom for PCIe connections. AMD noted the distributed I/O approach ensures consistent performance scaling in two-socket servers. Threadripper likely has a somewhat different arrangement because it doesn't need to communicate with another processor, which would leave only one I/O controller active per die for the PCIe lanes.

Memory Throughput

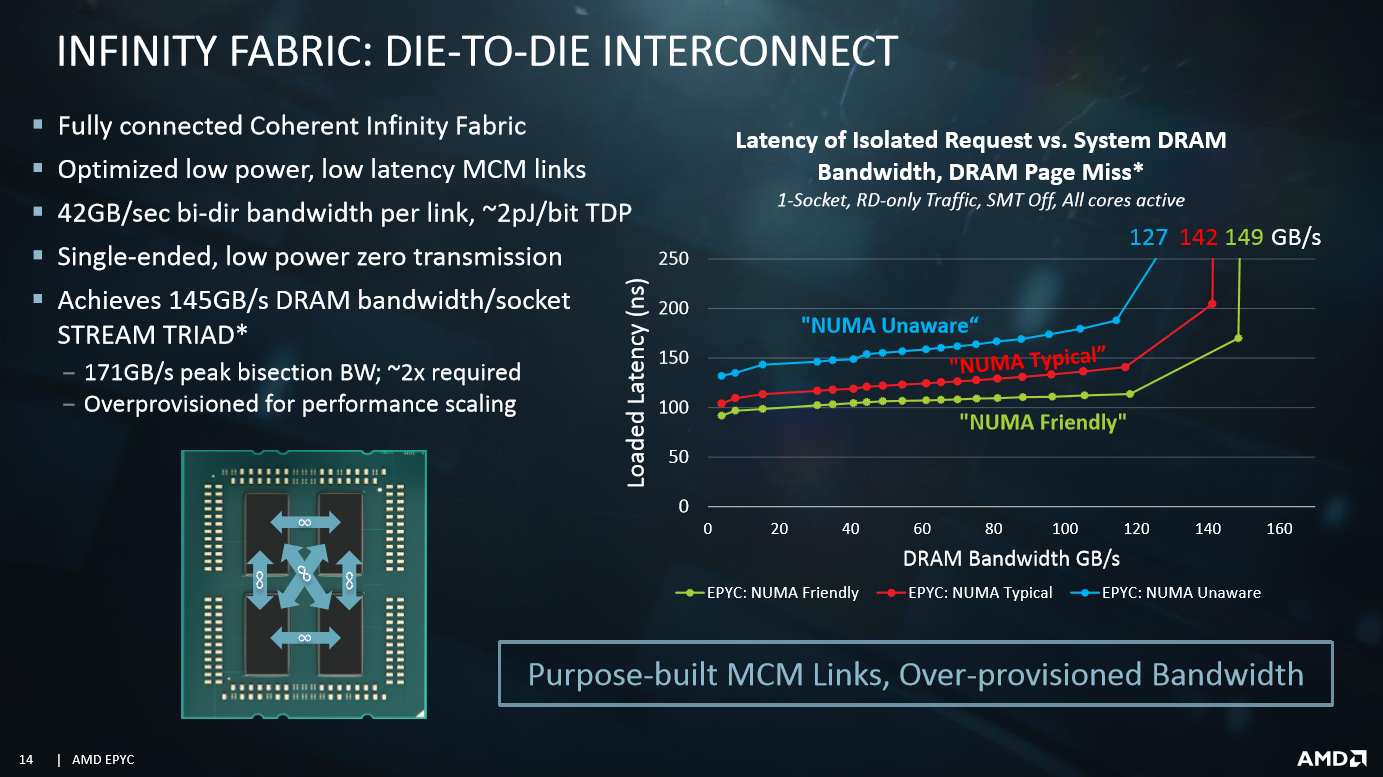

Memory throughput and latency can suffer due to MCM architectures. In fact, that's one of Intel's key arguments in its infamous slide deck. AMD presented DRAM bandwidth tests outlining performance in various configurations. The "NUMA Friendly" bandwidth represents memory accesses to the die's local memory controller, while "NUMA Unaware" measures memory traffic flowing over the Infinity Fabric from a memory controller connected to another die.

Obviously, AMD was aware of the memory throughput challenges associated with an MCM design, so it overprovisioned the memory subsystem to accommodate the complexity. Bandwidth varies by only 15% at full saturation. Notably, throughput scales well with limited variation between the different types of access during lighter workloads.

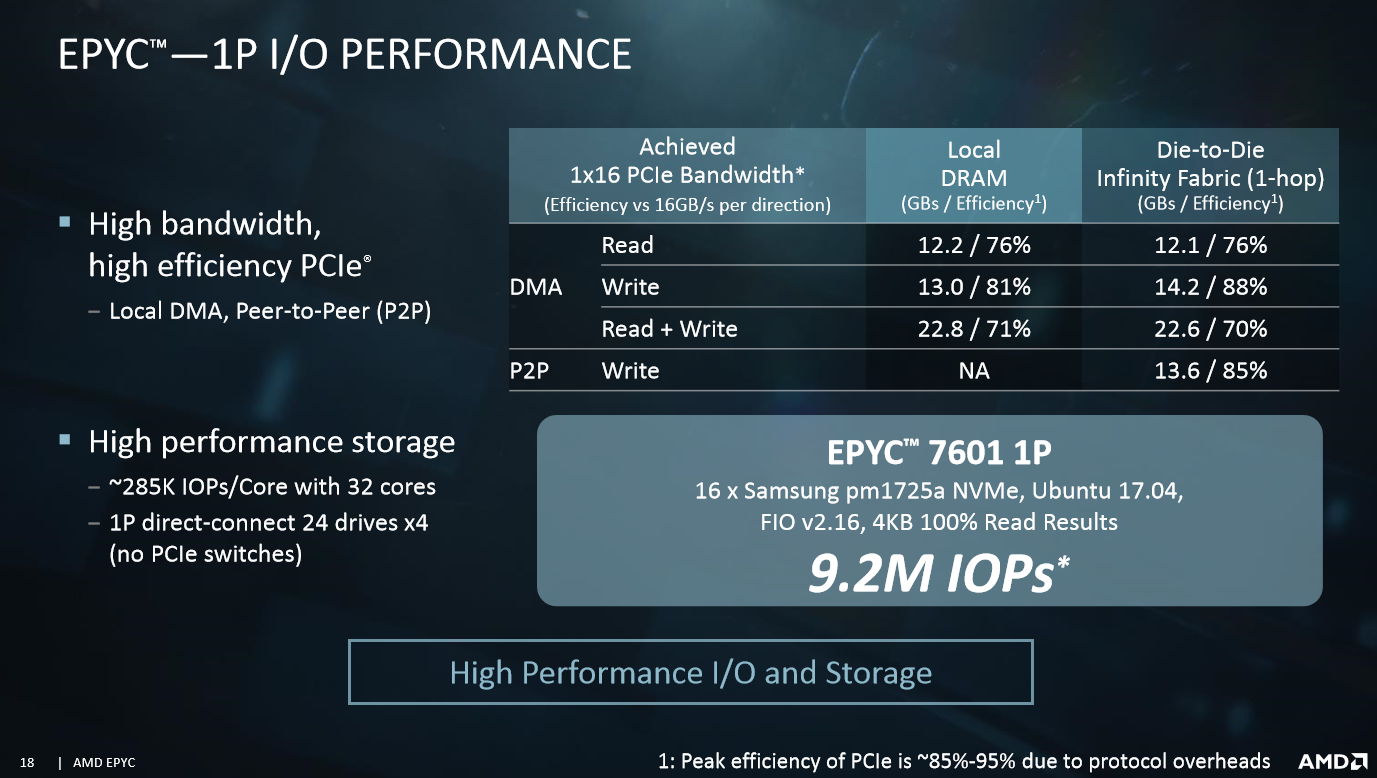

Peer-to-peer (P2P) communication between GPUs is important for AI workloads, which are among the fastest-growing segments in the data center, so performance is critical. AMD's EPYC has an SCH, which is similar to an integrated Northbridge. AMD's switching mechanism inside the processor can reroute device-to-device communication without sending it through the processor's memory subsystem, so it functions very much like a normal switch. This allows EPYC platforms to provide a full 128 PCIe 3.0 lanes without using switches, which reduces cost and complexity. Of course, that doesn't mean much if it doesn't provide the same performance.

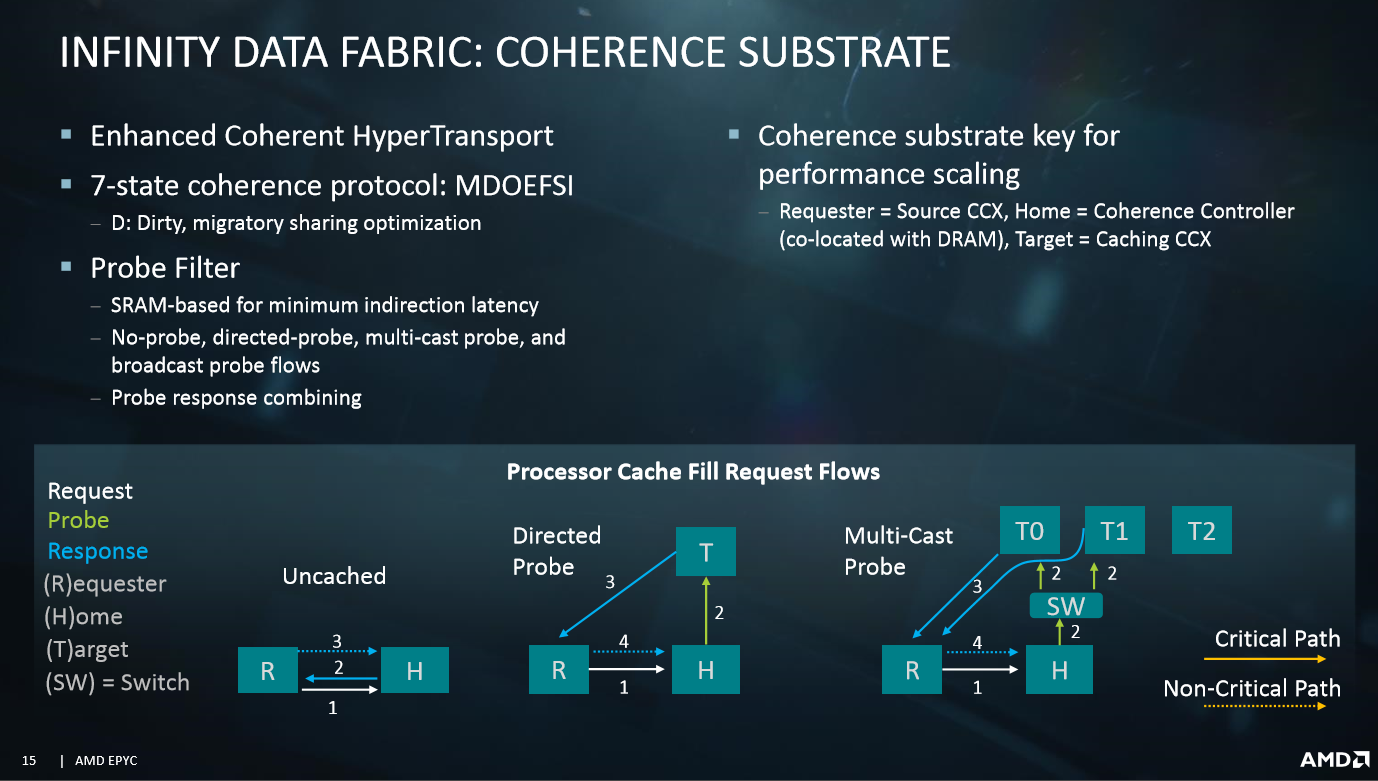

AMD presented performance data from a single socket server that shows EPYC offers solid P2P performance when the data flow traverses the Infinity Fabric. The company also presented DMA performance metrics. The "Local DRAM" column quantifies performance when a GPU does DMA access to the memory controller connected to the same die, while the "die-to-die" column measures performance with a DMA request to another die (across the Infinity Fabric). As you can see, performance is similar and even better in some cases. AMD divulged that the Infinity Fabric, which is an updated version of the coherent HyperTransport protocol, holds the directory tables in a dedicated SRAM buffer and also supports Multi-Cast probe.

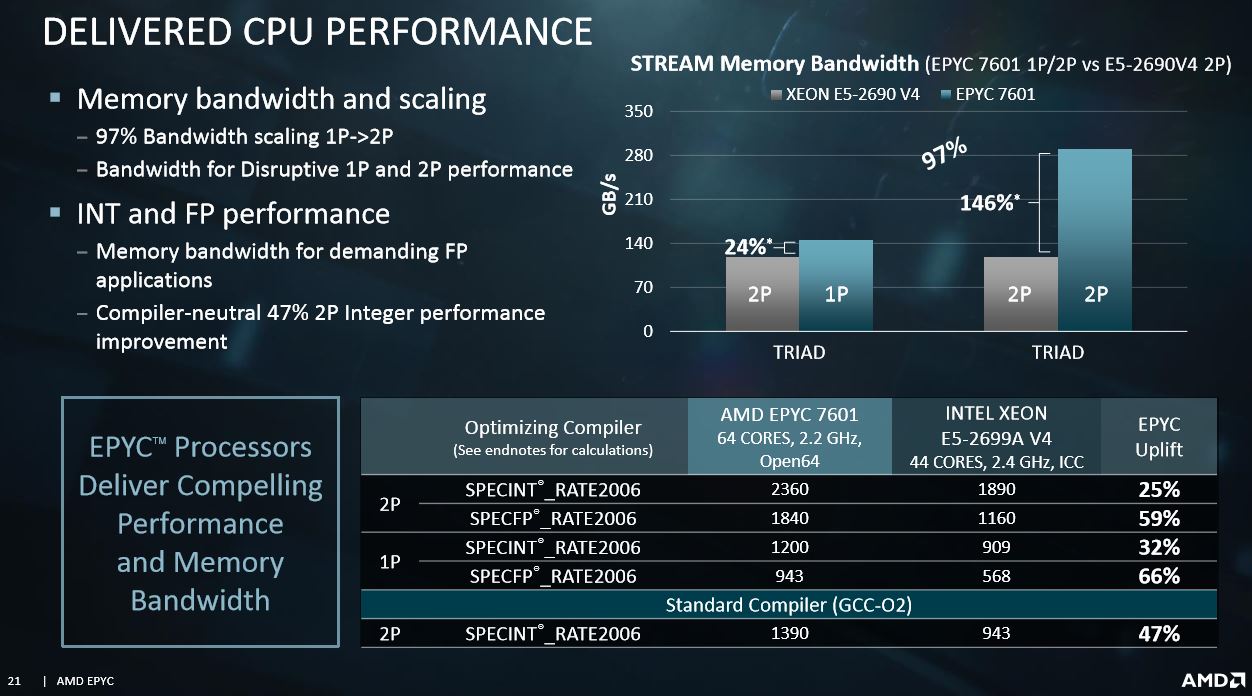

AMD also presented memory throughput and scaling benchmarks that show an impressive lead over Intel's processors, but the company is still comparing to Broadwell-era processors. As we found in our review, Intel's latest data center processors offer a big generational leap in memory throughput. Lepak explained that the company has had difficulties sourcing Intel's Skylake-based Purley processors but is working to provide updated comparisons. (We've included the test notes in a click-to-expand format at the end of the article.)

Final Thoughts

Although AMD has taken the high road and not responded directly to Intel's EPYC slide deck, its Hot Chips presentation seems to dispute a few of Intel's key arguments. First and foremost, AMD designed the die specifically for the data center. Intel's "glue" remark refers to glue logic, which is an industry term for interconnects between die (in this case AMD's Infinity Fabric). In either case, the insinuation that AMD is merely using desktop silicon for the data center certainly has a negative connotation. However, AMD's tactic is innovative and reduces cost. As we stated in our piece covering Intel's deck:

This mitigates the risk that goes into manufacturing complex, monolithic processors, potentially improving yields and keeping costs in check. It also helps the company increase volume at a time when it's going to want plenty of supply. It's a smart strategy for a fabless company that doesn't have Intel's R&D budget to throw around.

Interestingly, Intel's Programmable Solutions Group outlined the company's EMIB (Embedded Multi-die Interconnect Bridge) technology at the show, too. EMIB provides a communication pathway between separate chips to create a unified processing solution, which Intel considers a key technology for its next-gen processors. Although the approaches are different, the motivations behind Intel's EMIB and AMD's Infinity Fabric are similar, which AMD feels validates its approach.

In either case, AMD is humming right along with its EPYC processors, and a wide range of blue chip OEMs and ODMs have platforms coming to market. AMD also announced at the China EPYC technology summit that Tencent will deploy EPYC solutions by the end of the year and JD.com will deploy in the second half of the year. We expect more announcements in the future as the war for data center sockets rages on.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


BulkZerker: Silly Intel. Glue is for holding the chips together, not holding the heatspreader on.

redgarl: Intel should learn that thermal paste should be applied on the CPU heatspreader, not between the chip and the heatspreader, before trying to throw dirt at AMD...

Funny that now, EMIB, is the next big thing if we listen to Intel!

BonScott: 8ch mem vs 6ch for Skylake SP more than makes up for EPYC's higher latency at larger block sizes.

EPYC bests Skylake in performance in most applications, and is cheaper, lower power, offers better security, more features and IO.

Out of all three (Ryzen, Threadripper, EPYC), it's EPYC that outclasses Intel's offering the MOST.

Servers are the cash cow Intel was supposed to protect, but they didn't know Infinity Fabric was this good and this ready, as was AMD's Zen core and its ability to take advantage of MCM.

BonScott, quoting redgarl: "Intel should learn that thermal paste should be applied on the CPU heatspreader, not between the chip and the heatspreader, before trying to throw dirt at AMD...

Funny that now, EMIB, is the next big thing if we listen to Intel!"

They better hurry with that EMIB; they've been resting on node advantage continuing as we bump up against Moore's Law. Intel is banking on 10nm saving them from this AMD ass whipping. They are late to the new Moore's Law (parallel proc, MCM, Heterogeneous Architecture), like they are late to everything else ... except PC decades ago thanks only to IBM

Intel is doomed.


BonScott: Is a commitment for $millions in AMD EPYC CPU sales to Chinese Cloud Providers a good thing? You will see on Monday.

Tencent Cloud senior director Zou Xianneng said Tencent Cloud will launch two-way cloud servers based on the AMD EPYC processor by the end of the year, offering up to 64 processing cores (128 threads) and strong single-machine computing capability, to provide the industry with more diverse cloud products and cloud services.

Baidu president Zhang Yaqin said single-socket servers equipped with the AMD EPYC processor can significantly increase Baidu's data center computing efficiency, reduce total cost of ownership, and reduce energy consumption. Baidu's deployment will be synchronized with the market launch of AMD EPYC.

Wang Zhongping, head of technology for Jingdong's hardware systems, said China's internet and e-commerce companies need more computing cores and higher memory bandwidth. AMD's EPYC data shows up to 32 cores, comparable to the industry's common two-way servers, and the 8-channel memory interface is conducive to achieving higher memory bandwidth; "I believe these will come closer to meeting the demands of domestic internet companies." Jingdong will also work with AMD to optimize the total cost of ownership (TCO) of EPYC server systems, as well as on future technical cooperation in the areas of big data, artificial intelligence, and cloud computing.

Martell1977: How ironic. I recall when the first AMD dual-core CPUs came to market and Intel responded with the Pentium D, which was basically two Pentium 4s glued together. They also did that with the first Core 2 Quads, using C2D chips. But Intel swore back then that there was no performance penalty for doing it that way compared to true dual or quad cores...

JonDol: "Surprisingly, Barclays downgraded AMD's stock shortly thereafter."

Thanks for pointing out the technologically limited people at Barclays, since their lack of vision and understanding results in bad use of their huge power, as it may hurt not only the innovating companies but also their own clients.

Looks like the people with money at Barclays should have a talk with their asset manager.

Rob1C: SemiAccurate had this to say about Xeon Skylake CPUs: https://semiaccurate.com/2017/07/19/skylake-sp-diverged-core/ - accusing Intel of stitching together its CPU.

Then a follow-up post was written: https://semiaccurate.com/2017/07/21/semiaccurate-skylake-sp-die-shots-sizes/ - unfortunately the second article is short, and reading all of it requires a subscription.

PS: Website Guru: When posting a comment there is often a popup that says: "The form is not valid".

Johnpombrio: It all depends on AMD making a profit. It is great that AMD can come up with a way to interpose two CPUs together and that it costs less, but that is not the issue. The real problem is to get companies to buy into AMD's solutions. Yes, Tencent and others are offering AMD solutions to companies willing to buy the products and services, but AMD has been out of the big boys' market in servers since 2012. AMD may have the lowest cost solution, but it does the company no good unless it can make a PROFIT. AMD has lost half a billion dollars in the past 5 years and is still losing money every quarter. When it gets out of this hole, then is the time to celebrate AMD's accomplishments in technology.