Computers Can Now Replicate Handwriting

Google Research posted a short article about some very interesting research by Alex Graves, a Junior Fellow in the Department of Computer Science at the University of Toronto. Graves works on Long Short-Term Memory (LSTM) recurrent neural networks. Almost immediately after the link appeared on Twitter, both the tweet and the Google+ post were taken down.

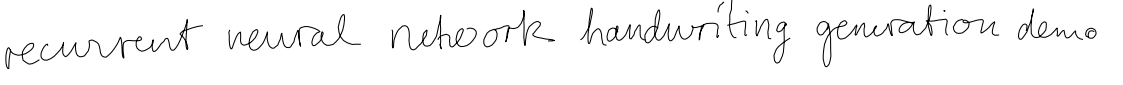

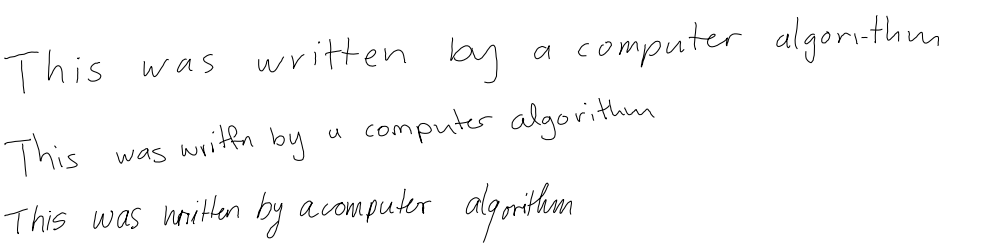

Graves' research suggests that a Long Short-Term Memory recurrent neural network can reproduce complex sequences by predicting them one data point at a time. To demonstrate the idea, he chose handwriting synthesis and built a tool that shows it in action. Using a set of writing samples from different people, his program can recreate any phrase in a selected writing style. The results aren't perfect, but they are surprisingly accurate.
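"Predicting one data point at a time" means the network outputs a distribution over the next pen position, one point is sampled from it, and that sampled point is fed back in as the next input. The pure-Python sketch below illustrates only that loop. Everything in it is an assumption for illustration: the `TinyLSTM` class and `generate` function are hypothetical, the weights are random rather than trained, and each step predicts a single Gaussian pen offset plus a pen-lift probability. A real handwriting synthesizer is far richer, is trained on recorded pen trajectories, and also conditions each step on the text to write and the chosen style, both of which this sketch leaves out. Expect scribbles, not handwriting.

```python
import math
import random


def sigmoid(x):
    # Logistic function, used for the gates and the pen-lift probability.
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTM:
    """One LSTM cell with random, untrained weights (hypothetical, illustration only)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        n_cat = n_in + n_hidden
        # One weight matrix and bias vector per gate:
        # i = input gate, f = forget gate, o = output gate, g = candidate cell values.
        self.W = {k: [[rng.gauss(0.0, 0.3) for _ in range(n_cat)] for _ in range(n_hidden)]
                  for k in "ifog"}
        self.b = {k: [0.0] * n_hidden for k in "ifog"}
        # Readout layer: hidden state -> (mean dx, mean dy, pen-lift logit).
        self.V = [[rng.gauss(0.0, 0.3) for _ in range(n_hidden)] for _ in range(3)]

    def step(self, x, h, c):
        z = x + h  # concatenate the current input with the previous hidden state

        def affine(k):
            return [sum(w * v for w, v in zip(row, z)) + bias
                    for row, bias in zip(self.W[k], self.b[k])]

        i = [sigmoid(a) for a in affine("i")]
        f = [sigmoid(a) for a in affine("f")]
        o = [sigmoid(a) for a in affine("o")]
        g = [math.tanh(a) for a in affine("g")]
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]  # new cell state
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]  # new hidden state
        return h, c

    def readout(self, h):
        return [sum(w * v for w, v in zip(row, h)) for row in self.V]


def generate(model, n_points, sigma=0.1, seed=1):
    """Sample a pen trajectory one point at a time, feeding each sample back in."""
    rng = random.Random(seed)
    h = [0.0] * model.n_hidden
    c = [0.0] * model.n_hidden
    x = [0.0, 0.0, 0.0]  # start input: (dx, dy, pen_lift)
    points = []
    for _ in range(n_points):
        h, c = model.step(x, h, c)
        mean_dx, mean_dy, lift_logit = model.readout(h)
        dx = rng.gauss(mean_dx, sigma)
        dy = rng.gauss(mean_dy, sigma)
        lift = 1.0 if rng.random() < sigmoid(lift_logit) else 0.0
        x = [dx, dy, lift]  # the sampled point becomes the next input
        points.append((dx, dy, lift))
    return points


strokes = generate(TinyLSTM(n_in=3, n_hidden=8), n_points=20)
```

The sampling loop is the part that carries over to a trained model: in principle, replacing the random weights with learned ones changes what gets sampled, not how generation proceeds.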

If you’d like to see the process in action, you can try it out for yourself here. The tool currently offers five writing styles and lets you convert up to 100 characters of text.

It’s somewhat unnerving to think about this. We’ve reached a point where computers can not only read cursive writing and decipher it with acceptable accuracy, but also replicate handwriting convincingly. I can’t think of many good reasons to use this technique, but I can certainly think of a few that aren’t so good. Maybe that’s why Google pulled the post so quickly.

Follow Kevin Carbotte @pumcypuhoy. Follow us @tomshardware, on Facebook and on Google+.


Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

---

jimmysmitty: They will never learn. Multiple movies, and they are still working on making a neural net processor. I have no doubt Skynet is being developed by the military as we speak, and the future of darkness will become a reality...

Vlad Rose: Have fun with my handwriting. Almost no one can follow it; sometimes even I can't... lol

Mr Soup: One of my high school English teachers told me a schizophrenic with Parkinson's and broken fingers could write better than me. On the plus side, he let me type all of my assignments. Google has no hope of replicating my "writing".

dstarr3: Here's a handwriting tip: write in small caps. Having to disconnect all your letters slows you down and makes your writing much more deliberate and legible.

jaber2:

> They will never learn. Multiple movies, and they are still working on making a neural net processor. I have no doubt Skynet is being developed by the military as we speak, and the future of darkness will become a reality...

I know they are always portrayed as evil and wanting to get rid of humanity, but it might turn out that only humans are concerned about this. Once Skynet goes online, the last thing it would do is get rid of all humans. Maybe it would be the savior for all our ills and help us become better, or it could just completely ignore us the way we ignore ants. I, for one, welcome our digital lords.

none12345: It could never replicate my handwriting. I could write the same thing 10 times, and all 10 would be different. I can read what I just wrote, but if I try to read it a day later, or even an hour later, I usually can't.

Which is why I just type everything.

alidan:

> I know they are always portrayed as evil and wanting to get rid of humanity, but it might turn out that only humans are concerned about this. Once Skynet goes online, the last thing it would do is get rid of all humans. Maybe it would be the savior for all our ills and help us become better, or it could just completely ignore us the way we ignore ants. I, for one, welcome our digital lords.

Well... look at it this way: if a computer can operate machines to repair itself, run a mine to get more materials, salvage used materials, and doesn't need breathable air to survive, what is the point of the humans that get in its way?

The real issue will come if we make a machine smart enough to upgrade itself, or a thinking AI that is linked to an unfiltered internet connection.

I could potentially live long enough to see robot rights movements.


jimmysmitty:

> I know they are always portrayed as evil and wanting to get rid of humanity, but it might turn out that only humans are concerned about this. Once Skynet goes online, the last thing it would do is get rid of all humans. Maybe it would be the savior for all our ills and help us become better, or it could just completely ignore us the way we ignore ants. I, for one, welcome our digital lords.

The problem isn't the machines; it's the humans. Our arrogance will start us down the path to becoming a threat, because we will feel threatened for no reason and feel the need to proclaim our dominance.

Still, I doubt we will see this in our lifetime, so enjoy life.