Google Launches AI Supercomputer Powered by Nvidia H100 GPUs

Google's A3 supercomputer delivers up to 26 exaFlops of AI performance

Google kicked off Google I/O this afternoon by spending more than an hour on its numerous advances in artificial intelligence. The company discussed its new PaLM 2 large language model (LLM) for generative AI, which powers the Bard chatbot tool. PaLM 2 is a foundational pillar for adding AI-infused features across Google's product portfolio, including Google Maps, Google Photos, and Gmail (among others).

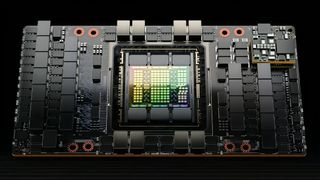

All of this requires serious horsepower in the cloud to serve these models in the wild, as millions (and eventually billions) of users send requests for tasks ranging from the mundane, like removing a person lingering in the background of a picture, to composing an entire email from a short text prompt. That's where Google's new A3 GPU supercomputer comes into focus. Google says the new A3 supercomputers are "purpose-built to train and serve the most demanding AI models that power today's generative AI and large language model innovation" while delivering 26 exaFlops of AI performance.

Each A3 supercomputer is packed with 4th-generation Intel Xeon Scalable processors backed by 2TB of DDR5-4800 memory. But the real "brains" of the operation are the eight Nvidia H100 "Hopper" GPUs, which have access to 3.6 TBps of bisection bandwidth by leveraging NVLink 4.0 and NVSwitch.

According to Google, A3 represents the first production-level deployment of its GPU-to-GPU data interface, which allows for sharing data at 200 Gbps while bypassing the host CPU. This interface, which Google calls the Infrastructure Processing Unit (IPU), results in a 10x uplift in available network bandwidth for A3 virtual machines (VMs) compared to A2 VMs.

"Google Cloud's A3 VMs, powered by next-generation NVIDIA H100 GPUs, will accelerate training and serving of generative AI applications," said Ian Buck, VP for hyperscale and high-performance computing at NVIDIA. "On the heels of Google Cloud's recently launched G2 instances, we're proud to continue our work with Google Cloud to help transform enterprises around the world with purpose-built AI infrastructure."

If your business wants to leverage A3 virtual machines, the only way to gain access is by filling out Google's A3 Preview Interest Form to join the Early Access Program. But as Google clearly states, plugging in your information doesn't guarantee a spot in the program.


Brandon Hill is a senior editor at Tom's Hardware. He has written about PC and Mac tech since the late 1990s with bylines at AnandTech, DailyTech, and Hot Hardware. When he is not consuming copious amounts of tech news, he can be found enjoying the NC mountains or the beach with his wife and two sons.

-

GoldyChangus

gg83 said: Here we go. The most advanced text message answering service on the planet! I can't wait

Damn, you aren't easily impressed; you must be pretty cool.

Ravestein NL

Admin said: Google's A3 GPU supercomputer is the company's latest platform for powering LLMs.

Google Launches AI Supercomputer Powered by Nvidia H100 GPUs

I have my reservations about all the AIs growing in the wild.

Who is checking what data the AI has access to?

Who is checking whether the answers the AI gives to certain questions are factually correct?

In other words, I'm afraid this tech can easily be used to feed people only the data that's "allowed," while true facts stay hidden.

There is always someone pushing the buttons, and can that someone be trusted?

Everybody knows that self-regulation in big tech companies doesn't exist!

gg83

GoldyTwatus said: Damn, you aren't easily impressed; you must be pretty cool.

Huh? I'm confused by this reply.

Strider379

I call BS. Frontier is now the fastest computer in the world and just hit 1.7 exaflops. Nvidia says that this computer is 15 times faster?

samopa

Strider379 said: I call BS. Frontier is now the fastest computer in the world and just hit 1.7 exaflops. Nvidia says that this computer is 15 times faster?

They say that A3 is "delivering 26 exaFlops of AI performance", so most likely these are not usual (normal) floating-point operations.

Normal floating-point operations usually take 80-bit data (or more) per operation, but AI does not need such precision, so it (usually) uses TF32 or even FP8 data formats. So, assuming they used FP8 to produce this result, it needs only 1/10 the performance (hence 10 times faster) of normal floating-point operations.

They also do not disclose how many A3s were used to produce this result.

bit_user

@Strider379,

samopa said: They say that A3 is "delivering 26 exaFlops of AI performance", so most likely these are not usual (normal) floating-point operations.

Normal floating-point operations usually take 80-bit data (or more) per operation, but AI does not need such precision, so it (usually) uses TF32 or even FP8 data formats. So, assuming they used FP8 to produce this result, it needs only 1/10 the performance (hence 10 times faster) of normal floating-point operations.

The standard format for HPC is fp64. I think 80-bit is kind of a weird x87 thing.

A quick search for the H100 specs shows a single chip delivers "up to 34 TFLOPS" @ fp64. Using fp64 tensors, you can achieve 67 TFLOPS. To reach the EFLOPS range, you have to drop all the way to fp8 tensors, at which point it delivers 3958 TFLOPS.

https://www.nvidia.com/en-us/data-center/h100/

samopa said: They also do not disclose how many A3s were used to produce this result.

Actually, they do. They said it incorporates an undisclosed number of 4th Gen (Sapphire Rapids) Xeons (I'd guess 2x) and 8x H100 accelerators. That actually puts it rather below the theoretical peak throughput of 31.7 EFLOPS, although I'd guess the peak presumes boost clocks and what Google is actually reporting is the sustained performance.
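To put the per-chip H100 figures quoted above in perspective, here is a quick sketch of the ratios they imply. It uses only the three numbers cited from Nvidia's page (34, 67, and 3958 TFLOPS); the dictionary keys and the `speedup` helper are illustrative names, not anything published by Nvidia or Google.

```python
# Per-GPU H100 peak throughput figures quoted in the thread, in TFLOPS.
H100_TFLOPS = {
    "fp64": 34,          # plain fp64
    "fp64_tensor": 67,   # fp64 on tensor cores
    "fp8_tensor": 3958,  # fp8 on tensor cores
}


def speedup(fast: str, slow: str) -> float:
    """Ratio of peak throughput between two formats on a single H100."""
    return H100_TFLOPS[fast] / H100_TFLOPS[slow]


if __name__ == "__main__":
    for baseline in ("fp64", "fp64_tensor"):
        print(f"fp8_tensor vs {baseline}: {speedup('fp8_tensor', baseline):.1f}x")
```

On those figures, fp8 tensor throughput is roughly 59x the fp64 tensor rate and about 116x the plain fp64 rate, well beyond the 10x guessed earlier in the thread.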

samopa

bit_user said: Actually, they do. They said it incorporates an undisclosed number of 4th Gen (Sapphire Rapids) Xeons (I'd guess 2x) and 8x H100 accelerators. That actually puts it rather below the theoretical peak throughput of 31.7 EFLOPS, although I'd guess the peak presumes boost clocks and what Google is actually reporting is the sustained performance.

They state the number of H100s used per A3, but they do not say how many A3s they use ;)
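Since the thread stops at per-VM figures, a back-of-the-envelope sketch can estimate the scale implied by Google's headline number. It assumes, which Google does not confirm, that the 26 exaFlops figure is H100 FP8 tensor throughput at theoretical peak; the constant and function names are hypothetical. Note that eight H100s at 3958 TFLOPS each works out to about 31.7 PFLOPS per A3 VM, not EFLOPS.

```python
import math

# Inputs taken from the article and the thread; the 26 EFLOPS figure is
# ASSUMED here to be FP8 tensor throughput at peak (Google does not say).
H100_FP8_TENSOR_TFLOPS = 3958  # per-GPU figure quoted from Nvidia's page
GPUS_PER_A3 = 8                # eight H100s per A3, per the article
CLAIMED_AI_EFLOPS = 26         # Google's headline figure
FRONTIER_EFLOPS = 1.7          # figure cited by Strider379 (an FP64 number)

TFLOPS_PER_EFLOPS = 1_000_000  # 1 EFLOPS = 10^6 TFLOPS


def a3_peak_tflops() -> int:
    """Theoretical FP8 tensor peak of one A3 VM, in TFLOPS."""
    return GPUS_PER_A3 * H100_FP8_TENSOR_TFLOPS


def vms_needed(target_eflops: float) -> int:
    """Smallest whole number of A3 VMs whose combined peak reaches the target."""
    return math.ceil(target_eflops * TFLOPS_PER_EFLOPS / a3_peak_tflops())


if __name__ == "__main__":
    print(f"One A3 at FP8 peak: {a3_peak_tflops() / 1000:.1f} PFLOPS")
    print(f"A3 VMs for {CLAIMED_AI_EFLOPS} EFLOPS at peak: {vms_needed(CLAIMED_AI_EFLOPS)}")
    print(f"Headline ratio vs Frontier: {CLAIMED_AI_EFLOPS / FRONTIER_EFLOPS:.1f}x")
```

Under that assumption, 26 exaFlops would take on the order of 822 A3 VMs (about 6,600 H100s) running at peak, and more if Google is quoting sustained rather than peak throughput. The roughly 15x ratio against Frontier is also apples to oranges, since Frontier's figure is measured in FP64.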
