Nvidia: H100 AI Performance Improves by Up to 54 Percent With Software Optimizations

Nvidia's H100 remains the inference performance king and keeps getting faster.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Nvidia just published new performance numbers for its H100 compute GPU in MLPerf 3.0, the latest version of a prominent benchmark for deep learning workloads. The Hopper H100 processor not only surpasses its predecessor, the A100, in inference measurements, but it also keeps gaining performance thanks to software optimizations. Nvidia also revealed early performance comparisons of its compact L4 compute GPU against its predecessor, the T4.

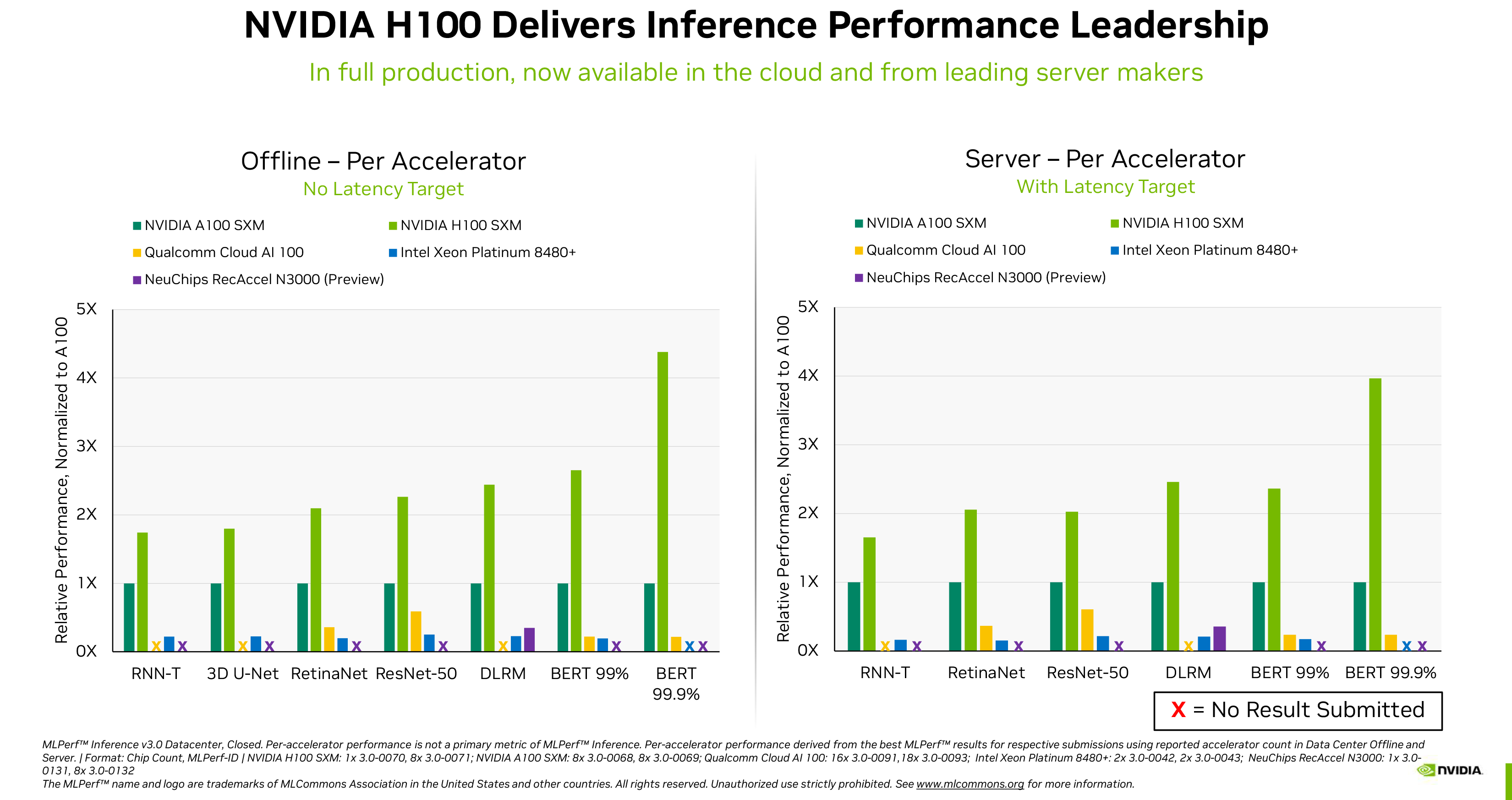

Nvidia first published H100 results from the MLPerf 2.1 benchmark back in September 2022, revealing that its flagship compute GPU can beat its predecessor, the A100, by up to 4.3–4.4 times in various inference workloads. The newly released MLPerf 3.0 numbers not only confirm that Nvidia's H100 is faster than its A100 (no surprise), but also reaffirm that it is tangibly faster than Intel's recently released Xeon Platinum 8480+ (Sapphire Rapids) processor, as well as NeuChips' ReccAccel N3000 and Qualcomm's Cloud AI 100 solutions, in a host of workloads.

These workloads include image classification (ResNet 50 v1.5), natural language processing (BERT Large), speech recognition (RNN-T), medical imaging (3D U-Net), object detection (RetinaNet), and recommendation (DLRM). Nvidia makes the point that not only are its GPUs faster, but they also have better support across the ML industry; some of the workloads failed on competing solutions.

There is a catch with the numbers published by Nvidia, though. Vendors can submit their MLPerf results in two categories: closed and open. In the closed category, all vendors must run mathematically equivalent neural networks, whereas in the open category they can modify the networks to optimize performance for their hardware. Nvidia's numbers only reflect the closed category, so any network-level optimizations that Intel or other vendors might introduce for their own hardware are not reflected in these results.

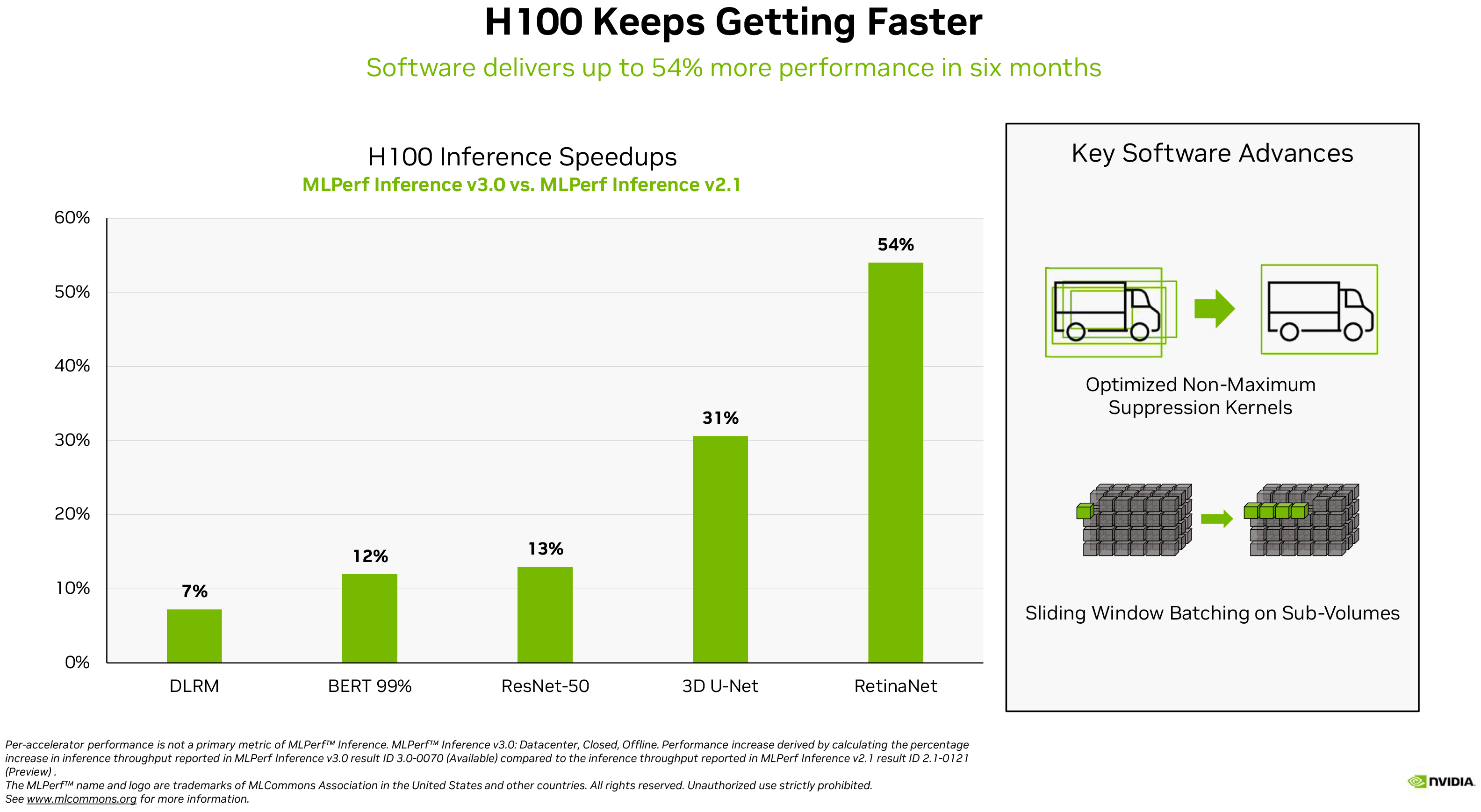

Software optimizations can bring huge benefits to modern AI hardware, as Nvidia's own example shows. The company's H100 gained anywhere from 7% in recommendation workloads to 54% in object detection workloads in MLPerf 3.0 compared to MLPerf 2.1, which is a sizeable performance uplift.
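For a sense of scale, a throughput gain of g percent means the newer result is 1 + g/100 times the older one, so a fixed amount of work finishes in 1/(1 + g/100) of the time. The short Python sketch below applies that arithmetic to the two endpoints Nvidia quoted. It assumes the percentages describe throughput gains, and the helper names are purely illustrative.

```python
def uplift_to_speedup(uplift_percent: float) -> float:
    """Convert a percentage throughput gain into a speedup factor."""
    return 1.0 + uplift_percent / 100.0


def time_saved_percent(uplift_percent: float) -> float:
    """Percent of run time saved for a fixed amount of work.

    If throughput rises by a factor s, the same work takes 1/s as long.
    """
    return (1.0 - 1.0 / uplift_to_speedup(uplift_percent)) * 100.0


# Endpoints of the range quoted for H100 (MLPerf 3.0 vs. MLPerf 2.1),
# assuming both figures are throughput gains.
for label, gain in [("recommendation", 7.0), ("object detection", 54.0)]:
    print(f"{label}: {uplift_to_speedup(gain):.2f}x throughput, "
          f"{time_saved_percent(gain):.1f}% less time for the same work")
```

By this arithmetic, the 54% object detection gain works out to roughly 35% less time for a fixed workload, while the 7% recommendation gain saves about 6.5%.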

Referencing the explosion of ChatGPT and similar services, Dave Salvator, Director of AI, Benchmarking and Cloud, at Nvidia, writes in a blog post: "At this iPhone moment of AI, performance on inference is vital... Deep learning is now being deployed nearly everywhere, driving an insatiable need for inference performance from factory floors to online recommendation systems."

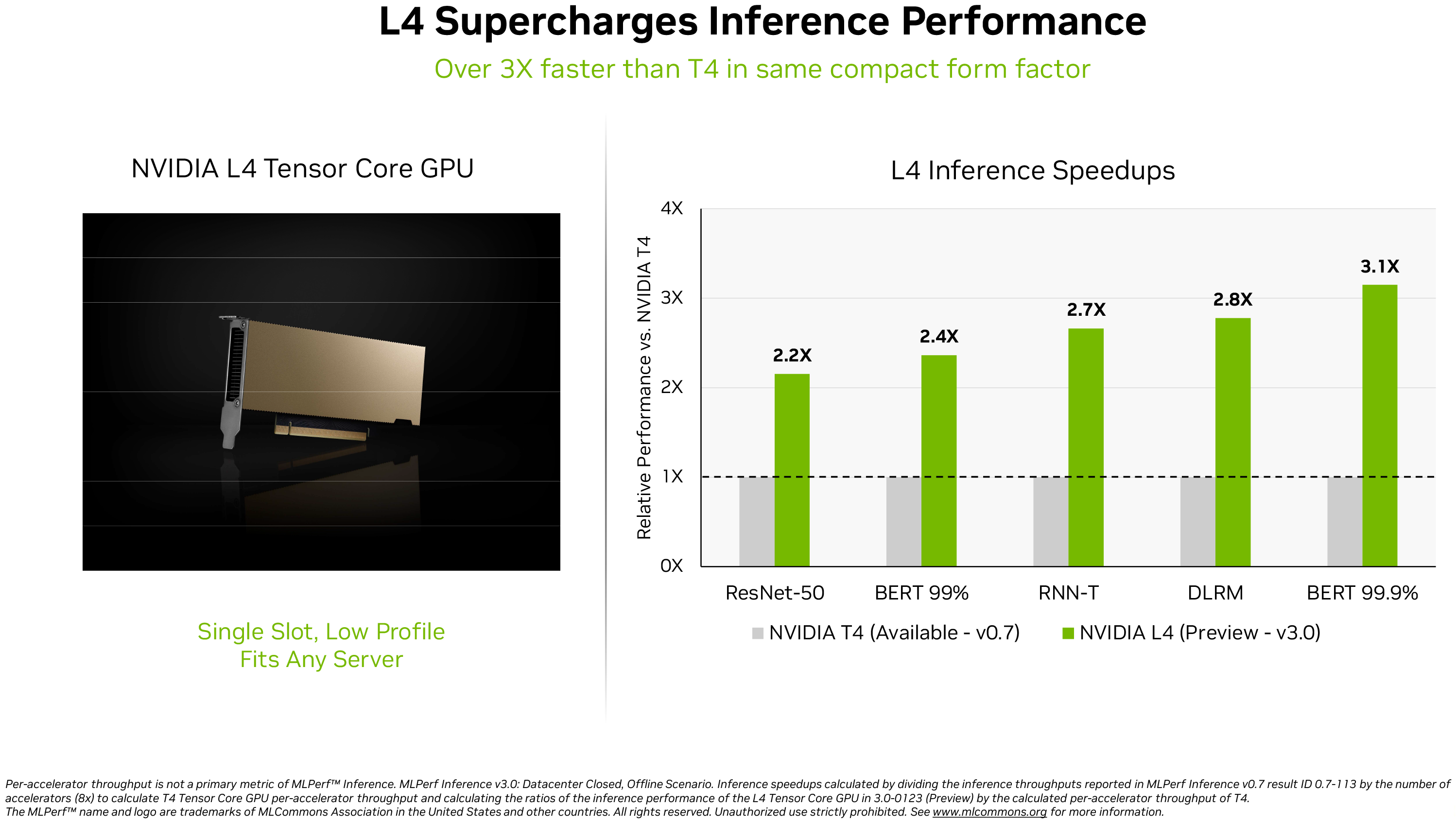

In addition to reaffirming that its H100 is the inference performance king in MLPerf 3.0, the company also gave a sneak peek at the performance of its recently released AD104-based L4 compute GPU. This Ada Lovelace-powered compute GPU comes in a single-slot, low-profile form factor to fit into virtually any server, yet it delivers quite formidable performance: up to 30.3 FP32 TFLOPS for general compute and up to 485 FP8 TFLOPS (with sparsity).
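Nvidia's "with sparsity" Tensor Core figures assume 2:4 structured sparsity, in which at most two of every four consecutive weights are non-zero; the hardware skips the zeros, which doubles peak throughput on paper. That puts the L4's dense FP8 peak at about half of 485, or roughly 242 TFLOPS. The Python sketch below illustrates the pattern using simple magnitude pruning. This is one common way to reach 2:4 sparsity, not necessarily the tooling Nvidia uses, and the function names and sample weights are hypothetical.

```python
from typing import List


def prune_2_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in every group of four.

    The result follows the 2:4 structured-sparsity pattern that
    "with sparsity" Tensor Core figures assume.
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Keep the indices of the two largest magnitudes in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(group[j] if j in keep else 0.0 for j in range(4))
    return pruned


def is_2_4_sparse(weights: List[float]) -> bool:
    """True if every group of four has at most two non-zero values."""
    return all(
        sum(1 for w in weights[i:i + 4] if w != 0.0) <= 2
        for i in range(0, len(weights), 4)
    )


# Peak figure quoted for L4; the sparse number assumes 2:4 sparsity,
# so the dense-equivalent FP8 peak is half of it.
FP8_SPARSE_TFLOPS = 485.0
FP8_DENSE_TFLOPS = FP8_SPARSE_TFLOPS / 2

w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]  # hypothetical weights
print(prune_2_4(w))
print(is_2_4_sparse(prune_2_4(w)), FP8_DENSE_TFLOPS)
```

The sparse figure is only reachable when a model's weights actually follow this pattern, which is why dense numbers are the safer basis for comparison.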

Nvidia only compared its L4 to one of its other compact datacenter GPUs, the T4. The latter is based on the TU104 GPU featuring the Turing architecture from 2018, so it's hardly surprising that the new GPU is 2.2–3.1 times faster than its predecessor in MLPerf 3.0, depending on the workload.

"In addition to stellar AI performance, L4 GPUs deliver up to 10x faster image decode, up to 3.2x faster video processing, and over 4x faster graphics and real-time rendering performance," Salvator wrote.

Without doubt, the benchmark results of Nvidia's H100 and L4 compute GPUs — which are already offered by major systems makers and cloud service providers — look impressive. Still, keep in mind that we are dealing with benchmark numbers published by Nvidia itself rather than independent tests.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

This doesn't come as much of a surprise, though, since to achieve this level of performance on H100, NVIDIA is using FP8 precision through the Transformer Engine embedded in the Hopper architecture. Ampere lacks this.

It works on a per-layer basis: it analyzes the data sent through each layer and determines whether that layer can run in FP8 without compromising accuracy. If the data can be run in FP8, it uses FP8; if not, the Transformer Engine falls back to FP16 math with FP32 accumulation.

Since the Ampere lineup has no Transformer Engine, the A100 ran in FP16 with FP32 accumulation rather than FP8.
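The per-layer decision described in the comment above can be illustrated with a toy model. The Python sketch below scales each layer's values so the largest magnitude maps to 448 (the largest finite value in the FP8 E4M3 format), rounds them to E4M3, measures the mean relative error, and picks FP8 only when that error stays under a threshold; otherwise it falls back to FP16. This is not the Transformer Engine's actual algorithm or API: the function names, the error metric, and the 5% threshold are all hypothetical assumptions.

```python
import math
from typing import Dict, List, Tuple

# FP8 E4M3 parameters: 4 exponent bits, 3 mantissa bits.
E4M3_MAX = 448.0      # largest finite E4M3 magnitude
E4M3_MIN_EXP = -6     # smallest normal exponent; subnormals reuse its step
E4M3_MANT_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    _, e = math.frexp(x)                  # x = m * 2**e, 0.5 <= |m| < 1
    exp = max(e - 1, E4M3_MIN_EXP)        # exponent of the leading bit
    step = 2.0 ** (exp - E4M3_MANT_BITS)  # spacing of E4M3 values near x
    q = round(x / step) * step            # Python rounds half to even
    return max(-E4M3_MAX, min(E4M3_MAX, q))


def fp8_mean_relative_error(values: List[float]) -> float:
    """Scale so the largest magnitude maps to 448, round, measure error."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return 0.0
    scale = E4M3_MAX / amax               # hypothetical per-tensor scale
    errors = [abs(v - round_to_e4m3(v * scale) / scale) / abs(v)
              for v in values if v != 0.0]
    return sum(errors) / len(errors)


def choose_precision(layers: List[Tuple[str, List[float]]],
                     tol: float = 0.05) -> Dict[str, str]:
    """Hypothetical policy: FP8 when the error is within tol, else FP16."""
    return {name: "fp8" if fp8_mean_relative_error(vals) <= tol else "fp16"
            for name, vals in layers}


layers = [
    ("narrow_range", [1.0, -0.5, 0.25, 0.75]),
    ("with_outlier", [1000.0, 0.001, -0.002, 0.003]),
]
print(choose_precision(layers))
```

A layer whose values sit in a narrow range survives FP8 rounding with small error, while a layer with one large outlier forces a scale that flushes its small values to zero, which is the kind of case where a 16-bit fallback helps.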

Also, while on the topic of MLPerf, it is worth mentioning that Deci has announced record-breaking inference speed on NVIDIA GPUs in MLPerf. This chart shows the throughput per TFLOPS achieved by Deci and other submitters in the same category.

It appears that with Deci, teams can replace 8 NVIDIA A100 cards with just one NVIDIA H100 card, while getting higher throughput and better accuracy (+0.47 F1).

https://cdn.wccftech.com/wp-content/uploads/2023/04/image002-1.png

-

BTW, it appears that NVIDIA has also showcased a performance boost on the Jetson AGX Orin SoC/platform. I presume NVIDIA used the JetPack SDK 5.1 to achieve this. In raw performance, the Orin SoC shows an 81% boost, while power efficiency improves by up to 63%, which is dramatic.

NVIDIA seems to be more committed to GPU and silicon longevity in the server space than AMD, no? I'm just curious about the future of the Orin SoC.

https://cdn.wccftech.com/wp-content/uploads/2023/04/NVIDIA-MLPerf-Hopper-H100-L4-Ada-GPUs-Performance-Benchmarks-_7.png