NASA's old supercomputers are causing mission delays — one has 18,000 CPUs but only 48 GPUs, highlighting dire need for update

Mismanaged, too, an internal report finds.

NASA works with some of the world's most advanced technologies and has made some of the most significant discoveries in human history. However, according to a special report by the NASA Office of Inspector General, spotted by The Register, NASA's supercomputing capabilities are insufficient for its tasks, and the shortfall is delaying missions. NASA's supercomputers still rely primarily on CPUs: the systems at its flagship supercomputing facility combine more than 18,000 CPUs with only 48 GPUs.

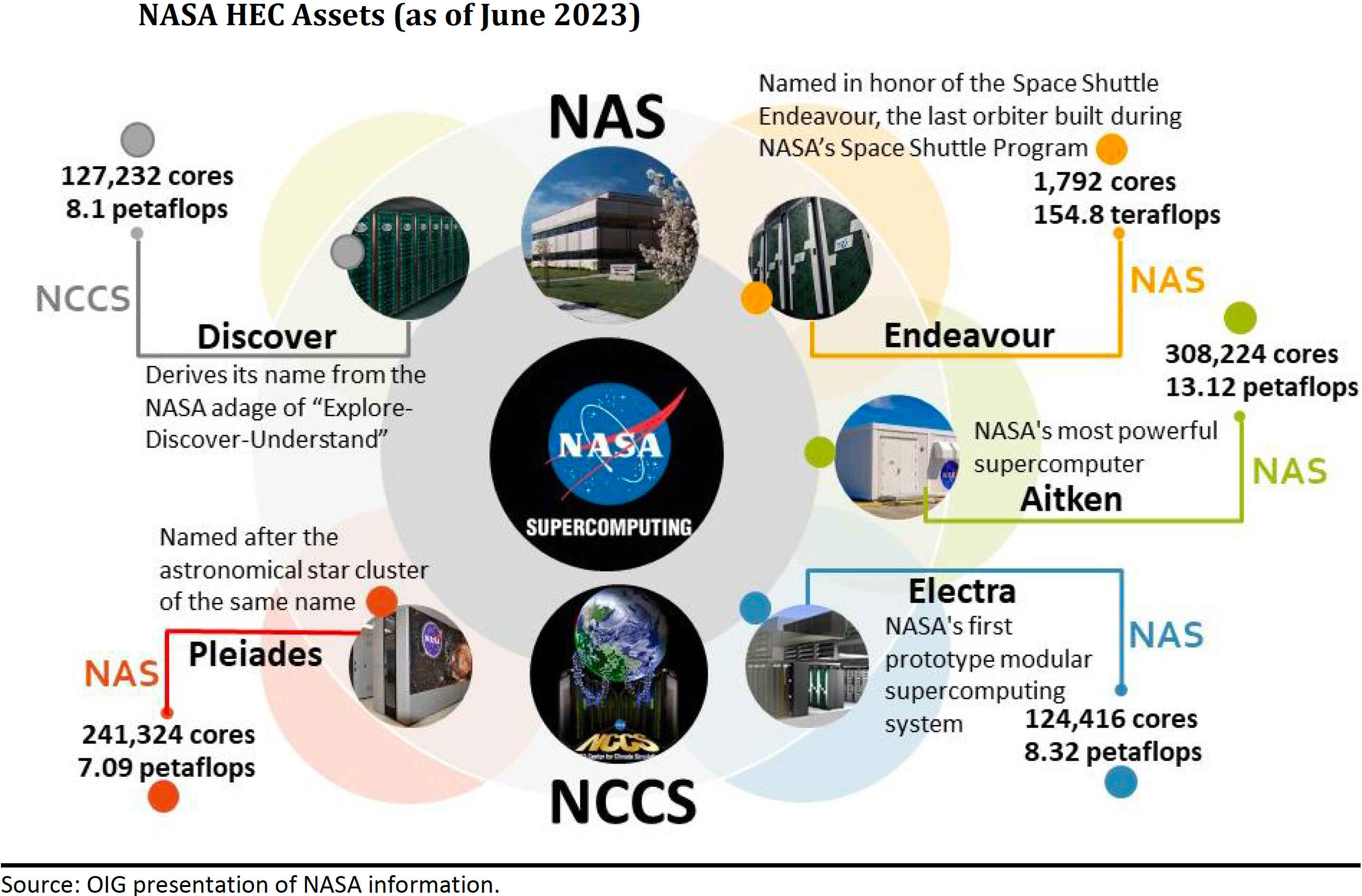

NASA currently has five central high-end computing (HEC) assets, located at the NASA Advanced Supercomputing (NAS) facility at the Ames Research Center in Moffett Field, California, and at the NASA Center for Climate Simulation (NCCS) at the Goddard Space Flight Center in Greenbelt, Maryland. They are Aitken (13.12 PFLOPS, designed to support the Artemis program, which aims to return humans to the Moon and establish a sustainable presence there), Electra (8.32 PFLOPS), Discover (8.1 PFLOPS, used for climate and weather modeling), Pleiades (7.09 PFLOPS, used for climate simulations, astrophysical studies, and aerospace modeling), and Endeavour (154.8 TFLOPS).

These machines rely almost exclusively on old CPU cores. For example, the NAS supercomputers together use more than 18,000 CPUs and only 48 GPUs, and the NCCS uses even fewer GPUs.

"HEC officials raised multiple concerns regarding this observation, stating that the inability to modernize NASA's systems can be attributed to various factors such as supply chain concerns, modern computing language (coding) requirements, and the scarcity of qualified personnel needed to implement the new technologies," the report says. "Ultimately, this inability to modernize its current HEC infrastructure will directly impact the Agency's ability to meet its exploration, scientific, and research goals."

The audit by NASA's Office of Inspector General also revealed that the agency's HEC operations are not centrally managed. This causes inefficiencies and leaves NASA without a cohesive strategy for choosing between on-premises and cloud computing resources. The resulting uncertainty has made teams hesitant to use cloud resources because of unknown scheduling practices or assumed higher costs. Some missions have resorted to acquiring their own infrastructure to avoid waiting for access to the primary supercomputing resources, which are overwhelmed largely because they do not use the latest HPC technologies.

Additionally, the audit found that security controls for the HEC infrastructure are often bypassed or not implemented, increasing the risk of cyberattacks.

The report suggests that transitioning to GPUs and modernizing code are essential to meeting NASA's current and future needs. GPUs offer far higher throughput than CPUs on highly parallel workloads, which are very common in scientific simulation and modeling.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.


George³

I'm sure if they install zettaflops of supercomputers at NASA, the result will be the same.

Co BIY

The problem of a lack of talent in high-tech jobs is typical in all levels of government.

Even mediocre tech talent can get great salaries in the private sector, making a government job unappealing.

Add in typical .gov bureaucracy, federal purchasing rules, a rapidly changing field, and likely political lobbying, and it's amazing anything is accomplished.

I'd like an article on SpaceX's supercomputer infrastructure. It would make an interesting comparison.

bit_user

Co BIY said: "The problem of a lack of talent in high-tech jobs is typical in all levels of government. Even mediocre tech talent can get great salaries in the private sector, making a government job unappealing."

Not only that, but surely the looming threat (and sometimes practical reality) of government shutdowns every couple of years can't help.

Co BIY said: "I'd like an article on SpaceX's supercomputer infrastructure. It would make an interesting comparison."

Yeah, but theirs is surely ROI-based, whereas NASA's is probably limited by what it has budget for when the need arises. NASA is not ROI-based, nor does it have time-to-market constraints. Its missions are much more limited in funding than in time. So, if the computing portion takes longer because you have to run it on older infrastructure, that's easier to live with than scaling back mission objectives so you can buy newer computing hardware.

IMO, they should just negotiate big contracts with cloud computing providers and decommission anything that's significantly less cost-effective to operate than those contracts. They should also keep a transition plan in their back pocket, so they could revert to their own infrastructure if cloud computing costs ever got out of hand.

bit_user

IDNeon said: "I'm not impressed with the quality of this article. FPGAs are better matrix math accelerators than GPUs."

For one thing, you have to keep in mind that the people programming them are typically experts in some other domain. So, the programming model they're using is probably OpenMP or another standard HPC framework.

Also, the benefits of FPGAs are mainly in signal processing and limited-precision arithmetic, whereas most HPC code uses 64-bit floating point. Show me an FPGA that can even come close to competing on FP64 with an H100 or MI300.

IDNeon said: "NASA might build a better supercomputer with Intel CPUs and FPGAs."

Oops, didn't you hear? Intel is spinning off Altera.

IDNeon said: "But then that wouldn't make any richer now would it?"

Unless you have specific knowledge to the contrary, I believe Congress doesn't allocate NASA funding down to the level of choosing the suppliers for its compute infrastructure. Congress allocates NASA's budget, perhaps earmarking certain amounts for specific missions, but then I think the rest of the decisions are up to NASA.

jlake3

IDNeon said: "I'm not impressed with the quality of this article. FPGAs are better matrix math accelerators than GPUs. Why would a supercomputer benefit from GPUs versus FPGAs? Without knowing the actual architecture of the HPCs controlled by NASA, all this article is doing is regurgitating some moron at NASA who thinks adding A100s makes a better supercomputer. NASA might build a better supercomputer with Intel CPUs and FPGAs. But then that wouldn't make Nancy Pelosi any richer now would it?"

I'm sorry, but what?

If you look at the TOP500 supercomputer rankings, none of the top systems use FPGAs as their primary accelerators or coprocessors. That sounds like it could be difficult to develop for, difficult to manage in terms of flashing and synchronizing, less flexible if you're sharing compute time among multiple projects, and still slower and less efficient than an ASIC if your workload is extremely predictable.

Co BIY

bit_user said: "Not only that, but surely the looming threat (and sometimes practical reality) of government shutdowns every couple of years can't help."

Federal government shutdowns are a perk for the workers, not a detriment. They always get paid for the missing days within a few weeks, and it ends up being a bonus vacation. It's the taxpayers who lose in a shutdown, but they also lose if government shutdowns are considered unthinkable.

bit_user said: "IMO, they should just negotiate big contracts with cloud computing providers and decommission anything that's significantly less cost-effective to operate than those contracts. They should also keep a transition plan in their back pocket, so they could revert to their own infrastructure if cloud computing costs ever got out of hand."

You would need some pretty talented managers to do that well, too. I'm not knowledgeable enough to know how difficult managing the security aspect would be.

thestryker

bit_user said: "Unless you have specific knowledge to the contrary, I believe Congress doesn't allocate NASA funding down to the level of choosing the suppliers for its compute infrastructure. Congress allocates NASA's budget, perhaps earmarking certain amounts for specific missions, but then I think the rest of the decisions are up to NASA."

NASA has been extremely screwed by congressional budgeting for years due to earmarking. Somewhere around half its budget is already spoken for by mandatory programs. Back when Shelby was still a senator, he forced the completion of a building that was being built for the Constellation program's engines, even though the program had been canceled and NASA couldn't use the building for the SLS engines. This sort of thing has dogged the agency for decades, which is partly how we ended up without a shuttle replacement even in serious planning before the program ended.

The ridiculous bureaucracy has undoubtedly caused a huge number of problems with getting and retaining talent. Who really wants to work on programs that are pointless, bad ideas, or likely to be retasked? The computing problem here really seems like a case of knowing there's no money to fix it, so they just keep moving along.

IDNeon

bit_user said: "For one thing, you have to keep in mind that the people programming them are typically experts in some other domain. So, the programming model they're using is probably OpenMP or another standard HPC framework. Also, the benefits of FPGAs are mainly in signal processing and limited-precision arithmetic, whereas most HPC code uses 64-bit floating point. Show me an FPGA that can even come close to competing on FP64 with an H100 or MI300. Oops, didn't you hear? Intel is spinning off Altera. Unless you have specific knowledge to the contrary, I believe Congress doesn't allocate NASA funding down to the level of choosing the suppliers for its compute infrastructure. Congress allocates NASA's budget, perhaps earmarking certain amounts for specific missions, but then I think the rest of the decisions are up to NASA."

All infrastructure is political. My "facetious comment" about FPGAs was meant to provoke thought. Without knowing the actual architecture of these HPCs, all we are given is a puff piece that goes out of its way to mention GPUs during a "GPU craze."

Nowhere is there any consideration of the design of the HPCs in question, which may be built using any number of accelerators other than GPUs. It could have been ASICs.

It could be Intel processors and AMD (Xilinx) FPGA accelerators.

It could be Arm.

There is no reason to mention GPUs whatsoever in this news article.

USAFRet

Co BIY said: "Federal government shutdowns are a perk for the workers, not a detriment. They always get paid for the missing days within a few weeks, and it ends up being a bonus vacation."

As a person who was subject to one or more of those "shutdowns," you're wrong.

It is not a perk or a vacation. It is maybe 1 or 2 days a month.

And you can't really go anywhere, because if Congress comes to its senses and signs something at 11:58 PM, you have to go to work the next day.

Additionally, the pay is retroactive. You are minus that pay for the term of the shutdown.

A lot of uncertainty.

And there is NOTHING in writing saying they have to provide that backpay. Yes, they do. But it is NOT in writing.

But this has gone far off topic, so let's leave it.

IDNeon

jlake3 said: "I'm sorry, but what? If you look at the TOP500 supercomputer rankings, none of the top systems use FPGAs as their primary accelerators or coprocessors. That sounds like it could be difficult to develop for, difficult to manage in terms of flashing and synchronizing, less flexible if you're sharing compute time among multiple projects, and still slower and less efficient than an ASIC if your workload is extremely predictable."

You're comparing "generalized public supercomputers" designed to sell fractions of time to the highest bidders with in-house systems designed specifically to meet exacting specifications and security requirements.

Which is also why it's ridiculous to imagine running top-secret, nonproliferation-controlled technology data on public cloud computing capabilities.

All missile technology is considered controlled under nuclear nonproliferation laws and missile technology export controls, etc.

I also want to point out that the Pleiades supercomputer at NASA specifically was built using FPGAs, if I recall.

Which further makes my point. The article (not the person here quoting it) seems buffoonish and wrongly motivated to hop on the GPU bandwagon.