BitTorrent's P2P Browser For Decentralized Websites Now In Beta

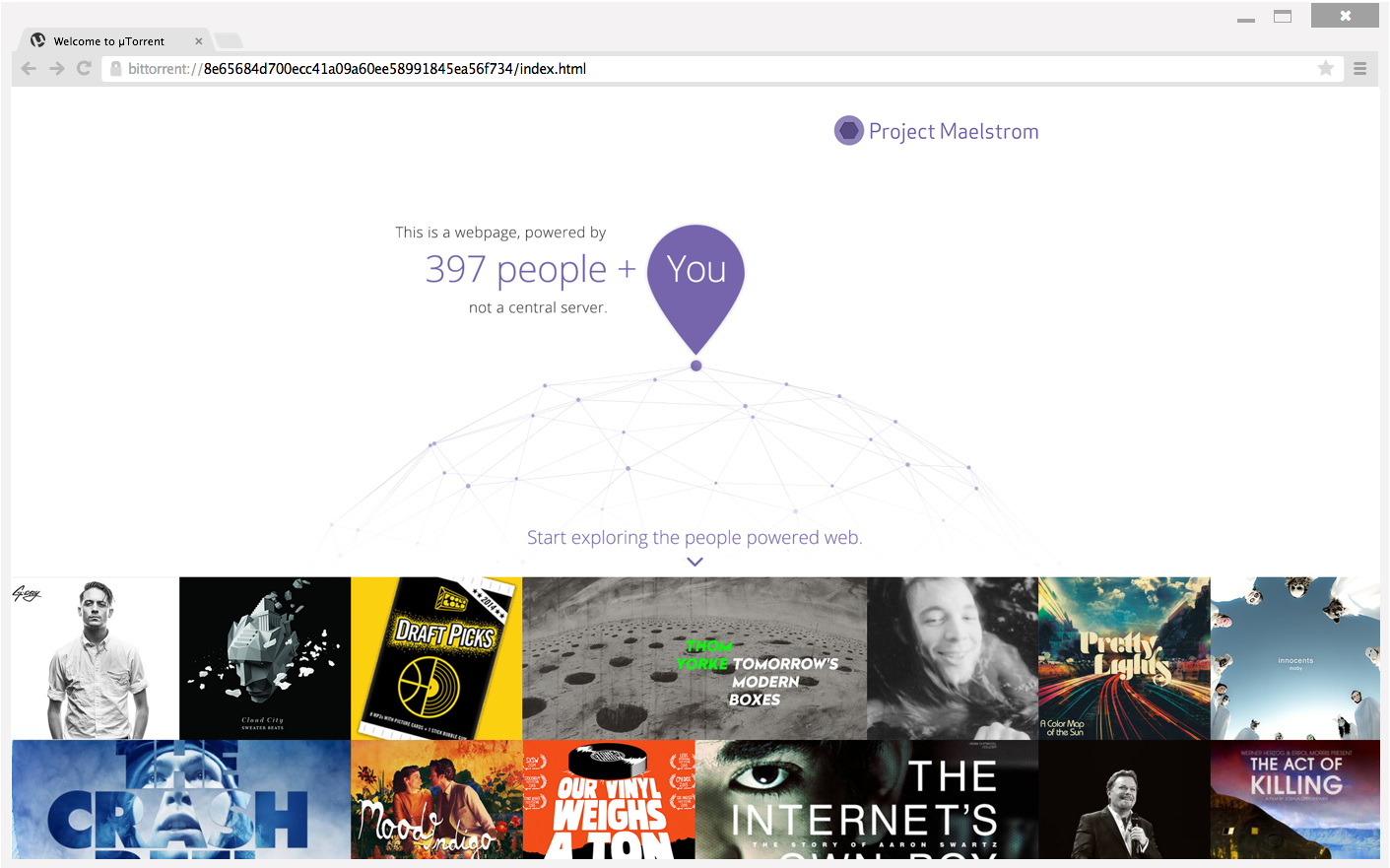

BitTorrent, the company behind file-sharing tools such as BitTorrent, uTorrent (acquired) and BitTorrent Sync, launched the beta version of its peer-to-peer browser, Maelstrom. Maelstrom is a browser based on Chromium that allows websites to work over the BitTorrent protocol and be hosted by peers, without any servers needed.

The alpha version of the browser was released in December 2014, and since then over 10,000 developers and 3,500 publishers have signed up to work with the platform. The alpha required an invitation, but the beta is public, so more people can now try the browser. For now it runs only on Windows, but a Mac version is "coming soon."

Maelstrom is built as a fork of Chromium so that it can still deliver every regular web page on the Internet, just like other browsers. In addition, it can access torrent-powered websites that are hosted on people's PCs. In effect, it's like Chrome, but with the added ability to load torrent-based websites.

The idea is that eventually, popular websites could load even faster than sites that are hosted on centralized servers. The more people "seed" a website, the higher the chance one of those seeds would be closer to you. Then the website could be delivered faster than it would be if it had to come from some other country or region where the website you're trying to access is hosted.

For sites that might not be popular enough for the P2P model to work well, it should be possible to use a mix of servers and regular peers to offer a good experience to their visitors. This way the servers ensure that there's always a strong "seed" for the website within a group of peers supporting the website, which ensures reliability. At the same time, the extra peers can still improve the speed of access for those who may be closer to another peer rather than the server.
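The hybrid model described above can be sketched in a few lines of Python. This is only an illustration, not how Maelstrom actually selects sources: the `Source` class, the `pick_source` function, and the latency figures are all hypothetical. It assumes the browser can measure a round-trip time for each source and simply takes the fastest one that is online, with the server acting as the always-available seed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Source:
    """A hypothetical place a torrent-based site can be fetched from."""
    name: str
    latency_ms: float        # assumed measured round-trip time
    is_server: bool = False  # True for the publisher's own server
    online: bool = True


def pick_source(sources: List[Source]) -> Optional[Source]:
    """Return the online source with the lowest latency, or None if none is online."""
    online = [s for s in sources if s.online]
    if not online:
        return None
    return min(online, key=lambda s: s.latency_ms)


if __name__ == "__main__":
    swarm = [
        Source("origin-server", latency_ms=120.0, is_server=True),
        Source("peer-nearby", latency_ms=15.0),
        Source("peer-far", latency_ms=200.0),
    ]
    # A nearby peer beats the more distant server.
    print(pick_source(swarm).name)

    # If every regular peer goes offline, the server still seeds the site.
    for s in swarm:
        if not s.is_server:
            s.online = False
    print(pick_source(swarm).name)
```

In this toy setup the nearby peer is chosen while it is online, and the server takes over once the regular peers disappear, which is the reliability argument made for mixing servers and peers.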

Because a torrent-based site gets faster as more peers share it, server overload problems could be eased for popular web pages or sites. With the centralized server model, if too many people visit a site and the IT administrators weren't prepared for that much traffic, the server can become overwhelmed. With the torrent model, more visitors simply mean more peers: once people visit a site, they have also downloaded it and become peers for it.
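A rough back-of-the-envelope model makes the scaling argument concrete. The sketch below is an assumption-laden toy, not a measurement: the function names, the capacity numbers, and the idea that a fixed fraction of visitors keeps seeding are all invented for illustration. "Load" here means visitors divided by total serving capacity, so a value above 1 means demand exceeds capacity.

```python
def server_only_load(visitors: int, server_capacity: int) -> float:
    """Load when a single server with fixed capacity serves every visitor."""
    return visitors / server_capacity


def swarm_load(visitors: int, server_capacity: int,
               peer_capacity: int, seeding_fraction: float) -> float:
    """Load when a fraction of visitors also serve the site to others.

    peer_capacity and seeding_fraction are hypothetical parameters:
    how many visitors one peer can serve, and what share of visitors
    stay online as peers after downloading the site.
    """
    seeders = int(visitors * seeding_fraction)
    total_capacity = server_capacity + seeders * peer_capacity
    return visitors / total_capacity


if __name__ == "__main__":
    for visitors in (100, 10_000, 1_000_000):
        central = server_only_load(visitors, server_capacity=1_000)
        swarm = swarm_load(visitors, server_capacity=1_000,
                           peer_capacity=2, seeding_fraction=0.5)
        print(f"{visitors:>9} visitors: server-only {central:8.2f}, swarm {swarm:.2f}")
```

With these made-up numbers the server-only load grows in step with traffic, while the swarm load stays below 1 because capacity grows along with the audience. Real swarms depend on how long visitors stay online and how much upload bandwidth they have.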

Maelstrom and other such projects that aim to make the web P2P could lead to less censorship, weaker DDoS attacks, cheaper hosting for site owners and many other benefits. Achieving some kind of critical mass will be important for Project Maelstrom to take off, though. Otherwise, it could be left with only a few torrent-based sites that work on it and not enough people willing to host them.


Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


PaulBags That sounds interesting for small, fairly static websites. What about sites with dynamic or constantly changing content?

What about mobile browsers?

nofish Similar project, but open source and works on any OS/browser: https://github.com/HelloZeroNet/ZeroNet

It also solves the dynamic content problem: peers are grouped by the site owner's public key (in torrents, peers are grouped by the content's hash), so the owner can distribute new updates to the site at any time.

Seb Yx This might work well with a websockets pub/sub model. Instead of pushing updates to every connection, you only push to a few, then it propagates. But not everyone wants to be a server => websocket blockers => internet moving backwards

bloc97 Is there any implementation of a security feature? When a website becomes p2p wouldn't it be hard to control malware?

dstarr3 "Is there any implementation of a security feature? When a website becomes p2p wouldn't it be hard to control malware?"

If you're torrenting, hopefully you have anti-malware measures in place already.

knowom Something like a downloadable Squid proxy cache of the homepages of the top 100,000 websites, updated weekly or monthly, would probably be a better, faster, and more reliable approach, to be honest.

This just sounds entirely too dependent on seeders, which could be quite fickle and hit or miss, not to mention content providers, which could also be hit or miss. Meanwhile, Squid cache works, and getting more people to start using it would be a good thing anyway. It would take some burden off ISPs, which might in turn solve some of the bandwidth, consumption, and capping dilemmas that consumers and ISPs face.

clifftam For those who don't have unlimited bandwidth and/or have a slow connection, why would I want others to access websites through my browser and hog my bandwidth?

milkod2001 I like the idea, but I believe that the delay between seeders being found and the website actually being displayed on screen will always be bigger than that of a request sent to the main server and the data being sent back (as it is now).

Besides, don't modern browsers like Chrome already store/cache visited websites and send requests only for new/dynamic content? How exactly are p2p browsers going to help here?


milkod2001 A p2p browser will still have to communicate with the main server when requesting the latest dynamic content for a website. It will have to compare this information with the information a potential seeder has. This will only cause another delay. I must be missing something, as I actually see zero benefits of p2p browsers. Maybe for static websites? meh