Nvidia GPUs Can Outperform Google Brain

Nvidia talks machine learning and the popular faces of humans and cats.

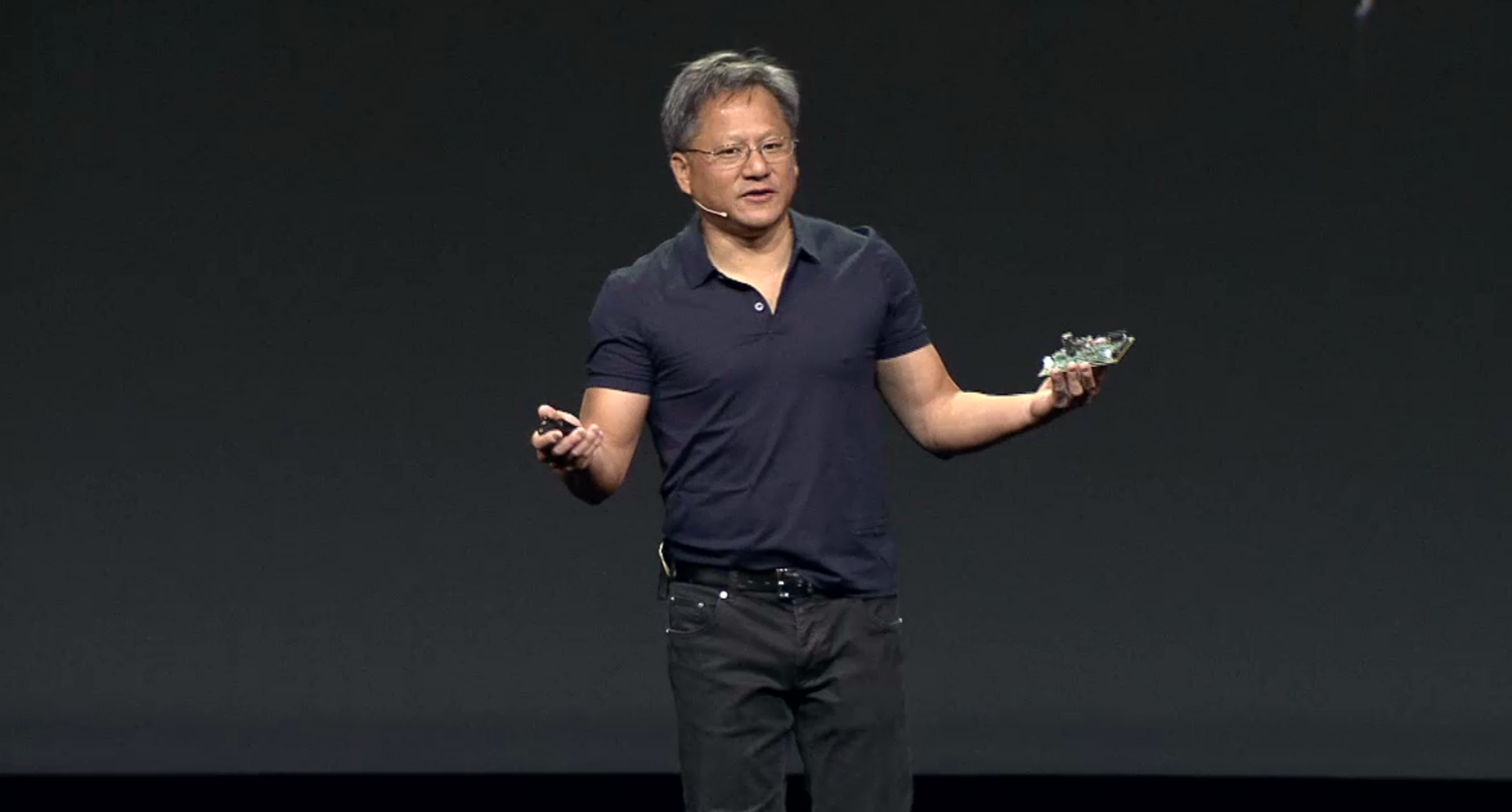

Nvidia's Jen-Hsun Huang talked a great deal about machine learning during his GTC 2014 keynote presentation. Machine learning is a branch of artificial intelligence in which a system improves as it is shown more data; it learns from examples, giving the impression that the computer is thinking.

"This is a pretty exciting time for data," he told the keynote audience. "As you know, we're surrounded by data; there are torrents of data from your cameras, from your GPS, from your cell phone, from the video you upload, on searches that we do, on purchases that you make. And in the future, as your car drives around, we're going to be collecting enormous, enormous amounts of data. And all of this data can contribute to machines being smarter."

He went on to talk about programs running on massive supercomputers that emulate how the brain functions. As Huang described it, our brains have neurons that recognize edges, with a neuron for every type of edge. These edges turn into features that, when combined with other features, become a face. Computer scientists call this object recognition.

A breakthrough in machine learning came by way of Google Brain, which consisted of 1,000 servers (16,000 CPU cores) simulating a model of the brain with a billion synapses (connections). Google Brain was trained, unsupervised, on ten million 200x200 images over three days. At the end, Google Brain revealed that two types of images show up on the Internet quite frequently: faces and cats.

He said that a billion synapses is what you'll find in a honey bee. To emulate an actual human brain, you'll need 100 billion neurons with a thousand connections each, equaling around 100 trillion connections. To train this brain using Google Brain's setup, you'll need a lot more images (around 500 million) and a lot more time: about 5 million times longer than the honey bee brain setup took.
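The figures in that comparison can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above; the assumption that training time grows in proportion to connections multiplied by images is ours, not something the keynote summary states, although it does land on the quoted 5 million figure.

```python
# Figures quoted from the keynote summary
BEE_CONNECTIONS = 1_000_000_000        # Google Brain model: one billion synapses
BEE_IMAGES = 10_000_000                # ten million training images
HUMAN_NEURONS = 100_000_000_000        # 100 billion neurons
CONNECTIONS_PER_NEURON = 1_000         # a thousand connections each
HUMAN_IMAGES = 500_000_000             # around 500 million training images

# 100 billion neurons x 1,000 connections = 100 trillion connections
human_connections = HUMAN_NEURONS * CONNECTIONS_PER_NEURON

# How much larger the human-scale model and data set are
connection_ratio = human_connections // BEE_CONNECTIONS   # 100,000x
image_ratio = HUMAN_IMAGES // BEE_IMAGES                   # 50x

# ASSUMPTION (not stated in the article): training time scales with
# connections x images, so the two ratios multiply.
time_ratio = connection_ratio * image_ratio

print(f"Human-scale connections: {human_connections:,}")
print(f"Connection ratio: {connection_ratio:,}x")
print(f"Image ratio: {image_ratio:,}x")
print(f"Estimated training-time ratio: {time_ratio:,}x")
```

Under that assumption, 100,000 times the connections and 50 times the images works out to 5,000,000 times the training time.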

Naturally, Nvidia tackled this problem by developing a solution of its own. Huang said that the same kind of training is now possible using three GPU-accelerated servers with 12 GPUs and 18,432 CUDA processor cores in total (Google Brain has around 16,000 CPU cores). According to Huang, the Nvidia solution uses 100 times less energy and costs 100 times less.
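For a sense of scale, the hardware totals break down as follows. This is a rough sketch using only the numbers quoted above; note that a CUDA core is a much simpler execution unit than a CPU core, so the core-count ratio is not a performance comparison.

```python
# Figures quoted from the keynote summary
GPU_SERVERS = 3
TOTAL_GPUS = 12
TOTAL_CUDA_CORES = 18_432
GOOGLE_BRAIN_CPU_CORES = 16_000        # "around 16,000" per the article

gpus_per_server = TOTAL_GPUS // GPU_SERVERS          # GPUs in each server
cores_per_gpu = TOTAL_CUDA_CORES // TOTAL_GPUS       # CUDA cores on each GPU

# Raw core-count ratio only; CUDA cores and CPU cores are not equivalent.
core_count_ratio = TOTAL_CUDA_CORES / GOOGLE_BRAIN_CPU_CORES

print(f"GPUs per server: {gpus_per_server}")
print(f"CUDA cores per GPU: {cores_per_gpu:,}")
print(f"CUDA cores vs. CPU cores: {core_count_ratio:.3f}x")
```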


Kevin Parrish has over a decade of experience as a writer, editor, and product tester. His work focused on computer hardware, networking equipment, smartphones, tablets, gaming consoles, and other internet-connected devices. His work has appeared in Tom's Hardware, Tom's Guide, Maximum PC, Digital Trends, Android Authority, How-To Geek, Lifewire, and others.


skit75 The most important part of the article in my opinion, had the least amount of information.

bjaminnyc 100x cheaper & 100x less power = what % of performance of google brain. Likely higher than 1%, but with simple cores. Still interesting though.

InvalidError

> 12972099 said: I think it's already clear parallel computing beats serial workloads.

Was there ever any doubt? Parallelizing workloads wherever parallelizing them was practical has been done for the past 30+ years in mainframes and mini-computers.

The reason desktop computing is so heavily reliant on single-thread performance is because most user-interactive code does not multi-thread easily, often does not scale well or meaningfully.

Blazer1985 Yeah, still 1 cuda core != 1 cpu core so this parallelism (word that fits perfectly here) is highly incorrect imho.