Nvidia Tech Uses AI to Optimize Chip Designs up to 30X Faster

AI can help find optimal chip design solutions to improve cost, performance, and power consumption.

Nvidia is one of the leading designers of chips used for artificial intelligence (AI) and machine learning (ML) acceleration, so it is apt that it looks set to be one of the pioneers in applying AI to chip design. Today, it published a paper and blog post revealing how its AutoDMP system can accelerate modern chip floor-planning using GPU-accelerated AI/ML optimization, delivering up to a 30X speedup over previous methods.

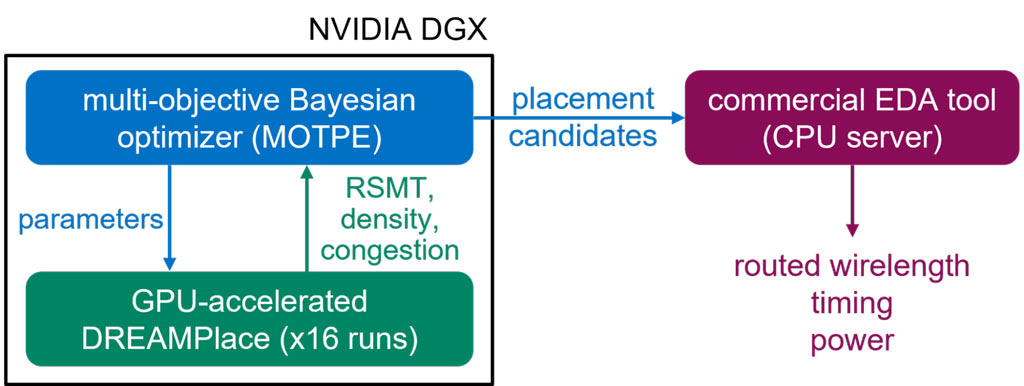

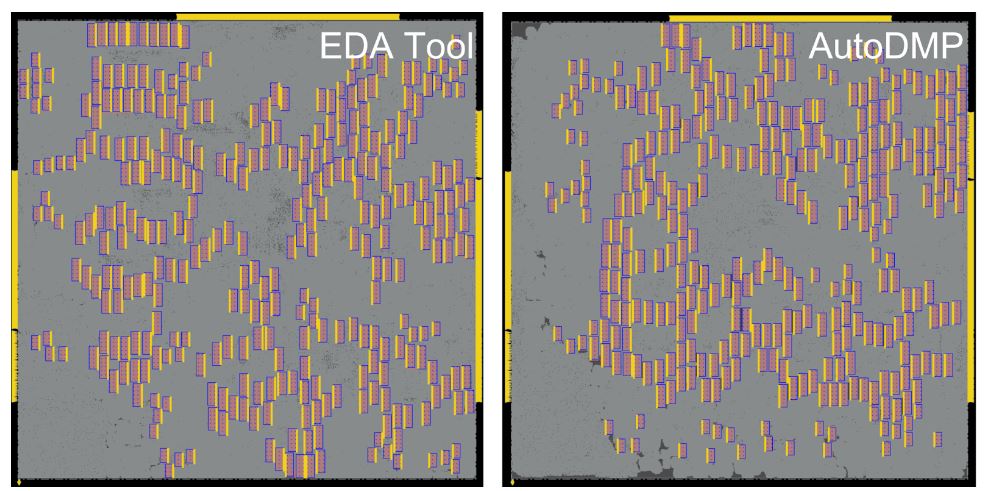

AutoDMP is short for Automated DREAMPlace-based Macro Placement. It is designed to plug into an Electronic Design Automation (EDA) system used by chip designers, to accelerate and optimize the time-consuming process of finding optimal placements for the building blocks of processors. In one of Nvidia’s examples of AutoDMP at work, the tool applied its AI to the problem of determining an optimal layout of 256 RISC-V cores, accounting for 2.7 million standard cells and 320 memory macros. AutoDMP took 3.5 hours to come up with an optimal layout on a single Nvidia DGX Station A100.

Macro placement has a significant impact on the landscape of the chip, “directly affecting many design metrics, such as area and power consumption,” notes Nvidia. Placement optimization is therefore a key design task for improving chip performance and efficiency, both of which directly affect the customer.

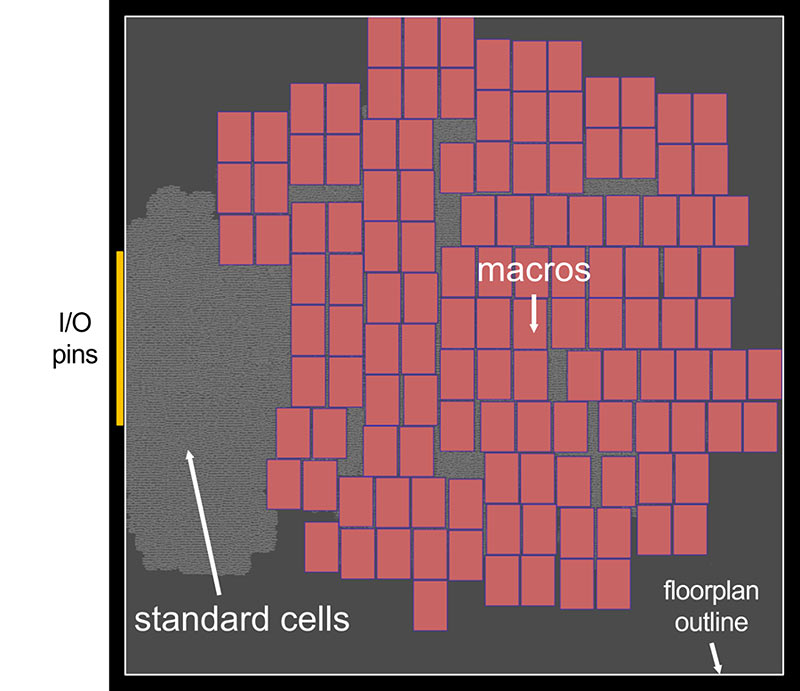

On the topic of how AutoDMP works, Nvidia says that its analytical placer “formulates the placement problem as a wire length optimization problem under a placement density constraint and solves it numerically.” GPU-accelerated algorithms deliver up to a 30X speedup compared to previous placement methods. Moreover, AutoDMP supports mixed-size cells. In the top animation, you can see AutoDMP placing macros (red) and standard cells (gray) to minimize wire length in a constrained area.
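To make the idea of "wire length optimization under a density constraint, solved numerically" more concrete, here is a toy sketch in plain Python. It is not AutoDMP's or DREAMPlace's actual formulation: the quadratic wirelength model, the pairwise crowding penalty that stands in for a density constraint, and every name and parameter (`place`, `d_min`, `lam`, the pad and net lists) are assumptions chosen for illustration.

```python
import math
import random

def centroid(pos, net):
    """Average position of the pins on one net."""
    cx = sum(pos[i][0] for i in net) / len(net)
    cy = sum(pos[i][1] for i in net) / len(net)
    return cx, cy

def wirelength(pos, nets):
    """Smooth wirelength proxy: squared distance of each pin to its net centroid."""
    total = 0.0
    for net in nets:
        cx, cy = centroid(pos, net)
        total += sum((pos[i][0] - cx) ** 2 + (pos[i][1] - cy) ** 2 for i in net)
    return total

def crowding(pos, d_min):
    """Stand-in for a density constraint: penalize pairs closer than d_min."""
    total = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            dist = math.hypot(pos[i][0] - pos[j][0], pos[i][1] - pos[j][1])
            if dist < d_min:
                total += (d_min - dist) ** 2
    return total

def objective(pos, nets, d_min, lam):
    return wirelength(pos, nets) + lam * crowding(pos, d_min)

def gradient(pos, nets, d_min, lam):
    """Analytic gradient of the objective with respect to every cell position."""
    grad = [[0.0, 0.0] for _ in pos]
    for net in nets:
        cx, cy = centroid(pos, net)
        for i in net:
            grad[i][0] += 2.0 * (pos[i][0] - cx)
            grad[i][1] += 2.0 * (pos[i][1] - cy)
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            dx = pos[i][0] - pos[j][0]
            dy = pos[i][1] - pos[j][1]
            dist = math.hypot(dx, dy)
            if dist < d_min:
                dist = max(dist, 1e-9)  # avoid division by zero
                push = -2.0 * lam * (d_min - dist) / dist
                grad[i][0] += push * dx
                grad[i][1] += push * dy
                grad[j][0] -= push * dx
                grad[j][1] -= push * dy
    return grad

def place(pos, nets, fixed, d_min=1.0, lam=2.0, step=0.02, iters=500):
    """Plain gradient descent; cells listed in `fixed` (e.g. I/O pads) never move."""
    pos = [list(p) for p in pos]
    for _ in range(iters):
        grad = gradient(pos, nets, d_min, lam)
        for i, g in enumerate(grad):
            if i not in fixed:
                pos[i][0] -= step * g[0]
                pos[i][1] -= step * g[1]
    return pos

# Hypothetical example: four fixed pads at the corners, six movable cells.
rng = random.Random(0)
pads = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
cells = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(6)]
start = pads + cells
nets = [[0, 4, 5], [1, 5, 6], [2, 6, 7], [3, 7, 8], [4, 8, 9], [5, 9, 7]]
final = place(start, nets, fixed={0, 1, 2, 3})
print("objective before:", objective(start, nets, 1.0, 2.0))
print("objective after: ", objective(final, nets, 1.0, 2.0))
```

The wirelength term pulls connected cells together while the penalty term keeps them from piling up in one spot. A production placer balances the same two forces, only with millions of cells and with the gradient computations running on a GPU.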

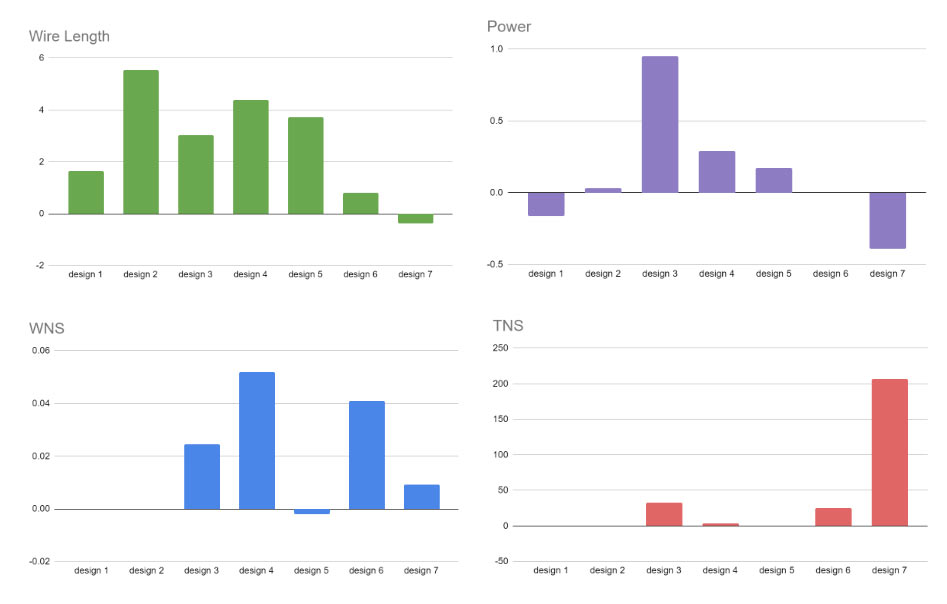

We have talked about the design speed benefits of using AutoDMP, but have not yet touched upon the quality of its results. In the figure above, you can see that compared to seven alternative existing designs for a test chip, the AutoDMP-optimized chip offers clear benefits in wire length, power, worst negative slack (WNS), and total negative slack (TNS). Results above the line are a win for AutoDMP versus the various rival designs.
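For readers unfamiliar with the two timing metrics, the short sketch below shows how WNS and TNS are commonly derived from per-endpoint timing slack. The slack values are made up for illustration, the function name `timing_summary` is hypothetical, and reporting WNS as 0 when no endpoint fails is one common convention rather than a universal one.

```python
def timing_summary(slacks_ps):
    """Return (WNS, TNS) in picoseconds for a list of endpoint slacks.

    WNS is the single worst (most negative) slack, clamped at 0 when every
    endpoint meets timing. TNS is the sum of all negative slacks.
    """
    wns = min(0, min(slacks_ps))
    tns = sum(s for s in slacks_ps if s < 0)
    return wns, tns

# Hypothetical endpoint slacks: two paths miss timing, three meet it.
wns, tns = timing_summary([120, -50, 300, -200, 0])
print(wns, tns)  # -200 -250
```

Values closer to zero are better for both metrics: WNS shows how badly the single slowest path misses its deadline, while TNS shows how much timing violation there is across the whole design.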

AutoDMP is open source, with the code published on GitHub.

Nvidia isn’t the first chip designer to leverage AI for optimal layouts; back in February we reported on Synopsys and its DSO.ai automation tool, which has already been used for 100 commercial tape-outs. Synopsys described its solution as an “expert engineer in a box.” It added that DSO.ai was a great fit for on-trend multi-die silicon designs, and its use would free engineers from dull iterative work, so they could bend their talents towards more innovation.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.


bit_user: For once, it seems like Nvidia might be a latecomer. From 2021:

https://www.anandtech.com/show/16836/cadence-cerebrus-to-enable-chip-design-with-ml-ppa-optimization-in-hours-not-months

gg83: This is exactly how a country with enough money to throw at chip design can get around patents and such.

jkflipflop98: This is good for a small design house or startup or something. All the big boys are four parallel universes ahead of you.

OneMoreUser: Is Nvidia using AI supposed to be a selling point? If not, then why should we care?

Judging from the 30- and not the 40- generation of Nvidia GPUs, their AI isn't working, since their products have not gotten cheaper and efficiency isn't exactly revolutionary either.

Or maybe it is because they have used AI to improve their pricing, as in how they make more money for each GPU they sell.

Before anyone buys, do yourself a favor: look at what AMD and Intel are bringing in terms of GPUs, and make sure to notice the current prices rather than those from the launch reviews (and note also that DLSS and FSR are damn close to equal in many situations).

Zeus287 (replying to OneMoreUser):

I hate Nvidia right now just as much as the next gamer, but their 40 series chips are the most efficient GPUs on the market. The 4090 might not seem that way with the stock power limit, but that's because Nvidia was worried about AMD before launch and pushed the power limit WAY past its efficiency curve. With the power limit set to just under 300W, it only loses about 5-10% performance. That makes it more than twice as efficient as the 3080 from last gen, and MUCH more efficient than the 3090. It's also much more efficient than the 7900XTX at that wattage, while having more performance.

That said, I don't recommend anyone buy current gen GPUs. With Nvidia charging $800 for what essentially should've been the 4060, and AMD not having a high enough jump in performance over last gen and moving a GPU up the stack to charge more, buying them is telling them the prices are okay. As much as I hate Intel for all their anti-consumer practices, I really hope they succeed. Nvidia and AMD need competition to put them in their places.

bit_user (replying to Zeus287):

The reason why the RTX 4090 is so expensive is that it's big. And all desktop GPUs are normally clocked above their peak-efficiency point. The recipe for making an efficient GPU is basically just to make it big and clock it low. That's exactly what you're describing.

Because size costs money, the dilemma is that you cannot have a GPU that's both cheap and efficient. However, as we've seen in the past, die size is not a perfect predictor of performance, so I don't mean to say that architecture doesn't matter. However, a bigger, lower-clocked GPU will generally tend to be more efficient.

Disclaimers:

When comparing die size, I'm assuming the GPUs in question are on a comparable process node.

When comparing efficiency, I'm assuming the GPUs in question are at comparable performance levels. If you're willing to throw performance out the window, you can obviously make a very efficient GPU.

Johnpombrio: Starting with the 8086 CPU, Intel became concerned with the integrated circuit's layout very early on. Within a couple of generations, the company started to develop tools to aid the engineers in designing the layout. Less than a decade later (in the late 1980s), these design tools were fully responsible for the entire layout. Intel didn't call it "AI", just a design tool. This is VERY old news.

bit_user (replying to Johnpombrio):

I think we all know that automated routing and layout isn't new. However, they seem to be tackling a higher-level floorplanning problem, which involves instantiating multiple macros. I doubt they're the first to automate such a task, but they do claim that it's "traditionally" a somewhat manual process. The paper indeed references benchmarks by the TILOS-AI-Institute, which immediately tells us this is by no means a new optimization target.

To understand where/how they used "AI" in the process - exactly what they mean by that - you'd have to read their paper or perhaps just the blog post linked from the article. Unfortunately, those links aren't working for me (I get a "bad merchant" error). So, I've gone to their site and found the links for myself:

https://developer.nvidia.com/blog/autodmp-optimizes-macro-placement-for-chip-design-with-ai-and-gpus/

https://research.nvidia.com/publication/2023-03_autodmp-automated-dreamplace-based-macro-placement

derekullo: Teaching AI to build more efficient and better versions of chips could never backfire on us!