Nvidia to Sell 550,000 H100 GPUs for AI in 2023: Report

Raking in billions on AI and HPC compute GPUs this year.

The generative AI boom is driving sales of servers used for artificial intelligence (AI) and high-performance computing (HPC), and dozens of companies will benefit from it. But one company will likely benefit more than the others. Nvidia is estimated to sell over half a million of its high-end H100 compute GPUs, worth tens of billions of dollars, in 2023, the Financial Times reports.

Nvidia is set to ship around 550,000 of its latest H100 compute GPUs worldwide in 2023, with the majority going to American tech firms, according to multiple insiders linked to Nvidia and TSMC who spoke to the Financial Times. Nvidia declined to comment on the matter, which is understandable considering FTC rules.

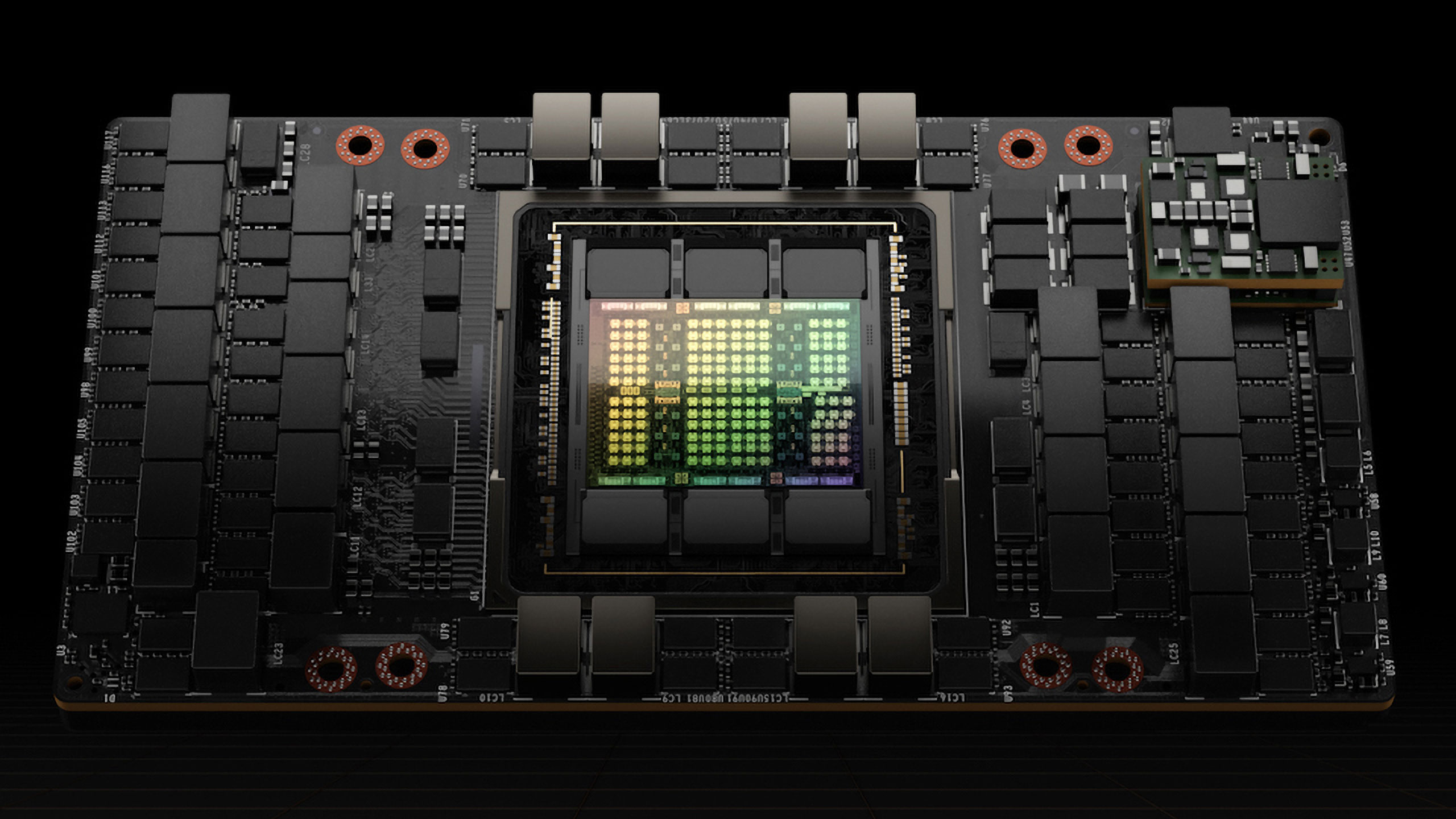

While we don't know the precise mix of GPUs sold, each Nvidia H100 80GB HBM2E compute GPU add-in card (14,592 CUDA cores, 26 FP64 TFLOPS, 1,513 FP16 TFLOPS) retails for around $30,000 in the U.S. However, this is not the company's highest-performing Hopper architecture-based part. In fact, it is the cheapest one, at least for now. Meanwhile, in China, one such card can cost as much as $70,000.

Nvidia's range-topping H100-powered offerings include the H100 SXM 80GB HBM3 (16,896 CUDA cores, 34 FP64 TFLOPS, 1,979 FP16 TFLOPS) and the H100 NVL 188GB HBM3 dual-card solution. These parts are either sold directly to server manufacturers like Foxconn and Quanta or supplied inside servers that Nvidia sells directly. Nvidia is also about to start shipping its GH200 Grace Hopper platform, which pairs its 72-core Grace processor with an H100 compute GPU equipped with HBM3E memory.

Nvidia does not publish prices for its H100 SXM, H100 NVL, and GH200 Grace Hopper products, as they depend on the volume and the business relationship between Nvidia and a particular customer. Still, even if Nvidia sold each H100-based product for $30,000, that would amount to $16.5 billion this year from its latest-generation compute GPUs alone. And the company does not sell only H100-series compute GPUs.
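The $16.5 billion figure is simple unit-times-price arithmetic. The sketch below reproduces it, assuming (as the estimate does) a flat average selling price of $30,000 per unit, the approximate U.S. retail price of the H100 PCIe card; the function name is hypothetical, and real prices vary by product and customer.

```python
# Back-of-the-envelope estimate of Nvidia's 2023 H100 revenue.
# Assumption: every unit sells at a flat ~$30,000, the approximate U.S.
# retail price of the H100 PCIe card; actual prices vary per customer.

H100_UNITS_2023 = 550_000    # Financial Times shipment estimate
ASSUMED_PRICE_USD = 30_000   # hypothetical flat average selling price


def estimated_revenue(units: int, price_usd: int) -> int:
    """Return estimated revenue in U.S. dollars for a unit count and price."""
    return units * price_usd


revenue = estimated_revenue(H100_UNITS_2023, ASSUMED_PRICE_USD)
print(f"${revenue / 1e9:.1f} billion")  # prints "$16.5 billion"
```

Because pricier SXM, NVL, and GH200 parts are in the mix, this flat-price calculation is best read as a floor rather than a forecast.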

Some companies still buy Nvidia's previous-generation A100 compute GPUs to expand their existing deployments without making any changes to their software or hardware. There are also the China-specific A800 and H800 models.

While we cannot precisely estimate where Nvidia's earnings from compute GPU sales will land, nor how many compute GPUs the company will sell this year, we can make some educated guesses. Nvidia's datacenter business generated $4.284 billion in the company's Q1 FY2024 (ended April 30). Given the ongoing AI frenzy, sales of Nvidia's compute GPUs were likely higher in its Q2 FY2024, which ended in late July. In other words, fiscal 2024 is set to be a record-breaking year for Nvidia's datacenter unit.

It's noteworthy that Nvidia's partner TSMC can barely meet demand for compute GPUs right now, as all of these GPUs use its CoWoS (chip-on-wafer-on-substrate) packaging, and the foundry is struggling to boost capacity for this packaging method. With numerous companies looking to purchase tens of thousands of compute GPUs for AI purposes, supply is unlikely to match demand for quite some time.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

JamesJones44: Seems to match up with general Wall St. estimates. The average revenue target for Nvidia is $43.8 billion in fiscal 2024 sales (Nvidia's fiscal 2024 year started in February). These numbers put H100 sales somewhere between $16.5 billion and $33 billion, meaning the rest of the product mix is making up the difference, which was traditionally around $18 billion in revenue.