Watch Dogs Legion Benchmarked: Seriously Demanding

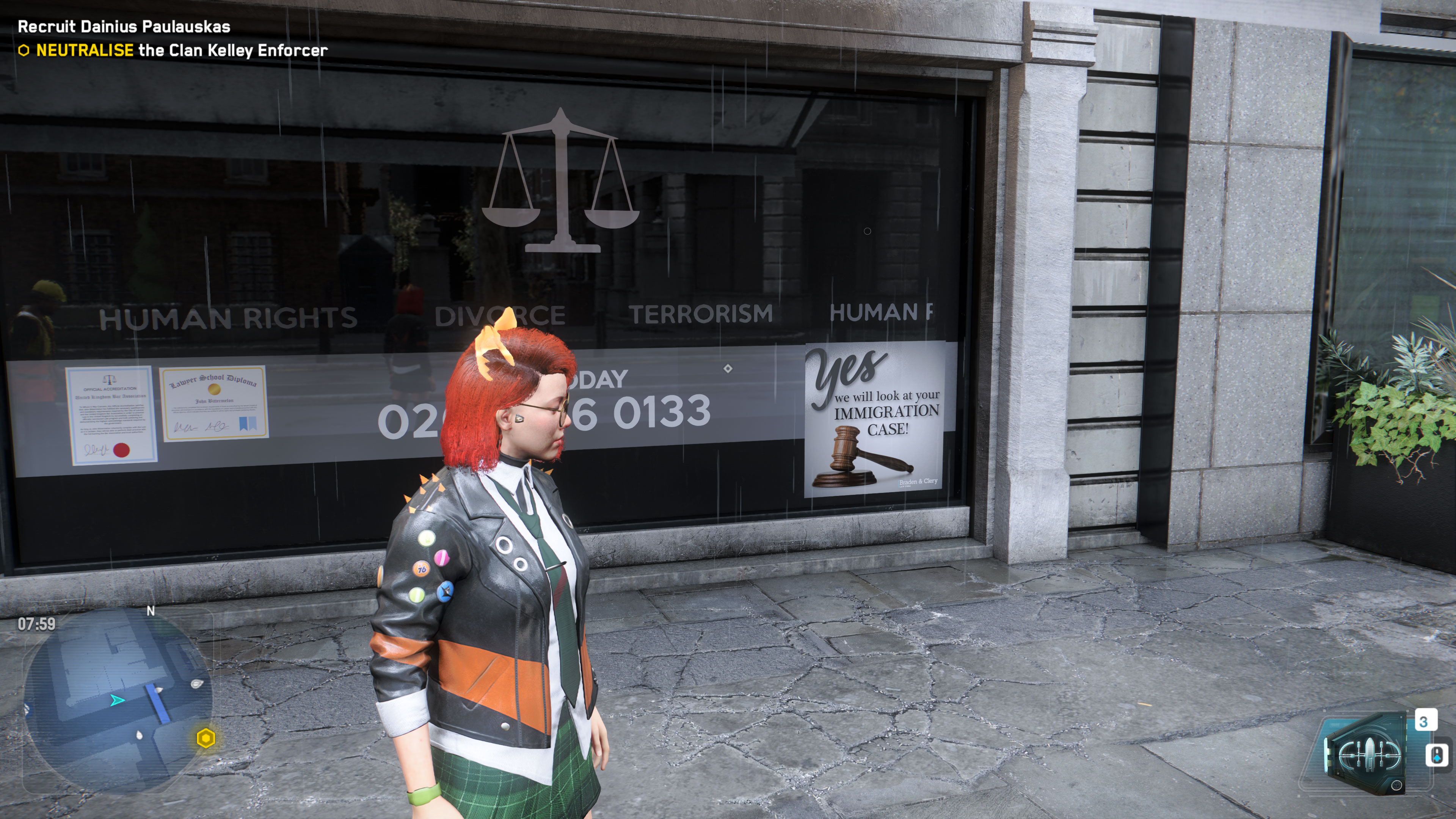

Say hello to ray traced reflections in wet and shiny London.

Watch Dogs Legion came out this week, and we've been running some early benchmarks on some of the best graphics cards. It's still early, and performance may improve with patches and driver updates — with a PC patch supposedly landing today to at least somewhat improve things. In the meantime, even cards from the very top of our GPU benchmarks hierarchy can struggle. Thank goodness the game supports DLSS 2.1 for Nvidia's RTX cards, and you can try temporal upscaling on other GPUs as well.

We've run a limited set of cards through the game's built-in benchmark, which seems reasonably representative of what you'll see in the game. It's a true-to-life rainy and damp recreation of London, which means lots of puddles to make the most of the ray traced reflections. There are also plenty of windows, shiny cars, and other surfaces that can cast reflections as well. The windows are probably the most noticeable change with ray tracing, since they often face you and thus reflect things behind you — screen space reflections can't do stuff like that. The only drawback is that the performance hit for ray tracing is, as usual, pretty significant.

It's also worth mentioning that Ubisoft is working on patches for the game that are supposed to improve performance. We saw a new download occur today on at least one of our test systems, but we're not sure if this is the promised patch or not. A quick check of performance didn't show any changes, so hopefully there are still more updates incoming that will improve things.

We're using our standard GPU testbed, which you can see on the right. We've tested all of the latest RTX GPUs (30-series and 20-series Super, plus the 2060 and 2080 Ti), along with AMD's top three Navi GPUs (RX 5700 XT, RX 5700, and RX 5600 XT). To help cut down on the total number of tests to run, we've focused on the medium and ultra presets at 1080p, 1440p, and 4K. For the RTX cards, we also added ultra quality ray tracing with DLSS performance mode. Native resolution ray tracing might be viable on the fastest cards at 1080p and 1440p, but 4K with maxed out settings basically isn't going to run well without DLSS.

Before we get to the charts, let's also note that the low preset basically performed the same as the medium preset (a few percent faster). High quality ends up running 10-15 percent slower than medium, or 50-60 percent faster than ultra. The very high preset runs about 20 percent slower than medium and 25 percent faster than ultra. That can vary depending on how much VRAM your GPU has, along with other factors, and as usual there are quite a few options you can tweak and tune to improve performance.

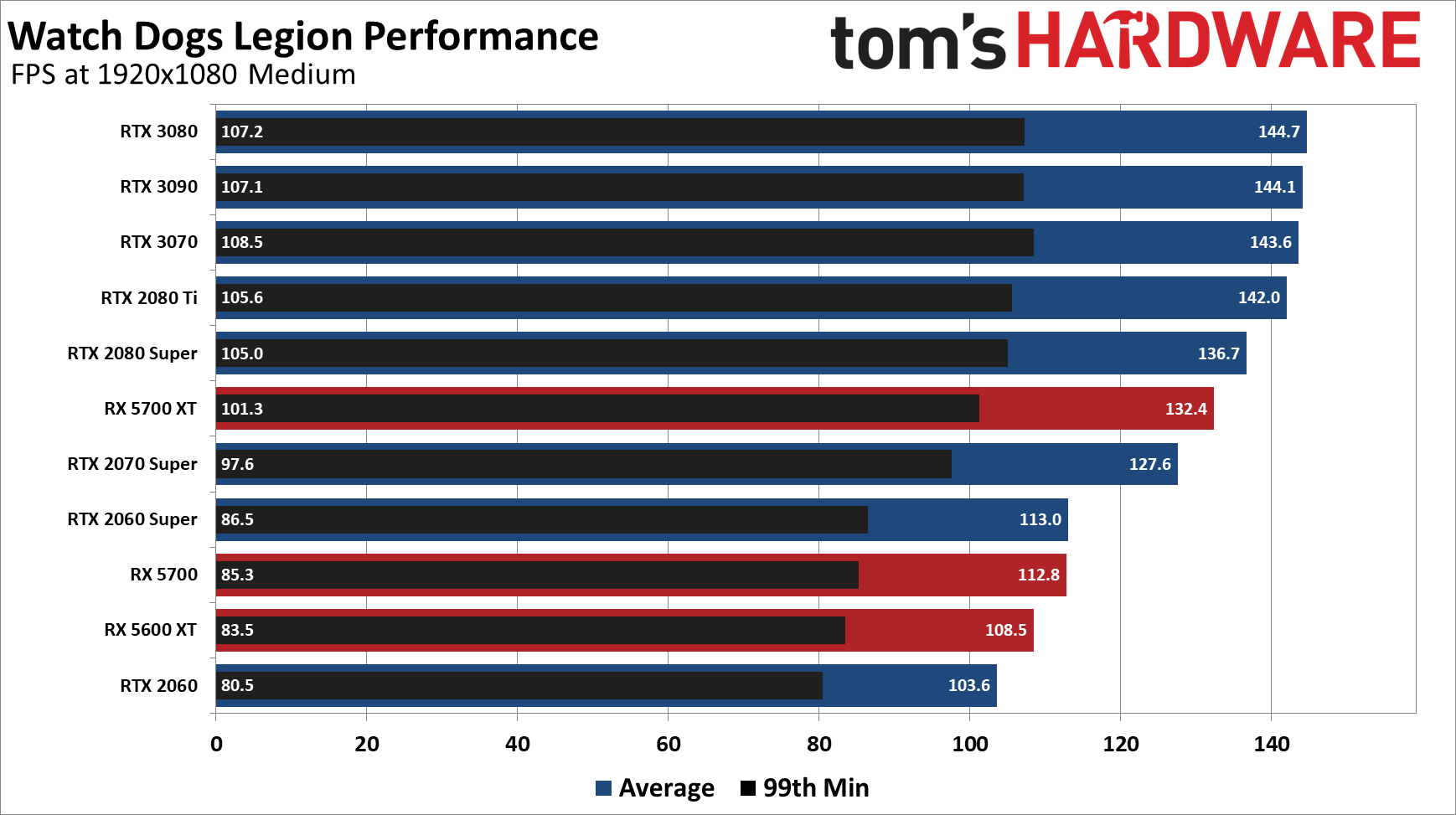

The game also appears to be pretty CPU limited, at least at lower settings — none of the GPUs managed more than about 145 fps average at 1080p. Due to the potentially performance changing patch, however, we've held off on doing any additional CPU tests for now. Just be warned that with all the 'unique' citizens running around town, plus traffic and drones, you'll want a pretty potent CPU to keep things running smoothly.

Screenshots, set 1 (1440p presets): Low, Medium, High, Very High, Ultra, Ultra plus ray tracing (native), Ultra plus RT with DLSS Performance, Ultra plus RT with DLSS Quality.

Screenshots, set 2 (1440p ultra, ray traced reflection levels): no RT, RT Medium, RT High, RT Ultra.

Screenshots, sets 3 and 4 (1440p comparisons): Medium, Ultra, Ultra plus RT with DLSS Performance.

Depending on how realistic you want the game to look, the various quality modes make quite a difference. Ray tracing in particular can really help flesh out the scenery. It's not something that's absolutely required, and you basically want to enable DLSS for most of the RTX GPUs, but the end results look quite good. Step away from the reflective surfaces and it's no huge loss, but just watching buses and cars drive around the city with their transparent reflective windows looks very cool.


It's a shame we can't get full ray tracing for all the other effects like shadows, indirect lighting, and global illumination, but Watch Dogs Legion is already pretty taxing even without RT. The second set of screenshots above shows the three levels of ray traced reflections. Basically, the higher the setting, the farther rays are cast to create reflections.

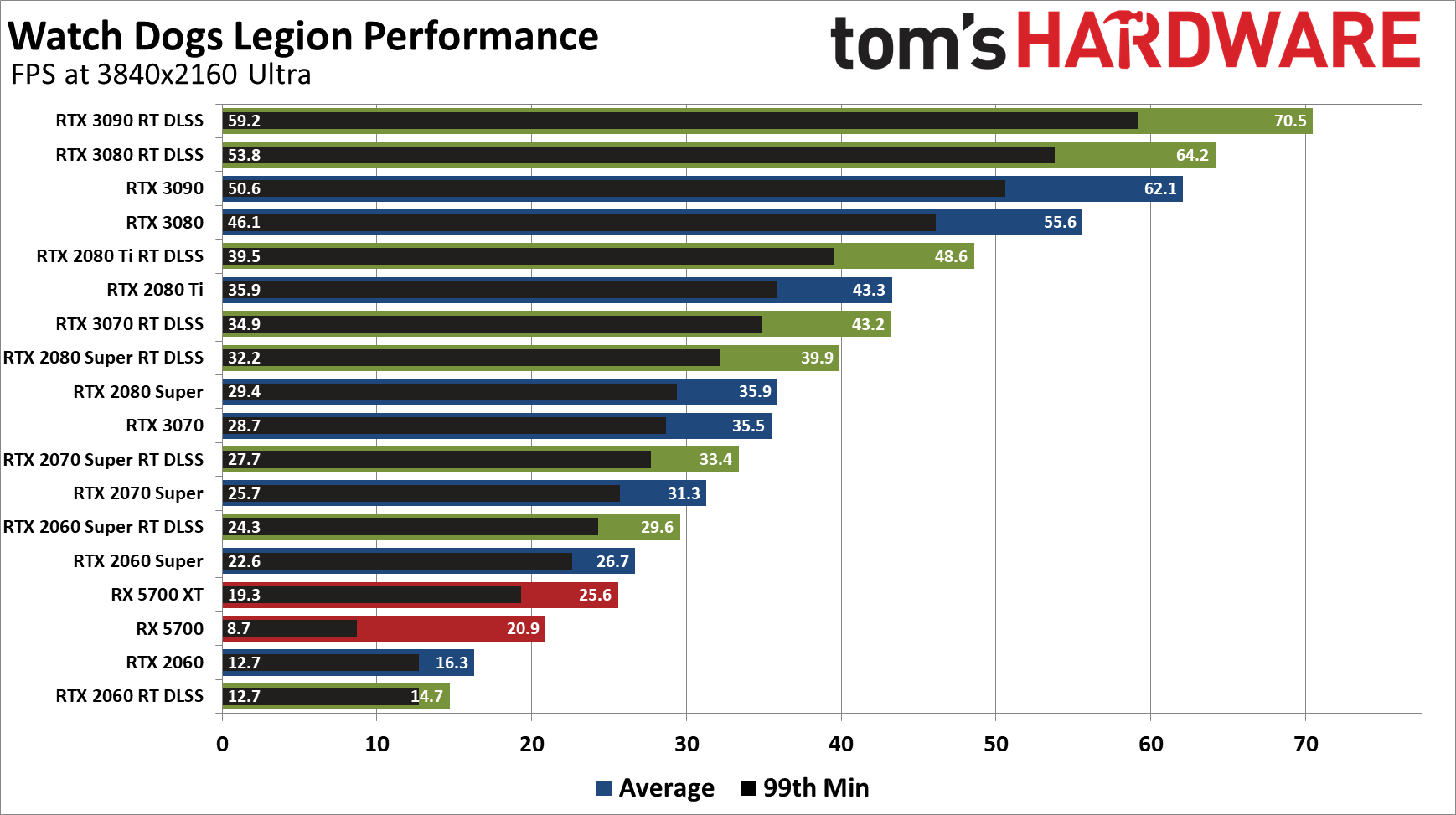

We'll see the results in a moment, but basically you'll need an RTX 3080 or 3090 to do 4K ultra with ray tracing at 60 fps, and that's still with DLSS in performance mode. The 3090 can maybe do DLSS balanced for slightly better quality, but then it will start to dip below 60. Based on Legion's performance, we may still be another generation or two of hardware away from full ray tracing effects.

Starting with 1080p at medium quality, everything runs just fine — not that we'd expect anything less. The slowest GPUs we tested, RTX 2060 and RX 5600 XT, average over 100 fps, with minimums of 80 fps. Of course, by the same token none of the GPUs can go higher than 145 fps. The top four GPUs tested are all clumped together in the 142-145 fps range, and clearly CPU bottlenecks are holding them back.

The image quality comparisons from above show the difference in visuals, but while Watch Dogs Legion generally looks fine at medium quality, there are a lot of missing details. Reflections for example are pretty much fake, with dynamic objects completely missing from the puddles and such. Shadows are less present and accurate, textures are blurrier. It's the usual tradeoffs, in other words.

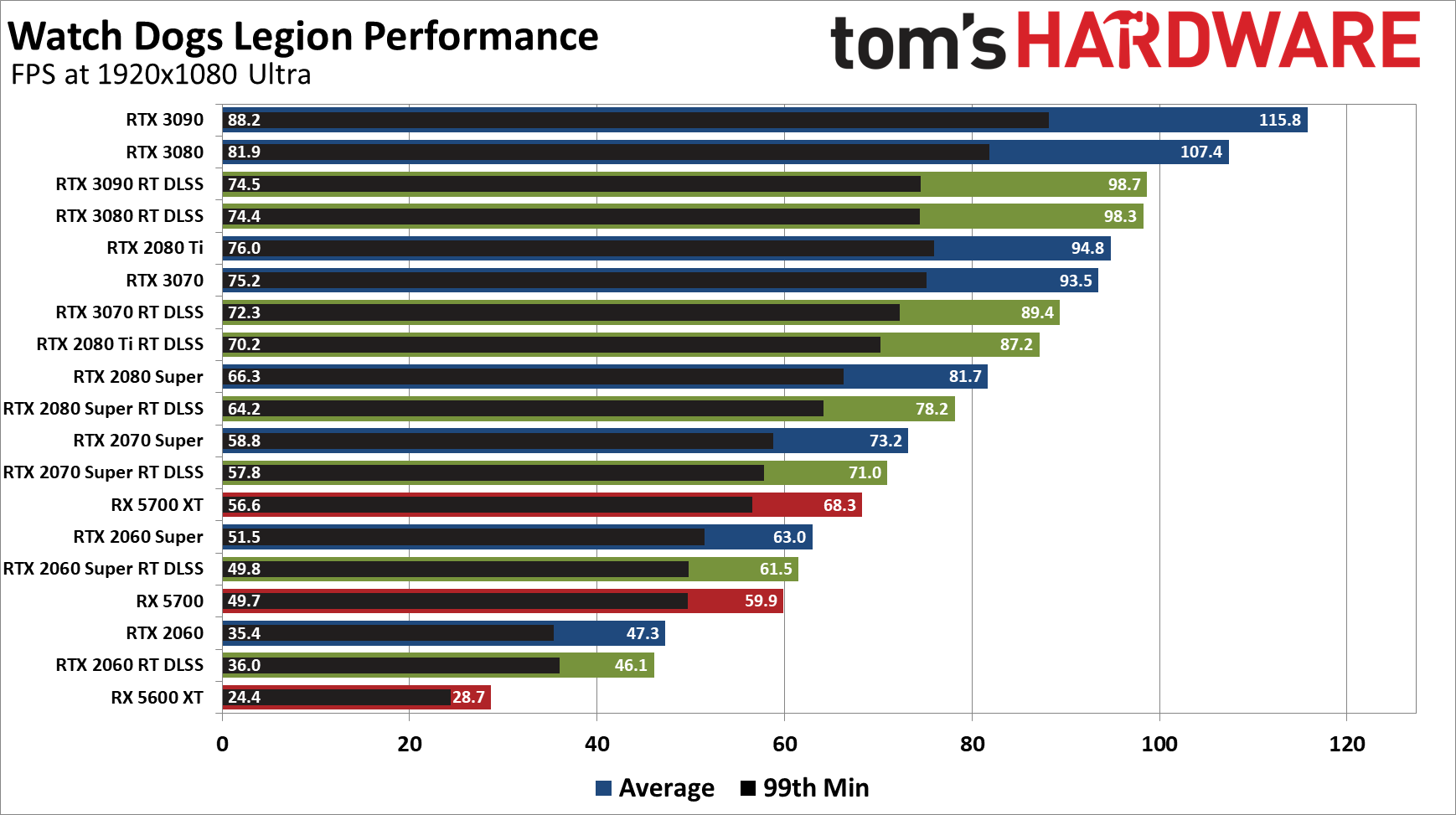

1080p Ultra is another matter entirely. Technically, it uses more VRAM than the 6GB cards can provide, which likely accounts for their poor showings. Still, the RX 5600 XT really struggles, while the RTX 2060 is at least playable. (AMD's 20.10.1 drivers aren't Game Ready, so a driver update or game patch will likely help.) Other than the 6GB cards, everything basically averages 60 fps or more, though the RX 5700 and RTX 2060 Super are right on the fence.

Perhaps more interesting is that if we enable ray tracing and DLSS Performance mode on the RTX cards, frame rates aren't that much worse than native resolution without RT and DLSS. Of course, 1080p with DLSS Performance mode is sort of the worst-case scenario, as it upscales 960x540 content and there's just not as much that can be done. DLSS tends to look better with higher base resolutions in our experience.
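To make the upscaling arithmetic concrete, here is a minimal sketch of how the internal render resolution follows from the output resolution. The 0.5 per-axis scale for Performance mode matches the 960x540 figure above; the Quality and Balanced scales are commonly cited values rather than anything measured here, and the function and table names are made up for illustration.

```python
# Per-axis render scale for each DLSS mode.
# "performance" (0.5) matches the 960x540 -> 1080p example in the text;
# "quality" and "balanced" are commonly cited values, assumed here.
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
}


def dlss_render_resolution(width, height, mode):
    """Return the approximate internal (pre-upscale) resolution."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    outputs = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}
    for name, (w, h) in outputs.items():
        print(name, dlss_render_resolution(w, h, "performance"))
```

With Performance mode, 1080p renders from 960x540, 1440p from 1280x720, and 4K from 1920x1080, which is why the upscaler has more source detail to work with at higher output resolutions.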

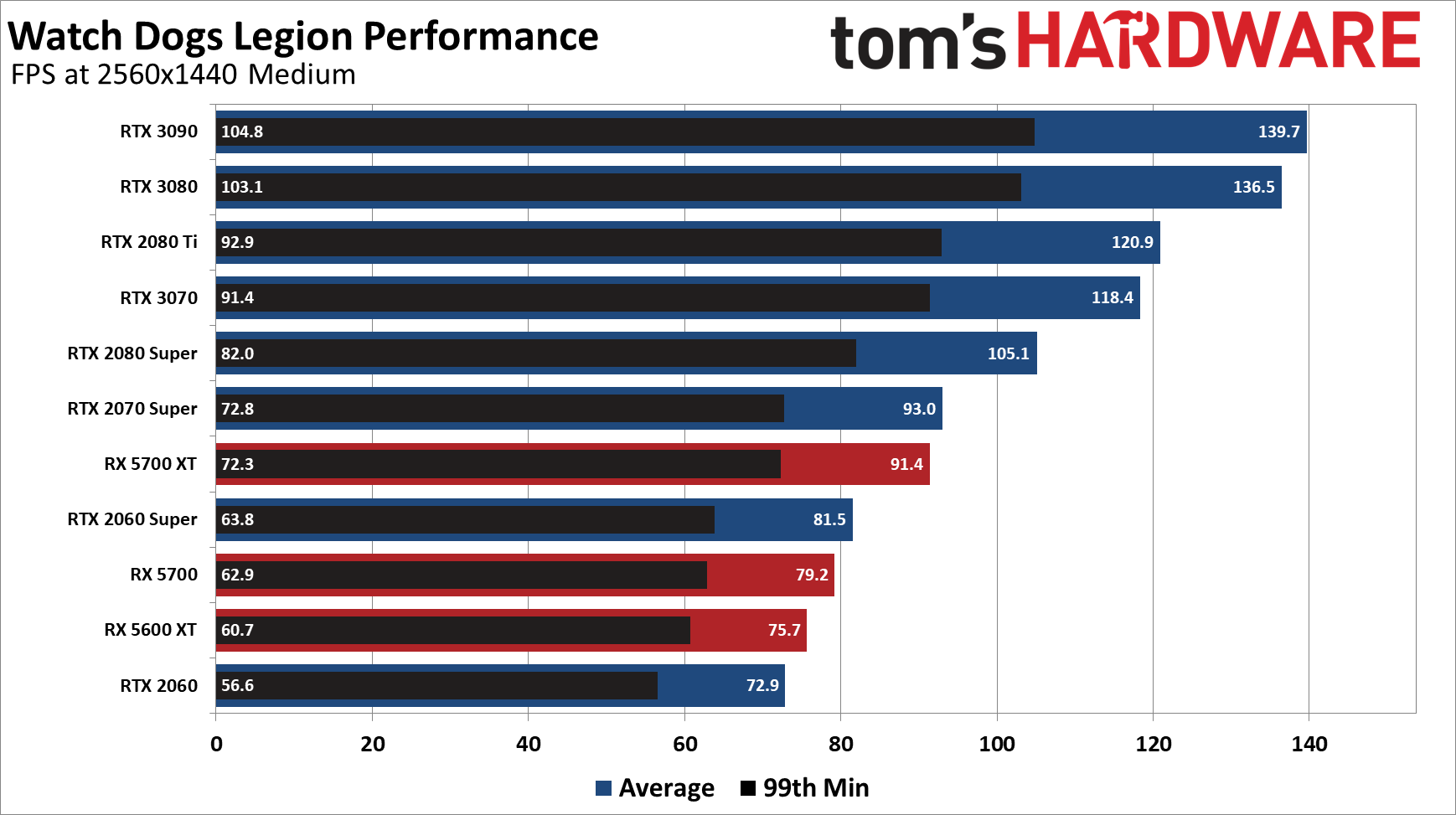

Other than the RTX 3090 and 3080, all of the tested GPUs take a decent hit to performance going from 1080p medium to 1440p medium. The slower RTX cards and AMD's cards drop about 30 percent, while the faster RTX cards only drop 15-20 percent. RTX 3080 meanwhile only loses 6 percent, while the 3090 dips just 3 percent. Basically, those two GPUs remain almost fully CPU limited.
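For anyone who wants to run the same comparison on their own numbers, the percentages are plain relative drops between two average frame rates. The sketch below also computes the drop you would expect if frame rate scaled inversely with pixel count, which is an idealized assumption (real games rarely scale that cleanly). The function names are hypothetical and the sample fps values are placeholders, not our results.

```python
def percent_drop(fps_before, fps_after):
    """Percentage of frame rate lost going from one setting to another."""
    return (fps_before - fps_after) / fps_before * 100


def pixel_ratio(res_a, res_b):
    """How many times more pixels res_b has than res_a."""
    return (res_b[0] * res_b[1]) / (res_a[0] * res_a[1])


def ideal_gpu_bound_drop(res_a, res_b):
    """Drop expected if fps scaled inversely with pixel count (idealized)."""
    return (1 - 1 / pixel_ratio(res_a, res_b)) * 100


if __name__ == "__main__":
    res_1080p, res_1440p = (1920, 1080), (2560, 1440)
    # Placeholder frame rates for illustration only.
    print(f"measured drop: {percent_drop(100, 70):.1f}%")
    print(f"pixel ratio: {pixel_ratio(res_1080p, res_1440p):.2f}x")
    print(f"ideal GPU-bound drop: {ideal_gpu_bound_drop(res_1080p, res_1440p):.2f}%")
```

1440p has about 1.78 times the pixels of 1080p, so a purely pixel-bound card would lose roughly 44 percent under this simple model. A drop far below that, like the 3 to 6 percent on the 3080 and 3090, points to something other than the GPU as the limit.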

The silver lining is that every GPU tested (which, granted, start at over $250) still averages more than 60 fps. Most of the cards can certainly do 1440p at high settings just fine, particularly if you're willing to use DLSS Quality mode.

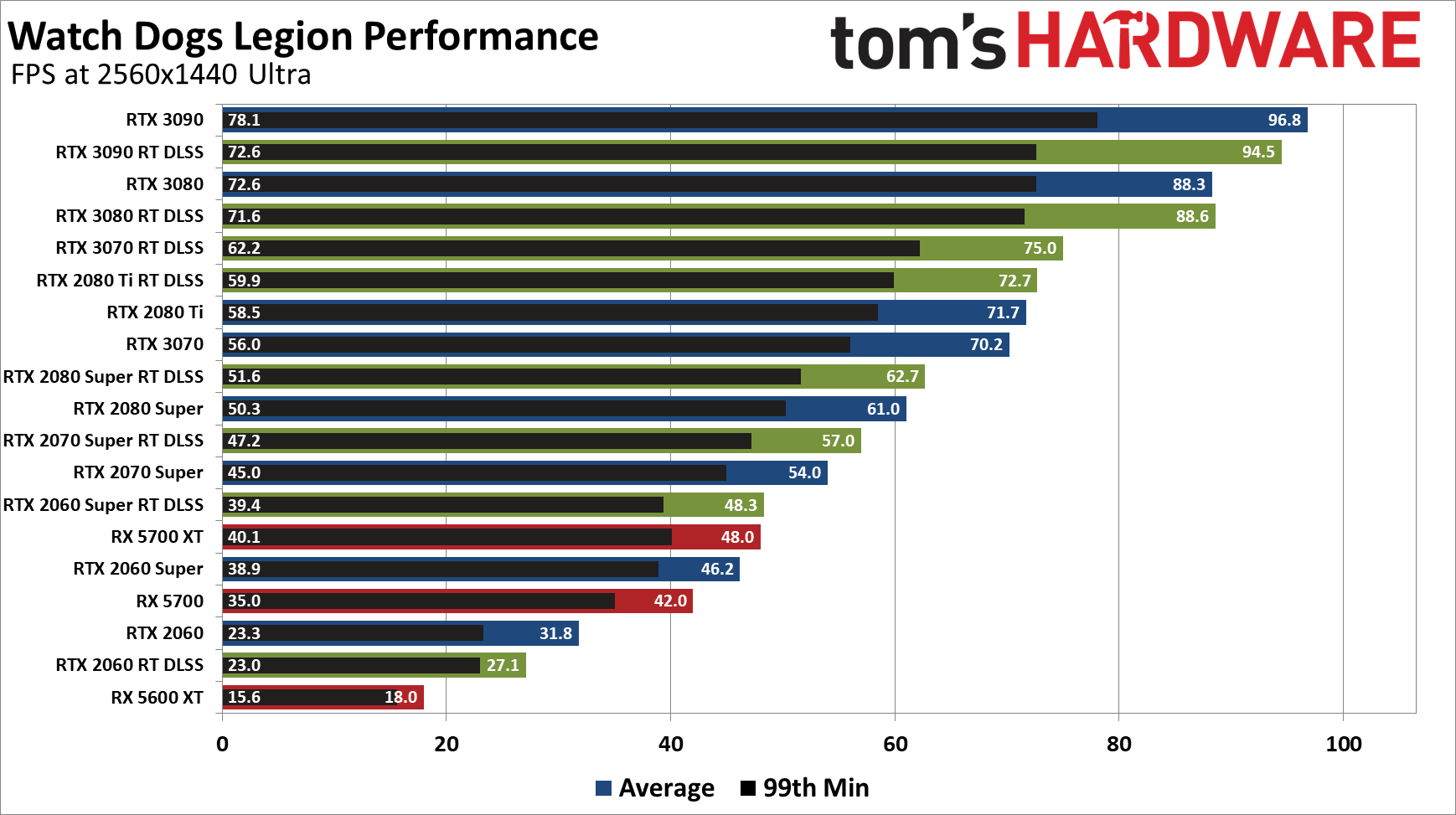

Where 1440p medium wasn't quite fully GPU limited on the fastest GPUs, the same can't be said for 1440p ultra — with or without ray tracing. Only the RTX 2080 Super and above maintain 60 fps averages, and it's right on the threshold. Basically, RTX 3070 or above (and probably Big Navi RX 6800 and above for AMD, once those arrive) is required for a consistent 1440p ultra experience.

With ray tracing and DLSS — now upscaling 720p content to 1440p — the RTX GPUs lose a bit of performance but nothing that generally would make the difference between playable and unplayable. RTX 2060 for example should be set to high quality first, or maybe ultra but with texture and shadow quality at high. That would help avoid the 6GB issues that are dropping performance.

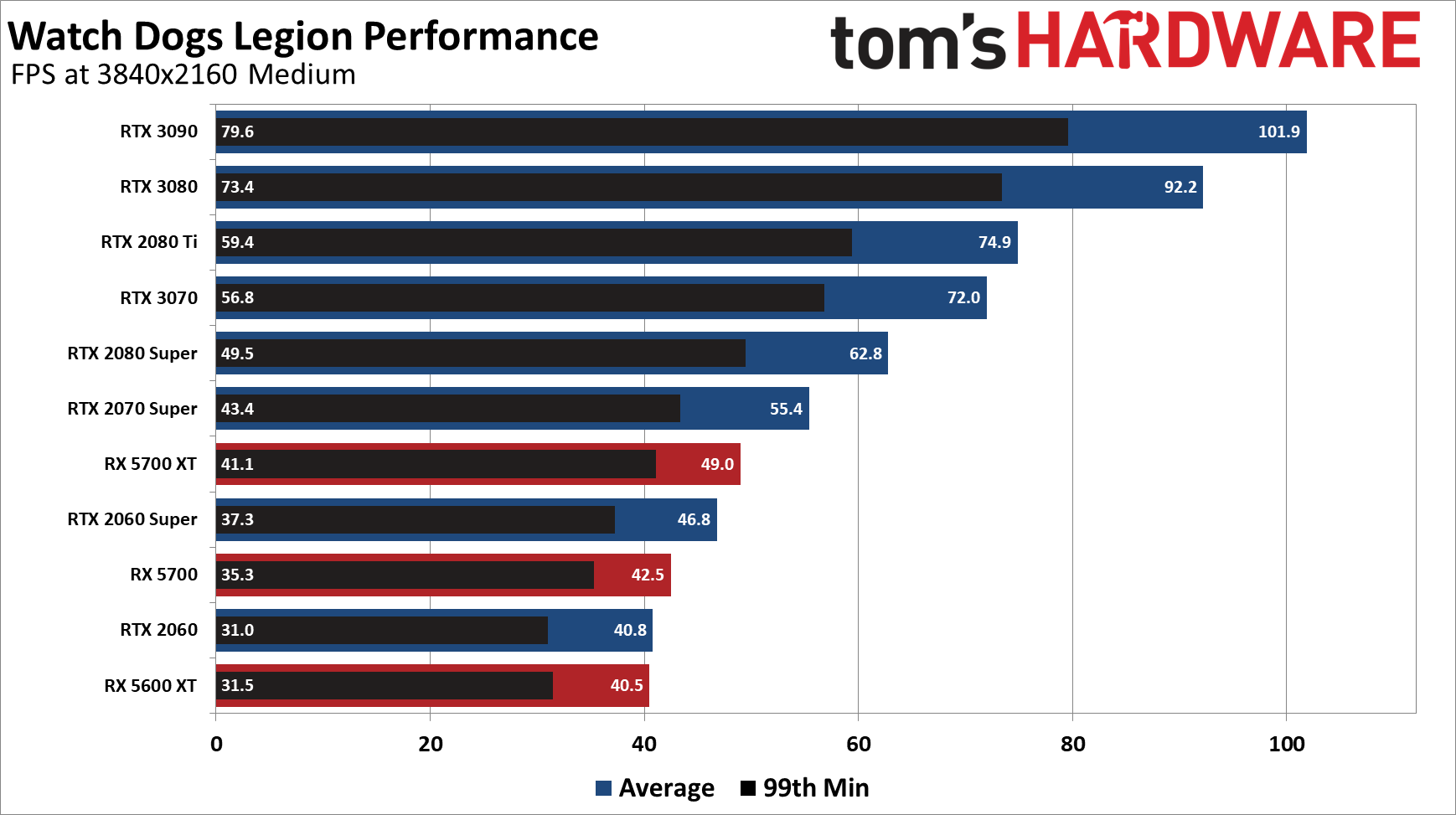

4K medium continues the already established pattern, as expected. FPS drops by 40-45 percent on nearly all of the GPUs, relative to 1440p medium. The 3080 and 3090 remain the exceptions, but they still drop by 32 and 27 percent, respectively. In the current state (which may improve with a patch or new drivers), only the RTX 2080 Super and above clear 60 fps.

Last up, pushing the limits of what most PCs can handle, 4K ultra really tanks performance. Only the RTX 3090 clears 60 fps without ray tracing, while the 3080 and 3090 can break 60 fps with ray tracing and DLSS Performance mode. It's interesting that at 4K, the DLSS upscaling finally overcomes the ray tracing performance hit on nearly all of the RTX cards (2060 being the sole exception).

Something else to point out is the RX 5700 performance. The first time we tested RX 5700 XT at 4K ultra, we saw very weak performance (13 fps). Restarting the game gave us the above result. The RX 5700, on the other hand, simply didn't run well at 4K ultra, even after multiple restarts and reboots. There's likely a driver fix that will help out, but AMD's cards really don't seem to like it when a game uses more memory than the available VRAM.

If you're wondering, the performance for the RTX cards with RT and DLSS here is a bit lower than what the cards would get at 1080p without DLSS. You can still do ray tracing with something like the RTX 2060, of course, but you'd want to run high quality settings with DLSS Performance mode at 1080p or 1440p to get above 60 fps (it can do around 63 fps at 1440p high with RT and DLSS).

Watch Dogs Legion: Initial Thoughts

We know Ubisoft is still working on Watch Dogs Legion, so this is more of a first look than the final say on performance. Open world games tend to be some of the most demanding games when it comes to CPU and GPU requirements, and this is no exception.

If you want to run with all the eye candy enabled, you'll need a top-of-the-line graphics card, a reasonably potent CPU, and plenty of RAM and a fast SSD certainly won't hurt. Even with top-tier hardware, stepping back a notch or two on some settings is recommended, particularly if you want to avoid periodic dips below 60 fps.

The game itself feels like an improvement over the previous two installments, and the "play as anyone" idea actually can work. Not that you'd really want to play as just anyone, but there are some randomly generated people running around London that come with just the right set of skills and accessories to make them the right fit for your own personal version of DedSec, the hacker group of the series.

Also appreciated: There's a built-in benchmark this time, so if anyone else wants to see about testing their own PC to see how it stacks up to our test system, go right ahead. It's not indicative of worst-case performance, but it's repeatable and tends to show similar scaling to what you'd see while playing the game. Feel free to post your results in our forums.
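If you log per-frame times while the benchmark runs, a short script can turn them into an average and a "low" figure for comparison. This is only a sketch under stated assumptions: the frame times are assumed to come from a capture tool of your choice, in milliseconds, and the "1% low" here is one common definition (the average of the slowest 1 percent of frames), which may not match how the game or our charts compute minimums. The function name is hypothetical.

```python
def summarize_frame_times(frame_times_ms):
    """Return (average fps, 1% low fps) from per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000
    average_fps = len(frame_times_ms) / total_seconds
    # Average the slowest 1% of frames (at least one) and convert to fps.
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    low_fps = 1000 / (sum(slowest[:count]) / count)
    return average_fps, low_fps


if __name__ == "__main__":
    # Placeholder data: 99 frames at 10 ms and one 20 ms hitch.
    sample = [10.0] * 99 + [20.0]
    avg, low = summarize_frame_times(sample)
    print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

Running the benchmark several times and comparing the summaries helps separate real differences from run-to-run noise.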

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


oplix: Just seems like a highly bloated engine which was rushed to release to counter program Cyberpunk

JarredWaltonGPU, replying to jepeman ("Seems more like seriously unoptimized."):

I really dislike claims like this.

It's an open-world game with hundreds (perhaps thousands) of active vehicles and people running around the city, following schedules. I'm sure there's some behind-the-scenes stuff to keep things from bogging down too much, but the more realistic a simulation becomes, the more demanding it is on the CPU. Visually, there's also a lot going on with a relatively faithful recreation of London. It's not photo-realistic, but all the textures, shadows, lighting, reflections, etc. all tax your GPU -- and that's before enabling ray tracing.

What 'unoptimized' part of the game needs to be fixed? Should Ubisoft cut down the number of AI entities to reduce the CPU load? Drop the geometry levels to make it run better as well? It could, but this isn't the type of game that needs 240 or even 144 fps. It's very playable at anything above around 50 fps, with 60 being more than sufficient. Basically, if you think it's "unoptimized" because it can't run at max details and 150 fps or whatever, that's not the intended design. 4K60 is possible on a variety of cards and settings, just not on midrange stuff. With the next generation of consoles, this is basically going to become the new baseline for graphics and CPU requirements is my expectation.

Shadowclash10, replying to JarredWaltonGPU:

I totally agree. Although I'd rather 60 over 50fps :p.

JarredWaltonGPU, replying to Shadowclash10:

My point was that 60+ was the target, rather than some extreme framerate like 200 fps. It's not an FPS, basically, so 60 and above are sufficient. And dropping a few settings (to stay under your GPU's VRAM limit) definitely helps the 2060 and 5600 XT. I just didn't show every possible configuration and result.

jepeman, replying to JarredWaltonGPU:

Problem is, the performance often tanks, while your CPU and GPU are nowhere near fully utilized.

I guess you could argue its buggy instead of poorly optimized.

JarredWaltonGPU, replying to jepeman:

I actually haven't noticed much in the way of major drops in performance, but then I'm running on a relatively high-end setup. There are so many factors that can cause issues. Is it loading data off HDD and stuttering, is it running out of RAM, is it driver cruft, or some other background application, or one of dozens of other possibilities? I fully expect a game like this to struggle on a 4-core CPU, particularly 4-core/4-thread i5 from 7600K and earlier. I realize just throwing hardware at the problem isn't sound advice either, but it's amazing how often that fixes any issues. Major games are always buggy, though, and things will likely improve quite a bit in the coming months. That's the problem with the holiday rush: everyone trying to get games released before Christmas / Black Friday, which inevitably leads to crunch, bugs, and other issues.

charloa99: Any idea on why the game is using between 85-90% of my cpu all the time in the game? Here's my config: i9-9900k 3.6GHz, Evga rtx 20800 super xc ultra 8gb, 32gb of RAM at 3000Mhz. Everything is set on high or very high and I'm getting between 70-90 fps. I checked the task manager and it looks like only the game is using the cpu when I'm playing. I am using windows gamebar to check the performance of the cpu, gpu and ram. Also Performance mode is enabled on windows 10. I would really like to play the game but I don't want to risk my cpu. Do you think gamebar readings are incorrect? Also raytracing is turned off and the problem is really not my gpu nor ram but the cpu, its really weird. Its the only game that it happens on. Sorry for my english btw