The Radeon RX 7800 XT is one of the best graphics cards, and it's slightly faster than the Radeon RX 6800 XT, at least in Windows. Thanks to Phoronix, we see the other side of the coin: in Linux gaming at 1080p (1920x1080), the Radeon RX 6800 XT is up to 12% faster than the Radeon RX 7800 XT.

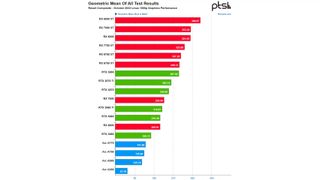

The Radeon RX 6800 XT reigned supreme over all the other AMD, Nvidia, and Intel graphics cards. The last-generation Radeon RX 6800 XT was up to 12% faster than the Radeon RX 7800 XT, whose performance was comparable to that of the Radeon RX 6800. In RDNA 3's defense, the Radeon RX 6000 series has been on the market for a while now, so it's running on more optimized drivers than the Radeon RX 7000 series, which isn't even a year old yet.

If you look at the raw specifications, the Radeon RX 7800 XT packs 12 fewer compute units than the Radeon RX 6800 XT, yet on paper it delivers around 80% higher FP32 performance. The higher clock speeds on the Radeon RX 7800 XT aren't enough to account for that gap. Instead, it comes from the RDNA 3 architecture, which allows AMD to double-pump the compute units and theoretically offers twice the performance. In practice, the final result depends on the workload and, more importantly, on the driver, since it tells the graphics card when such execution is feasible.
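The on-paper gap can be reproduced with a quick back-of-the-envelope calculation. The sketch below is a minimal illustration, not AMD's official methodology. It assumes 64 stream processors per compute unit (4,608 shaders for the Radeon RX 6800 XT and 3,840 for the Radeon RX 7800 XT), boost clocks of roughly 2.25 GHz and 2.43 GHz, two FLOPs per fused multiply-add, and a 2x factor for RDNA 3's double-pumped execution. The function name `peak_fp32_tflops` and its parameters are hypothetical.

```python
def peak_fp32_tflops(shaders: int, boost_ghz: float, dual_issue: bool = False) -> float:
    """Theoretical peak FP32 throughput in TFLOPS (a paper figure, not a benchmark)."""
    flops_per_shader_per_clock = 2  # one fused multiply-add counts as two FLOPs
    if dual_issue:
        # Assumption: RDNA 3 can issue two FP32 operations per shader per clock
        # when the driver finds suitable instruction pairs.
        flops_per_shader_per_clock *= 2
    # shaders * FLOPs per clock * GHz gives GFLOPS; divide by 1000 for TFLOPS.
    return shaders * flops_per_shader_per_clock * boost_ghz / 1000.0


# Shader counts follow from the compute unit counts; boost clocks are assumed.
rx_6800_xt = peak_fp32_tflops(shaders=4608, boost_ghz=2.25)
rx_7800_xt = peak_fp32_tflops(shaders=3840, boost_ghz=2.43, dual_issue=True)
rx_7800_xt_single = peak_fp32_tflops(shaders=3840, boost_ghz=2.43)

uplift = rx_7800_xt / rx_6800_xt - 1
uplift_single = rx_7800_xt_single / rx_6800_xt - 1

print(f"RX 6800 XT: {rx_6800_xt:.1f} TFLOPS")
print(f"RX 7800 XT: {rx_7800_xt:.1f} TFLOPS ({uplift:+.0%} vs. RX 6800 XT)")
print(f"RX 7800 XT without dual-issue: {rx_7800_xt_single:.1f} TFLOPS ({uplift_single:+.0%})")
```

Under these assumptions, the Radeon RX 7800 XT comes out about 80% ahead on paper. Without the dual-issue factor it would land about 10% behind the Radeon RX 6800 XT, which is why the higher clock speeds alone don't explain the gap.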

Overall, the Radeon graphics cards were at the top of the Linux gaming charts, followed by Nvidia's GeForce RTX 30 series (Ampere), with Intel's Arc Alchemist in last place. The GeForce RTX 3080 was the best Nvidia performer, but it still lagged behind the Radeon RX 6700 XT. Interestingly, the Arc A580 wasn't too far behind the Arc A750, with an 8% performance gap, while the Arc A770 exhibited 13% higher performance than the Arc A580.

AMD's dominance wasn't limited to gaming, as the RDNA 2-powered graphics cards also excelled in workstation workloads. Returning to the comparison between the Radeon RX 6800 XT and the Radeon RX 7800 XT, the former offered between 14% and 87% higher OpenGL performance in SPECviewperf 2020 v3.0. The Radeon RX 7800 XT did manage a win in ParaView 5.10.1, outperforming the Radeon RX 6800 XT by a 5% margin.

RDNA 3 hasn't lived up to its potential in the Linux environment, and whether performance will improve with newer drivers remains to be seen. In the meantime, RDNA 2 is just fine for gaming on Linux while we give RDNA 3 time to mature. Perhaps pricing will also have improved by the time RDNA 3 reaches its full potential, but for now, there's not much incentive to upgrade to RDNA 3 if you're a Linux gamer who games at 1080p.

Zhiye Liu is a Freelance News Writer at Tom’s Hardware US. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

thisisaname

If the drivers need so much time to mature that the hardware has been superseded by the next generation, maybe they should look at making the hardware easier to write a driver for?

tommtajlor

Not a surprise, this "7800XT" is really just a 7700XT at best. (And even then I was very generous.) You can check out I'm a MAC on YouTube analyzing this whole RDNA 3 mess...

bit_user

I think AMD did the same as Nvidia, where they designed their lower-tier chips to sit a tier lower than they do, but the current GPU market wouldn't support that positioning. Consider that the 6800 XT's launch price was $650, while the 7800 XT's launch price is $500, and that's after more than 2 years of inflation. My guess is the 7900 XT was meant to be the new 7800 XT, while the 7900 XTX should've been the 7900 XT.

If we look at the specs, it becomes quite apparent that it wasn't meant as a true successor. The 7800 XT has 64 MB of L3 cache, whereas the 6800 XT has 128 MB of L3 cache. In terms of shaders, the 6800 XT has 4608 whereas the 7800 XT has only 3840. So, you basically have to conclude that the 7800 XT wasn't meant to supersede the 6800 XT, but rather to serve as more of a 6750 XT successor. They almost got away with it by boosting the 7800 XT's clocks, but not quite.

I think AMD's saving grace is that both perform pretty much in line with how they're currently priced. Okay, the 6800 XT is a slightly better deal, but the 7800 XT has better RT and AI performance.

tommtajlor

Yes, they did a similar thing with the upnaming, the difference being that RDNA 3 did not hit its target (I would argue it is 15-20% off), while Nvidia actually overshot quite a bit (2-node jump, new uarch, etc.). So now the gap is very big. Hence Nvidia is able to somewhat get away with a product named one tier higher and sold at prices one or sometimes two tiers higher. AMD could not do that.

Imagine this:

- Last time their 6900XT was able to fight with a 3090 (give or take).

- Now, their 7900XTX (-> "7900XT") is only able to fight with a 4080 (-> "4070Ti really")

That is a disaster. Or look at it this way, in reality we got:

- a 1200 USD 3070Ti successor (up from 600 USD)

- a 1000 USD xx70Ti competitor from AMD (up from how much? 579 USD?). So it's not even a 7900XT but, based on past generations, more like a 7800 non-XT (maaaaaaybe an XT if we want to be very generous)

Inflation is a very bad argument. I work in the industry and have a lot of insight (negotiating with partners, detailed cost breakdowns, custom hardware orders, component manufacturing, etc.). The thing is, overall, this generation does NOT cost them more than the previous one. In fact, it is slightly cheaper. Not to mention that technology overall is deflationary, balancing out the overall inflationary effects. It is all about shareholders, EBIT growth, more profit, simple as that.

Just theoretically, imagine a scenario where Nvidia had really released the currently upnamed 4080 16GB as a 4070Ti for 600 USD. That would have been it for AMD; I guess the GPU division might have had to be shut down altogether. Or would they have sold the current 7900XTX as a 7800XT for 500 USD? I highly doubt it.

Their saving grace is:

1) Nvidia being very greedy right now

2) most people being dumb

3) purchases being based on emotion rather than logic in most cases.
