Seeed Studio debuts Raspberry Pi 5 PCIe 3.0 HAT with dual M.2 slots for $45

Offers one lane of PCIe 3.0 bandwidth per slot, with a combined 8 GT/s shared by both slots

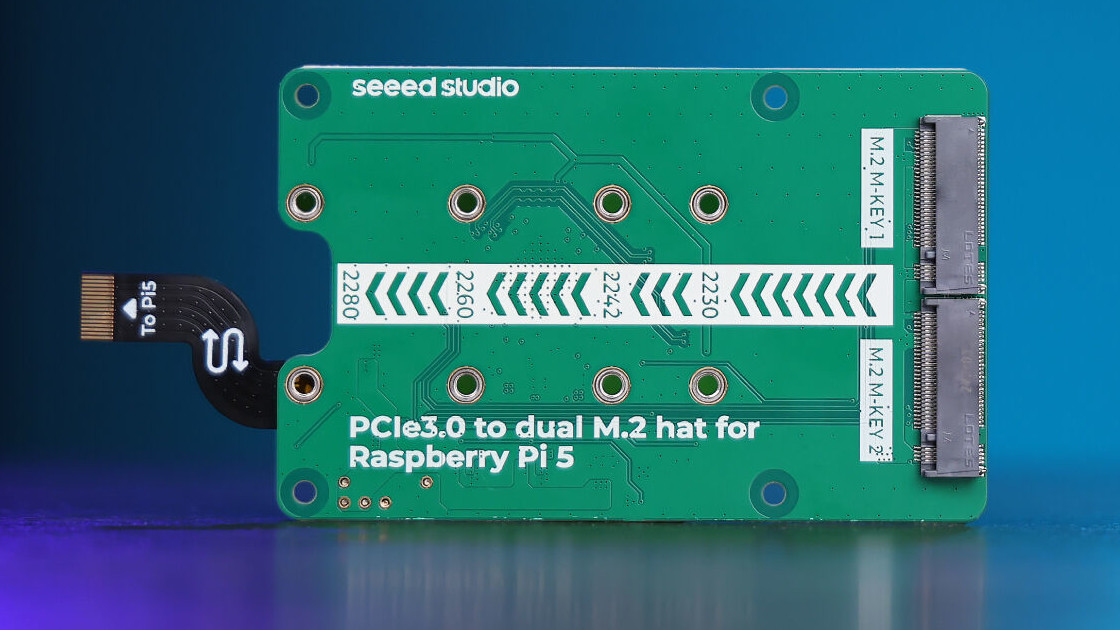

Earlier this week, a notable entry-level Raspberry Pi 5 PCIe HAT was released that supports PCIe 3.0 instead of PCIe 2.0 and provides dual M.2 slots. The Seeed Studio PCIe 3.0 to Dual M.2 HAT [h/t CNX] costs just $45 per the official store page. For that price, you get one PCIe 3.0 lane per M.2 slot, with 8 GT/s of throughput shared across both slots.
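To put the 8 GT/s figure into more familiar units, here is a back-of-the-envelope sketch. It assumes PCIe 3.0's 128b/130b line encoding and ignores protocol overhead (packet headers, flow control), so real drive benchmarks will land somewhat lower. The helper name `pcie3_gbytes_per_s` is ours, not Seeed's.

```python
def pcie3_gbytes_per_s(lanes: int = 1) -> float:
    """Approximate one-direction PCIe 3.0 bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumes 8 GT/s per lane and 128b/130b encoding; packet and
    flow-control overhead are ignored, so real throughput is lower.
    """
    transfers_per_s = 8e9              # 8 GT/s per lane, one bit per transfer
    encoding_efficiency = 128 / 130    # 128b/130b line code
    return lanes * transfers_per_s * encoding_efficiency / 8 / 1e9  # bits -> bytes -> GB


uplink = pcie3_gbytes_per_s(1)         # the Pi 5's single lane feeds the switch
print(f"Shared uplink:        {uplink:.3f} GB/s")
print(f"Per SSD, both active: {uplink / 2:.3f} GB/s")
```

When both SSDs are busy at the same time, the switch has only that one upstream lane to divide between them. The second figure is therefore a rough per-drive ceiling under equal load.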

Besides the obvious use case of M.2 SSDs, Seeed Studio's Pi 5 PCIe 3.0 to Dual M.2 adapter is also intended for other M.2 devices, including AI accelerators built in the same form factor. Seeed Studio also notes that some SSDs may have compatibility issues, and it lists some of its own drives as recommended companions. However, as CNX Software notes, your SSD should be fine as long as it works with the ASMedia ASM2806 PCIe switch.

Another compatibility concern, though likely a minor problem for much of the Raspberry Pi 5's target audience, is that you'll need to 3D print your own case for a Pi 5 setup with this adapter. It wasn't made to fit the default Raspberry Pi 5 case, unlike the official single-drive M.2 HAT.

So, does all that make this one a contender for the best Raspberry Pi 5 HATs? It's undoubtedly competitive for the price if you intend to increase throughput over the Raspberry Pi 5's default SD card, whose speeds are anemic in comparison. However, as CNX notes, if your tastes in storage veer more high-end, or if you have higher hopes for a proper mini NAS, the yet faster four-slot CM3588 computing module may serve you better.

In any case, this is a pretty nifty Raspberry Pi 5 HAT, and the first one to use the ASM2806 switch. However, it remains to be seen how it will fare in the long run in the competitive market surrounding the Raspberry Pi 5.


Christopher Harper has been a successful freelance tech writer specializing in PC hardware and gaming since 2015, and ghostwrote for various B2B clients in high school before that. Outside of work, Christopher is best known to friends and rivals as an active competitive player in various eSports (particularly fighting games and arena shooters) and a purveyor of music ranging from Jimi Hendrix to Killer Mike to the Sonic Adventure 2 soundtrack.

-

bit_user

A PCIe switch is definitely the way to go, but the Pi's SoC really needs to expose more lanes.

> The article said: if you have higher hopes for a proper mini NAS, the yet faster four-slot CM3588 computing module may serve you better.

Very nice! The RK3588 should have PCIe 3.0 x4. I wonder if that board also uses a PCIe switch, but I'd bet it just devotes one lane per SSD. Considering you're limited to just 2.5 Gbps Ethernet, that's more than enough.
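The point about Ethernet can be checked with simple arithmetic. The sketch below compares the 2.5 Gbps line rate with one PCIe 3.0 lane (8 GT/s, 128b/130b encoding). It ignores both Ethernet/TCP framing and PCIe protocol overhead, so treat the ratio as approximate.

```python
ETH_GBIT_PER_S = 2.5                    # 2.5GBASE-T line rate
eth_gbytes = ETH_GBIT_PER_S / 8         # bits -> bytes: ~0.31 GB/s
lane_gbytes = 8 * (128 / 130) / 8       # one PCIe 3.0 lane: 8 GT/s, 128b/130b, in GB/s

print(f"2.5 GbE:     {eth_gbytes:.3f} GB/s")
print(f"PCIe 3.0 x1: {lane_gbytes:.3f} GB/s")
print(f"One lane per SSD is about {lane_gbytes / eth_gbytes:.1f}x the network rate")
```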

P.S. @TheyCallMeContra, it'd be nice if you included a pic of the underside of Seeed Studio's HAT (visible on CNX's page), which shows the PCIe switch chip.

abufrejoval

> bit_user said: A PCIe switch is definitely the way to go, but the Pi's SoC really needs to expose more lanes.
>
> Very nice! The RK3588 should have PCIe 3.0 x4. I wonder if that board also uses a PCIe switch, but I'd bet it just devotes one lane per SSD. Considering you're limited to just 2.5 Gbps Ethernet, that's more than enough.
>
> P.S. @TheyCallMeContra, it'd be nice if you included a pic of the underside of Seeed Studio's hat (visible on CNX's page), which shows the PCIe switch chip.

The RK3588 itself has tons of I/O, way more, and more diverse, than the Broadcom SoC inside the Raspberry Pi 5 (RP5).

It even has two native Ethernet controllers, but those only go to 1 Gbit. My Orange Pi 5+ (OP5+), which uses the RK3588, seems to drive its dual 2.5 Gbit RTL8125BG NICs off two of the combo ports, which can be configured as either USB3/SATA or PCIe 2.1. The third is exposed as an M.2 slot, e.g. for Wi-Fi, and all three are configured as PCIe 2.1 here.

The single PCIe 3.0 x4 port remains fully available, with bifurcation support, so no extra switch chip is required. I've tested it with an Aquantia AQC107 10 Gbit NIC, and it works, but I'm happier using it for NVMe storage at nearly 4 GByte/s. Even if you split off two bifurcated lanes, say for a six-port ASMedia SATA adapter (e.g. for 24 TB HDDs), that still leaves you with twice the NVMe bandwidth of an RP5 running its lane at PCIe 3.0.
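The bifurcation claim works out as follows, assuming roughly 0.985 GB/s of usable bandwidth per PCIe 3.0 lane after 128b/130b encoding and ignoring protocol overhead. The lane split (x2 for NVMe, x2 for SATA) follows the scenario described in the comment. The helper name is illustrative.

```python
def pcie3_gbytes_per_s(lanes: int) -> float:
    """Approximate PCIe 3.0 bandwidth in GB/s: 8 GT/s per lane, 128b/130b encoding."""
    return lanes * 8 * (128 / 130) / 8


rk3588_full = pcie3_gbytes_per_s(4)   # whole x4 port on one NVMe drive
rk3588_nvme = pcie3_gbytes_per_s(2)   # x2 left after bifurcating x2 off for SATA
rp5_gen3 = pcie3_gbytes_per_s(1)      # Pi 5's single lane, forced to Gen 3

print(f"RK3588 x4: {rk3588_full:.2f} GB/s ({rk3588_full / rp5_gen3:.0f}x the Pi 5)")
print(f"RK3588 x2: {rk3588_nvme:.2f} GB/s ({rk3588_nvme / rp5_gen3:.0f}x the Pi 5)")
```

The x4 figure (about 3.94 GB/s) lines up with the "nearly 4 GByte/s" observed in practice. It is also four times the Pi 5's lane, which matches the "1/4" remark that follows.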

And that still leaves three 5 Gbit/s USB 3.1 ports (one with DP Alt Mode on the OP5+; two per SoC), two USB 2.0 ports, and several serial interfaces for cameras, audio or even a console. Have a look at the Rockchip data sheet: it's really quite impressive in terms of I/O. CPU power is just about equal, though, since it won't overclock, and its A55 cores are just enough to bridge the gap to the RP5's 3 GHz overclock on perfectly parallel multi-core workloads.

AFAIK the RP5 only exposes one PCIe lane, which runs at PCIe 2.0 by default but, I believe, can be configured for PCIe 3.0. That's how it's wired with its custom "Southbridge", and no HAT can change that. So dual NVMe support requires a switch, which limits bandwidth to at best a quarter of what the RK3588 can do.
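For reference, Raspberry Pi's documentation describes how to force the Pi 5's external PCIe lane to Gen 3: add `dtparam=pciex1_gen=3` to `/boot/firmware/config.txt`. Gen 3 is not officially certified, so stability may vary by device. The helper below, `with_pcie_gen3`, is a hypothetical sketch that edits the file's text idempotently. It only handles the standalone `dtparam=pciex1_gen=N` form, and writing the result back requires root and a reboot.

```python
GEN_PREFIX = "dtparam=pciex1_gen="


def with_pcie_gen3(config_text: str) -> str:
    """Return config.txt text that requests PCIe Gen 3 exactly once (hypothetical helper)."""
    # Drop any existing standalone pciex1_gen line so settings don't conflict.
    lines = [ln for ln in config_text.splitlines()
             if not ln.strip().startswith(GEN_PREFIX)]
    # Make sure the new line isn't trapped inside a model filter such as [cm4].
    non_blank = [ln.strip() for ln in lines if ln.strip()]
    if not non_blank or non_blank[-1] != "[all]":
        lines.append("[all]")
    lines.append(GEN_PREFIX + "3")
    return "\n".join(lines) + "\n"


example = "arm_64bit=1\ndtparam=pciex1_gen=2\n"
print(with_pcie_gen3(example), end="")
# On a real Pi: review with_pcie_gen3(Path("/boot/firmware/config.txt").read_text())
# before writing it back as root, then reboot.
```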

I don't quite get why people bother putting NVMe on the RP5. I use Kingston DataTraveler Max USB 3.2 sticks for my 8 GB RP5. On a matching port they can do almost twice SATA speeds, and on the RP5 they match SATA SSD bandwidth and latency. I then use a 2.5 Gbit Realtek Ethernet NIC on the other USB3 port for the Proxmox cluster connecting my RP5 and my OP5+, and leave all internal expansion alone. Most importantly, that allows me to use a cheap, fully passive metal case for the RP5, even at a 3 GHz overclock. For the OP5+, I managed to make do with a tiny Noctua fan in a metal case, running slower on 3.3 V, without overheating at peak clocks and I/O loads. It's not silent, but it's unnoticeable, which is good enough for me.

The almost 10:1 storage bandwidth difference versus the nearly 4 GByte/s on the OP5+ is noticeable, but much less so than going from SD cards to high-end USB SSDs. The OP5+'s 32 GB of RAM (vs 8 GB on the RP5) and much more potent GPU deliver quite a lot more interactivity, especially at 4K resolution. In my case, though, that's mostly academic: my KVM cascade makes interactive use possible, but I prefer my x86 workstations for that, and these ARM minis are used as µ-servers in the home-lab.

Note that the RK3588S is a cut-down variant with far fewer I/O options, in exchange for lower cost and thermals.

I've just had a peek at Orangepi.org again, and it seems they've been adding plenty of new form factors and variants, including a compute module and baseboard variants.

bit_user

> abufrejoval said: The RK3588 itself has tons of I/O, way more and more diverse than the Broadcom SoC inside the Raspberry Pi 5 (RP5).

Thanks for the detailed rundown!

> abufrejoval said: for the OP5+ I managed to do with a tiny Noctua fan in a metal case running slower on 3.3V without overheating at peak clocks and I/O loads. It's not silent but unnoticeable, which is good enough for me.

Which case?

Also, what Linux distro do you run on it?

> abufrejoval said: these ARM minis are used as µ-servers in the home-lab.

Once Hardkernel added support for in-band ECC on their ODROID-H4, I went that way for micro-servers and haven't looked back. The H4 models can idle at very low power and have built-in 2.5G Ethernet, which is enough for what I need. Their M.2 slot is full PCIe 3.0 x4, which is actually somewhat uncommon for Alder Lake-N boards. While you can run them fanless, I don't. At idle, the 92mm PWM fan I used is inaudible.

Have you seen the Radxa Orion O6, based on the Cix CD8180 SoC? I might just skip the Orange Pi Plus and go with it, since it has twice as many A7xx cores and they're at least 4 generations newer!

https://radxa.com/products/orion/o6/

They're not yet shipping, so I'm going to wait and see what kind of real-world experiences people are having with them. Radxa has an English-language user forum, which is probably the best place to find out how they work in practice.

I don't know much about Radxa, but they've been around for a while and some of their boards seem reasonably popular. The thin mini-ITX form factor is great for me, because I've got this Silverstone PT13 case that I equipped with a 92 mm fan that should be perfect for it (not the case I'm using for the ODROID-H4):

https://www.silverstonetek.com/en/product/info/computer-chassis/PT13/

abufrejoval

> bit_user said: Thanks for the detailed rundown!

My pleasure!

> bit_user said: Which case?

Something off AliExpress or Amazon: just a basic stamped, bent and painted sheet-metal case (most seem to be clones) that already came with a fan I found too noisy. The only significant change was swapping in a Noctua and powering it from the GPIO's 3.3 V instead of the fan header's 5 V to keep the noise down. Well, actually I also added a heat sink with tall fins to the SoC (I had to file off some aluminum to make it fit) and thermal pads between the case and the NVMe drive on the bottom of the board. Without the fan, it would heat up and throttle after a few minutes of power viruses; with the fan on 3.3 V, it stays below 60°C and makes no noise. It's perfect for 24x7 operation. The main disadvantage is dust being sucked into the case, which can't happen with the RP5.

For the RP5, there are plenty of bespoke designs using massive aluminum for passive cooling. I ordered the two variants that looked best, for around €5-8, and then used the one that showed better thermal performance. Again, with storage on a 1 TB Kingston DataTraveler Max USB3 stick and networking on a Realtek 2.5 Gbit USB3 adapter, nothing gets hot enough to cause trouble or throttle, even on a 3 GHz overclock with a load of power viruses.

> bit_user said: Also, what Linux distro do you run on it?

The latest Raspbian on the RP5, and the standard Debian 12 from Orange Pi on the OP5+. Proxmox installs on top of both using an ARM fork by a Chinese developer, jiangcuo on GitHub.

For the Orange Pi, I had also tested Armbian, which originally seemed almost identical, except that the names of tools and files were all changed a bit. I initially stuck with the Orange Pi variant, because its documentation was better.

Since then, it seems Armbian has done better on GPU integration, whereas Orange Pi's Debian is missing Mali GPU support, or at least the OpenGL parts. I'm still looking for time to test that before potentially switching. Having a team from Slovenia in charge of the OS these days seems slightly safer, even if the SoC is still very much Chinese.

I can't use Ubuntu, because Proxmox won't just run on it, and Proxmox matters more to me than 3D acceleration.

I also briefly tested all the other Orange Pi distros, including Android and hybrids, except OpenWrt, but they were little more than alphas and haven't seen any updates, either.

It seems everybody has been relying on a single open source developer, Joshua Riek, and that guy is tired of getting next to nothing in return.

> bit_user said: Once Hardkernel added support for in-band ECC on their ODROID-H4, I went that way for micro-servers and haven't looked back. The H4 models can idle at very low power and have built-in 2.5G Ethernet, which is enough for what I need. Their M.2 slot is full PCIe 3.0 x4, which is actually somewhat uncommon for Alder Lake-N boards. While you can run them fanless, I don't. At idle, the 92mm PWM fan I used is inaudible.

ECC would have tempted me, too; I'd still pay the extra buck if that were all it took. But unfortunately, even with AMD it's fused off on mobile chips, and Pro variants aren't sold on SBCs.

On the other hand, I've now been operating clusters of Mini-ITX Atoms (triple-replica GlusterFS) and i7 NUCs (triple-replica Ceph) 24x7 for years without any noticeable stability issues, so my ECC worries have lessened a bit. Of course, I apply regular security patches with reboots, so their uptime isn't counted in years. I still wouldn't want to run ZFS without ECC on my primary file server, though.

Instead, I'm still mostly using hardware RAID on Windows Server for HDD data at rest; all data on SSDs has either an HDD or a cloud backing store.

> bit_user said: Have you seen the Radxa Orion O6, based on the Cix CD8180 SoC? I might just skip the Orange Pi Plus and go with it, since it has twice as many A7xx cores and they're at least 4 generations newer!...

Thanks for the link, I'll have a look. But for now, my interest in ARM has declined significantly: I've mostly learned what could be learned from these boards, and my employer has closed ARM as a research topic.

For personal use, SBCs based on them aren't that attractive: x86 is much easier to use and covers a far wider range of performance and functionality. And I've got plenty of those, generally with far fewer memory or other expansion constraints.

Some of the Armv9 control-flow integrity features simply don't exist in these current SBCs and their SoCs, and CHERI-like extensions currently seem to be RISC-V only. So if ARM SoCs advance functionally well beyond what x86 can offer, that might change again.

bit_user

> abufrejoval said: Something off Aliexpress or Amazon, just a basic stamped, bent and painted sheet metal case (most seem to be clones) that already came with a fan I found too noisy. The only significant change was to swap to a Noctua and to use 3.3V from the GPIO instead of 5V from the fan header to keep the noise down.

The board doesn't even have a PWM fan header?

> abufrejoval said: Well, actually I also added a heat sink with tall fins to the SoC (had to file off some aluminum to make it fit)

Is your heatsink pure aluminum? If so, you might find that you get better cooling performance by inserting a copper shim between it and the SoC.

> abufrejoval said: the main disadvantage would be dust sucked into the case where that can't happen with the RP5.

I've had the idea to get some washable air filter material and glue strips of Velcro along the edges, in order to make a removable, washable dust filter I can attach over the intake fan of my mini-PC's case. Haven't done it, yet.

> abufrejoval said: even on a 3GHz overclock with a load of power viruses.

What's your favorite power virus? On my N97, the best one seems to be `stress-ng --cpu=N --cpu-method=fft`, although I haven't done a similar search for power viruses on any ARM cores.
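A small Python wrapper makes it easy to reproduce that invocation with an explicit method, worker count and duration. The function names are hypothetical. The flags (`--cpu`, `--cpu-method`, `--timeout`, `--metrics-brief`) are standard stress-ng options. Defaulting to `os.cpu_count()` workers is our assumption for the "N" in the command.

```python
import os
import shlex
import subprocess
from typing import List, Optional


def stress_ng_cpu_cmd(method: str = "fft", workers: Optional[int] = None,
                      seconds: int = 60) -> List[str]:
    """Build a stress-ng command that runs one CPU method on N workers."""
    n = workers if workers is not None else (os.cpu_count() or 1)
    return ["stress-ng", f"--cpu={n}", f"--cpu-method={method}",
            f"--timeout={seconds}s", "--metrics-brief"]


def run_stress(cmd: List[str]) -> int:
    """Echo the command in shell syntax, run it, and return its exit status."""
    print("running:", shlex.join(cmd))
    return subprocess.run(cmd, check=False).returncode


# Example: two minutes of FFT on every logical CPU.
# run_stress(stress_ng_cpu_cmd("fft", seconds=120))
```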

Also, on my N97, a mysteriously intensive way to stress the iGPU seems to be:

`glmark2 -s 1536x1152 -b buffer:columns=200:interleave=false:update-dispersion=0.9:update-fraction=0.5:update-method=subdata --run-forever`

In terms of power consumption, it's much worse than any of the other test cases in `glmark2 --benchmark`.

When I get both of them going at the same time, my N97 mini-PC exceeds 60 W at the wall, which is sort of crazy for a SoC that's supposedly just 12 W. It would thermally throttle well before that, until I put quite some effort into improving its cooling. FWIW, this is a different board, not the ODROID-H4 (which actually uses an N305).
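Running both loads together can be scripted with `subprocess.Popen`. This sketch rebuilds the exact glmark2 `-b` string from bit_user's command line out of a dict. It starts that alongside an `fft` stress-ng run, then stops both after a fixed time so a wall meter can be read in between. The helper names and the 60-second default are assumptions, not anything from the thread.

```python
import subprocess
import time
from typing import Dict, List


def glmark2_scene(scene: str, options: Dict[str, str]) -> str:
    """Join a glmark2 scene and its options into the 'scene:key=value:...' form used by -b."""
    return ":".join([scene] + [f"{key}={value}" for key, value in options.items()])


# Options copied from bit_user's glmark2 command line.
BUFFER_OPTS = {
    "columns": "200",
    "interleave": "false",
    "update-dispersion": "0.9",
    "update-fraction": "0.5",
    "update-method": "subdata",
}


def combined_load_cmds(cpus: int, size: str = "1536x1152") -> List[List[str]]:
    """Return [CPU load, iGPU load] command lines."""
    cpu = ["stress-ng", f"--cpu={cpus}", "--cpu-method=fft"]
    gpu = ["glmark2", "-s", size, "-b", glmark2_scene("buffer", BUFFER_OPTS),
           "--run-forever"]
    return [cpu, gpu]


def run_together(cmds: List[List[str]], seconds: float = 60) -> None:
    """Start every command, wait, then stop them all (even if a launch fails)."""
    procs: List[subprocess.Popen] = []
    try:
        for cmd in cmds:
            procs.append(subprocess.Popen(cmd))
        time.sleep(seconds)          # read the wall meter during this window
    finally:
        for proc in procs:
            proc.terminate()         # sends SIGTERM on Linux
        for proc in procs:
            proc.wait()


# Example: run_together(combined_load_cmds(cpus=4), seconds=120)
```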

> abufrejoval said: For the OrangePI I had also tested Armbian, which seemed to be almost identical originally, except that the names for tools and files were all changed a bit: I stuck with the OrangePI variant initially, because their documentation was better.
>
> Since then it seems that Armbian has done better on GPU integration, where OrangePi's Debian is missing Mali GPU support, or at least the OpenGL parts: still looking for some time to test that before potentially making the change.

Yeah, I thought one of the bigger differentiators of Armbian was how it's meant to ship with proprietary drivers for the GPU and stuff.

AFAIK, the in-tree support for ARM GPUs (which is all that Debian would have) is still lagging badly behind Intel and Qualcomm. At least, it should be better than Imagination.

🥲

> abufrejoval said: Having a team from Slovenia in charge of the OS these days seems slightly safer, even if the SoC is still very much Chinese.

Yeah, I don't really mind using a Chinese SoC, as long as I can use a non-Chinese distro. For SoCs, there aren't a lot of other options. MediaTek has been dabbling in this space, but I only see the Genio 1200 in higher-end boards. NXP has some ARM-based SoCs, but they're not very competitive with the better Chinese options and seem more oriented towards industrial use cases. Amlogic at least has US headquarters, but they haven't launched anything very competitive since the S922X. Since then, they've seemed to focus more on low-end stuff.

> abufrejoval said: Instead I am still mostly using hardware RAIDs on Windows server for HDD data at rest, all data on SSD either has a HDD or cloud backing store.

I use Linux software RAID. Yeah, it has that pesky write hole, but that's what backups are for. Performance-wise, there's no reason not to use software RAID if your devices are mechanical disks or SATA SSDs. Even my old AMD Phenom II could basically max out the media rate of the disks.
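For anyone going the Linux software RAID route, array health is visible in `/proc/mdstat`. There, each redundant array's member map shows `U` for a working device and `_` for a missing one (e.g. `[2/1] [U_]`). The parser below is a minimal, hypothetical sketch for a cron job or status script. `mdadm --monitor` is the standard tool for alerting and is usually the better choice.

```python
import re
from typing import Dict, Optional

ARRAY_RE = re.compile(r"^(md\w+)\s*:")                   # e.g. "md0 : active raid1 ..."
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")  # e.g. "[2/1] [U_]"


def degraded_arrays(mdstat: str) -> Dict[str, str]:
    """Return {array: member map} for every md array with a missing member."""
    degraded: Dict[str, str] = {}
    current: Optional[str] = None
    for line in mdstat.splitlines():
        header = ARRAY_RE.match(line)
        if header:
            current = header.group(1)
            continue
        status = STATUS_RE.search(line)
        if current and status and "_" in status.group(3):
            degraded[current] = status.group(3)
    return degraded


# e.g. print(degraded_arrays(open("/proc/mdstat").read()))
```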

> abufrejoval said: Thanks for the link, I'll have a look. But for now, my interest in ARM has declined significantly, I've mostly learned what could be learned from them and for research ARM was closed as a topic with my employer.

I'm intrigued by the prospect of having an ARM mini-PC with SVE that I can tinker with. I suppose I could also use my phone for that, but it's easier if I just have a full Linux install right on the device.

No chance of it replacing my ODROID-H4 as my micro server, though. Not without ECC support, of some kind. I also like that the ODROID-H4 has upgradable/replaceable memory (although speed is limited to DDR5-4800).

> abufrejoval said: Some of the ARM control flow integrity feature stuff of ARM9 simply don't exist in these current SBCs and their SoCs and CHERI like extensions currently seem to be RISC-V, only.

This post says where you can apply to get an ARM + CHERI (AKA Morello) development board:

https://www.phoronix.com/forums/forum/hardware/processors-memory/1516285-linux-patches-updated-for-experimental-arm-morello-that-combines-arm-cheri-isa?p=1516345#post1516345

abufrejoval

> bit_user said: The board doesn't even have a PWM fan header?

It does, but the logic to support it isn't baked into the kernel. Besides, varying fan noise is noticeable where a constant airflow isn't. The dynamic range of these boards' energy consumption is very low anyway: they are really optimized for constantly low power, not for the lowest power in every usage scenario. Most phones couldn't survive for long on what these systems consume, while offering much better peak performance.

> bit_user said: Is your heatsink pure aluminum? If so, you might find that you get better cooling performance by inserting a copper shim between it and the SoC.

It's good enough, currently.

And I've gone a bit off copper, after evidently finding traces of it in a shim that Erying puts on top of an i7-12000H. After I used liquid metal to get 90 W of sustained compute power, that actually resulted in pitting and, basically, a new insulating layer a few months later. Some improvements can set you back significantly; I switched to carbon sheets instead to protect the bare CPU die...

> bit_user said: What's your favorite power virus? On my N97, the best one seems to be stress-ng --cpu=N --cpu-method=fft although I haven't done a similar search for power viruses on any ARM cores.

`stress` and `stress-ng` are both available just the same, as is `s-tui` for monitoring whatever sensors there are.

But my favorite is perhaps the simplest to use on nearly all platforms: using Firefox to run a WASM benchmark with a selectable number of threads. Of course, other browsers would work, too, but I prefer Firefox, and then it's not about comparing browser engines.

> bit_user said: Also, on my N97, a mysteriously intensive way to stress the iGPU seems to be: glmark2 -s 1536x1152 -b buffer:columns=200:interleave=false:update-dispersion=0.9:update-fraction=0.5:update-method=subdata --run-forever
>
> In terms of power consumption, it's much worse than any of the other test cases in glmark2 --benchmark.

iGPU power consumption tends to be pretty boring: it has a rather low fixed upper limit, and when you mix GPU and CPU loads, the GPU typically gets priority. So if you can saturate the SoC's limits with the CPU alone, adding an iGPU load won't raise the overall consumption; it just gives the GPU its maximum slice.

On x86, GPU/CPU TDP sharing is easiest to observe with FurMark and Prime95 on Windows, so I don't bother much with GPU tests on Linux. There, I mostly use glmark2 for some basic functional testing.

With ARM, getting sensor data often isn't as easy: much of the information you can get with perf on x86 simply lacks hardware instrumentation.

My main interest is stability and being able to live with them in the room: I'm not trying to break speed records.

So I apply thermal loads to establish the noise and energy baseline, and then make sure that the I/O devices are being loaded as well.

What I need to avoid is a machine going down because a CPU peak coincides with a drive waking up from sleep and the network being pumped.

> bit_user said: When I get both of them going at the same time, my N97 mini-PC exceeds 60 W at the wall, which is sort of crazy for a SoC that's supposedly just 12 W. It would thermally throttle way before that, until I put quite some effort into improving its cooling. FWIW, this is a different board - not the ODROID-H4 (which actually uses a N305).

That's Alder Lake for you. With all the Goldmonts, TDP limits were still pretty much respected. Jasper Lake already marked a turning point, where peak consumption was often considerably higher. Intel was so bent on winning performance benchmarks that it became far more willing to spend watts. But so far, BIOS limits seem to be respected rather well, where they can be set. It's mostly the defaults that have gone somewhat insane.

Of course, your power supply may not be nearly as efficient as you thought, and mainboard components and USB devices can draw quite significant amounts of power, too; even RAM can draw quite a bit. The CPU's, or even the whole SoC's, share of the power budget tends to be relatively low on these smaller SBCs.

> bit_user said: Yeah, I thought one of the bigger differentiators of Armbian was how it's meant to ship with proprietary drivers for the GPU and stuff.
>
> AFAIK, the in-tree support for ARM GPUs (which is all that Debian would have) is still lagging badly behind Intel and Qualcomm. At least, it should be better than Imagination.
>
> 🥲

What's unclear to me is where the bottleneck is. Unfortunately, ARM doesn't provide a complete set of Mali drivers, evidently only portions, and neither fully open source nor fully documented. So a lot of the work would need to be done by Rockchip, and that's already a bottleneck. Then come the likes of Armbian or Orange Pi, and they can't really improve things much. Nor do working 3D drivers (or even NPU drivers) sell many more of these boards: it's a low-revenue market, and that limits the effort anyone can afford, even at Chinese wages.

> bit_user said: Yeah, I don't really mind using a Chinese SoC, as long as I can use a non-Chinese distro. For SoCs, there aren't a lot of other options. MediaTek has been dabbling in this space, but I only see the Genio 1200 in higher-end boards. NXP has some ARM-based SoCs, but they're not very competitive with the better Chinese options and seem more oriented towards industrial use cases. Amlogic at least has US headquarters, but they haven't launched anything very competitive since the S922X. Since then, they've seemed to focus more on low-end stuff.

I'd say they evaluate their TAM rather well, and providing consumers with additional choices doesn't enlarge it.

> bit_user said: I use Linux software RAID. Yeah, it has that pesky write hole, but that's what backups are for. Performance-wise, there's no reason not to use SW RAID, if your devices are mechanical disks or SATA SSDs. Even my old AMD Phenom II could basically max out the media rate of the disks.

No, the main advantages of hardware RAID are management, OS neutrality and the fact that I already owned the hardware.

But that hardware is now more than 10 years old and also eats power, and the spares aren't as drop-in compatible as they should be; they need their own maintenance and testing to ensure their readiness.

So I am currently testing all types of software RAID, including Storage Spaces and ReFS on Windows. There, 8 TB of Storage Spaces and ReFS got 'disconnected' on a Windows 10 workstation, because I had the audacity to boot Windows Server 2025 To Go on it.

No, the Storage Spaces pool was not upgraded, but unless you prohibit it via a registry entry, ReFS file systems will be transparently upgraded by any newer OS... Microsoft just cannot imagine that Windows 11 or Server 2025 might only be temporary... It was easy enough to recover: I just booted Server 2025 again, copied everything to another drive, and then went back to NTFS, which hasn't seen any such upgrades... I kind of liked the notion of ReFS consistency checking...

I really want to run a mix of Proxmox and Univention at the base, with some hot storage on Ceph, some hot storage via NVMe pass-through, and bigger data at rest on ZFS with replication, shared via Samba and NFSv4.

But that's not a turnkey mix just yet.

> bit_user said: I'm intrigued by the prospect of having an ARM mini-PC with SVE that I can tinker with. I suppose I could also use my phone for that, but it's easier if I just have a full Linux install right on the device.
>
> No chance of it replacing my ODROID-H4 as my micro server, though. Not without ECC support, of some kind. I also like that the ODROID-H4 has upgradable/replaceable memory (although speed is limited to DDR5-4800).
>
> This post says where you can apply to get an ARM + CHERI (AKA Morello) development board:
>
> https://www.phoronix.com/forums/forum/hardware/processors-memory/1516285-linux-patches-updated-for-experimental-arm-morello-that-combines-arm-cheri-isa?p=1516345#post1516345

Yeah, I remember reading that. But it's bound to be expensive, either in proposing, proving, doing and documenting your work, or in the purchase price later. You'd have to dedicate a team of engineers to it, and that's hard to get budgeted at my employer and impossible in the home lab... so far. Once only CHERI ensures survival in an all-out malware war, that might change.

bit_user

> abufrejoval said: stress and stress-ng are both available just the same, as is s-tui to monitor what there is in terms of sensors.
>
> But my favorite is perhaps the simplest to use on nearly all platforms: using Firefox to run a WASM benchmark with a selectable number of threads. Of course, other browsers would work, too, but I prefer Firefox and then it's not about comparing browser engines.

stress-ng has many different CPU methods, and they vary pretty significantly in how much power they consume. This is why it bugs me when I see reviewers like Les Pounder (author of this article) run it without selecting one of the more intensive options. By default, it just cycles through a bunch of stressors, not all of which are even designed to stress the CPU cores.

I'd encourage you to try playing with the different stress-ng options if you really want to stress your system to the max. FFT might not be as highly optimized on ARM. Hardkernel has used `--cpu-method=matrixprod`, but that's not as good as `fft` for stressing Gracemont.
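On Intel chips like the N97, one way to compare stress-ng methods is the package energy counter that the Linux powercap driver exposes under `/sys/class/powercap/intel-rapl:0/`: read it before and after each run. This is a sketch under several assumptions:

- The domain path is the usual package domain on Intel systems.
- Reading `energy_uj` typically requires root on recent kernels.
- The RK3588 and other ARM boards generally don't expose this interface.
- The method list is just the two named in the thread.

The helper names are hypothetical.

```python
import subprocess
import time
from pathlib import Path

# Usual package-level RAPL domain on Intel (assumption: check your system).
RAPL_PKG = Path("/sys/class/powercap/intel-rapl:0")


def read_uj(name: str) -> int:
    """Read a microjoule value from the RAPL domain (often root-only)."""
    return int((RAPL_PKG / name).read_text())


def avg_watts(e0_uj: int, e1_uj: int, seconds: float, max_range_uj: int) -> float:
    """Average power between two energy samples, allowing for one counter wrap."""
    delta = e1_uj - e0_uj
    if delta < 0:                      # counter rolled over at max_energy_range_uj
        delta += max_range_uj
    return delta / 1e6 / seconds       # µJ -> J, then J/s = W


def measure_method(method: str, cpus: int, seconds: int = 30) -> float:
    """Run one stress-ng CPU method and return average package power in watts."""
    cmd = ["stress-ng", f"--cpu={cpus}", f"--cpu-method={method}",
           f"--timeout={seconds}s"]
    wrap = read_uj("max_energy_range_uj")
    e0, t0 = read_uj("energy_uj"), time.monotonic()
    subprocess.run(cmd, check=True)
    e1, t1 = read_uj("energy_uj"), time.monotonic()
    return avg_watts(e0, e1, t1 - t0, wrap)


# Example (as root, on an Intel system):
# for m in ("fft", "matrixprod"):
#     print(m, round(measure_method(m, cpus=4), 1), "W")
```

The measured window includes stress-ng's startup and shutdown, so longer runs give cleaner averages.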

> abufrejoval said: iGPU power consumption tends to be pretty boring: they have a rather low fixed upper limit and when you mix both GPU and CPU loads, the GPU typically gets priority. So if you're able to saturate SoC limits with the CPU, adding iGPU won't raise the overall load, just give the GPU its maximum slice.

What's weird is that Alder Lake-N can seemingly use far more package power than whatever you have PL1/PL2 configured for. At stock settings, it does seem like the iGPU begins throttling the CPU cores, but then it goes on to basically use as much power as it wants. Maybe this is just a weakness of the Linux driver.
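To compare observed draw against what the firmware actually configured, the powercap RAPL domain also exposes each constraint's name and limit. In this sketch (hypothetical helper names), the `long_term` and `short_term` constraints correspond to what Intel calls PL1 and PL2. Newer kernels may also list a `peak_power` constraint. The numbers in the test data are made up for illustration, not N97 defaults.

```python
from pathlib import Path
from typing import Dict


def read_constraint_files(domain: Path = Path("/sys/class/powercap/intel-rapl:0")) -> Dict[str, str]:
    """Collect a RAPL domain's constraint_* files as {filename: contents}."""
    return {p.name: p.read_text() for p in domain.glob("constraint_*")}


def limits_watts(files: Dict[str, str]) -> Dict[str, float]:
    """Map each constraint name (e.g. 'long_term') to its power limit in watts."""
    limits: Dict[str, float] = {}
    for fname, content in files.items():
        if fname.startswith("constraint_") and fname.endswith("_name"):
            idx = fname.split("_")[1]                      # 'constraint_0_name' -> '0'
            microwatts = int(files[f"constraint_{idx}_power_limit_uw"])
            limits[content.strip()] = microwatts / 1e6
    return limits


# Example on an Intel Linux box:
# print(limits_watts(read_constraint_files()))
```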

> abufrejoval said: For x86 GPU/CPU TDP sharing is easiest to observe via Furmark/Prime95 on Windows, so I don't bother much about GPU tests on Linux. I use glmark2 mostly for some basic functional testing there.

Weirdly, I tried FurMark on Linux, and it's really not as good at loading the Xe iGPU as the glmark2 command line I mentioned. From what I recall, it's not CPU-bottlenecked, either. Maybe it's bottlenecking on Alder Lake-N's 64-bit memory interface? You used to be able to query the memory throughput with intel_gpu_top, but I think that broke a while back.

> abufrejoval said: My main interest is stability and being able to live with them in the room: I'm not trying to break speed records.

Yeah, I put a lot of effort into my N97's cooling, simply because I wanted the fan to be inaudible, unless I slammed it with a heavy workload. Originally, I had wanted to make it fanless, but that wasn't going to happen in a form factor like the case I wanted to use.

> abufrejoval said: That's Alder Lake for you. With all the Goldmonts, TDP limits were still pretty much respected.

Eh, I admittedly don't have much experience with it, but in the little time I spent toying around with Apollo Lake and Gemini Lake mini-ITX boards from ASRock (consumer-oriented, not industrial), they would readily draw well more power than specified.

> abufrejoval said: Of course your power supplies may not be nearly as efficient as you thought and then mainboard components and USB devices can ask for quite significant amounts of power, too, even RAM can want quite a bit from the bottle.

The PSU is a 60 W Seasonic power brick that claims 89% efficiency. They no longer make them, sadly. As for the other stuff, the system idles at around 7.5 W headless (9 W with a KDE desktop and a 1080p monitor via HDMI). That board is an industrial motherboard (Jetway brand) with a bunch of serial ports, a SATA controller and 2x 2.5G Ethernet, but not much else. The SSD is an SK hynix P31 Gold (500 GB), and the M.2 slot is PCIe 3.0 x2. The RAM is 32 GB of DDR5-5600 (SK hynix die), but running at only 4800.
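Since the 60 W figure is measured at the wall, the adapter's rated efficiency gives a rough idea of how much actually reaches the board. This simple sketch assumes the claimed 89% holds at both load points. Adapter efficiency usually drops at light load, so the idle estimate likely overstates what the board itself draws.

```python
def dc_watts(wall_watts: float, efficiency: float) -> float:
    """Estimate DC output from a wall-meter reading and the adapter's efficiency."""
    return wall_watts * efficiency


for label, wall in (("peak, CPU + iGPU stress", 60.0), ("idle, headless", 7.5)):
    print(f"{label}: {wall:.1f} W at the wall -> ~{dc_watts(wall, 0.89):.1f} W DC")
```

Even at the rated efficiency, more than 50 W reaches the board at peak, far above the SoC's nominal 12 W.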

Hardkernel's ODROID-H4 has better idle power consumption, but I haven't tested it, because I'm still in the setup and migration phase from the Jetway board. Here's what they claim:

Desktop GUI:

| Activity | Power Consumption (W) |
|---|---|
| Ubuntu Desktop Booting | 15.7 |
| Gnome Desktop GUI Idle | 6.2 |
| CPU stress | 20.5 |
| 4K YouTube play on Chrome Browser | 15.4 |
| WebGL aquarium demo on Chrome Browser | 16.4 |
| WebGL + CPU Stress | 21.6 |
| Power Off | 0.2 |
| Sleep (Suspend to RAM) | 1.3 |

Headless Server:

| Test | Configuration | H4 | H4+ | H4 Ultra |
|---|---|---|---|---|
| Ubuntu Desktop GUI (Power-Save Governor) | HDMI connected | 4.6 W | 6.1 W | 6.2 W |
| | HDMI disconnected | 3.9 W | 5.3 W | 5.4 W |
| Unlimited Performance mode | On (PL4=0) | 3.9 W | 5.3 W | 5.4 W |
| | Off (PL4=30000, Default) | 3.9 W | 5.2 W | 5.3 W |
| PCIe ASPM option in BIOS settings | All Disabled (Default) | 3.9 W | 5.2 W | 5.3 W |
| | All Auto | 2.0 W | 2.7 W | 2.8 W |
| Ethernet connection | Yes | 2.0 W | 2.7 W | 2.8 W |
| | No | 1.5 W | 2.4 W | 2.4 W |

Source: https://www.hardkernel.com/shop/odroid-h4/
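In Hardkernel's numbers, the BIOS ASPM setting is the biggest idle-power lever. On Linux, the kernel's active ASPM policy can be read from `/sys/module/pcie_aspm/parameters/policy`, which lists the available policies with the active one in brackets. The parser below is a small hypothetical helper. Changing the policy (by writing to that file as root, or with the `pcie_aspm.policy=` boot parameter) generally only takes effect if the firmware lets the OS control ASPM.

```python
from pathlib import Path

POLICY_FILE = Path("/sys/module/pcie_aspm/parameters/policy")


def active_policy(text: str) -> str:
    """Return the bracketed (active) entry, e.g. 'default performance [powersave] ...'."""
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active policy marked in: {text!r}")


# Example on a Linux box with ASPM support compiled in:
# print(active_policy(POLICY_FILE.read_text()))
```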

> abufrejoval said: No, the main advantage of hardware RAID is management, OS neutrality and that I already owned the hardware.

I don't use an OS-neutral filesystem, but I like being able to take my disks and read them on any PC running a modern Linux build.

> abufrejoval said: But that hardware is now more than 10 years old, also eats power and the spares aren't as drop-in compatible as they should be and need their own maintenance and testing to ensure their readiness.

I used to like the hardware RAID on Dell servers, until their backplanes started failing (admittedly, these are older models, long past expiration of their warranty). I had some repurposed servers at work that I was using to host some shared filesystems, and it wasn't until the second one failed that I figured out it wasn't actually the disks that had gone bad.

> abufrejoval said: So I am testing all types of software RAIDs currently, including storage spaces and ReFS on Windows.

When it comes to storage, I'm a believer in KISS (Keep It Simple, Silly). The more widely used and standard the configuration, the less likely you are to encounter problems.

> abufrejoval said: because I had the audacity to boot a Windows Server 2025 to Go on it.
>
> No, the Storage Spaces pool was not upgraded, but unless you prohibit that via a registry entry, ReFS file systems will be transparently upgraded by any newer OS... Microsoft just cannot imagine that a Windows 11 or Server 2025 might just be temporary...

I never trust Microsoft not to mess up other filesystems or operating systems when I do an install or upgrade. I always disconnect all other storage devices when I do those things.

In my experience, Linux installers are always good about at least telling you what they're going to do, before they do it.

> abufrejoval said: I really want to run a mix of Proxmox and Univention at the base, with some hot storage on Ceph, some hot storage via pass-through on NVMe, and bigger data-at-rest via ZFS with replication, available via Samba and NFSv4.

I'm just using NFS + Samba, and rsync for backups. I keep it simple.

abufrejoval

> bit_user said: stress-ng has many different CPU methods, and they vary pretty significantly in the amount of power they consume. This is why it bugs me when I see reviewers like Les Pounder (author of this article) run it without selecting one of the more intensive options. By default, it just cycles through a bunch of stressors, not all of which are even designed to stress the CPU cores.
>
> I'd encourage you to try playing with the different stress-ng options, if you really want to stress your system to the max. FFT might not be so highly optimized on ARM. Hardkernel has used --cpu-method=matrixprod, but that's not as good for stressing Gracemont as fft.

It depends on your aim. These systems aren't designed for HPC, so Les Pounder won't test for that.
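To apply that advice repeatably, the sketch below builds a stress-ng command line that starts one worker per online CPU (`--cpu 0`) and pins them all to one explicitly chosen `--cpu-method` instead of the default rotation. The method names fft and matrixprod come from the thread; the timeout and `--metrics-brief` are arbitrary choices, and which method draws the most power on a given core type is something to measure, not assume.

```python
import shlex


def stress_ng_cmd(method: str = "fft", workers: int = 0, seconds: int = 60) -> list[str]:
    """Build a stress-ng argv that runs every CPU worker with one fixed method.

    workers=0 tells stress-ng to start one worker per online CPU.
    """
    return [
        "stress-ng",
        "--cpu", str(workers),
        "--cpu-method", method,
        "--timeout", f"{seconds}s",
        "--metrics-brief",
    ]


if __name__ == "__main__":
    # Only print the commands; pass the list to subprocess.run(cmd, check=True)
    # to actually start a load test.
    for method in ("fft", "matrixprod"):
        print(shlex.join(stress_ng_cmd(method)))
```

Keeping the argument list separate from execution makes it easy to log exactly which method produced a given power reading.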

And with ARM there are tons of ISA extensions, which are optional within one ISA generation and then differ again across generations: it's really quite complex, even a mess.

I believe the RK3588 even has an issue similar to the AVX-512 topic on Alder Lake, where the A55 cores lack some of the A76 extensions that the RK3588 actually supports in hardware. So you have to build your software without them, or control CPU core placement via numactl.
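On Linux, one way to do that placement from inside a program is to read each core's "CPU part" field from /proc/cpuinfo and restrict the process with os.sched_setaffinity, which has the same effect as taskset. This is a sketch that assumes Arm's part numbers 0xd05 (Cortex-A55) and 0xd0b (Cortex-A76); it only keeps the process on the big cores and does not check which extensions each core type actually reports.

```python
import os

# Arm MIDR part numbers (implementer 0x41) for the two core types discussed here.
PART_NAMES = {"0xd05": "Cortex-A55", "0xd0b": "Cortex-A76"}


def cores_by_part(cpuinfo_text: str) -> dict[str, list[int]]:
    """Group logical CPU numbers by core type, using /proc/cpuinfo's "CPU part" field."""
    groups: dict[str, list[int]] = {}
    cpu = None
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank separator lines between processors
        key, value = key.strip(), value.strip()
        if key == "processor":
            cpu = int(value)
        elif key == "CPU part" and cpu is not None:
            groups.setdefault(PART_NAMES.get(value, value), []).append(cpu)
    return groups


if __name__ == "__main__":
    if os.path.exists("/proc/cpuinfo"):
        with open("/proc/cpuinfo") as f:
            groups = cores_by_part(f.read())
        print(groups)
        big = groups.get("Cortex-A76")
        if big:
            # Restrict this process (children inherit the mask) to the A76 cores.
            os.sched_setaffinity(0, big)
```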

For one of my work projects it was actually crucial to try to measure the energy consumption of individual workloads on different architectures, and to reduce it by selectively enabling CPU cores or moving workloads to more efficient systems.

That never got anywhere, because the instrumentation simply isn't there, neither in the software nor in the hardware. One of the biggest problems is that with today's CPUs every single instruction might have vastly different energy requirements, which then differ again depending on tons of context. There is no instrumentation at the hardware level or in the software that allows you to properly measure how much energy an application consumes. Even getting system-wide data isn't trivial everywhere.
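For comparison, the closest thing Linux offers out of the box is the RAPL energy counter in the powercap sysfs tree, which is package-wide rather than per application. The sketch below reads it around a workload. The zone path is an assumption (list /sys/class/powercap to see what your kernel exposes), recent kernels make energy_uj readable only by root, and only a single counter wraparound is handled.

```python
import os
import time
from pathlib import Path
from typing import Callable

# Assumed package-level RAPL zone; check /sys/class/powercap on your machine.
RAPL_ZONE = Path("/sys/class/powercap/intel-rapl:0")


def energy_delta_uj(before: int, after: int, max_range_uj: int) -> int:
    """Microjoules between two energy_uj readings, allowing for one wraparound."""
    if after >= before:
        return after - before
    return (max_range_uj - before) + after


def measure(workload: Callable[[], object], zone: Path = RAPL_ZONE) -> tuple[float, float]:
    """Run workload once and return (joules, seconds) for the whole package.

    The counter is system-wide: everything else running meanwhile is included.
    """
    max_range = int((zone / "max_energy_range_uj").read_text())
    e0 = int((zone / "energy_uj").read_text())
    t0 = time.monotonic()
    workload()
    e1 = int((zone / "energy_uj").read_text())
    elapsed = time.monotonic() - t0
    return energy_delta_uj(e0, e1, max_range) / 1e6, elapsed


if __name__ == "__main__":
    if os.access(RAPL_ZONE / "energy_uj", os.R_OK):
        joules, secs = measure(lambda: sum(i * i for i in range(2_000_000)))
        print(f"{joules:.2f} J in {secs:.2f} s, ~{joules / secs:.1f} W average")
```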

> bit_user said: What's weird is that Alder Lake-N can seemingly use far more package power than whatever you have PL1/PL2 configured for. At stock settings, it does seem like the iGPU begins throttling the CPU cores, but then it goes on to basically use as much power as it wants. Maybe this is just a weakness of the Linux driver.

I wonder if your ODROID actually exposes all the variables for power control: PL1 watts, PL2 watts, PL1 duration and PL2 duration are the minimum. Some BIOSes also have additional current (amperage) settings as well as clock limits. And to make sure these aren't overstepped, you may actually need to reduce the supported Turbo levels or disable Turbo entirely; after all, it is basically a license to overstep limits, with parameters that Intel details in huge documents not available to the general public.

Where the system exposes all of these, like on my Erying G660 (Alder Lake i7-12700H), I can make sure the SoC never exceeds e.g. 45 W, as reported by HWiNFO. That's still much more at the wall, but that's another discussion below.

> bit_user said: Weirdly, I tried FurMark on Linux and it's really not as good at loading the Xe iGPU as that glmark2 command line I mentioned. From what I recall, it's not CPU-bottlenecked, either. Maybe it's bottlenecking on Alder Lake-N's 64-bit memory interface? You used to be able to query the memory throughput with intel_gpu_top, but I think that broke a while back.

The single-channel memory on Alder Lake-N is definitely a bottleneck for the iGPU, and one of the many reasons I never wanted to buy one (another being that almost certainly all of them are intentionally culled 8-core dies).

Jasper Lake has a far weaker predecessor iGPU, the UHD 605, and it still gets nearly twice the GPU performance with dual-channel RAM. Goldmont wasn't nearly as sensitive, but it also had a smaller GPU.

And on the far bigger 96 EU Xe iGPU of my Alder Lake i7-12700H, single-channel memory halves GPU performance, as one would expect. Xe has rather big GPU caches that Jasper Lake doesn't have, but in any case all Atom-class iGPUs cut off at 5 W, so it may not matter as much.
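The theoretical peak behind that bottleneck is simple arithmetic: transfers per second times the bus width in bytes. The sketch below uses DDR5-4800 purely as an example speed (boards differ) to compare a 64-bit interface like Alder Lake-N's with a 128-bit dual-channel setup. Sustained bandwidth is lower in practice, and the iGPU shares it with the CPU cores.

```python
def peak_bandwidth_gbs(mega_transfers_per_s: float, bus_width_bits: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    speed = 4800  # DDR5-4800, as an example
    single = peak_bandwidth_gbs(speed, 64)   # one 64-bit interface
    dual = peak_bandwidth_gbs(speed, 128)    # two channels, 128 bits total
    print(f"64-bit: {single:.1f} GB/s, 128-bit: {dual:.1f} GB/s")
```

At 4800 MT/s that works out to 38.4 GB/s for 64 bits versus 76.8 GB/s for 128 bits, which is the factor of two the posts above describe.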

What is exposed by the perf interface depends a lot on the hardware; that's nothing software can fix, and Intel changes it as they see fit. If I recall correctly, I get distinct power consumption values for E-cores and P-cores on Linux for Alder Lake, but RAM power is no longer separated out for mobile and desktop chips. On my Xeon E5-2696 v4 it is reported, and for 128 GB of RAM it's often quite a bit higher than the CPU's under Prime95 max-heat.

And for some reason, you can't measure instruction statistics and power consumption at the same time.

Zen reports power consumption at the individual core level, but nobody else seems to go that deep, and normal perf on Linux doesn't seem to support that yet. HWiNFO has plenty more performance data for Zen on Windows, including RAM bandwidth consumption and even watts for each DIMM; too bad there is no Linux equivalent.

On the ARM SoCs, there's much less sensor data, and it isn't integrated into Linux tools.

> bit_user said: The PSU is a 60 W Seasonic power brick that claims 89% efficiency. Sadly, they no longer make them. As for the other stuff, the system idles at around 7.5 W headless (9 W with the KDE desktop and a 1080p monitor via HDMI). That board is an industrial motherboard (Jetway brand) with a bunch of serial ports, a SATA controller and 2x 2.5G Ethernet, but not much else. The SSD is an SK hynix P31 Gold (500 GB) and the M.2 slot is PCIe 3.0 x2. The RAM is 32 GB of DDR5-5600 (SK hynix die), but running at only 4800.

For the Mini-ITX Atoms I used a chassis that came with a 60 W 12 V or 19 V power brick and a Pico-PSU-class ATX converter. I'm pretty sure neither is anywhere near "Gold" efficiency.

Nor are the Intel NUCs probably all that great; I don't recall all the measurements I'm sure I took before I put them into production. But generally I got both the fully passive Goldmont+ J5005 Atoms with 32 GB and the far more powerful i7 NUCs with 64 GB, each with 1/2 TB of SSD, below 10 W at the wall at Linux desktop idle. For the NUCs I mostly played with TDP limits until the fan speeds under stress stayed low enough not to bother me. I didn't measure peak power consumption, because that wasn't important to me; noise and idle power were.

The next system here was different, not by design but by need, because it was built for far higher peak performance: a mobile-on-desktop Mini-ITX board, the Erying G660, with an i7-12700H at an official 45 W TDP, which ships with PL2/PL1 set to 120/95 W in the BIOS, nowhere near where Intel actually wants them to be per its market segmentation aims.

I had planned to run the Erying on an official and efficient Pico-PSU, and since it was a 45 W TDP chip and I was ready to sacrifice peak compute power for low noise and overall efficiency on a 24x7 system, I felt a 90 W variant of the Pico should be enough: Pico themselves didn't sell a stronger one.

But the 90 W variant would always fold on power peaks, even with what I believe were matching limits. My wall-plug measurement device also wasn't very good or quick.

After running it off a regular 500 W "Gold" ATX PSU for a year or so, I recently gave that another go, because I needed to reclaim the ATX PSU space to house a big fat helium HDD in a backward-facing drive bay there instead. I had also added 4 NVMe drives with a switch chip to the system, meaning an extra few watts to manage, while an Aquantia AQC107 10 Gbit NIC had been there all along.

I got a 120 W Pico-PSU knockoff with an external 120 W power brick and a new wall-plug measurement device, and started testing. Without power limits imposed, the system would fold even without the hard disk or the NVMe RAID0 installed.

But after playing with the limits, observation and load testing, I was able to reach an absolute worst case of 110 W at the wall, while the SoC reported, via HWiNFO, the very same 45 W I had configured for PL1 and PL2, no matter which combination of power viruses I threw at it. And that was Prime95, FurMark, HDSentinel, extra USB sticks, etc., something I'd never expect to run at once.

Yet that's still around 30 W at the wall at desktop idle, interestingly enough slightly less with a visible desktop than after locking the screen. It seems Microsoft gets busy once it's unobserved, even on this heavily curated Windows 11 Enterprise IoT LTSC with practically every phone-home activity deactivated...

But it's almost 5 W at the wall when shut down. It's not hibernating or keeping Ethernet on standby as far as I can tell, but perhaps the Management Engine inside the Intel chip, with its MINIX OS, is still running...

Anyhow, a peak overhead of 100% at the wall over the configured (and observed) TDP limits at the SoC no longer astounds me.
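Put as numbers: the gap between wall draw and reported package power covers PSU conversion losses plus everything outside the SoC (NIC, drives, fans, USB devices). The sketch below computes that ratio from the worst-case figures in the post, and also shows why a 90 W unit is marginal there. It treats the brick and converter as one stage and assumes, hypothetically, 90% efficiency at full load; under that assumption a 110 W wall peak still means roughly 99 W on the DC side.

```python
def wall_overhead(wall_watts: float, package_watts: float) -> float:
    """How much wall-plug draw exceeds the SoC's reported package power, as a fraction."""
    return wall_watts / package_watts - 1.0


def dc_load(wall_watts: float, efficiency: float) -> float:
    """DC-side load implied by a wall reading at an assumed conversion efficiency."""
    return wall_watts * efficiency


if __name__ == "__main__":
    wall_peak, pl_watts = 110.0, 45.0  # worst case at the wall, PL1/PL2 from the post
    print(f"overhead over package power: {wall_overhead(wall_peak, pl_watts):.0%}")
    eff = 0.90  # hypothetical full-load efficiency of the power chain
    for rating in (90.0, 120.0):
        headroom = rating - dc_load(wall_peak, eff)
        print(f"{rating:.0f} W unit: {headroom:+.1f} W headroom")
```

With those inputs the wall draw is about 2.4x the package power during the all-at-once power-virus mix, and only the 120 W unit ends up with positive headroom.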

bit_user

> abufrejoval said: It depends on your aim. These systems aren't designed for HPC, so Les Pounder won't test for that.

He does it when trying to characterize peak temperatures and power utilization, though. And it doesn't have to be HPC: I could easily believe a video game with some tightly optimized loops could get a few cores burning as brightly.

> abufrejoval said: I believe on the RK3588 they even have an issue similar to the AVX-512 topic on Alder Lake, where the A55 cores lack some of the A76 extensions the RK3588 actually supports in hardware. So you have to build your software without them or control their CPU core placement via numactl.

There have been SoCs where they've disabled CPUID bits on one core type due to bugs in another (like the A710 having bits disabled because it shipped in a SoC where the A510 had bugs in those instructions).

If you're correct that the A76 is a superset of the A55, then what you'd want to do is build for the A55 and tune for the A76 (with GCC on AArch64, something like -mcpu=cortex-a55 -mtune=cortex-a76). At least, that's what I'd do on x86 with -march and -mtune. I think the ARM backend of GCC switched around -march vs. -mcpu relative to how they work on x86. I'm pretty sure there was a recent patch to make them work the same as on x86.

> abufrejoval said: I wonder if your Odroid actually exposes all variables for power control: PL1 Watts, PL2 Watts, PL1 duration and PL2 duration are minimum.

Alder Lake-N does, in a way that you can twiddle at runtime. This script works on every Intel CPU where I've tried it, including my N97:

https://github.com/horshack-dpreview/setPL
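For reference, the Linux kernel also exposes PL1 and PL2 through its powercap sysfs interface, as the "long_term" and "short_term" constraints of the package zone. The sketch below is not the setPL script's code, just a minimal illustration of that kernel interface. The zone path is an assumption, writing needs root, and some platforms also have a separate intel-rapl-mmio zone with its own limits that may need adjusting too.

```python
from pathlib import Path

# Assumed package zone; the MSR-based interface usually appears as intel-rapl:0.
ZONE = Path("/sys/class/powercap/intel-rapl:0")


def find_constraint(zone: Path, name: str) -> int:
    """Index N of constraint_N_name == name ('long_term' is PL1, 'short_term' is PL2)."""
    for name_file in sorted(zone.glob("constraint_*_name")):
        if name_file.read_text().strip() == name:
            return int(name_file.name.split("_")[1])
    raise LookupError(f"no {name!r} constraint under {zone}")


def get_power_limit_w(zone: Path, name: str) -> float:
    """Current limit for the named constraint, converted from microwatts to watts."""
    idx = find_constraint(zone, name)
    return int((zone / f"constraint_{idx}_power_limit_uw").read_text()) / 1e6


def set_power_limit_w(zone: Path, name: str, watts: float) -> None:
    """Write a limit in microwatts; needs root on a real system."""
    idx = find_constraint(zone, name)
    (zone / f"constraint_{idx}_power_limit_uw").write_text(str(round(watts * 1_000_000)))


if __name__ == "__main__":
    try:
        for constraint in ("long_term", "short_term"):
            print(f"{constraint}: {get_power_limit_w(ZONE, constraint):.1f} W")
    except (LookupError, OSError) as err:
        print(f"powercap zone not usable here: {err}")
```

The time windows (constraint_N_time_window_us) sit next to the limits in the same directory if the PL1/PL2 durations need changing too.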

> abufrejoval said: And there is additional ampere settings as well as clock limits in some BIOSes.

Alder Lake-N has locked frequency tables. The CPU firmware limits peak frequencies depending on how many cores are active. That's essentially what it means for a CPU to be non-overclockable.

I actually had some confusion about this, because the way I was trying to monitor clock frequencies was heavy enough that the CPU decided another core was active and, on that basis, throttled the ones I was trying to measure. I did eventually find a way that avoided this Heisenbergian dilemma, though I didn't even start looking for a workaround until I became convinced that's what was happening.

> abufrejoval said: And to make sure these aren't overstepped, you actually may need to reduce Turbo level supports or disable it entirely...

You can certainly dial back CPU frequency limits, if you want. These are exposed by the frequency scaling governor in the /sys/ filesystem, though I'm foggy on the details. I did some MHz/W measurements a while back, though I think I used power limits as the independent variable. I found that Alder Lake-N will down-clock all the way to 400 MHz if you set the power limits low enough. I think the lowest I was able to achieve was about 3 W. You can set it lower and it'll try, but RAPL will tell you actual package power under load is higher than that.
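The cpufreq side of that is fairly simple: each CPU has a scaling_max_freq file, in kHz, under /sys/devices/system/cpu/cpuN/cpufreq/. The sketch below reads and caps it for every CPU. It assumes the standard cpufreq sysfs layout, writing needs root, and the kernel still clamps the value to what the hardware and its frequency table allow. On intel_pstate systems, Turbo can be switched off separately via /sys/devices/system/cpu/intel_pstate/no_turbo.

```python
from pathlib import Path

CPU_ROOT = Path("/sys/devices/system/cpu")
PATTERN = "cpu[0-9]*/cpufreq/scaling_max_freq"


def current_max_freq_mhz(root: Path = CPU_ROOT) -> dict[str, int]:
    """Current scaling_max_freq per CPU, converted from kHz to MHz."""
    return {f.parent.parent.name: int(f.read_text()) // 1000
            for f in sorted(root.glob(PATTERN))}


def cap_max_freq(mhz: int, root: Path = CPU_ROOT) -> int:
    """Write the same cap (MHz converted to kHz) for every CPU; returns files written."""
    files = sorted(root.glob(PATTERN))
    for f in files:
        f.write_text(str(mhz * 1000))
    return len(files)


if __name__ == "__main__":
    print(current_max_freq_mhz())  # reading is unprivileged; cap_max_freq() needs root
```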

BTW, turbostat is what I used for measurements. Very helpful, if a little clunky to use (i.e. poor diagnostics when you feed it a format string it doesn't like).

> abufrejoval said: The single channel memory on Alder Lake N is definitely a bottleneck for the iGPU and one of the many reasons I never wanted to buy one (another that almost certainly all are 8-core dies intentionally culled).

I was most disappointed by that, but it's still fine for what I need.

> abufrejoval said: Zen reports power consumption at the individual core level,

Chips & Cheese mentioned that (at least through Zen 2), its RAPL is software-estimated rather than based on actual hardware measurements.

I got a Corsair iCUE power supply, so I can take more detailed measurements. Sadly, my N97 board uses only a 4-pin CPU power socket, rather than a full ATX power connector.

> abufrejoval said: But the 90 Watt variant would always fold on power peaks, even with what I believe were matching limits. My wall plug measurement device also wasn't very good or quick.

Did you twiddle with PL3 or PL4?

> abufrejoval said: But after playing with the limits, observation and load testing I was able to reach an absolute worst case of 110 Watt at the wall, while the SoC reported the very same 45 Watt of power I had configured for PL1 and PL2 via HWinfo, no matter which combination of power virus I threw at it. And that was Prime95, Furmark, HDsentinel, extra USB sticks etc., something I'd never expect to run at once.

Yeah, I get the sense that the package power reported by turbostat isn't giving me the full story, either.

> abufrejoval said: Seems Microsoft is getting busy once it's unobserved, even if this is a heavily curated Windows 11 Enterprise IoT LTSC with practically every phone-home activity deactivated...

Probably indexing all of your files, and I think they've now gone back to defragging, even on SSDs.

abufrejoval

> bit_user said: Alder Lake-N does, in a way that you can twiddle at runtime. This script works on every Intel CPU where I've tried it, including my N97:
>
> https://github.com/horshack-dpreview/setPL

That's what I'm using, too.

> bit_user said: Alder Lake-N has locked frequency tables. The CPU firmware limits peak frequencies, depending on how many cores are active. That's essentially what it means for a CPU to be non-overclockable.

Yes, but it's an upper limit, and TDP or temperature limits can kick in much earlier. With the mobile i7s, "overclocking" essentially means unlocking something nearly identical to their desktop potential at the 90-120 W the G660 comes with, not quite K-type 6 GHz clocks that might cost 150 W or more for even a single core.

Even the Serpent Canyon NUC with its Arc A770M dGPU, which I passed on to my son, came with something like 90 W configured for PL2, while officially it has the same 45 W i7-12700H SoC.

They don't go quite that crazy on Atoms yet.

> bit_user said: You can certainly dial back CPU frequency limits, if you want. These are exposed by the frequency scaling governor in the /sys/ filesystem though I'm foggy on the details. I did some MHz/W measurements, a while back, though I think I used power limits as the independent variable. I found that Alder Lake-N will down-clock all the way to 400 MHz, if you set the power limits low enough. I think the lowest I was able to achieve was about 3 W. You can set it lower and it'll try, but RAPL will tell you actual package power under load is higher than that.

With Alder Lake, and potentially more hybrid CPUs coming even for servers (which didn't really happen, thankfully), the research goal was to find out how one could optimize energy use for predictable loads on our server farms.

Given a predictable, steady stream of incoming transactions that need to be serviced, the question was how to configure the servers so they don't waste energy on high turbo states, yet still meet the response-time SLAs.

Hyperscalers avoid that issue entirely by getting rid of Turbo and trying to run their CPUs just below 100% peak utilization by controlling the scheduled workloads. But if your business doesn't allow selling off excess capacity at variable price points to steer opportunistic workloads, all you can do is tune the hardware to match the load.

Because clocks vs. power is nowhere near linear in CMOS, but follows these funny knee-shaped curves, you can already trade clocks against cores. And with E- and P-cores you get non-intuitive overlaps in their efficiency curves, especially when you add maximum durations into the mix.
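The usual first-order model behind those knee-shaped curves is the CMOS dynamic-power equation. Treat it as a textbook approximation that ignores leakage and the voltage floor at low clocks, not as a model of any particular chip. If the supply voltage has to rise roughly in step with frequency near the top of the range, power grows roughly with the cube of the clock, which is why spreading a fixed amount of work over more, slower cores can come out ahead, assuming the work parallelizes perfectly:

$$
P_{\text{dyn}} \approx \alpha\, C\, V^{2} f, \qquad V \propto f \;\Rightarrow\; P_{\text{dyn}} \approx k f^{3}
$$

$$
2 \cdot k \left(\tfrac{f}{2}\right)^{3} = \tfrac{1}{4}\, k f^{3}
$$

Here $\alpha$ is the switching activity, $C$ the switched capacitance, $V$ the supply voltage, $f$ the clock and $k$ a lumped constant; the second line compares two cores at half clock with one core at full clock for the same nominal throughput. Once $V$ hits its minimum, further clock reductions only save power roughly linearly while static leakage becomes a larger share, which is where the knee comes from.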

There is tons of optimization support via counters in modern CPUs, but it focuses exclusively on instruction cycles, branch misses, etc., not on energy consumption. And when you can't measure, or at least project with a certain degree of reliability, you can't make good decisions.

So we tried to see if we could at least come up with good-enough projections using synthetic loads, measuring total power at the chip level and then again using the instruction counters, because on Intel you can only do one or the other, not both.

And then we'd generate tons of data points to come up with a tuning plan and validate that against simulations again, pretty much like tuning a car engine for economy at load points on a dyno, with all its levers and sensors.
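The selection step at the end of such a process can be as simple as the sketch below: given measured power and tail latency for each candidate configuration at the expected load, pick the lowest-power one that still meets the SLA. At a fixed request rate, lower average power also means lower energy per request. The OperatingPoint type and the example numbers are made up for illustration; they are not measurements from the project described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    label: str       # configuration description, e.g. cores and clock cap
    watts: float     # average package power at the target load
    p99_ms: float    # 99th-percentile response time at that load


def cheapest_within_sla(points: list[OperatingPoint], sla_p99_ms: float) -> OperatingPoint:
    """Lowest-power operating point whose tail latency still meets the SLA."""
    eligible = [p for p in points if p.p99_ms <= sla_p99_ms]
    if not eligible:
        raise ValueError("no measured configuration meets the SLA")
    return min(eligible, key=lambda p: p.watts)


# Made-up illustrative data, not real measurements.
EXAMPLE_POINTS = [
    OperatingPoint("8 P-cores, turbo on", 62.0, 11.0),
    OperatingPoint("8 P-cores, turbo off", 41.0, 18.0),
    OperatingPoint("4 P-cores @ 2.4 GHz", 23.0, 35.0),
    OperatingPoint("8 E-cores @ 1.8 GHz", 17.0, 64.0),
]

if __name__ == "__main__":
    best = cheapest_within_sla(EXAMPLE_POINTS, sla_p99_ms=50.0)
    print(best.label, best.watts)
```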

And of course measuring adds your "Heisenberg" cost and cumulative errors. But mostly, the concept of energy-proportional computing, once proposed by Amazon's CTO, is used as an argument for why you can't economically win against the clouds. Which is why the measurement facilities aren't being added to the chips: the debate was over before it could ever turn into hardware.

Funnily enough, Ampere was just about to introduce at least a two-gear (two clock rates) solution for their data center CPUs, mostly for license optimization (another form of energy).

Let's see if they survive against all those proprietary ARM designs we know nothing about.

> bit_user said: BTW, turbostat is what I used for measurements. Very helpful, if a little clunky to use (i.e. not good diags, when you feed it some format string it doesn't like).

AMD has done a lot of work on perf, for which I haven't yet found an equivalent from Intel, who might have tons of stuff in their Xeons that mere mortals never learn about. And I can easily imagine why this stuff doesn't wind up in the upstream kernel: energy consumption is a very hot potato that nobody likes to share.

> bit_user said: Chips & Cheese mentioned that (at least through Zen 2), its RAPL is software-estimated, rather than actual hardware measurements.

Real measurements would just cost too much extra hardware; estimates are fine. The biggest issue is accounting at the process level, for which per-core data is good enough. That means the scheduler needs to become a bean counter, or have a sidekick to track that.
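A very crude version of that bean counting, given only a package-level energy reading and per-process CPU time over the same interval (on Linux, for example utime + stime from /proc/<pid>/stat), is to split the energy in proportion to CPU time. This is a hypothetical illustration, not an existing scheduler feature, and it deliberately ignores the problems raised above: idle and uncore power, and instructions, core types and clocks that cost very different amounts of energy.

```python
def attribute_energy(package_joules: float, cpu_seconds: dict[str, float]) -> dict[str, float]:
    """Split one package-energy reading across processes in proportion to CPU time."""
    total = sum(cpu_seconds.values())
    if total <= 0:
        return {name: 0.0 for name in cpu_seconds}
    return {name: package_joules * secs / total for name, secs in cpu_seconds.items()}


if __name__ == "__main__":
    # Hypothetical interval: package energy in joules, CPU seconds per process.
    print(attribute_energy(120.0, {"db": 6.0, "web": 3.0, "backup": 1.0}))
```

Per-core energy readings, where available, would let the same split be done per core first, which is closer to what the post suggests.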

> bit_user said: Did you twiddle with PL3 or PL4?

My initial issues with the 90 W variant may have been due to the BIOS doing RAM training at the default PL1/PL2 settings. It took me a while to realize I didn't have a broken board or bad RAM sticks.

During the last round I got the 90 W variant stable with the mainboard alone. But once I added peripherals, I could see it going into triple digits, even if it didn't immediately fail. It still did, with Prime95.

Since I already had the 120 W PSU, I decided to stick with it for a bit of safety margin. "Underwatting" PSUs isn't great for productive server use, even in the home lab, and especially when SSDs don't have power caps.