HighPoint's Gen5 M.2 NVMe PCIe card is here — promises 50 GB/s+ speeds for less than $1,000

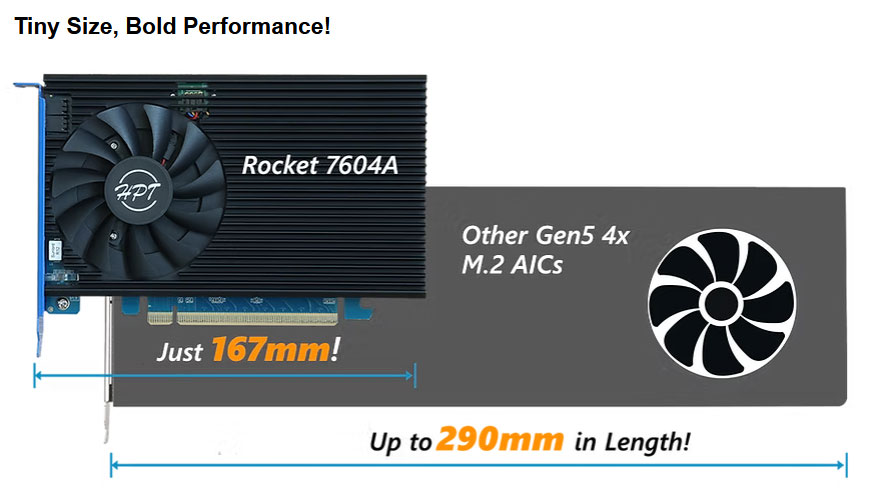

Single-slot 167mm-long card can fit and actively cool up to four M.2 SSDs.

Speedy storage expert HighPoint has revealed product details and pricing for its new Rocket 7604A RAID add-in-card (AIC). As you might have guessed from the product name, this is a PCIe card which plugs into a spare slot in your PC’s motherboard to add NVMe SSD storage. Impressively, this particular PCIe Gen5 x16 M.2 NVMe card can fit up to four drives and deliver “50 GB/s+ of real world transfer speed.” Pricing seems keen, too, with the Rocket 7604A now available for $999.

Providing some context to HighPoint’s new Rocket 7604A RAID add-in-card performance and pricing is our recent coverage of Phison’s Apex RAID card demo from Computex 2025. One of those new M.2 toting PCIe cards from Apex could be loaded with up to 32 SSDs, and a setup delivered a blistering 113 GB/s in the exhibition floor demo.

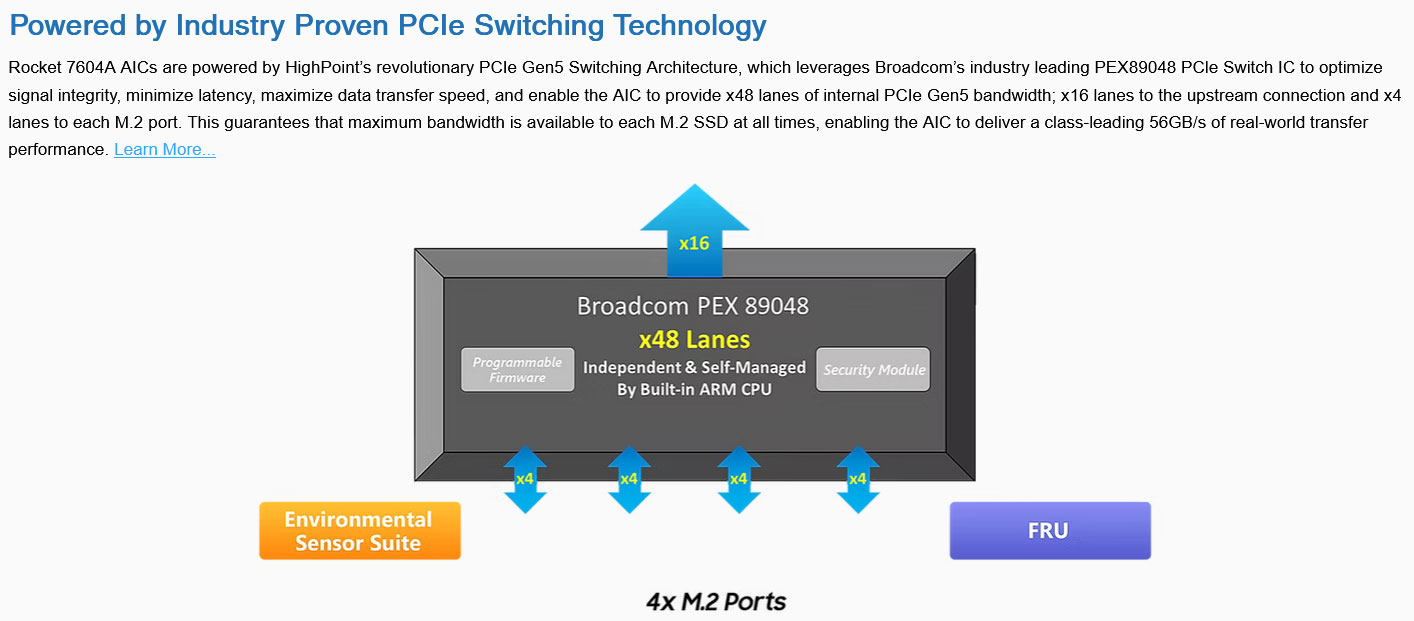

Key specs of HighPoint's solution appear to be in a more modest league, with its capacity for ‘just’ four M.2 SSDs, and its real-world performance of 50 GB/s or a little more. The theoretical max transfer rate for this AIC is up to 64 GB/s, with up to 12 million IOPS. These stats might fall in the shadow of Phison's 32 SSD-toting Apex card, but would be just right, or even plenty, for some people or organizations wanting extra superfast onboard storage.
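For readers wondering where the 64 GB/s figure comes from, it matches the raw signaling rate of a PCIe 5.0 x16 link (32 GT/s per lane across 16 lanes). The sketch below is a back-of-the-envelope estimate, not HighPoint's own math: it accounts only for 128b/130b line encoding and ignores packet and protocol overhead, and the function name is purely illustrative.

```python
# Rough PCIe 5.0 bandwidth estimate for one direction of the link.
# Only line encoding is modeled; real-world throughput is lower still.

GEN5_GT_PER_S = 32.0             # giga-transfers per second per lane (PCIe 5.0)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def link_bandwidth_gbytes(lanes: int, gt_per_s: float = GEN5_GT_PER_S) -> float:
    """Approximate usable bandwidth in GB/s for a link of the given width."""
    gbits_per_s = gt_per_s * ENCODING_EFFICIENCY * lanes
    return gbits_per_s / 8  # bits to bytes


raw_x16 = GEN5_GT_PER_S * 16 / 8       # raw signaling rate, no encoding loss
per_drive = link_bandwidth_gbytes(4)   # each M.2 port gets four lanes
host_link = link_bandwidth_gbytes(16)  # the card's x16 host connection

print(f"raw x16 rate:      {raw_x16:.1f} GB/s")
print(f"x4 per drive:      {per_drive:.2f} GB/s")
print(f"x16 after encoding: {host_link:.2f} GB/s")
print(f"50 GB/s is {50 / host_link:.0%} of the encoded x16 rate")
```

On these assumptions, the quoted 50 GB/s+ real-world figure sits at roughly four fifths of what the x16 link can carry after encoding, which is a plausible gap once protocol overhead and drive limits are factored in.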

HighPoint has a couple of significant wins on its side, too. Firstly, its immediate availability and $999 price tag are laudable. In contrast, we were told at Computex that the Apex RAID card was coming in 30 days, and would be sold at $3,995 unpopulated.

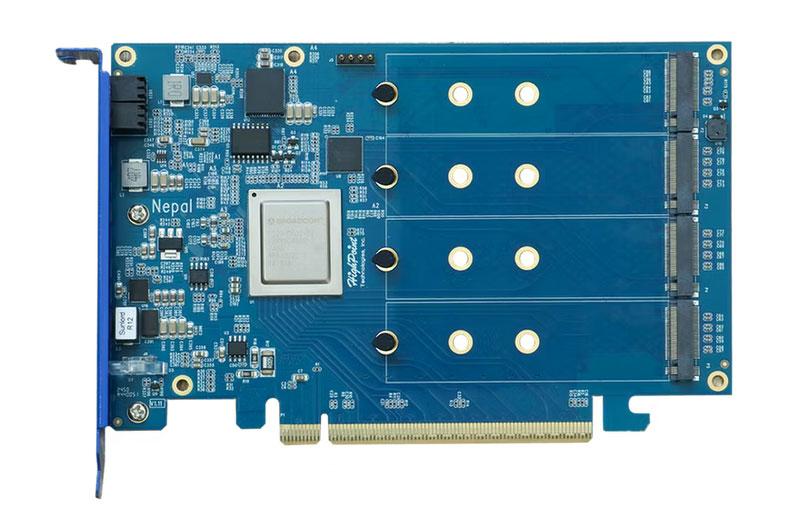

Secondly, HighPoint’s Rocket 7604A RAID AIC is a pleasingly small device. Its single-slot width, 110mm height, and 167mm length make it easy to fit into many PCs. Those dimensions sound Mini ITX friendly, and no external power connector is required, as the slot will deliver plenty of juice for the maximum of four M.2 SSDs.

| Specification | Rocket 7604A |
| --- | --- |
| Interface | PCIe 5.0 x16 connector providing four lanes per port |
| SSD capacity | Up to four M.2 (2242, 2260, 2280) drives, up to 32TB in total |
| Performance | Up to 64 GB/s and 12 million IOPS |
| Controller | Broadcom’s 48-lane PCIe Gen5 PEX89048 switch |
| RAID support | Single, RAID 0, 1, 10 |
| Cooling | Ventilated bracket, heatsink with 8010 fan, and thermal pads supplied |
| LEDs | SSD access, plus color-coded status, and fault LEDs |
| Management | WebGUI, CLI, API package, and UEFI BIOS/HII |
| Support | Windows and Linux, no Arm platforms |
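To put the RAID options in perspective, here is a minimal sketch of how usable capacity changes with the RAID level. It assumes the 32TB maximum means four equal 8TB drives, uses the textbook capacity formulas, and treats RAID 1 as a simple mirror; the helper name is hypothetical, and HighPoint's actual implementation may reserve some space for metadata.

```python
def usable_capacity_tb(level: str, drives: int, drive_tb: float) -> float:
    """Textbook usable capacity in TB for equal-size drives (illustrative helper)."""
    if drives < 1:
        raise ValueError("need at least one drive")
    if level in ("single", "raid0"):
        # Standalone drives or striping: every drive contributes fully.
        return drives * drive_tb
    if level == "raid1":
        # Mirroring: all members hold the same data.
        if drives < 2:
            raise ValueError("RAID 1 needs at least two drives")
        return drive_tb
    if level == "raid10":
        # Striped mirrors: half the drives hold copies.
        if drives < 4 or drives % 2 != 0:
            raise ValueError("RAID 10 needs an even number of drives, four or more")
        return (drives // 2) * drive_tb
    raise ValueError(f"unsupported level: {level}")


DRIVE_TB = 8.0  # assumption: 32 TB maximum spread over four slots

for level, count in [("single", 4), ("raid0", 4), ("raid1", 2), ("raid10", 4)]:
    capacity = usable_capacity_tb(level, count, DRIVE_TB)
    print(f"{level:>6} with {count} drives: {capacity:.0f} TB usable")
```

In other words, the headline 32TB applies only to standalone or RAID 0 use; a fully populated RAID 10 array would offer half of that under these assumptions.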

Beyond the tech specs provided above, it is important to know that the hardware comes with comprehensive management and monitoring tools. Its full-coverage active cooling solution and provided M.2 thermal pads are claimed to “keep the performance-sapping threat of thermal throttling at bay.” We also think it is worth mentioning the easily administered data security, which uses TCG Opal SSC-based NVMe hardware encryption (SED).

Hit the link in the intro for more details direct from HighPoint. Meanwhile, if you are interested in the best storage devices available for your PC, check out our extensive SSD buyer's guide.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


newtechldtech

Sadly, we don't have enough lanes for such cards. Is it time to increase the CPU PCIe lanes to 32? Intel and AMD?

GeorgioPapa

Wait, so a thousand dollars, for a PCIe to 4x M.2 NVMe drive adapter, and it doesn't even come with any drives?! haha

Yikes.

thestryker

> GeorgioPapa said: Wait, so a thousand dollars, for a PCIe to 4x m.2 NVME drive adapter, and it doesn't even come with any drives?! haha
>
> Yikes.

Welcome to how much PCIe switches above 3.0 cost now. Avago (now Broadcom) bought PLX Technology and jacked up the prices so they could fleece business customers who don't have a choice. The only alternative to Broadcom's offerings is Microchip, but they play in the same market so they're no cheaper. ASMedia is the only one (I'm aware of) with consumer level chips, but they don't sell anything above PCIe 3.0.

retro77

> GeorgioPapa said: Wait, so a thousand dollars, for a PCIe to 4x m.2 NVME drive adapter, and it doesn't even come with any drives?! haha
>
> Yikes.

This is a RAID card, not a simple 4x adapter.

abufrejoval

The insane price is mostly a consequence of Broadcom's acquisition spree creating a near monopoly for high-end PCIe switch chips.

What's really crazy is that the IOD on any non-APU Ryzen is basically a multi-protocol switch chip with similar bandwidth, which also speaks PCIe (and Infinity Fabric, USB, SATA and RAM), yet sells cheaper as part of a "CPU".

So stitching together Zen SoCs should allow creating a cheaper variant of these cards with extra CPUs as a bonus, but perhaps some extra cooling required.

We really need some competition out there that Broadcom can't just buy like PLX and all those others: anyone listening in China?

newtechldtech

> thestryker said: Welcome to how much PCIe switches above 3.0 cost now. Avago (now Broadcom) bought PLX Technology and jacked up the prices so they could fleece business customers who don't have a choice. The only alternative to Broadcom's offerings is Microchip, but they play in the same market so they're no cheaper. ASMedia is the only one (I'm aware of) with consumer level chips, but they don't sell anything above PCIe 3.0.

There is no switch here. 4 lanes x 4 = 16 lanes, and this is a 16-lane card.

thestryker

> newtechldtech said: there is no switch here .. 4lanesx4 =16 lanes , and this is a 16 lanes card.

Unsure if you didn't read the article and/or don't understand how these devices work... but it quite literally says in the article it uses a Broadcom PEX89048 which is a PCIe switch.

https://www.broadcom.com/products/pcie-switches-retimers/expressfabric/gen5/pex89048

abufrejoval

You're both right in a way: with only four M.2 drives on an x16 slot there isn't really any need for an expensive 48 lane/port switch...

But yes, there is in fact a switch and it's the main cost driver for it.

What it gives you is the ability to use it in a system which lacks bifurcation support (never met one) and the ability to use it in systems that don't actually have all 16 lanes connected or available (but with a loss of bandwidth).

You also gain RAID boot support (but RAID levels not specified), which many mainboards also support via their BIOS and perhaps proprietary drivers.

Overall, the benefits of this card appear much slimmer than I had first considered. On Linux at least, I'd expect very similar performance with a simple bifurcation adapter and MD drivers, a configuration I've run on several systems in the company lab for years, albeit on PCIe v3 servers and drives.

thestryker

> abufrejoval said: What it gives you is the ability to use it in a system which lacks bifurcation support (never met one) and the ability to use it in systems that don't actually have all 16 lanes connected or available (but with a loss of bandwidth).

No Intel client platform (including Xeons* based on client architecture) to date supports 4 way bifurcation natively (has been 8/8 or 8/4/4).

*looks like IVB Xeon had 20 lanes exposed and gained 4 lanes for use outside of the primary 16 which was still 8/4/4

> abufrejoval said: really any need for an expensive 48 lane/port switch

They list the same switch for all of their PCIe 5.0 NVMe cards so I'm just guessing whatever volume rate they get is better than buying the 24 or 32 lane versions.

abufrejoval

> thestryker said: No Intel client platform (including Xeons* based on client architecture) to date supports 4 way bifurcation natively (has been 8/8 or 8/4/4).

Sounds plausible, I used them on dual socket Xeons and still have one on a Xeon D 1541, which offers 4/4/4/4.

I don't think I saw that restriction on my Zen 3 and 4 systems, but those aren't as tight with PCIe lanes as Intel used to be.

> thestryker said: They list the same switch for all of their PCIe 5.0 NVMe cards so I'm just guessing whatever volume rate they get is better than buying the 24 or 32 lane versions.

HighPoint-Tech controllers were never cheap for what little hardware they often had. But given their small scale and the fact that they served niches nobody else did (or only much more expensively), I've used their controllers for many years.

Yet at €999 sales price, the Broadcom chip must be the biggest chunk of their price, and switch prices tend to scale at least linearly with the number of ports. And I might actually be tempted to get a variant based on the 24 port chip with x8 or x4 host side for €400 at PCIe v5, especially if it could switch from PCIe v3/v4 to PCIe v5 during transfer.

So I am really puzzled by their choice of the 48-port ASIC on this SKU: programmability and tools would be all the same across the 24-144 port PEX 89000 product range, and 32 ports would certainly be enough for this use case. Wasting 16 PCIe v5 lanes is brutal and hard to explain, especially since Broadcom simply isn't known to go easy on prices, nor does HighPoint-Tech have enough scale to obtain significant discounts.

I don't know how many die variants Broadcom makes; perhaps it's all about blowing fuses below 48 ports while yields are great. Still, I don't see them relaxing on price: it's just not who Broadcom is and why we love them so much.

I can appreciate the management advantage these switches offer: you basically gain a full level of "physical" virtualization. But again, Broadcom doesn't give that sort of thing away for free: you have to buy the hardware and then still license the software to operate that layer, likely with a yearly renewal. It addresses that shrinking on-premise niche between clouds and desktops.

Broadcom ate so many startups that only SAN vendors seemed able to afford PCIe switch chips for the last decade. Hyperscalers use their own fabrics or co-develop with Broadcom, but some new startups seem to be appearing, although also typically at the high end and with CXL in mind.

Wherever this is going, cheap home-lab or hobbyist use doesn't seem to be part of that... even if all those lesser capacity, still good-enough M.2 drives keep piling up in my drawers, much more difficult to re-purpose than those SATA-SSDs.