HighPoint's adapter enables GPUDirect Storage: up to 64 GB/s from drive to GPU, bypassing the CPU

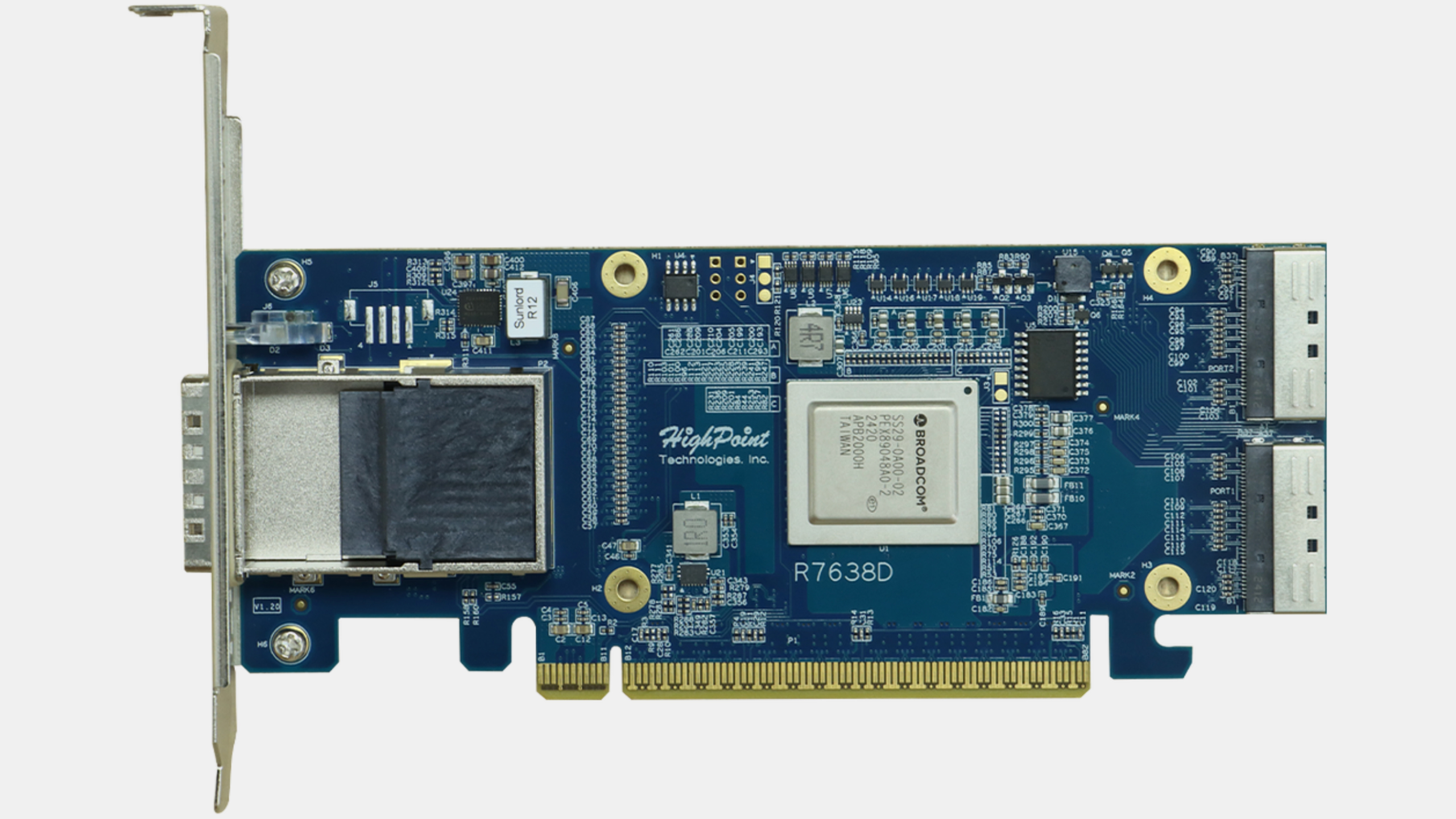

Meet the Rocket 7638D switch card

HighPoint on Thursday introduced its Rocket 7638D PCIe 5.0 switch card, which is designed to enable Nvidia's GPUDirect interconnection between AI GPUs and NVMe storage devices. The card aims to speed up AI training and inference workloads when operating with software that fully supports GPUDirect.

The latest GPUs from Nvidia (starting with the A100) support GPUDirect technologies that enable direct data transfers between GPUs and other devices, such as SSDs or network interfaces, bypassing the CPU and system memory to increase performance and free CPU resources for other workloads. However, GPUDirect requires support both from the GPU and from a PCIe switch with peer-to-peer (P2P) DMA capability. Not all PCIe Gen5 switches support this feature, which is where switch cards come into play.

HighPoint's Rocket 7638D switch card packs the Broadcom PEX 89048 switch, enabling system integrators to build systems with GPUDirect capability. The adapter features 48 PCIe 5.0 lanes: 16 lanes to connect to the host, 16 lanes to connect to an external GPU box via a CDFP CopprLink connector, and 16 lanes dedicated to internal NVMe storage devices via MCIO 8i connectors. The MCIO ports support up to 16 NVMe drives, enabling configurations with up to 2 PB of high-performance storage.
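The lane split above adds up exactly to the switch's 48 lanes. A quick Python check of that budget, plus the average per-drive capacity implied by "2 PB across 16 drives", might look like the sketch below. The group labels and helper names are illustrative assumptions, not anything HighPoint publishes, and the capacity figure is plain arithmetic in decimal units, not a statement about which drives the card supports.

```python
# Lane budget of the Rocket 7638D as described above: a 48-lane PCIe 5.0
# switch (Broadcom PEX 89048) split into three x16 groups.
# Group labels and helper functions are illustrative, not a vendor API.
SWITCH_LANES = 48

LANE_GROUPS = {
    "host uplink": 16,
    "external GPU box (CDFP CopprLink)": 16,
    "internal NVMe (MCIO 8i)": 16,
}


def spare_lanes(groups: dict, total: int) -> int:
    """Return unused lanes, or raise if the groups oversubscribe the switch."""
    used = sum(groups.values())
    if used > total:
        raise ValueError(f"{used} lanes requested, switch has only {total}")
    return total - used


def avg_drive_capacity_tb(total_pb: float, drives: int) -> float:
    """Average capacity per drive (decimal TB) needed to reach total_pb."""
    return total_pb * 1000 / drives


if __name__ == "__main__":
    print("spare lanes:", spare_lanes(LANE_GROUPS, SWITCH_LANES))
    print("TB per drive for 2 PB over 16 drives:", avg_drive_capacity_tb(2, 16))
```

The arithmetic says every lane is spoken for, and reaching 2 PB with 16 drives means roughly 125 TB per drive on average.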

The Rocket 7638D adapter enables GPUDirect Storage workflows that keep the host CPU and RAM out of the data path entirely and provide predictable bandwidth (up to 64 GB/s) and latency when paired with compatible software, including the operating system, GPU drivers, and filesystem. The device (or rather, the systems it enables) is particularly useful in scenarios involving large-scale training datasets that take up plenty of storage.
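The "up to 64 GB/s" figure matches the raw per-direction rate of a PCIe 5.0 x16 link. A small Python calculation, assuming 32 GT/s per lane and 128b/130b line encoding (both defined by the PCIe 5.0 specification), shows how the headline number relates to what remains after encoding. Packet and protocol overhead, which this sketch ignores, lowers real-world throughput further.

```python
def pcie_gbs(gt_per_s: float, lanes: int, encoding: float = 1.0) -> float:
    """Per-direction bandwidth in GB/s (1 GB = 1e9 bytes).

    gt_per_s: transfer rate per lane in gigatransfers per second
              (one bit per transfer for PCIe 1.0-5.0 signalling).
    encoding: fraction of line bits that carry data (128/130 for PCIe 3.0-5.0).
    Packet/protocol overhead (TLP headers, DLLPs) is ignored.
    """
    return gt_per_s * lanes * encoding / 8


raw = pcie_gbs(32, 16)                # PCIe 5.0 x16, before line encoding
usable = pcie_gbs(32, 16, 128 / 130)  # after 128b/130b encoding

print(f"raw: {raw:.1f} GB/s, after encoding: {usable:.2f} GB/s")
```

The gap of roughly 1.5% between the two numbers is the encoding overhead. Both figures are per direction, since PCIe links are full duplex.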

Since the Broadcom PEX 89048 switch chip contains an Arm-based CPU, it is completely independent and self-managed, so it is compatible with both Arm and x86 platforms. The adapter works out of the box with all major operating systems, without the need for special drivers or additional software.

The Rocket 7638D includes field-service features such as VPD (vital product data) tracking for matching hardware and firmware, and a utility that monitors health status and PCIe link performance. These tools simplify troubleshooting and replacement, especially in multi-node or hyperscale installations where hardware tracking matters.

HighPoint did not disclose pricing of its Rocket 7638D switch card, which will probably depend on volumes and other factors.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

abufrejoval

To my knowledge, any GPU can bypass the CPU for storage or network data transfers if it talks PCI and asks the OS nicely to set up address mappings between both sides. That administrative part requires some CPU/OS support, but then they can just fire away, while arbitration will protect all parties from monopolising all bandwidth.
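Once the OS has assigned each device a window of bus addresses, a PCIe switch forwards memory transactions purely by address: each downstream port has a base/limit window, and a write whose address falls inside a window leaves through that port. A minimal Python sketch of that address-based routing, using made-up addresses and port names and a simplified single window per port, shows why a storage controller handed a GPU's bus address as its DMA target needs no CPU involvement once the mapping exists.

```python
from typing import NamedTuple, Optional


class Window(NamedTuple):
    port: str
    base: int   # first bus address forwarded to this port
    limit: int  # last bus address forwarded to this port (inclusive)


# Hypothetical downstream-port windows, as the OS might program them
# during enumeration (real switches keep separate prefetchable and
# non-prefetchable windows; this sketch uses one per port).
DOWNSTREAM = [
    Window("gpu_port", 0x8000_0000, 0x8FFF_FFFF),
    Window("nvme_port", 0x9000_0000, 0x9000_3FFF),
]


def route(address: int) -> Optional[str]:
    """Return the downstream port that claims `address`, or None to send it upstream."""
    for w in DOWNSTREAM:
        if w.base <= address <= w.limit:
            return w.port
    return None


if __name__ == "__main__":
    print(route(0x8000_1000))    # NVMe DMA write aimed at GPU memory -> gpu_port
    print(route(0x1_0000_0000))  # outside both windows -> forwarded upstream (None)
```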

And again, as far as I know, CUDA has supported these capabilities pretty much since it became popular for HPC, because nobody there wants to bother with CPU overheads, while other GPU software stacks might (or should) support them for the same reason.

Reaching back into the older crevasses of my mind, I believe the IBM XGA adapter should have been able to do the same, since it supported bus master operations. And once GPU and network/storage data are both memory mapped, it only takes software to make things happen.

And that software support, which is really just about delegating some of the (CPU-based) OS authority over the PCI(e) bus to a GPU (or any other xPU device that might want it), isn't inherently hardware-dependent (the article mentions the A100); it's a matter of driver and OS support to negotiate and set up that delegation. AFAIK this is rather old, but it may have mostly served network/fabric transfers for HPC workloads, targeting MPI transfers over InfiniBand; local storage wasn't popular in HPC for a long time, because it was typically more bother than help until NV-DIMMs or really fast flash storage came along.

In short, this isn't a HighPoint feature or a result of their work, even if HighPoint might want to create that impression. These are just basic PCIe, OS and GPU/CUDA facilities that HighPoint supports just like any other PCIe storage would. It's like a window vendor claiming to also support "extra clean air".

jp7189

I thought the same as you initially, but I believe this is targeting systems without PEX switches. In many systems, all PCIe is routed through the CPU, and boards just have retimers, not true "smart" PEX switches. The difference here is that the GPU plugs into the HighPoint card, not the motherboard. It's literally connecting a GPU directly to storage, not just logically but physically as well. It means data transfers never traverse the motherboard and never face bandwidth contention.

I'm struggling to understand the niche where a single GPU would need this. I guess maybe you could cram 16 of these into a single chassis, each with its own external GPU and each GPU with dedicated storage.

abufrejoval

I am afraid that Anton, and you in turn, may have been somewhat misdirected by HighpointTech's marketing.

First of all, GPUDirect, just as I described, is all about avoiding bounce buffers: buffers that reside in CPU RAM and require the CPU to copy data between two physical regions of mapped memory, one used to access storage, the other GPU RAM.

And that's what GPUDirect does on its own.

Traditional storage operation involves transfers between CPU RAM and the storage adapter: the CPU manages setup, and the transfer itself is mostly DMA, but into CPU RAM.

GPU RAM is typically memory-mapped into the CPU's virtual memory space, so in a bounce-buffer scenario the CPU then copies the data from the storage buffer in CPU RAM to the GPU region via CPU instructions, because, unlike on IBM mainframes, there is nobody to do RAM-to-RAM DMA (likely because the benefit would be near zero, but that's another topic).

That's evidently wasteful, but relatively easy to fix when the storage DMA goes directly to mapped GPU RAM on PCIe. And that's what GPUDirect does, and it requires nothing really new, mostly just driver support.
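The difference between the bounce-buffer path and the direct path can be captured in a toy Python model that counts how often the payload touches host DRAM and how many PCIe transfers it takes. It follows the description above, where the CPU performs the second copy; in many real CUDA stacks a GPU copy engine pulls the data from pinned host memory instead, but the payload still lands in, and is read back out of, CPU RAM. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Traffic:
    """Resources consumed moving one payload from NVMe to GPU memory (toy model)."""
    host_dram_bytes: int = 0  # bytes written to plus read from CPU RAM
    cpu_copy_bytes: int = 0   # bytes moved by CPU load/store instructions
    pcie_transfers: int = 0   # separate DMA/copy operations crossing PCIe


def bounce_buffer_read(size: int) -> Traffic:
    t = Traffic()
    # Step 1: the NVMe controller DMAs the payload into a staging buffer in CPU RAM.
    t.host_dram_bytes += size
    t.pcie_transfers += 1
    # Step 2: the CPU reads the staging buffer and writes it to the GPU's
    # memory-mapped region over PCIe.
    t.host_dram_bytes += size
    t.cpu_copy_bytes += size
    t.pcie_transfers += 1
    return t


def direct_read(size: int) -> Traffic:
    t = Traffic()
    # Single step: the NVMe controller DMAs straight into the GPU's mapped
    # memory (peer-to-peer); host DRAM and CPU cores stay out of the data path.
    t.pcie_transfers += 1
    return t


if __name__ == "__main__":
    size = 64 * 1024 * 1024  # a 64 MiB read
    for name, fn in (("bounce buffer", bounce_buffer_read), ("direct", direct_read)):
        print(name, fn(size))
```

The CPU and OS still set up both paths; the model only counts the data movement that follows.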

It's also what would happen if you plug both this HPT adapter with storage attached and the GPU into the mainboard today.

And before it centered on storage, "GPUDirect" or CPU bypass for MPI, or even shared GPU-to-GPU RAM, has been around for many years now, because it just uses PCIe (or NVLink).

Now, the HPT adapter is really nothing more than a 48-lane PCIe switch, 16 lanes of which go to the mainboard (you could put it into a single lane of PCIe v1, and miners might even have done that at one point, but that's just to show the flexibility of PCIe).
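Dropping the card into a narrower or older slot works because each PCIe link trains to the fastest generation and widest width both ends support. A simplified Python sketch, assuming standard widths (x1 to x16) that both ends can train down to and leaving out PCIe 6.0's different encoding, shows what the 16-lane uplink would be left with in such a slot. The function names are hypothetical.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency, PCIe 1.0 to 5.0.
GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def negotiated_link(dev_gen: int, dev_width: int, slot_gen: int, slot_width: int):
    """Simplified link training: highest generation and widest width both ends share."""
    return min(dev_gen, slot_gen), min(dev_width, slot_width)


def link_gbs(gen: int, width: int) -> float:
    """Per-direction bandwidth in GB/s after line encoding, ignoring protocol overhead."""
    rate, efficiency = GENERATIONS[gen]
    return rate * efficiency * width / 8


if __name__ == "__main__":
    # The switch's x16 PCIe 5.0 uplink in a native slot vs. a x1 PCIe 1.0 slot.
    for slot in ((5, 16), (1, 1)):
        gen, width = negotiated_link(5, 16, *slot)
        print(f"slot Gen{slot[0]} x{slot[1]}: runs as Gen{gen} x{width}, "
              f"{link_gbs(gen, width):.2f} GB/s per direction")
```

In this model only traffic crossing the uplink is squeezed to the negotiated rate; devices behind the switch keep their own link speeds for peer traffic among themselves.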

What you do with it, how you use it, is completely up to you. It doesn't have to be storage nor GPUs at all!

You can hang lots of Ethernet there, accelerators of all sorts (NPU, IPU, FPGA), but also GPUs, even two or three of them, if that's your heart's desire.

You can even put another computer there, if both can agree on who manages the fabric. In fact, Dolphin Interconnect Solutions from Oslo, Norway, has been doing that for decades! NVLink and CXL are designed to make this scale-out easier (and faster), but with a Dolphin software stack to manage it, it has been done for a very long time.

And if you put a GPU on one set of PCIe lanes on the HPT card while you hang storage on another, then yes, GPUDirect data transfers would not necessarily go through the mainboard's PCIe root complex (mostly just a PCIe switch, too), nor would they necessarily*) touch CPU memory, freeing both from contention, because PCIe, unlike PCI, is point-to-point for data transfers. But you need to set up source and target properly.
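Whether a peer-to-peer transfer stays on the card comes down to where the two endpoints meet in the PCIe tree: a transaction climbs to the lowest switch (or root complex) common to both endpoints and then descends. A toy Python model of a hypothetical topology, with the add-in switch on one root port and a NIC on another, illustrates this, along with the staged case from the footnote, where data routed through CPU RAM crosses the intermediate switches twice. One caveat the model ignores: if Access Control Services (ACS) peer-to-peer redirect is enabled on a switch's downstream ports, even same-switch peer traffic is forwarded up to the root complex, which is one reason P2P DMA support depends on switch and platform configuration.

```python
# Hypothetical PCIe tree: each node maps to its parent (None = top of the tree).
TOPOLOGY = {
    "root_complex": None,
    "root_port_a": "root_complex",
    "root_port_b": "root_complex",
    "card_switch": "root_port_a",  # add-in PCIe switch card
    "gpu": "card_switch",
    "nvme0": "card_switch",
    "nic": "root_port_b",
}


def ancestors(node) -> list:
    """The node itself followed by every upstream node up to the top."""
    chain = []
    while node is not None:
        chain.append(node)
        node = TOPOLOGY[node]
    return chain


def p2p_path(src: str, dst: str) -> list:
    """Nodes a transaction visits: up to the lowest common ancestor, then down."""
    up, down = ancestors(src), ancestors(dst)
    common = next(n for n in up if n in down)
    return up[: up.index(common) + 1] + list(reversed(down[: down.index(common)]))


def staged_path(src: str, dst: str) -> list:
    """Route via CPU RAM (reached through the root complex) instead of peer-to-peer."""
    to_host = p2p_path(src, "root_complex")
    from_host = p2p_path("root_complex", dst)
    return to_host + from_host[1:]  # avoid listing the root complex twice


if __name__ == "__main__":
    print(p2p_path("nvme0", "gpu"))     # turns around at card_switch
    print(p2p_path("nvme0", "nic"))     # has to cross the root complex
    print(staged_path("nvme0", "gpu"))  # crosses card_switch and root_port_a twice
```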

The setup, and most likely the file system code that manages the storage, would still run on the CPU, but that's not mandatory. In theory, GPU cores or their ARM support processors could do the job, as long as everybody agrees...

HighpointTech certainly won't rewrite Linux or Windows to enable that, so I am confident in claiming that this is just normal OS code on the CPU... because it should already just work like that: it's just PCIe as it should be.

And there is no reason why you shouldn't be able to cascade several of these HPT/PEX switches behind each other into a nice big fat tree. In fact, that's how a lot of SAN storage boxes looked inside the last time I had a gander; perhaps not a biological tree, but something more akin to a power distribution grid.
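Cascading switches trades host bandwidth for fan-out. A rough Python sketch, assuming hypothetical 48-lane switches that each use one x16 port upstream and split their remaining 32 lanes into two x16 downstream ports, shows how endpoint lanes grow with each level while everything headed for the host still shares one x16 uplink. Peer traffic that turns around inside a subtree never touches that uplink, so the ratio is a worst case for host-bound traffic only.

```python
def cascade(levels: int, switch_lanes: int = 48, port_width: int = 16):
    """Endpoint ports, endpoint lanes, and host-bound oversubscription for a
    uniform tree with `levels` layers of identical switches (toy model).

    Each switch uses one x`port_width` port upstream and splits the rest of
    its lanes into x`port_width` downstream ports.
    """
    if levels < 1:
        raise ValueError("need at least one switch level")
    fanout = (switch_lanes - port_width) // port_width  # downstream ports per switch
    endpoint_ports = fanout ** levels
    endpoint_lanes = endpoint_ports * port_width
    return endpoint_ports, endpoint_lanes, endpoint_lanes / port_width


if __name__ == "__main__":
    for levels in (1, 2, 3):
        ports, lanes, ratio = cascade(levels)
        print(f"{levels} level(s): {ports} x16 endpoints, {lanes} lanes, {ratio:.0f}:1 to host")
```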

You might encounter some OS bugs that way if you go too wild, and it might not satisfy many real-world computational workloads, but it's simply using PCIe the way it was designed, not a new product or even idea.

The main issue with PCIe, even with v5 and 48 lanes, is that it's far too slow for GPU scale-out.

There are good technical reasons why Nvidia designed NVLink ASICs and can sell them at far higher prices than Broadcom/HPT can charge for PCIe switches, not that those are anywhere near as cheap as they used to be before Broadcom bought PLX, the maker of the PEX line.

*) If you were to (foolishly?) map CPU RAM to a memory range that the GPUs and storage adapters behind the HPT switch use for data transfer, that data would go (twice) through all intermediary PCIe switches, including the CPU's root complex, and contend with its RAM access even across various hops in the PCIe fabric: the physical topology and the data movements aren't strictly tied together.