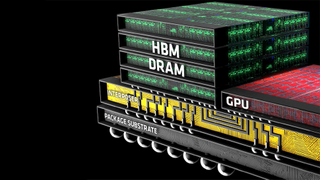

HBM3E

Latest about HBM3E

Micron puts stackable 24GB HBM3E chips into volume production

By Matthew Connatser

Micron's HBM3E is entering volume production, bringing stackable 24GB chips capable of 1.2TB/s of bandwidth to market.

SK Hynix says new high-bandwidth memory for GPUs is on track for 2024; HBM4 with a 2048-bit interface and 1.5TB/s per stack is on the way

By Anton Shilov

HBM4 memory with a 2048-bit interface on track for production in 2026.
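For a rough sense of what these interface widths mean, the per-pin data rate can be estimated by dividing a stack's bandwidth by its interface width. The sketch below assumes decimal units (1 TB/s = 10^12 bytes/s), that the quoted bandwidth is spread evenly across every data pin, and, for the HBM3E comparison, the 1024-bit per-stack interface used through HBM3E. The function name `per_pin_gbps` is hypothetical.

```python
# Back-of-the-envelope per-pin data rate for an HBM stack (hypothetical helper).
# Assumes decimal units (1 TB/s = 10**12 bytes/s) and that the quoted
# bandwidth is spread evenly across every data pin of the interface.

def per_pin_gbps(stack_tb_per_s: float, interface_bits: int) -> float:
    """Return the per-pin data rate in Gb/s for a given stack bandwidth."""
    bits_per_second = stack_tb_per_s * 1e12 * 8  # TB/s -> bit/s
    return bits_per_second / interface_bits / 1e9  # bit/s per pin -> Gb/s


# HBM4 figures from the headline: 2048-bit interface, 1.5 TB/s per stack.
hbm4 = per_pin_gbps(1.5, 2048)
# HBM3E comparison: Micron's 1.2 TB/s figure, assuming a 1024-bit interface.
hbm3e = per_pin_gbps(1.2, 1024)

print(f"HBM4:  {hbm4:.2f} Gb/s per pin")
print(f"HBM3E: {hbm3e:.2f} Gb/s per pin")
```

Under these assumptions, HBM4 reaches its higher per-stack bandwidth at roughly 5.9 Gb/s per pin, compared with about 9.4 Gb/s per pin for a 1.2TB/s HBM3E stack. In other words, the wider interface does most of the work.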

China's CXMT reportedly aims to make HBM memory for AI chips

By Anton Shilov

CXMT to compete against Micron, Samsung, and SK Hynix for lucrative HBM memory market, says report.

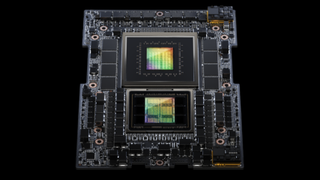

Nvidia reportedly races to secure memory supply for next-gen H200 AI GPUs

By Anton Shilov

Nvidia allegedly pre-purchases HBM3E memory from Micron and SK Hynix, says report.

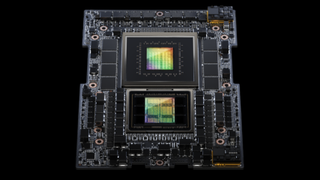

AWS and Nvidia build a supercomputer with 16,384 Superchips

By Anton Shilov

Amazon Web Services to offer Nvidia-powered supercomputing infrastructure for generative AI, including a 65 ExaFLOPS AI supercomputer.

HBM4 memory to double speeds in 2026

By Anton Shilov

HBM4 to revolutionize memory market, says TrendForce.

Nvidia's Grace Hopper GH200 powers 1 ExaFLOPS Jupiter supercomputer

By Anton Shilov

Nvidia's Grace Hopper now powers 40 AI supercomputers globally.

21 ExaFLOPS Isambard-AI supercomputer uses 5,448 GH200 Grace Hopper Superchips

By Anton Shilov

The U.K.'s Isambard-AI supercomputer boasts 200 PetaFLOPS for HPC and 21 ExaFLOPS for AI workloads.
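The headline figures also allow a quick sanity check of per-superchip AI throughput. This sketch simply divides each system's quoted AI ExaFLOPS by its superchip count: 21 ExaFLOPS across 5,448 GH200 Superchips for Isambard-AI, and 65 ExaFLOPS across 16,384 Superchips for the AWS/Nvidia system (assuming the 65 ExaFLOPS machine is the same 16,384-Superchip system). It assumes an even split and ignores any other hardware in either system; the helper `per_chip_petaflops` is hypothetical.

```python
# Rough per-superchip AI throughput implied by the headline figures.
# Assumes the quoted AI ExaFLOPS are split evenly across all superchips
# and ignores any other accelerators or CPUs in each system.

EXA = 1e18
PETA = 1e15


def per_chip_petaflops(total_exaflops: float, chip_count: int) -> float:
    """Divide a system-level AI ExaFLOPS figure evenly across its chips (hypothetical helper)."""
    return total_exaflops * EXA / chip_count / PETA


# (AI ExaFLOPS, superchip count) as quoted in the headlines.
systems = {
    "Isambard-AI (5,448 GH200)": (21, 5_448),
    "AWS/Nvidia (16,384 Superchips)": (65, 16_384),
}

for name, (exaflops, chips) in systems.items():
    print(f"{name}: ~{per_chip_petaflops(exaflops, chips):.2f} PFLOPS per superchip")
```

Both systems work out to a little under 4 PFLOPS of AI throughput per superchip, which suggests the two headline numbers are quoted on a comparable basis.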

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.