Samsung Expects HBM4 Memory to Arrive by 2025

HBM3E is good, but HBM4 will be even better.

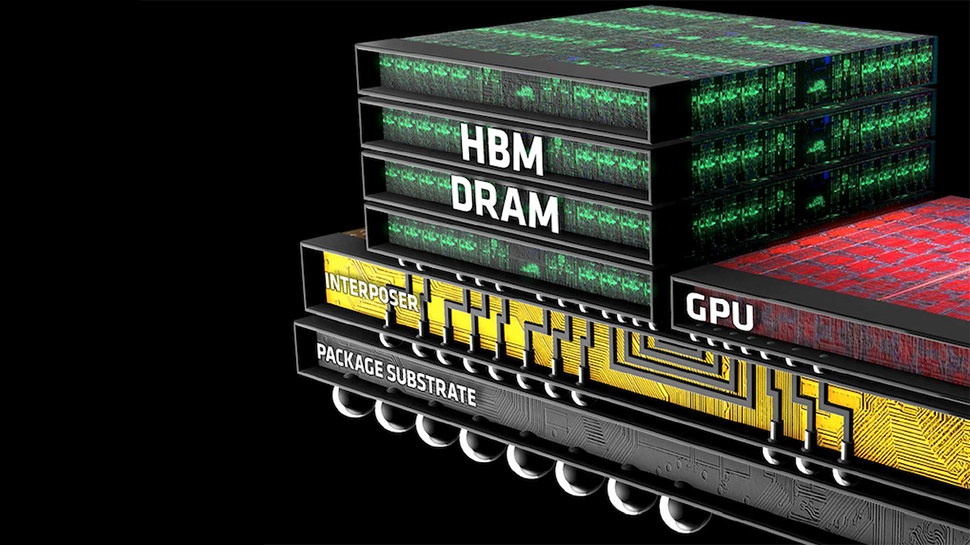

We've heard about HBM4 memory several times over the past few months, and this week Samsung revealed that it expects HBM4 to be introduced by 2025. The new memory will feature a 2048-bit interface per stack, twice as wide as HBM3's 1024-bit.

"Looking ahead, HBM4 is expected to be introduced by 2025 with technologies optimized for high thermal properties in development, such as non-conductive film (NCF) assembly and hybrid copper bonding (HCB)," SangJoon Hwang, EVP and Head of DRAM Product and Technology Team at Samsung Electronics, wrote in a company blog post.

Although Samsung expects HBM4 to be introduced by 2025, volume production will probably start in 2025–2026, as the industry will need considerable preparation for the technology. In the meantime, Samsung will offer customers its HBM3E memory stacks with a 9.8 GT/s data transfer rate, which provides bandwidth of 1.25 TB/s per stack.
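The 1.25 TB/s figure follows directly from the data rate and the interface width. Below is a minimal Python sketch of that arithmetic. It assumes the quoted 9.8 GT/s applies to each of the 1,024 data pins and ignores ECC and control lines. The helper name is hypothetical, and so is the 2048-bit case, since HBM4 data rates have not been announced.

```python
def stack_bandwidth_gbs(data_rate_gtps: float, interface_bits: int) -> float:
    """Peak per-stack bandwidth in GB/s (hypothetical helper).

    Each data pin carries one bit per transfer, so bits per second equals
    transfers per second times the interface width; dividing by 8 gives bytes.
    """
    return data_rate_gtps * interface_bits / 8


# Samsung's HBM3E figures from the article: 9.8 GT/s over a 1024-bit interface.
hbm3e = stack_bandwidth_gbs(9.8, 1024)
print(f"HBM3E, 9.8 GT/s, 1024-bit: {hbm3e:.1f} GB/s")  # 1254.4 GB/s, about 1.25 TB/s

# Hypothetical: the same 9.8 GT/s pin speed on HBM4's 2048-bit interface.
# This data rate is an assumption for illustration, not an announced spec.
hbm4_guess = stack_bandwidth_gbs(9.8, 2048)
print(f"2048-bit at the same rate: {hbm4_guess:.1f} GB/s")  # 2508.8 GB/s
```

Doubling the interface width doubles peak bandwidth at a given pin speed, which is why HBM4 can raise bandwidth without pushing per-pin data rates much higher.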

Earlier this year Micron revealed that 'HBMNext' memory was going to emerge around 2026, providing per-stack capacities between 32GB and 64GB and peak bandwidth of 2 TB/s per stack or higher, a marked increase from HBM3E's 1.2 TB/s per stack. To build a 64GB stack, one will need a 16-Hi stack with 32Gb memory devices. Although 16-Hi stacks are supported even by the HBM3 specification, nobody has announced such products so far, and it looks like such dense stacks will only hit the market with HBM4.
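Stack capacity is the number of stacked DRAM devices multiplied by the density of each device. DRAM densities are quoted in gigabits, so a 64GB 16-Hi stack works out to 32Gb devices. Below is a small sketch with hypothetical helper names. The 8-Hi configuration for the 32GB end of Micron's range is an assumption, not something Micron has stated.

```python
def stack_capacity_gb(layers: int, device_gbit: int) -> float:
    """Capacity of an HBM stack in gigabytes (hypothetical helper).

    layers: number of stacked DRAM devices (e.g. 16 for a 16-Hi stack)
    device_gbit: density of each DRAM device in gigabits (Gb)
    """
    return layers * device_gbit / 8  # 8 bits per byte


def device_density_gbit(target_gb: float, layers: int) -> float:
    """Per-device density in Gb needed to reach target_gb with the given layer count."""
    return target_gb * 8 / layers


print(stack_capacity_gb(16, 32))    # 64.0 GB: 16-Hi stack of 32Gb devices
print(stack_capacity_gb(8, 32))     # 32.0 GB: assumed 8-Hi stack of 32Gb devices
print(device_density_gbit(64, 16))  # 32.0 Gb per device for a 64GB 16-Hi stack
```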

To produce HBM4 memory stacks, including 16-Hi stacks, Samsung will need to perfect a couple of new technologies mentioned by SangJoon Hwang. One of them is NCF (non-conductive film), a polymer layer that insulates TSVs at their solder points and protects them from mechanical shock. The other is HCB (hybrid copper bonding), a bonding technology that uses copper conductors and an oxide-film insulator instead of conventional solder to minimize the distance between DRAM devices and to enable the smaller bumps required for a 2048-bit interface.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

Diogene7 I wish that more resources would be allocated to bring standalone emerging Non-Volatile Memory (NVM) MRAM to High Volume Manufacturing (HVM).

The European research center IMEC seems to do a great job with their VG-SOT-MRAM concept.

An affordable standalone low power, low latency (~ 1ns) NVM 64Gbit (8GB) VG-SOT-MRAM die chip used as both DRAM and storage would open so many new opportunities, especially in IoT devices, smartphones,…

We badly need this type of technology to be brought to HVM, and the sooner, the better…

jp7189 According to Semiconductor Engineering, 8 stacks of HBM3 need up to 13,000 traces. Will moving from 1024-bit HBM3 to 2048-bit HBM4 double the traces?

InvalidError

jp7189 said: According to semiengineering 8 stacks of HBM3 need up to 13,000 traces. Will moving from 1024 HBM3 to 2048 HBM4 double the traces?

A stack of HBM is 1024 data lines + ECC + control lines + strobe lines + clocks + ancillary signals, and you need all of those for each stack. If you make each stack twice as wide, you need twice as much of nearly everything and can expect the total trace count to roughly double.

Diogene7 said: An affordable standalone low power, low latency (~ 1ns) NVM 64Gbit (8GB) VG-SOT-MRAM die chip used as both DRAM and storage would open so many new opportunities, especially in IoT devices, smartphones,…

Except it won't. The bloatware on smartphones, including always-on sensors, Wi-Fi, 5G, BT, etc., consumes a lot more power than DRAM does, so you won't gain much smartphone battery life from it. Last I read, MRAM has only ~1M cycles of endurance, which means you can expect the memory to fail within weeks if you used it as a DRAM replacement. Most IoT devices don't need enough *RAM for RAM power draw to have any meaningful impact on battery life. Things like temperature sensors don't even need any RAM: you just write parameters from SRAM to EEPROM once the config phase is done and power off between timer intervals or trigger events, like pre-IoT battery-operated sensors did.