Micron's new ultra-fast memory to power Nvidia's next-gen AI GPUs — 24GB HBM3E chips put into production for H200 AI GPU

36GB coming later

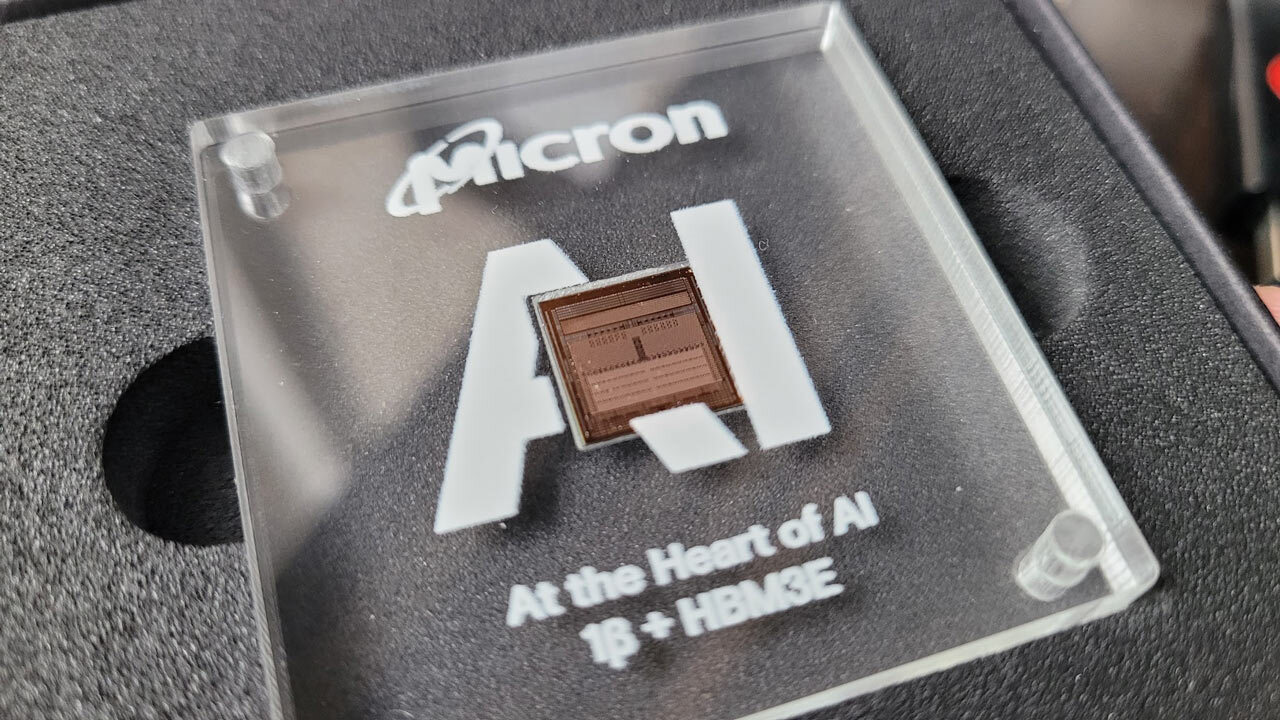

Micron announced today that it is starting volume production of its HBM3E memory, the company's latest memory for datacenter- and AI-class GPUs. In particular, Micron says its HBM3E is being used in Nvidia's upcoming H200, which is slated to launch in the second quarter of the year with six HBM3E memory chips. Micron also said it would detail its upcoming 36GB HBM3E chips in March at Nvidia's GTC conference.

Compared to regular HBM3, HBM3E boosts bandwidth per chip from 1TB/s to up to 1.2TB/s, a modest but noticeable jump in performance. HBM3E also increases max capacity per chip to 36GB, though Micron's HBM3E for Nvidia's H200 will be 'just' 24GB per chip, on par with HBM3's maximum. These chips also use eight-high stacks, rather than the 12-high maximum HBM3E can theoretically offer. Micron claims its HBM3E consumes 30% less power than the HBM3E implementations of its competitors, Samsung and SK hynix.
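To put those figures in perspective, here is a quick back-of-the-envelope sketch in Python. It uses only the numbers quoted above; the variable and function names are ours, and the totals are simple sums of the raw chip capacity, which may not match the usable memory Nvidia ultimately advertises for H200.

```python
# Back-of-the-envelope math using only figures quoted in the article.
# All names here are illustrative, not vendor terminology.

CHIPS_PER_H200 = 6        # six HBM3E chips per H200, per Micron
GB_PER_CHIP_NOW = 24      # capacity of the chips entering volume production
GB_PER_CHIP_LATER = 36    # capacity of the chips Micron will detail in March
HBM3_TBPS = 1.0           # quoted HBM3 bandwidth
HBM3E_TBPS = 1.2          # quoted HBM3E bandwidth ("up to")


def percent_gain(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100


# Raw capacity if all six chips are 24GB parts.
raw_capacity_gb = CHIPS_PER_H200 * GB_PER_CHIP_NOW

# Generational bandwidth uplift, HBM3 to HBM3E.
bandwidth_gain = percent_gain(HBM3_TBPS, HBM3E_TBPS)

# Per-chip capacity uplift from the 24GB parts to the 36GB parts.
capacity_gain = percent_gain(GB_PER_CHIP_NOW, GB_PER_CHIP_LATER)

print(f"Raw HBM3E capacity across six chips: {raw_capacity_gb}GB")
print(f"HBM3 to HBM3E bandwidth gain: {bandwidth_gain:.0f}%")
print(f"24GB to 36GB capacity gain per chip: {capacity_gain:.0f}%")
```

In other words, the bandwidth jump works out to about 20%, while the move from 24GB to 36GB chips is a 50% capacity increase per chip.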

Micron didn't say whether it would be the only company providing Nvidia with HBM3E for H200, and it probably won't be. There is so much demand for Nvidia's AI GPUs that the company reportedly pre-purchased $1.3 billion worth of HBM3E memory from both Micron and SK hynix, even though SK hynix's memory offers only 1.15TB/s of bandwidth and, according to Micron, uses more power. For Nvidia, however, being able to sell a slightly less performant product is more than worth it, given the demand.

Micron won't be able to claim it has the fastest HBM3E, however, since Samsung's Shinebolt HBM3E memory is rated for 1.225TB/s. Still, Micron appears to be the first to reach volume production: as far as we can tell, neither Samsung nor SK hynix has said it has reached that milestone.
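For a sense of how close the three vendors are, the short Python sketch below ranks the rated bandwidths quoted in this article and expresses each as a percentage difference from Micron's figure. The dictionary and variable names are ours, and Micron's number is an "up to" rating, so treat the gaps as rough.

```python
# Rated HBM3E bandwidth per chip in TB/s, as quoted in the article.
# Micron's figure is an "up to" rating; names here are illustrative.
rated_tbps = {
    "Micron": 1.2,
    "SK hynix": 1.15,
    "Samsung": 1.225,
}

baseline = rated_tbps["Micron"]

# Percentage difference of each vendor's rating relative to Micron's.
gap_vs_micron = {
    vendor: (tbps - baseline) / baseline * 100
    for vendor, tbps in rated_tbps.items()
}

# Vendors ordered from highest to lowest rated bandwidth.
ranking = sorted(rated_tbps, key=rated_tbps.get, reverse=True)

for vendor in ranking:
    print(f"{vendor}: {rated_tbps[vendor]}TB/s ({gap_vs_micron[vendor]:+.1f}% vs. Micron)")
```

On these ratings, Samsung leads Micron by roughly 2% and SK hynix trails by roughly 4%, which helps explain why Nvidia can mix suppliers without much of a performance penalty.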

AMD might also be a customer of Micron's HBM3E. The company's CTO Mark Papermaster has said AMD has "architected for HBM3E," implying that a version of its MI300 GPU or a next-generation product is set to use the same HBM3E found in H200. It's not clear whether it will be Micron's HBM3E in particular, however.

Micron also says it will share details about its 36GB HBM3E chips at Nvidia's GTC conference in March. The company says these chips will have more than 1.2TB/s of bandwidth, better power efficiency, and 12-high stacks, up from eight. Sampling for these 36GB modules also begins in March.

Matthew Connatser is a freelance writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.