ChatGPT's ancestor GPT-2 jammed into 1.25GB Excel sheet — LLM runs inside a spreadsheet that you can download from GitHub

Release shines a light on the Transformer architecture behind most LLMs.

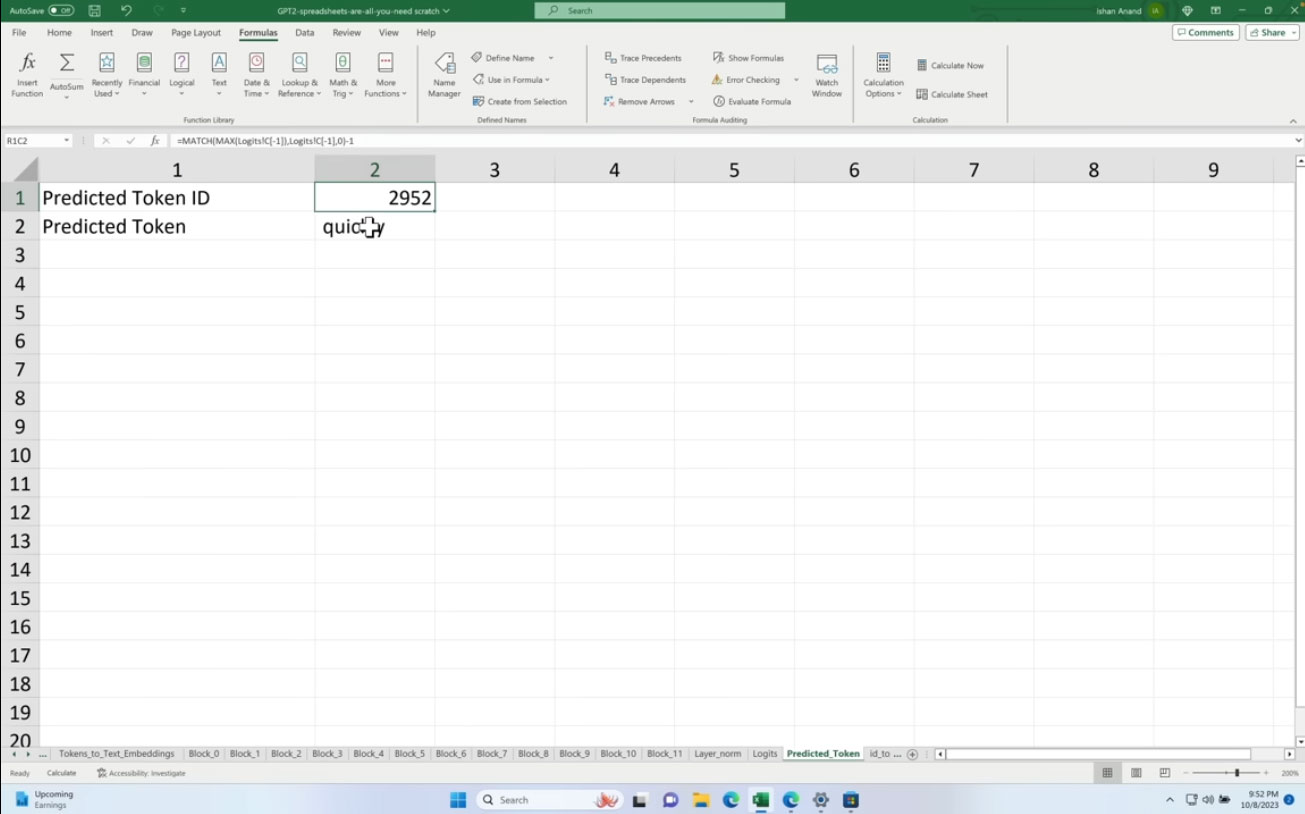

Software developer and self-confessed spreadsheet addict Ishan Anand has jammed GPT-2 into Microsoft Excel. More astonishingly, it works – providing insight into how large language models (LLMs) work, and how the underlying Transformer architecture goes about its smart next-token prediction. "If you can understand a spreadsheet, then you can understand AI," boasts Anand. The 1.25GB spreadsheet has been made available on GitHub for anyone to download and play with.

Naturally, this spreadsheet implementation of GPT-2 lags well behind the LLMs available in 2024, but GPT-2 was state-of-the-art and grabbed plenty of headlines in 2019. It is important to remember that GPT-2 is not something to chat with, as it predates the 'chat' era: ChatGPT grew out of work in 2022 on prompting GPT-3 conversationally. Moreover, Anand uses the GPT-2 Small model here, so the XLSB (Microsoft Excel Binary) file holds 124 million parameters, whereas the full version of GPT-2 used 1.5 billion parameters (GPT-3 later raised the bar to as many as 175 billion).
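The parameter counts above can be reproduced from the model's shape. The sketch below assumes the published GPT-2 configuration (a 50,257-token vocabulary, a 1,024-position context, an MLP four times the model width, and an output layer that reuses the token-embedding matrix). `gpt2_param_count` is a hypothetical helper written for illustration, not something taken from Anand's spreadsheet.

```python
def gpt2_param_count(d_model: int, n_layers: int,
                     vocab: int = 50257, n_ctx: int = 1024) -> int:
    """Count GPT-2 weights, assuming the standard architecture with tied output embeddings."""
    token_emb = vocab * d_model          # one learned vector per vocabulary token
    pos_emb = n_ctx * d_model            # one learned vector per input position
    # Per Transformer block:
    #   attention: Q/K/V projection (d x 3d + 3d) and output projection (d x d + d)
    #   MLP: expand to 4d (d x 4d + 4d) and project back (4d x d + d)
    #   two LayerNorms, each with a gain and a bias vector (2 x 2d)
    attn = (d_model * 3 * d_model + 3 * d_model) + (d_model * d_model + d_model)
    mlp = (d_model * 4 * d_model + 4 * d_model) + (4 * d_model * d_model + d_model)
    norms = 2 * (2 * d_model)
    per_block = attn + mlp + norms
    final_norm = 2 * d_model             # LayerNorm after the last block
    return token_emb + pos_emb + n_layers * per_block + final_norm

small = gpt2_param_count(d_model=768, n_layers=12)   # GPT-2 Small
xl = gpt2_param_count(d_model=1600, n_layers=48)     # largest GPT-2 (XL)
print(f"GPT-2 Small: {small:,}")  # GPT-2 Small: 124,439,808
print(f"GPT-2 XL:    {xl:,}")     # GPT-2 XL:    1,557,611,200
```

Both totals line up with the headline figures: about 124 million for GPT-2 Small and roughly 1.5 billion (1.56 billion before rounding) for the full-size model.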

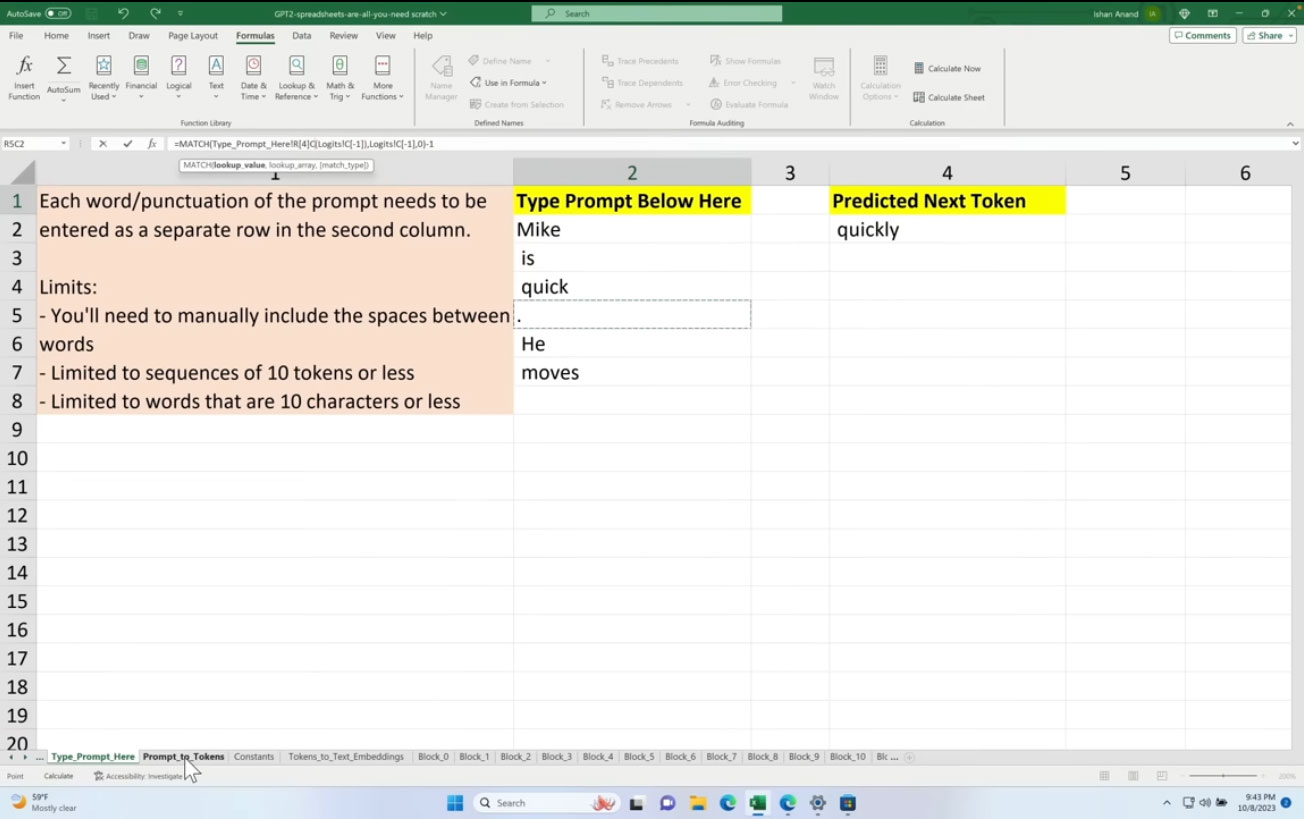

GPT-2 is built around 'next-token prediction': the Transformer-based language model extends an input with the most likely next token in the sequence. This spreadsheet can handle just 10 tokens of input, which is tiny compared to the 128,000 tokens that GPT-4 Turbo can handle. However, it is still good for a demo, and Anand claims that his "low-code introduction" is an ideal grounding in LLMs for the likes of tech execs, marketers, product managers, AI policymakers and ethicists, as well as for developers and scientists who are new to AI. Anand asserts that this same Transformer architecture remains "the foundation for OpenAI’s ChatGPT, Anthropic’s Claude, Google’s Bard/Gemini, Meta’s Llama, and many other LLMs."
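The final "pick the most likely next token" step can be illustrated with a toy example. This sketch assumes a tiny made-up vocabulary and a hypothetical `toy_logits` function standing in for the whole Transformer; it turns the raw scores into probabilities with softmax and then takes the top-scoring token (greedy decoding). `MAX_TOKENS` simply rejects prompts over the 10-token cap mentioned above and is not meant to reflect how the spreadsheet itself enforces it.

```python
import math

MAX_TOKENS = 10  # mirrors the 10-token input cap described above

VOCAB = ["Mike", "is", "quick", ".", "He", "moves"]  # made-up toy vocabulary

def toy_logits(token_ids: list[int]) -> list[float]:
    """Hypothetical stand-in for the Transformer: one raw score per vocabulary entry.
    Here it just favours the entry that follows the last token in VOCAB order."""
    last = token_ids[-1]
    scores = [0.0] * len(VOCAB)
    scores[(last + 1) % len(VOCAB)] = 3.0
    return scores

def softmax(scores: list[float]) -> list[float]:
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_next(token_ids: list[int]) -> int:
    if len(token_ids) > MAX_TOKENS:
        raise ValueError(f"prompt longer than {MAX_TOKENS} tokens")
    probs = softmax(toy_logits(token_ids))
    # Greedy choice: index of the highest-probability token
    return max(range(len(probs)), key=lambda i: probs[i])

prompt = [0, 1]                              # "Mike is"
next_id = predict_next(prompt)
print(VOCAB[next_id])                        # quick
```

Calling `predict_next` repeatedly and appending each result to the prompt is the simplest way to generate longer text; many deployed systems sample from the probabilities instead of always taking the top token.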

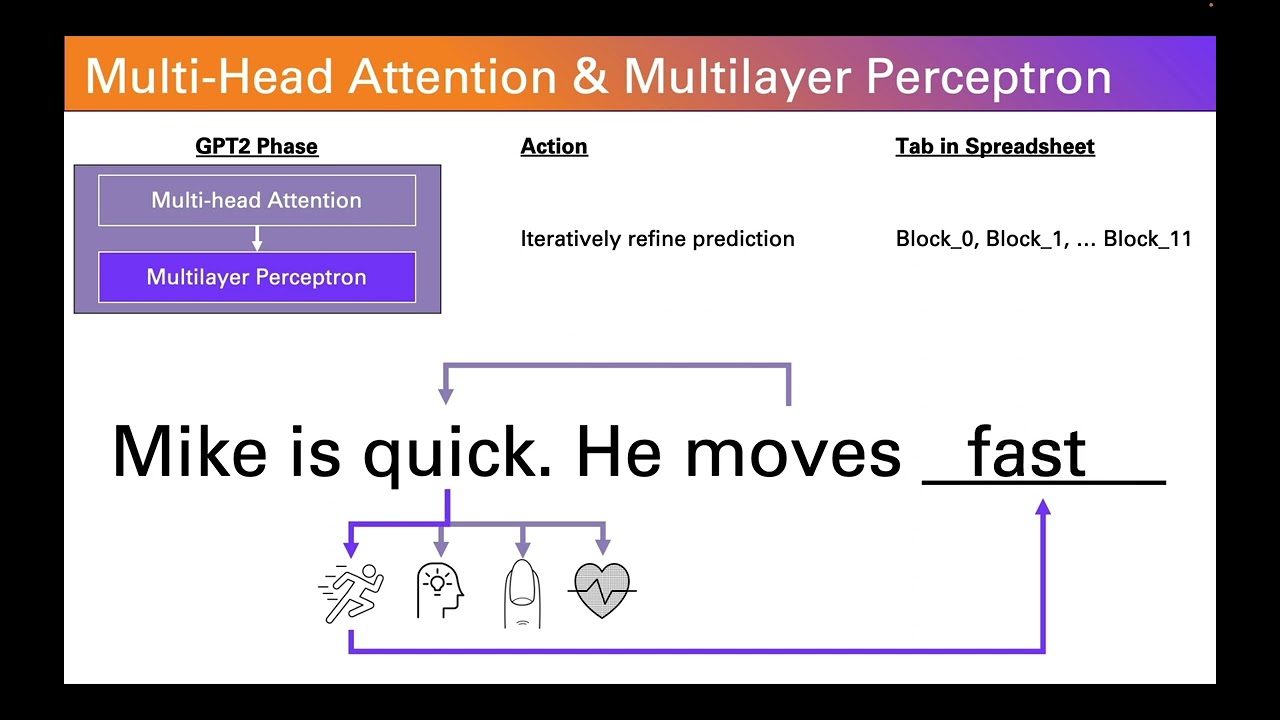

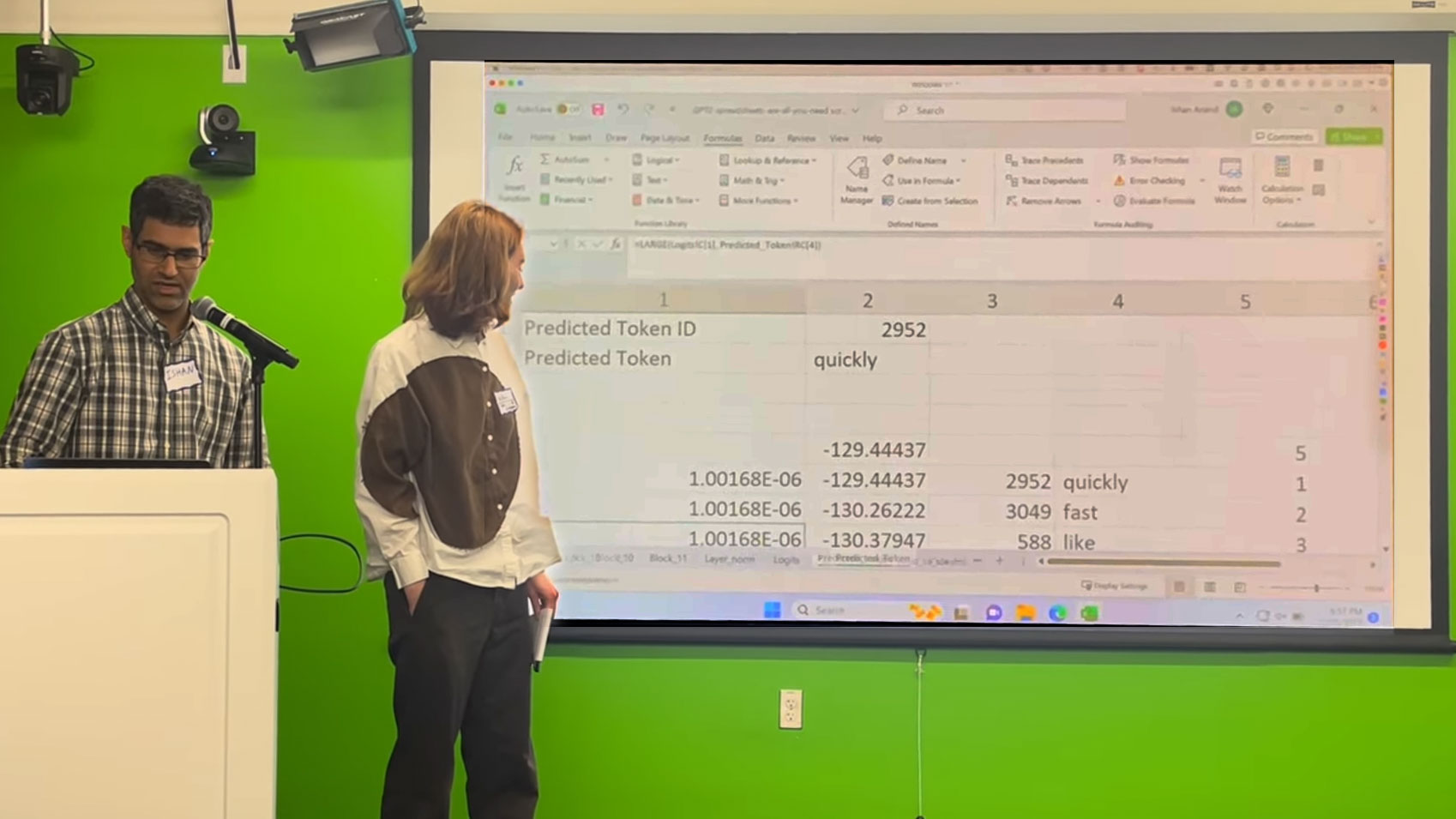

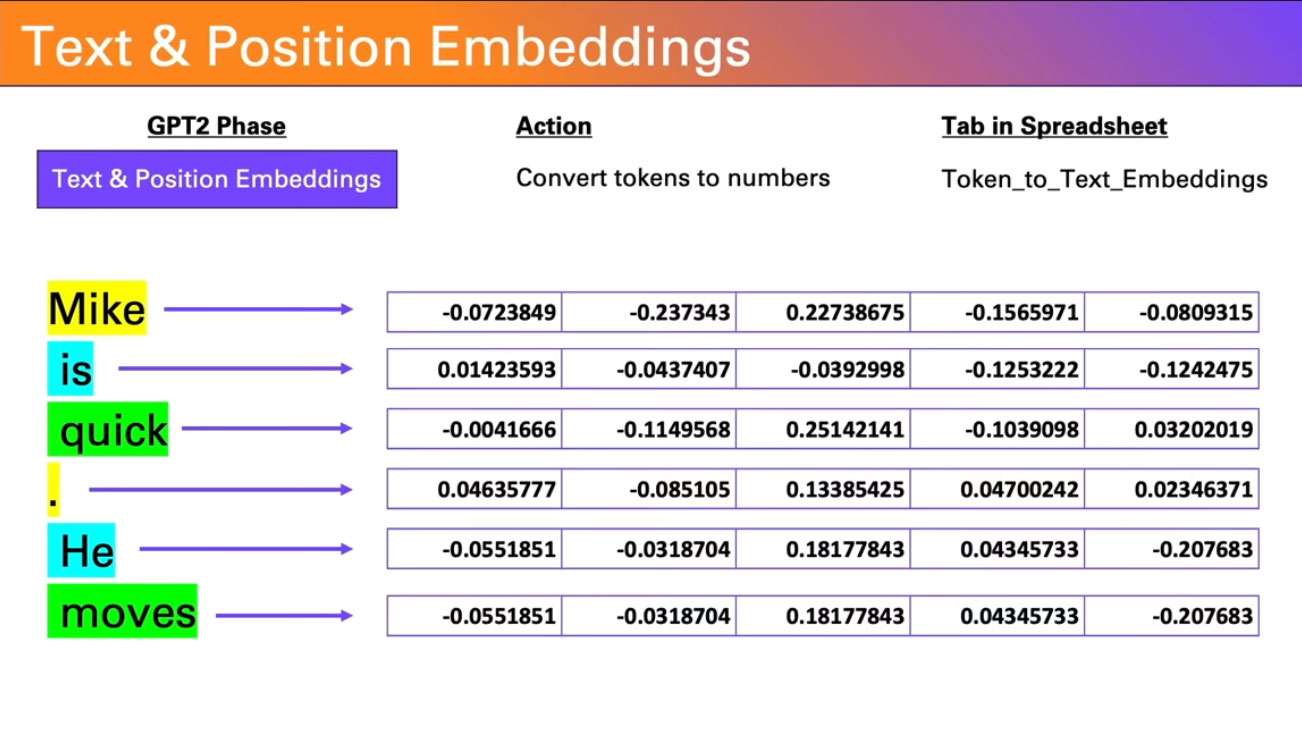

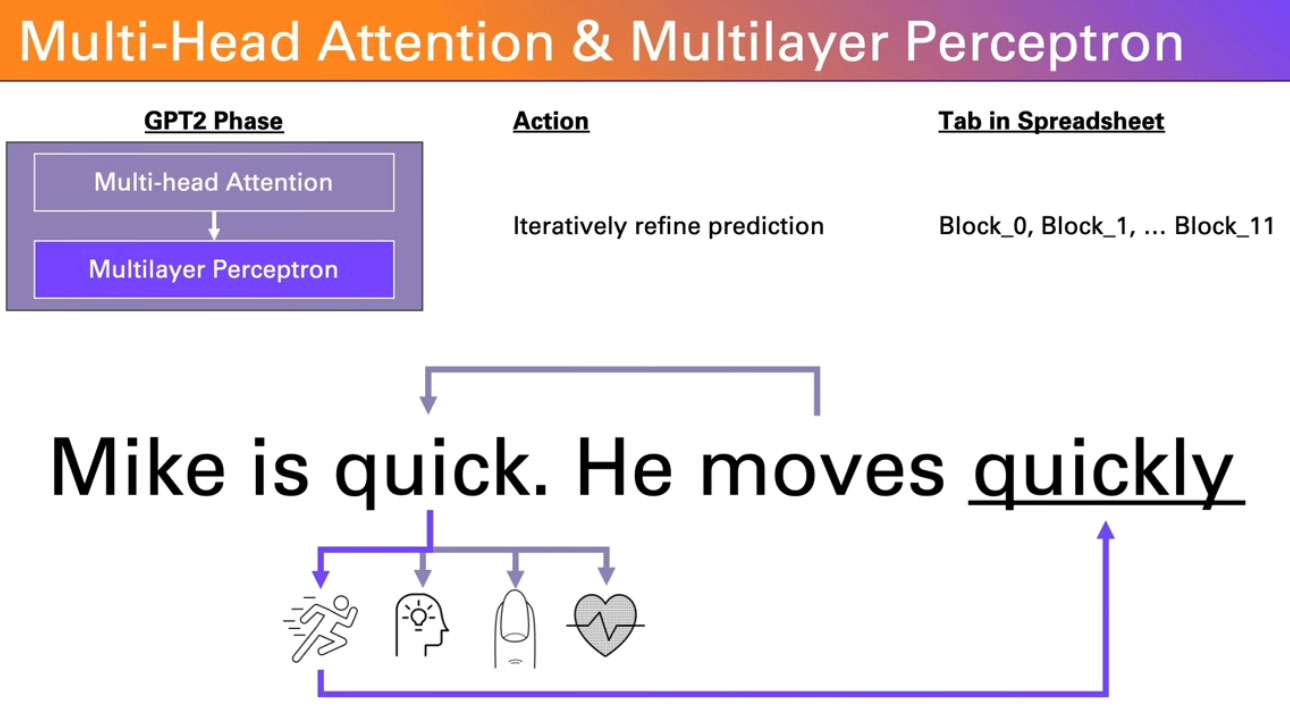

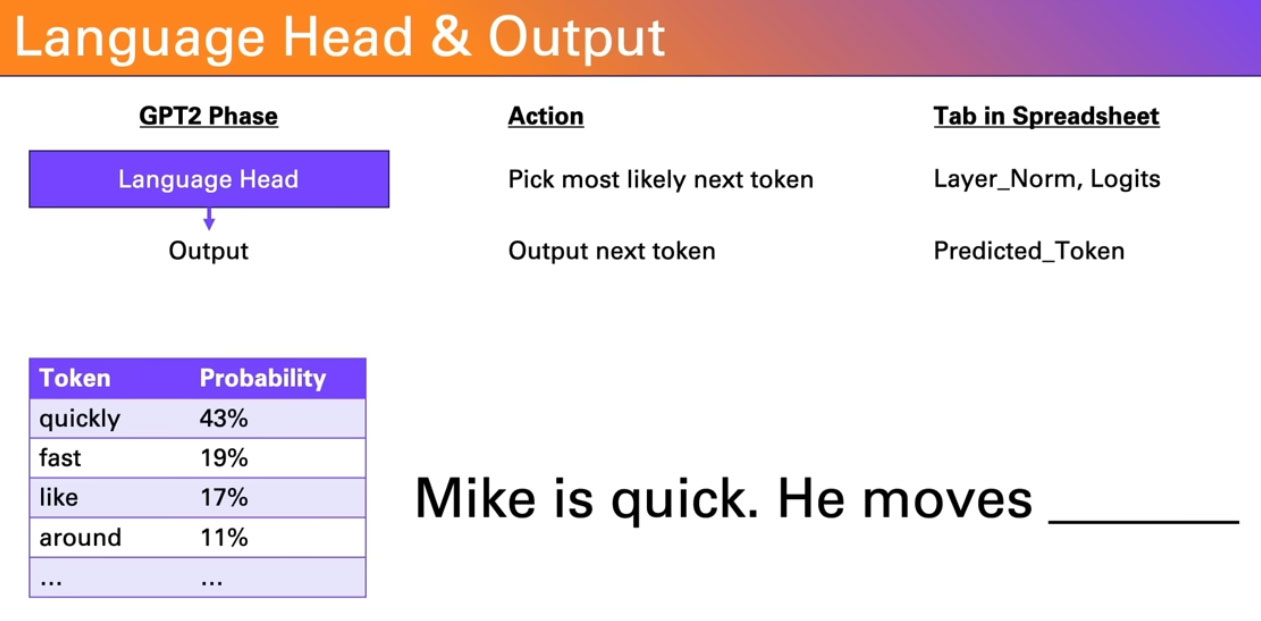

Above you can see Anand explain his GPT-2 spreadsheet implementation. In the multi-sheet workbook, the first sheet contains whatever prompt you want to input (but remember the 10-token restriction). He then talks us through tokenization, text positions and weightings, iterative refinement of the next-word prediction, and finally picking the output token – the predicted next word of the sequence.
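The "text positions and weightings" steps correspond, in a standard GPT-2, to adding a learned position vector to each token's embedding and then letting each position take a weighted average over itself and earlier positions (causal self-attention). The pure-Python sketch below uses tiny hand-made vectors and deliberately skips the learned query/key/value projections, multiple heads, LayerNorm and MLP of a real block, so treat it as an illustration of the mechanism, not as Anand's spreadsheet formulas.

```python
import math

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def embed(token_ids, tok_emb, pos_emb):
    """Input to the first block: each token's embedding plus the embedding of its position."""
    return [add(tok_emb[t], pos_emb[i]) for i, t in enumerate(token_ids)]

def causal_attention(xs):
    """Single-head self-attention with identity Q/K/V projections (a simplification).
    Position i attends only to positions 0..i, so it never sees later tokens."""
    d = len(xs[0])
    out, all_weights = [], []
    for i, q in enumerate(xs):
        scores = [dot(q, k) / math.sqrt(d) for k in xs[: i + 1]]  # scaled dot products
        w = softmax(scores)                                       # attention weights
        mixed = [sum(w[j] * xs[j][c] for j in range(i + 1)) for c in range(d)]
        out.append(mixed)
        all_weights.append(w)
    return out, all_weights

tok_emb = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}  # toy 2-d token vectors
pos_emb = [[0.1, 0.0], [0.0, 0.1], [0.1, 0.1]]         # toy position vectors
x = embed([0, 1, 2], tok_emb, pos_emb)
y, weights = causal_attention(x)
for i, w in enumerate(weights):
    print(i, [round(v, 3) for v in w])
```

In GPT-2 Small the vectors are 768 numbers wide, each attention layer has 12 heads with learned projections, and 12 blocks (attention followed by an MLP) are stacked; passing the vectors through that stack roughly corresponds to the "iterative refinement" step Anand walks through.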

As mentioned above, GPT-2 Small is a relatively compact model. Yet even with a model that might not qualify as 'large' by 2024 standards, Anand is stretching the abilities of the Excel application. The developer warns against using this Excel file on a Mac (frequent crashes and freezes) or loading it into one of the cloud spreadsheet apps, as it won't work there right now. The latest version of Excel is also recommended. Remember, this spreadsheet is largely an educational exercise, and a fun one for Anand. Lastly, one benefit of using Excel on your PC is that this LLM runs 100% locally, with no API calls to the cloud.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


BX4096

This video should be a mandatory watch for everyone who rants about the "AI" being "smarter" than the humans, "stealing" data from creators, or being "sentient" (as that Google dummy claimed).

stoppostingcrapnews

BX4096 said: This video should be a mandatory watch for everyone who rants about the "AI" being "smarter" than the humans, "stealing" data from creators, or being "sentient" (as that Google dummy claimed).

Yes, I'm sure we should judge human intelligence based on its ancestors as well like Eutherians. I'm sure you can explain all the emergent properties of LLMs like learning languages it wasn't taught and the ability to pass theory of mind tests that wasn't in the training data.

I'm also sure you know exactly how the human mind works which is why you're so certain technology cannot replicate it. /s

jimbo007

BX4096 said: This video should be a mandatory watch for everyone who rants about the "AI" being "smarter" than the humans, "stealing" data from creators, or being "sentient" (as that Google dummy claimed).

No, it only shows that even an Excel spread sheet can do so many things a fraction of which you may hope to understand.

What a load of crap; however, we will continue like sheep to blindly allow these mega companies to invade and analyse all our lives and data and pigeonhole us, until one day you will wake up not having any control or say in how you live, think or work.

None of this is necessary. None of these AI machines can ever hope to be a tenth as clever or as intuitive as a human brain, yet we are all blindly jumping into the rabbit hole because big data companies tell us we need it: create the need, then supply it. Nvidia did very well.

cknobman

stoppostingcrapnews said: Yes, I'm sure we should judge human intelligence based on its ancestors as well like Eutherians. I'm sure you can explain all the emergent properties of LLMs like learning languages it wasn't taught and the ability to pass theory of mind tests that wasn't in the training data.

I'm also sure you know exactly how the human mind works which is why you're so certain technology cannot replicate it. /s

Or he could be saying that the human mind is so complex that technology is not currently able, and possibly never will be able, to replicate it.

AI is just an imitation of intelligence. All its "decisions" are based on what we understand intelligence to be, and therefore its output is a direct reflection of who creates it.

NinoPino

jimbo007 said: No, it only shows that even an Excel spread sheet can do so many things a fraction of which you may hope to understand.

It's like saying that even a pen can do so many things a fraction of which you may hope to understand.