Chinese chipmaker launches 14nm AI processor that's 90% cheaper than GPUs — $140 chip's older node sidesteps US sanctions

If there's a way to sidestep sanctions, you know China is on that beat.

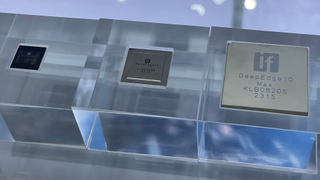

Aiming at the high-end hardware that dominates the AI market and has prompted US export bans on GPUs bound for China, Chinese manufacturer Intellifusion is introducing "DeepEyes" AI boxes with a touted AI performance of 48 TOPS for 1000 yuan, or roughly $140. Using an older 14nm node and (most likely) an ASIC is another way for China to sidestep sanctions and remain competitive in the AI market.

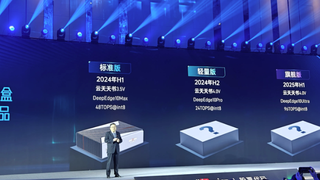

The first "Deep Eyes" AI box for 2024 uses a DeepEdge10Max SoC rated at 48 TOPS of INT8 performance. The 2024 H2 Deep Eyes box will use a DeepEdge10Pro with up to 24 TOPS, and finally, the 2025 H1 Deep Eyes box aims for a considerable performance boost with the DeepEdge10Ultra's rating of up to 96 TOPS. Pricing for these upcoming models is unclear. Still, if the company can hold the starting ~1000 yuan price long-term, Intellifusion may achieve its goal of "90% cheaper AI hardware" that still "covers 90% of scenarios".
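To put that pricing in perspective, here is a rough back-of-the-envelope sketch using only the article's numbers: the ~1000 yuan (roughly $140) price and the DeepEdge10Max's 48 TOPS rating. It assumes that price applies to the first box only, and the "implied baseline" is purely illustrative, since Intellifusion hasn't said which hardware its 90% figure is measured against.

```python
# Back-of-the-envelope sketch using only figures quoted in the article.
# Assumption: the ~1000 yuan price applies to the DeepEdge10Max box;
# prices for the Pro and Ultra boxes have not been announced.

PRICE_YUAN = 1000   # launch price quoted by Intellifusion
PRICE_USD = 140     # the article's rough dollar conversion
TOPS_MAX = 48       # DeepEdge10Max peak INT8 rating


def cost_per_tops(price: float, tops: float) -> float:
    """Return price divided by peak TOPS (lower is better)."""
    return price / tops


def implied_baseline(price: float, discount: float) -> float:
    """Hypothetical helper: price of a comparison system if `price` is `discount` cheaper."""
    return price / (1 - discount)


yuan_per_tops = cost_per_tops(PRICE_YUAN, TOPS_MAX)
usd_per_tops = cost_per_tops(PRICE_USD, TOPS_MAX)
baseline = implied_baseline(PRICE_YUAN, 0.90)

print(f"{yuan_per_tops:.1f} yuan per TOPS")  # prints "20.8 yuan per TOPS"
print(f"${usd_per_tops:.2f} per TOPS")       # prints "$2.92 per TOPS"
print(f"90% cheaper implies a ~{baseline:.0f} yuan comparison system")
```

In other words, if the 1000 yuan box really were 90% cheaper than some comparable system, that system would cost about 10,000 yuan.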

All of the fully domestically produced hardware above uses Intellifusion's custom NNP400T neural network chip. Beyond the components you would expect in an SoC (in the DeepEdge10, a 1.8 GHz 2+8-core RISC CPU and a GPU clocked at up to 800 MHz), the capable onboard NPU makes this a pretty tasty option in its market.

For reference, Microsoft's stated requirement for an "AI PC" is at least 40 TOPS of NPU performance. So Intellifusion's near-term roadmap looks like it should soon be suitable for many AI workloads, especially considering most existing NPUs top out at around 16 TOPS. However, Qualcomm's Snapdragon X Elite chips are set to offer 40 TOPS alongside industry-leading iGPU performance later this year.
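A quick way to see how the announced lineup stacks up against those thresholds is a small comparison script. This is a hypothetical sketch: the TOPS values are the peak vendor ratings quoted in this article, and vendors don't always rate TOPS at the same precision, so the comparison is rough at best.

```python
# Hypothetical sketch: compare the announced Deep Eyes roadmap against
# Microsoft's 40 TOPS "AI PC" NPU bar and a typical ~16 TOPS NPU.
# Figures are peak ratings from the article, not measured throughput.

AI_PC_MIN_TOPS = 40
TYPICAL_NPU_TOPS = 16

roadmap = {
    "DeepEdge10Max (2024)": 48,
    "DeepEdge10Pro (2024 H2)": 24,
    "DeepEdge10Ultra (2025 H1)": 96,
}


def meets_ai_pc_bar(tops: int, threshold: int = AI_PC_MIN_TOPS) -> bool:
    """True if the peak rating reaches the given TOPS threshold."""
    return tops >= threshold


for name, tops in roadmap.items():
    verdict = "meets" if meets_ai_pc_bar(tops) else "falls short of"
    ratio = tops / TYPICAL_NPU_TOPS  # multiple of a typical 16 TOPS NPU
    print(f"{name}: {tops} TOPS, {verdict} the 40 TOPS bar, {ratio:.1f}x a 16 TOPS NPU")
```

Run as-is, only the DeepEdge10Pro falls short of the 40 TOPS bar, while the Max and Ultra parts are rated at 3x and 6x a typical 16 TOPS NPU.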

As Dr. Chen Ning, chairman of Intellifusion, posted, "In the next three years, 80% of companies around the world will use large models. [...] The cost of training a large model is in the tens of millions, and the price of mainstream all-in-one training and pushing [i.e., inference] machines is generally one million yuan. Most companies cannot afford such costs."

While the claim that 80% of companies worldwide will be leveraging AI seems... questionable at best, a fair point is being made here about the cost of entry for businesses to make meaningful use of AI, especially in creating their own models. The DeepEdge chips use "independent and controllable domestic technology" and a RISC-V core to support extensive model training and inference deployment.


The Historical Fidelity: So they are offering discrete boxes with the performance of a mainstream Intel or AMD CPU with an NPU. This is the product the world has ever seen lol

Notton: If this could be slapped onto a PCIe card, and used in a multi-card setup, I guess it wouldn't be bad?

The Historical Fidelity:

> Notton said: If this could be slapped onto a PCIe card, and used in a multi-card setup, I guess it wouldn't be bad?

I guess yeah, if you must have Microsoft Copilot but don't want to upgrade CPUs, you could plop one into your computer.

PEnns:

> "Chinese manufacturer Intellifusion is introducing "DeepEyes""

Heh, the Chinese are publicizing the real names of Google, Fakebook and Microsoft!!

Alvar "Miles" Udell: Considering the term "AI" is used so generically that it can encompass anything that uses an algorithm, I think it's more than questionable that 80% of businesses will use "AI" and that more likely 100% of businesses will, since any business that tells "AI" to, say, "Examine last year's sales and revenue and generate charts and reports", something any business from an independent contractor to a Fortune 500 company will do, qualifies as "using AI".

mistermcluvin: Very interesting. As someone who is long in NVDA, I am always on the lookout for where their competition in the AI space will come from. Is AI like BTC mining, where it started with multipurpose GPUs but will eventually end up on dedicated ASICs?

The Historical Fidelity:

> Alvar "Miles" Udell said: Considering the term "AI" is used so generically that it can encompass anything that uses an algorithm [...]

AI is so popular I'm surprised there is not a hip-hop artist named Algo-Rhythm.

Findecanor: The primary driver for AI in China is the PRC's surveillance machine.

The primary driver for AI in the West is fear over China having invested so much in AI, but without understanding why.

Otherwise it is gnomes collecting underpants all the way down.
