Nvidia announces supercomputers based on its Grace Hopper platform: 200 ExaFLOPS for AI

Nvidia's GH200 finds a home in supercomputers.

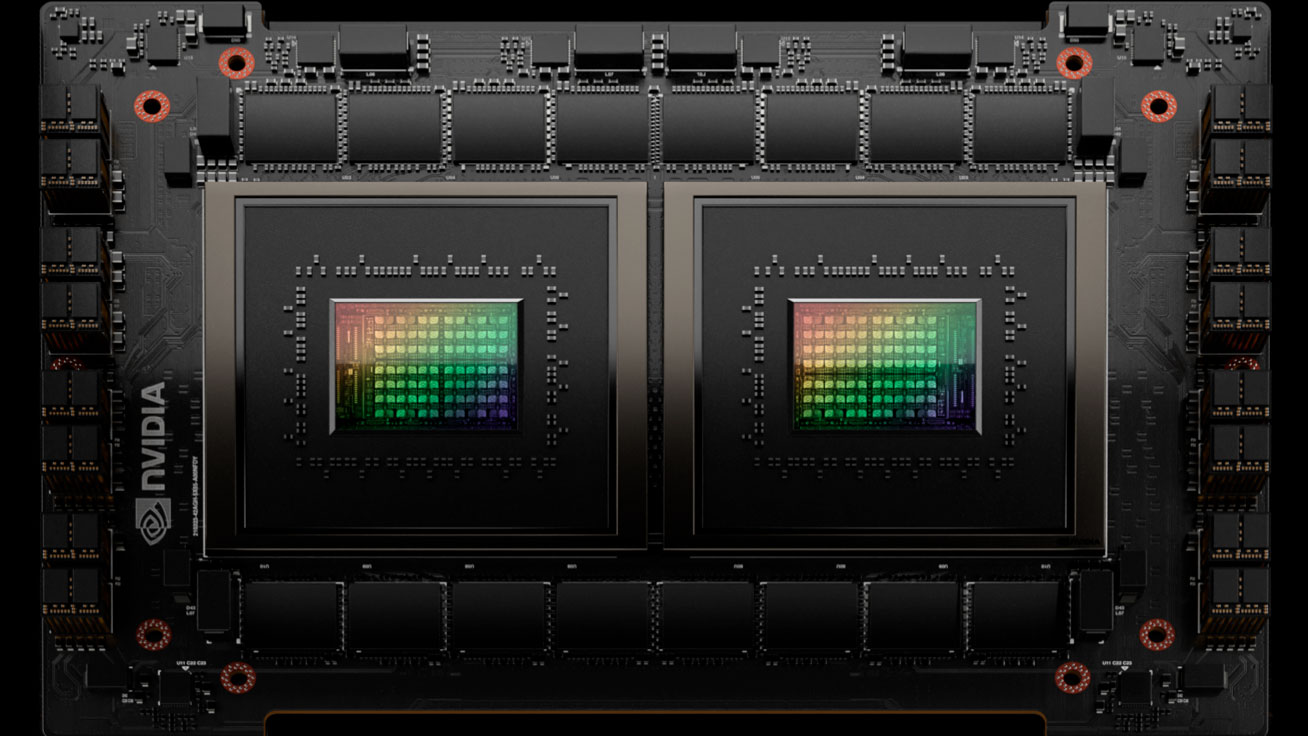

Nvidia said that its Grace Hopper GH200 platforms — consisting of one 72-core Grace processor and an H100 GPU for AI and HPC workloads — have been adopted for nine supercomputers across the globe. These supercomputers collectively achieve a staggering 200 ExaFLOPS of 'AI' computational power, though their FP64 computational performance needed for scientific simulations is significantly lower.
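
To put the headline figure in context, the arithmetic below spreads the 200 ExaFLOPS aggregate evenly across the nine systems. This is only a rough sketch: the even split is an assumption made for illustration, and the real machines differ widely in size.

```python
# Figures quoted in the article.
total_ai_exaflops = 200   # aggregate 'AI' performance across all systems
num_systems = 9           # supercomputers counted in the announcement

# Assumption for illustration only: an even split across systems.
average_ai_exaflops = total_ai_exaflops / num_systems

print(f"Naive average per system: {average_ai_exaflops:.1f} 'AI' ExaFLOPS")
```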

New installations span several countries, including France, Poland, Switzerland, Germany, the United States, and Japan. Among the standout systems is the EXA1-HE in France, developed jointly by CEA and Eviden. This supercomputer is equipped with 477 compute nodes built on Nvidia's Grace Hopper processors. EXA1-HE is based on Eviden’s BullSequana XH3000 architecture and features a warm-water cooling system to improve energy efficiency.

Another important Grace Hopper-based machine is Isambard-AI at the University of Bristol in the U.K., which was announced in late 2023. Initially equipped with 168 Nvidia GH200 Superchips, Isambard-AI stands as one of the most energy-efficient supercomputers to date. Once an additional 5,280 Nvidia Grace Hopper Superchips arrive this summer, the system's performance is expected to increase approximately 32 times, which will make the machine one of the fastest supercomputers for AI-driven scientific research in the world. When fully built, the system will feature over 55,000 high-performance Arm Neoverse V2 cores, which promise to deliver formidable FP64 performance.
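
The roughly 32-fold figure for Isambard-AI follows from the superchip counts alone. The sketch below assumes, for illustration, that performance scales linearly with the number of superchips; real-world scaling depends on the interconnect and the workload.

```python
# Superchip counts quoted in the article for Isambard-AI.
initial_superchips = 168
additional_superchips = 5_280

total_superchips = initial_superchips + additional_superchips

# Assumption: performance grows linearly with superchip count.
scale_factor = total_superchips / initial_superchips

print(f"Total superchips: {total_superchips}")
print(f"Scale-up vs. initial phase: {scale_factor:.1f}x")
```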

Other noteworthy machines include the Helios supercomputer at Academic Computer Centre Cyfronet in Poland, Alps at the Swiss National Supercomputing Centre, Jupiter at the Jülich Supercomputing Centre in Germany, DeltaAI at the National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign, and Miyabi at Japan’s Joint Center for Advanced High Performance Computing. These systems vary in specialization but all incorporate the latest Nvidia platform to drive forward their respective scientific agendas.

The key takeaway from the announcement is that Nvidia's Grace Hopper platform, powered by the company's own CPU and GPU technologies, is gaining traction in the scientific world. While Nvidia dominates the market for AI GPUs, getting into supercomputers is also very important for the company, as HPC is a sizable and lucrative business.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

Samlebon2306: "Nvidia announces supercomputers based on its Grace Hopper platform: 200 ExaFLOPS for AI"

The title is disrespectful to the readers.

First, it's not 200 ExaFloats, but more likely 200 Exa-MiniFloats (either bfloat16 or bfloat8).

Second, that astronomical number is the aggregate performance of 9 computers.

I think TH could do better than that.