Arm unveils next-gen Neoverse CPU cores and compute subsystems — hoping to entice more custom silicon customers

A major development for Arm's server platforms.

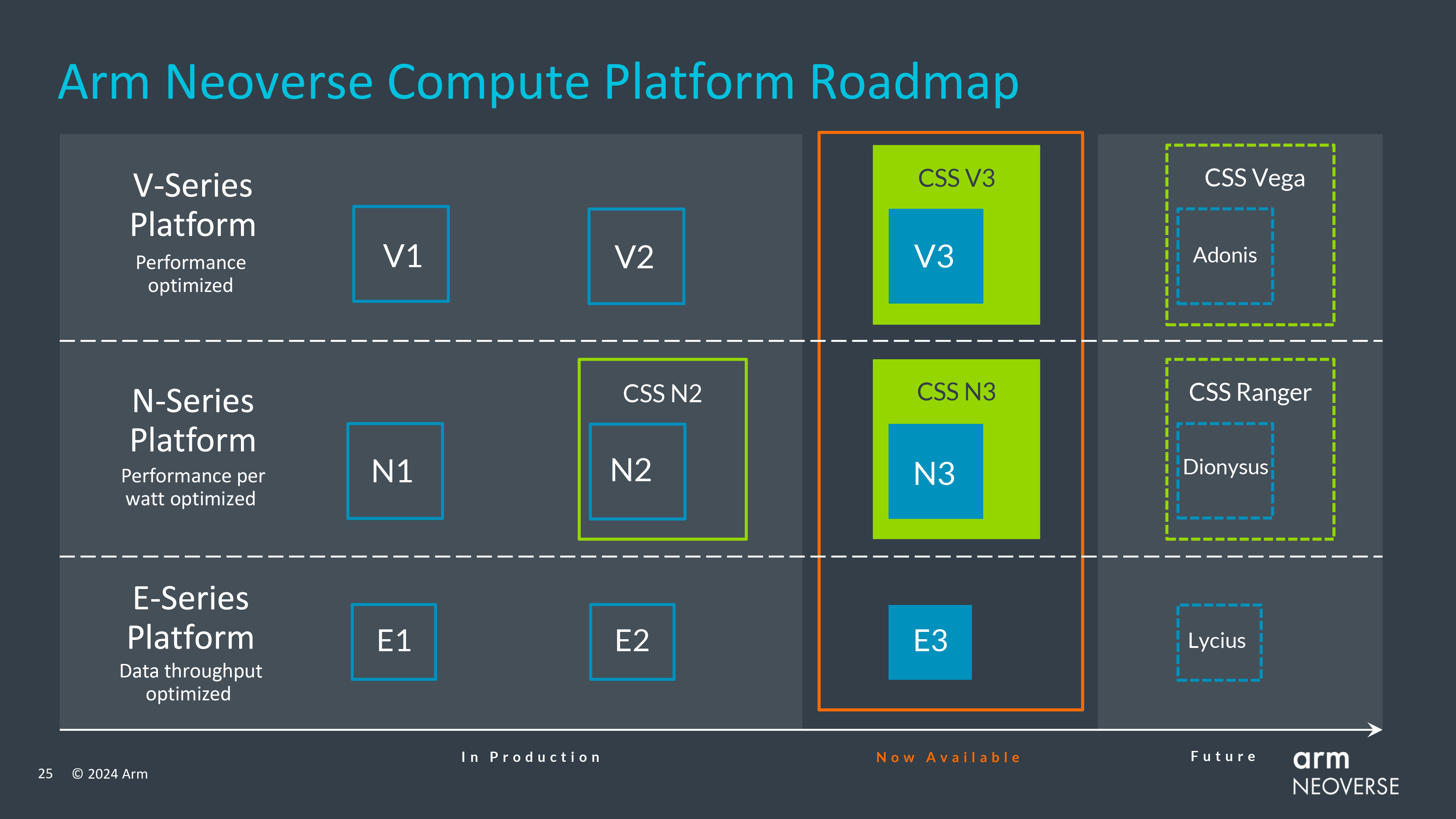

Arm on Wednesday announced its next-generation general-purpose CPU cores for datacenter processors. The new Neoverse V3, Neoverse N3, and Neoverse E3 CPU cores are aimed at high-performance computing (HPC), general-purpose CPU instances and infrastructure applications, and edge computing and low-power applications, respectively. Alongside the new cores, Arm is also rolling out Compute Subsystems (CSS), which combine CPU cores, memory, I/O, and die-to-die interconnect interfaces to speed up processor development.

Arm's Neoverse Compute Subsystems (CSS) are integrated and verified platforms that bring together all the key components required for the heart of a system-on-chip (SoC). These subsystems are designed as a starting point for custom solutions: Arm's partners can add their own IP to a CSS and bring their designs to market quickly, with the company expecting about nine months from design start to tape-out. A CSS includes the CPU core complex, memory, and I/O interfaces, and is optimized for specific use cases in a particular market segment, such as cloud computing, networking, and AI.

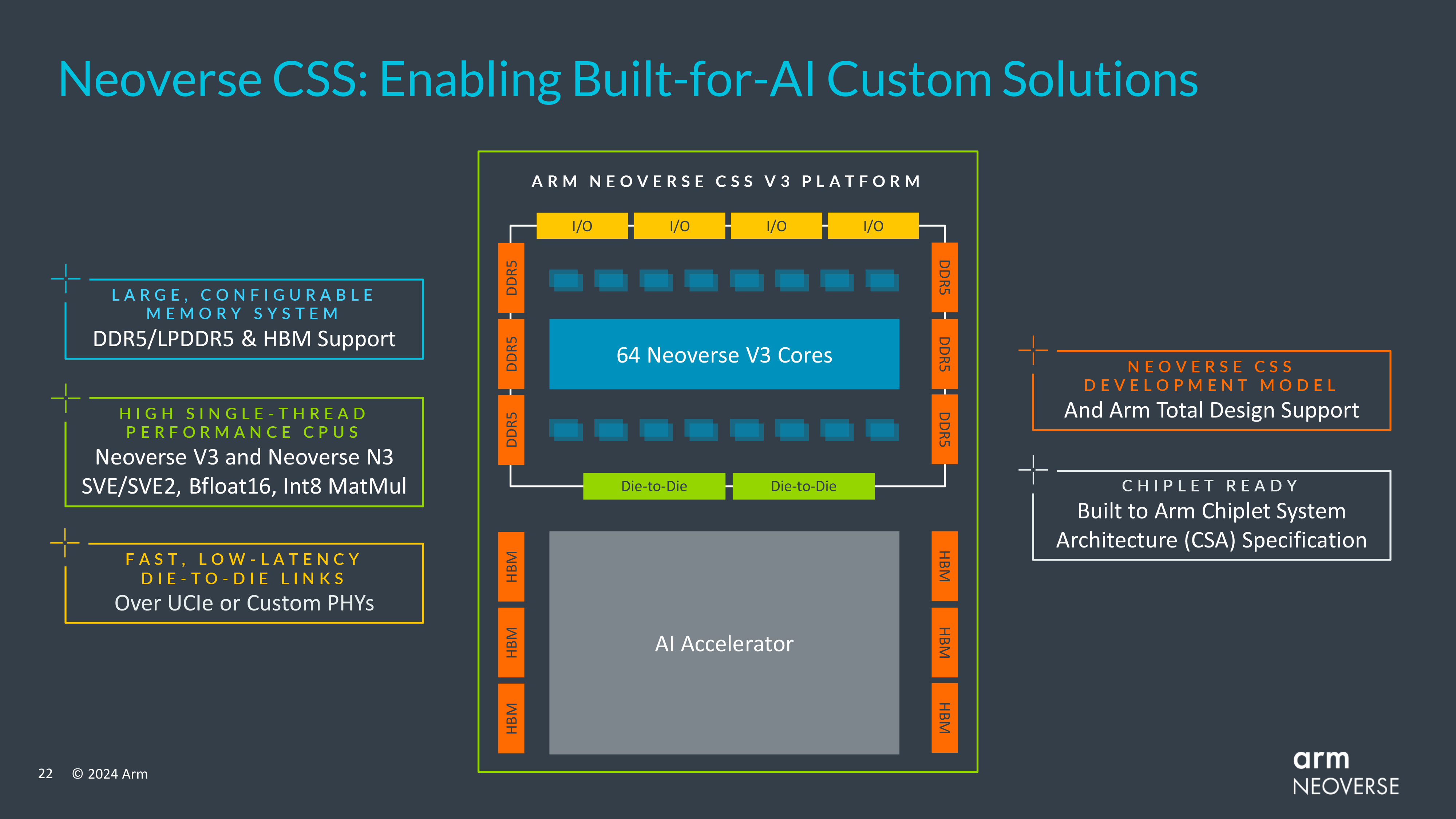

By using CSS, partners can focus on system-level and workload-specific differentiation while relying on Arm's technology for the underlying compute capabilities. Arm's Neoverse CSS also supports Arm Total Design (a package of IP from 20 Arm partners) as well as Arm's Chiplet System Architecture (CSA) and UCIe interfaces for stitching a CSS together with compatible third-party silicon.

Neoverse V3

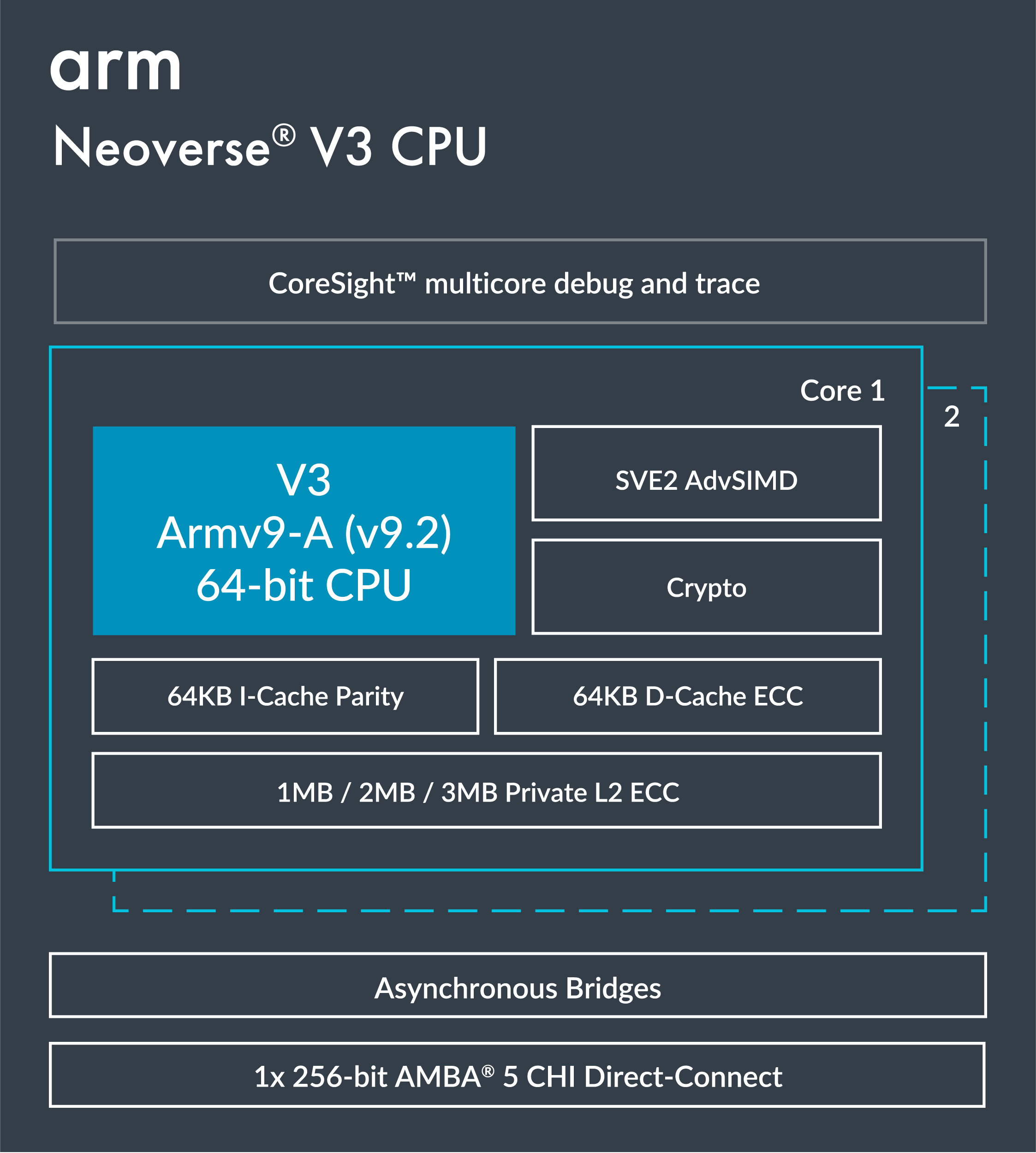

Arm's Neoverse V3 is the company's highest-performing CPU core ever. It is based on the Armv9.2-A instruction set architecture (ISA) with the SVE2 SIMD extension, and it is equipped with 64KB + 64KB (instruction + data) L1 caches as well as a 1MB, 2MB, or 3MB L2 cache with ECC.

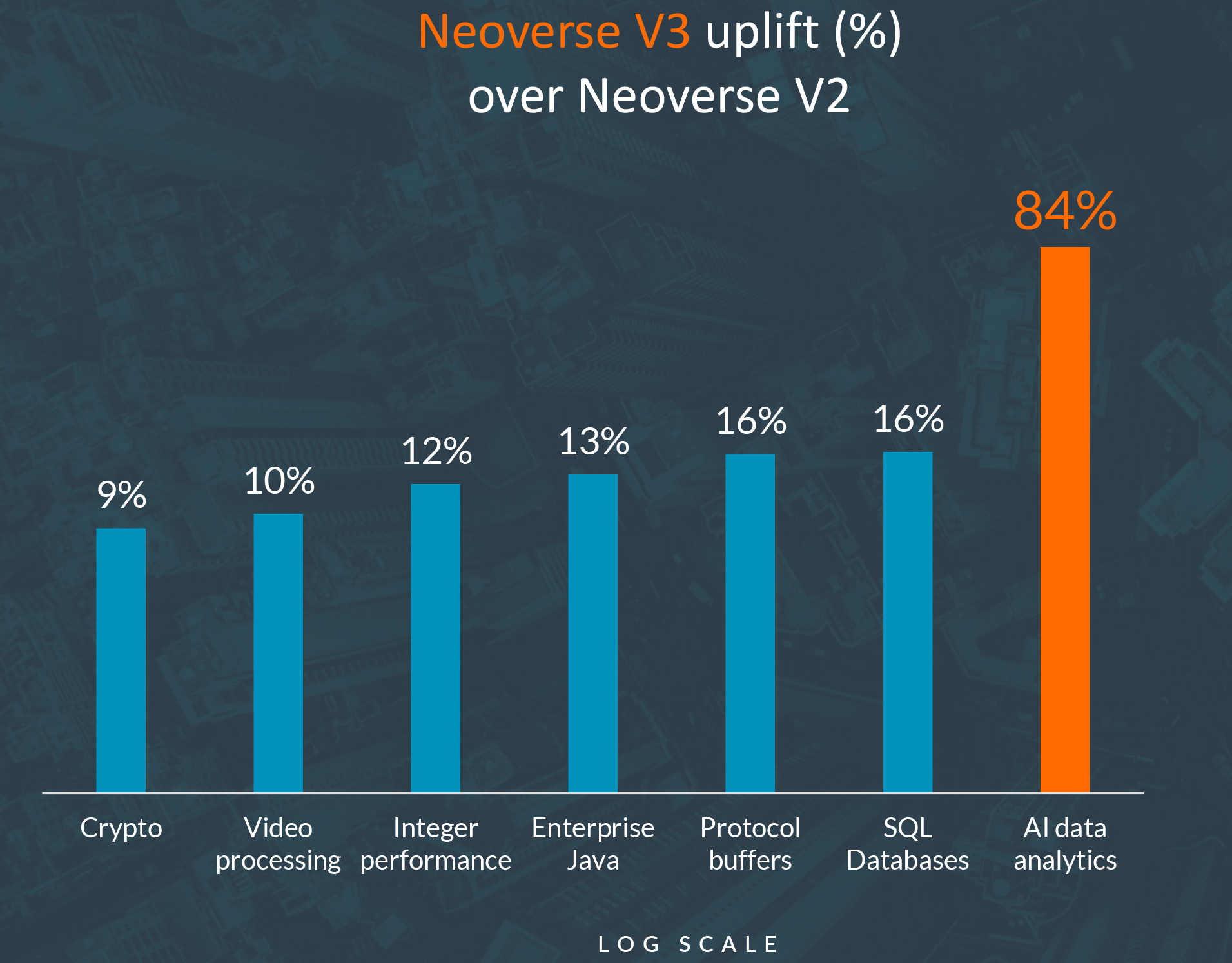

Arm says that, depending on the workload, a simulated 32-core Neoverse V3 offers a 9% to 16% performance uplift over a simulated 32-core Neoverse V2 in typical server workloads. That looks quite decent considering these cores compete against AMD's Zen 4 and Intel's Raptor Cove, and huge generation-to-generation gains are rare in this market. In AI data analytics, Arm's simulations show the Neoverse V3 delivering a whopping 84% performance improvement over the Neoverse V2. This is, of course, a major improvement, and it will draw attention to the core.

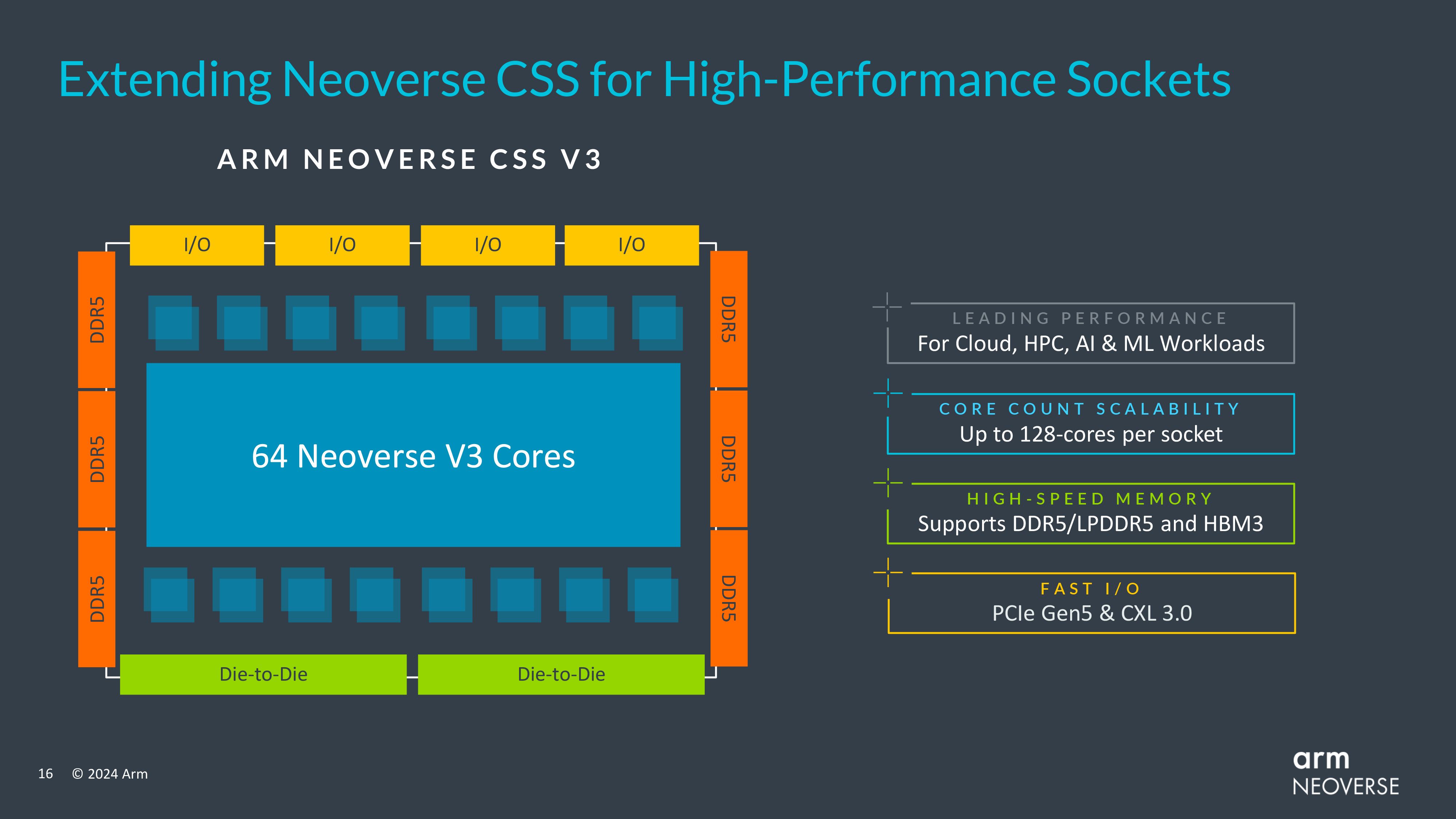

Just as important, alongside the Neoverse V3 core itself, Arm is rolling out its Neoverse V3 Compute Subsystem (CSS), which includes 64 Neoverse V3 cores (with SVE/SVE2, BFloat16, and INT8 MatMul support), a memory subsystem with 12-channel DDR5/LPDDR5 and HBM support, 64 lanes of PCIe Gen5 with CXL support, and die-to-die interconnects (UCIe 1.1 and/or custom PHYs). The Neoverse V3 can scale to 128 cores per socket, enabling fairly formidable server CPUs.
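On Linux, one quick way to check whether a given Arm server actually exposes the SIMD and matrix extensions listed above is to read the `Features` line of `/proc/cpuinfo`. The sketch below assumes an aarch64 Linux host and uses the kernel's hwcap names `sve`, `sve2`, `bf16`, and `i8mm` (INT8 matrix multiply); the helper names `parse_features` and `report` are hypothetical, and the check only shows what the kernel advertises, not how fast the hardware executes those instructions.

```python
from __future__ import annotations

from pathlib import Path

# Kernel hwcap names (as printed in /proc/cpuinfo on aarch64 Linux) mapped to
# the display names used in the article. The mapping itself is illustrative.
WANTED = {
    "sve": "SVE",
    "sve2": "SVE2",
    "bf16": "BFloat16",
    "i8mm": "INT8 matrix multiply",
}


def parse_features(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'Features' line, or an empty set."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Features":
            return set(value.split())
    return set()


def report(cpuinfo_text: str) -> dict[str, bool]:
    """Map each wanted extension's display name to whether it is advertised."""
    flags = parse_features(cpuinfo_text)
    return {name: hwcap in flags for hwcap, name in WANTED.items()}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    text = path.read_text() if path.exists() else ""
    for name, present in report(text).items():
        print(f"{name:22} {'yes' if present else 'no'}")
```

Taking the cpuinfo text as a string, rather than opening the file inside the helpers, keeps them testable on non-Arm machines.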

Neoverse N3

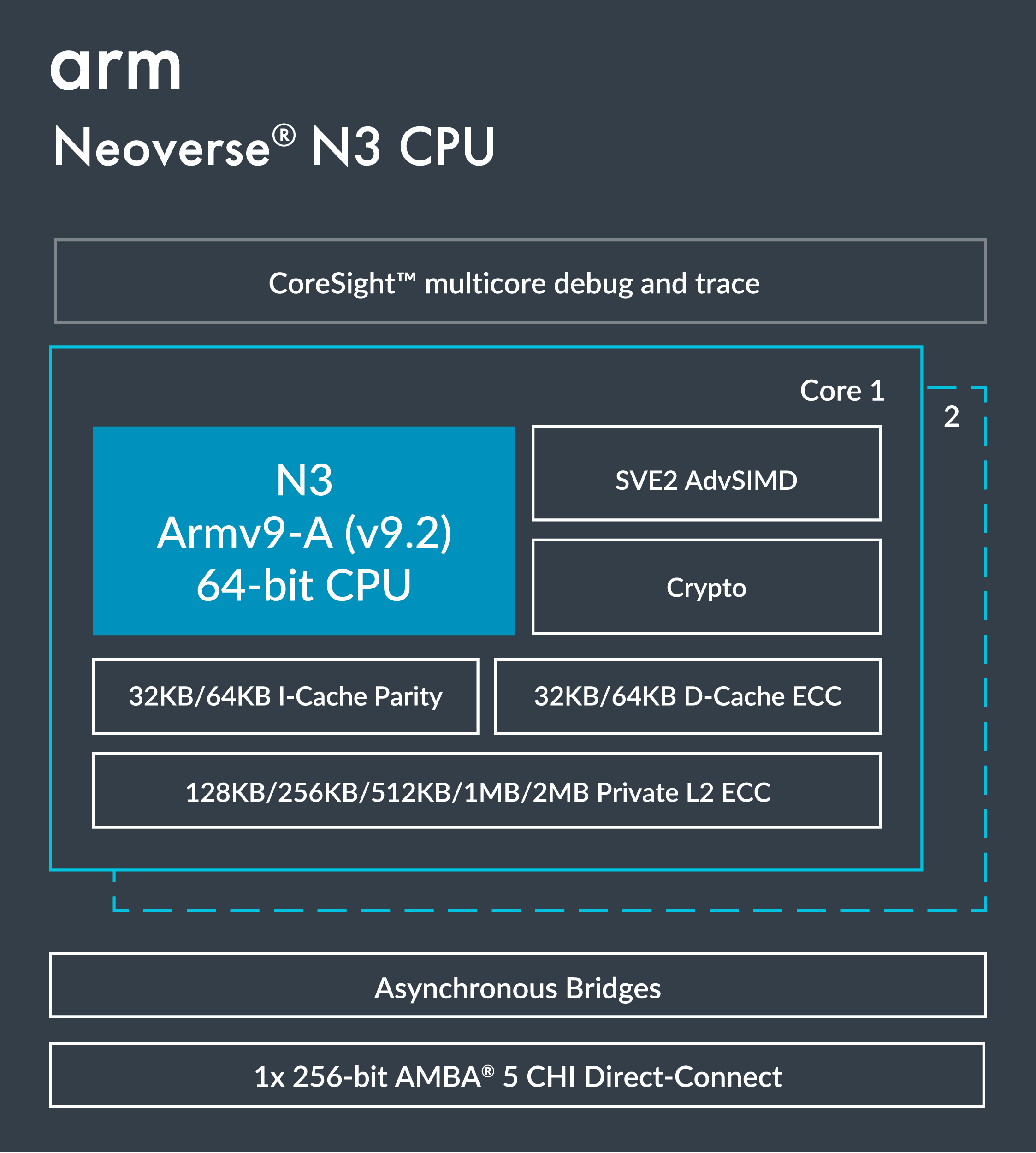

Arm's Neoverse N3 is the company's first Armv9.2-based core for general-purpose CPU instances and infrastructure applications, which have to balance performance and power consumption. The N3 supports SVE2 and can be configured with 32KB or 64KB + 32KB or 64KB (instruction + data) L1 caches as well as 128KB to 2MB of L2 cache with ECC.


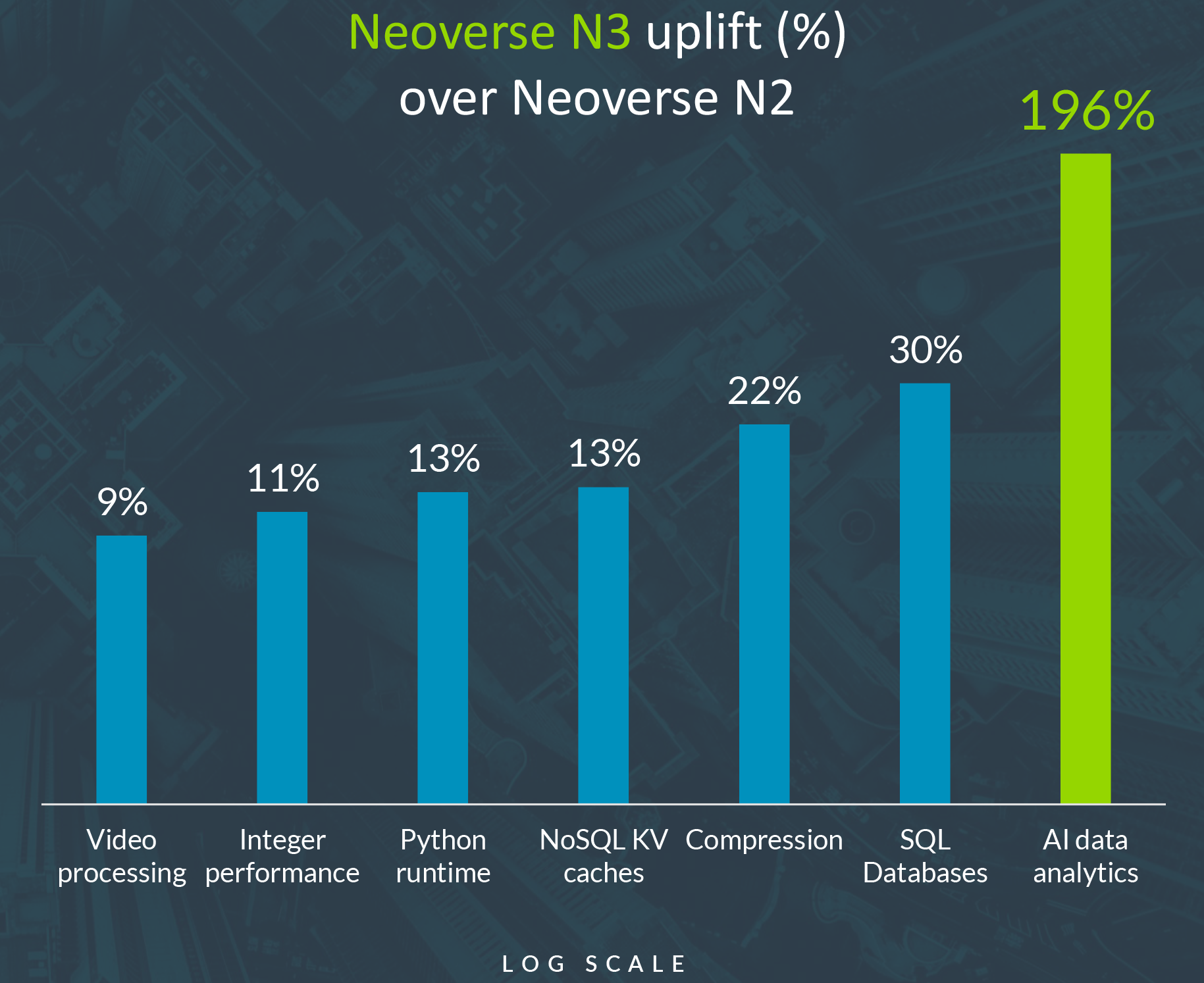

From a performance point of view, Arm claims that a simulated 32-core Neoverse N3 processor outperforms a simulated 32-core Neoverse N2 processor by 9% to 30%, depending on the workload, which is quite good. In AI data analytics, the simulated Neoverse N3-based SoC is 196% faster than the simulated Neoverse N2 chip.
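Percentage claims such as "84% better" or "196% faster" are easy to misread. Assuming Arm's figures describe relative throughput (new score divided by old score, minus one), they convert to speedup multipliers as shown below. This is plain arithmetic on the numbers quoted in this article, not additional data from Arm, and the helper name is hypothetical.

```python
def uplift_to_multiplier(percent: float) -> float:
    """Convert an 'X% faster' claim into a throughput ratio (new / old)."""
    return 1.0 + percent / 100.0


# Figures quoted in the article (Arm's simulations, 32-core configurations).
claims = {
    "V3 vs V2, server workloads (low)": 9,
    "V3 vs V2, server workloads (high)": 16,
    "V3 vs V2, AI data analytics": 84,
    "N3 vs N2, typical workloads (low)": 9,
    "N3 vs N2, typical workloads (high)": 30,
    "N3 vs N2, AI data analytics": 196,
}

for label, pct in claims.items():
    print(f"{label:36} {uplift_to_multiplier(pct):.2f}x")
```

Read literally, the N3's AI data analytics figure means roughly three times the throughput of the simulated N2 chip, not twice.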

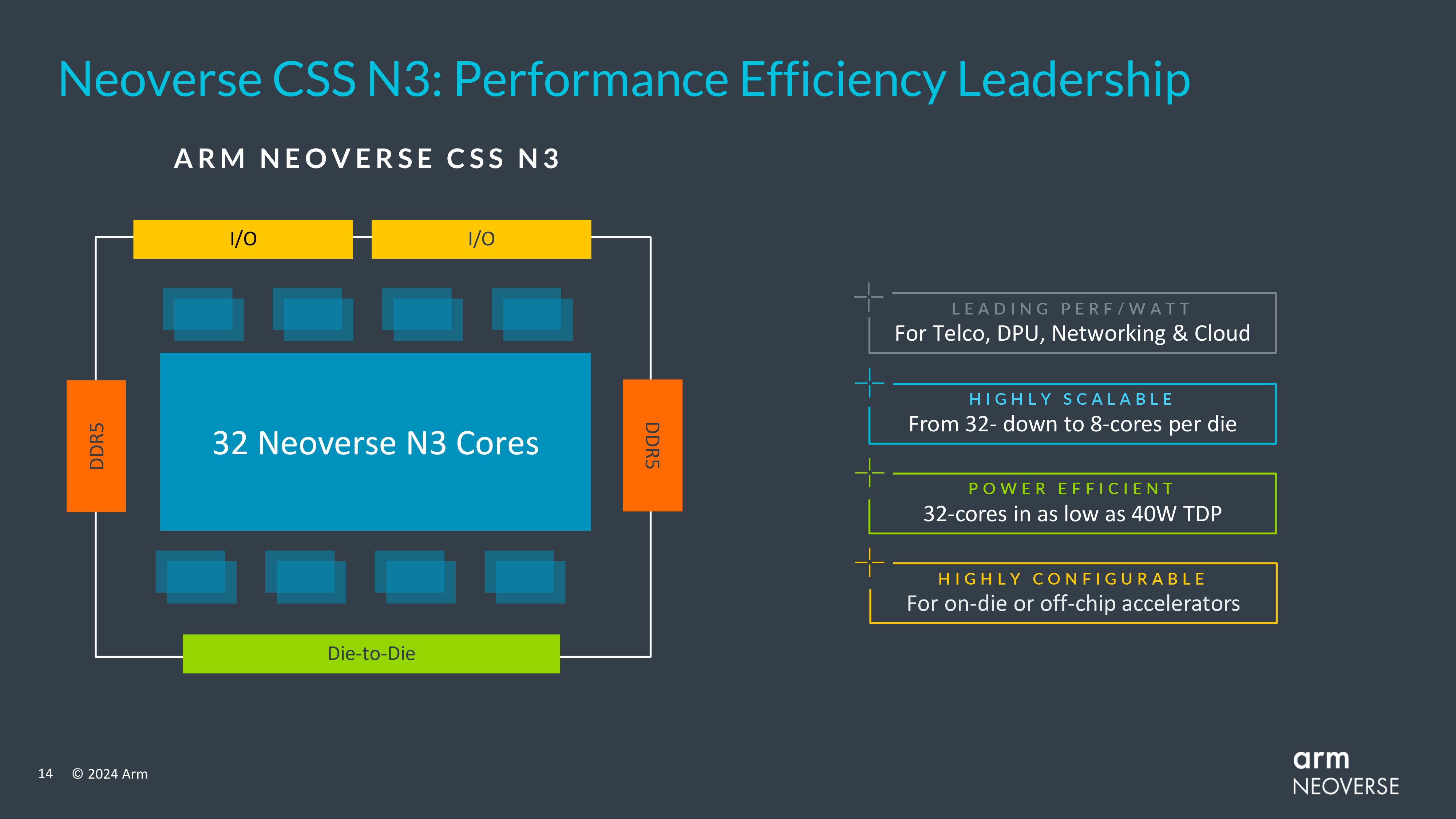

Neoverse CSS N3

Arm's Neoverse CSS N3 is aimed at workloads that do not need performance at all costs, so one N3 Compute Subsystem packs 32 N3 cores, four 40-bit DDR5/LPDDR5 memory channels, 32 PCIe Gen5 lanes with CXL support, high-speed die-to-die links, and UCIe 1.1 support. According to Arm, such a solution has a TDP of 40W; the company did not disclose the process technology used.
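To put the N3 CSS figures in perspective, here is a rough peak-bandwidth and power-per-core calculation. It assumes DDR5-5600 memory (Arm did not state a memory speed), counts only the 32 data bits of each 40-bit channel, and uses the standard PCIe 5.0 rate of 32 GT/s per lane with 128b/130b encoding. These are theoretical peaks under hypothetical settings; sustained throughput will be lower.

```python
# Back-of-the-envelope figures for one Neoverse CSS N3 as described above.
# ASSUMPTION: DDR5-5600 memory; Arm did not give a memory speed.
DDR5_MT_S = 5600            # mega-transfers per second (hypothetical)
CHANNELS = 4                # 40-bit DDR5/LPDDR5 channels
DATA_BITS_PER_CHANNEL = 32  # 40-bit channel = 32 data bits + 8 ECC bits
PCIE_LANES = 32
PCIE5_GT_S = 32             # PCIe 5.0 raw signaling rate per lane, GT/s
ENCODING = 128 / 130        # 128b/130b line encoding (PCIe 3.0 through 5.0)
CORES = 32
TDP_W = 40


def memory_bandwidth_gb_s() -> float:
    """Peak DRAM bandwidth in GB/s, counting data bits only (no ECC)."""
    bytes_per_transfer = CHANNELS * DATA_BITS_PER_CHANNEL / 8
    return bytes_per_transfer * DDR5_MT_S / 1000


def pcie_bandwidth_gb_s() -> float:
    """Peak PCIe bandwidth per direction in GB/s, after line encoding."""
    return PCIE_LANES * PCIE5_GT_S * ENCODING / 8


if __name__ == "__main__":
    mem = memory_bandwidth_gb_s()
    print(f"DRAM:  {mem:.1f} GB/s total, {mem / CORES:.2f} GB/s per core")
    print(f"PCIe:  {pcie_bandwidth_gb_s():.1f} GB/s per direction")
    print(f"Power: {TDP_W / CORES:.2f} W per core (whole-SoC budget)")
```

Under these assumptions, the 128 data bits of DRAM interface match a dual-channel desktop platform, which fits the edge and infrastructure role this configuration targets.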

So far, Arm's Neoverse CSS has been adopted by Microsoft for its Cobalt 100 general-purpose server processor. However, Arm expects considerably broader adoption of its CSS offerings going forward.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user I found these details of ARMv9.1-A:

General Matrix Multiply (GEMM) instructions

Fine grained traps for virtualization

High precision Generic Timer

Data Gathering Hint

...and ARMv9.2-A:

Enhanced support for PCIe hot plug

Atomic 64-byte loads and stores to accelerators

Wait For Instruction (WFI) and Wait For Event (WFE) with timeout

Branch-Record recording

Source: https://developer.arm.com/documentation/102378/0201/Armv8-x-and-Armv9-x-extensions-and-features

DaveLTX V2 competes against Zen 4? I've only seen Graviton4 using V2, which is hardly a fleshed-out roster of comparisons, because I've hardly seen any comparison of AWS instances of Genoa vs. G4 either.

bit_user

DaveLTX said: V2 competes against Zen 4? I've only seen Graviton4 using V2 which is hardly a fleshed out roster of comparisons because I've hardly seen any comparison of AWS instances of Genoa Vs G4 either

Nvidia's Grace uses Neoverse V2 cores. Overall, Genoa does better, even when you account for the difference in core count, but Grace does manage to chalk up a few notable wins.

Grace vs. 64-core and 96-core ThreadRipper:

https://www.phoronix.com/review/nvidia-gh200-amd-threadripper

I would disregard the OpenVINO benchmarks, which probably just haven't been optimized for SVE2. Besides, if you're running Nvidia Grace, then you probably paired it with a Hopper GPU that's way faster at AI.

Here, it's compared against a bunch of Intel and AMD servers, in both 1P and 2P configurations:

https://www.phoronix.com/review/nvidia-gh200-gptshop-benchmark

Its geomean is 95.2% of a 64-core Zen 4 EPYC 9554. Again, I think that's pretty good if you consider how lopsided a few of the benchmarks are towards x86; they surely must be dragging down its score.
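For readers unfamiliar with the "geomean" figure: benchmark suites are often summarized with a geometric mean, and a ratio of two systems' geometric means over the same tests equals the geometric mean of the per-test ratios. The sketch below shows that calculation with made-up ratios (not data from the Phoronix reviews) to illustrate how a few lopsided results pull the summary down.

```python
from __future__ import annotations

import math


def geomean(values: list[float]) -> float:
    """Geometric mean of positive values, computed in log space for stability."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-test ratios (system A score / system B score, higher is better).
# These numbers are illustrative only, not taken from any review.
balanced = [1.10, 0.95, 1.05, 1.00, 0.98]
with_outliers = balanced + [0.40, 0.50]  # two tests that strongly favor system B

print(f"balanced:      {geomean(balanced):.3f}")
print(f"with outliers: {geomean(with_outliers):.3f}")
```

Because it averages logarithms of ratios, the geometric mean treats a 2x win and a 2x loss symmetrically, which is why it is a common choice for summarizing mixed benchmark suites.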

jp7189 Regarding memory support, the N3 portion of the article states the N3 supports quad 40-bit channels. First, I thought ECC was 36 bits (32+4 for ECC) per channel. Second, this is essentially the same bandwidth as 128-bit "dual channel" consumer desktops. That can't be right.

bit_user

jp7189 said: Regarding memory support, the N3 portion of the article states the N3 support quad 40 bit channels. First, I thought ecc was 36 bits (32+4 for ecc) per channel.

No, it's correct. DDR5 has 8 bits of ECC per 32-bit subchannel. You're thinking of DDR4, which has 8 bits of ECC per 64-bit channel.

jp7189 said: Second, this is essentially the same bandwidth as 128bit "dual channel" consumer desktops. That can't be right.

The comparison is apt. It's a 32-core, 32-thread SoC that you're comparing against a 16-24 core, 32-thread CPU. It's for smaller-scale edge servers and line cards.

Note where it's scalable down to 8 cores and how it can run on only 40 W even for 32 cores! Running on such a modest power budget, and not their widest cores, you probably don't need more than about 128-bits worth of DDR5 bandwidth.

jp7189

bit_user said: No, it's correct. DDR5 has 8 bits of ECC per 32-bit subchannel. You're thinking of DDR4, which has 8 bits of ECC per 64-bit channel.

Thanks for that. I was shopping for ECC and found some Kingston RAM that only supported 4 bits per channel (EC4). I generalized that to be the configuration of all DDR5 ECC. After reading your comment, I dug deeper and now see there is an 8-bit mode (EC8) that I didn't notice before. It seems it's up to the memory controller and DRAM maker to choose either 4 or 8 in this generation. It's something I need to pay closer attention to when choosing components.
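The ECC widths discussed in this exchange can be tabulated. Assuming the layouts named in the thread (DDR4 ECC: 8 check bits per 64-bit channel; DDR5 EC8: 8 per 32-bit subchannel; DDR5 EC4: 4 per 32-bit subchannel, with two subchannels per DDR5 DIMM), the sketch below computes channel width, DIMM width, and check-bit overhead. The `EccLayout` class and its field names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EccLayout:
    """Data and ECC bit widths for one (sub)channel of a memory interface."""
    name: str
    data_bits: int
    ecc_bits: int
    channels_per_dimm: int

    @property
    def channel_width(self) -> int:
        """Total bits per (sub)channel, data plus ECC."""
        return self.data_bits + self.ecc_bits

    @property
    def dimm_width(self) -> int:
        """Total bits across all (sub)channels on one DIMM."""
        return self.channel_width * self.channels_per_dimm

    @property
    def overhead(self) -> float:
        """ECC bits as a fraction of data bits."""
        return self.ecc_bits / self.data_bits


LAYOUTS = [
    EccLayout("DDR4 ECC", data_bits=64, ecc_bits=8, channels_per_dimm=1),
    EccLayout("DDR5 EC4", data_bits=32, ecc_bits=4, channels_per_dimm=2),
    EccLayout("DDR5 EC8", data_bits=32, ecc_bits=8, channels_per_dimm=2),
]

for layout in LAYOUTS:
    print(
        f"{layout.name}: {layout.channel_width}-bit channel, "
        f"{layout.dimm_width}-bit DIMM, {layout.overhead:.1%} ECC overhead"
    )
```

The 40-bit channels in Arm's N3 CSS description match the width of the EC8 layout.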