Nvidia Claims Grace CPU is 2X Faster Than AMD Genoa, Intel Sapphire Rapids at Same Power

Nvidia has shared new benchmarks for the company's Grace CPU Superchip. According to Nvidia, the next-generation Arm Neoverse-based chip, which will power data centers, delivers twice the performance of AMD's 4th Generation EPYC Genoa and Intel's 4th Generation Sapphire Rapids Xeon processors at the same power consumption.

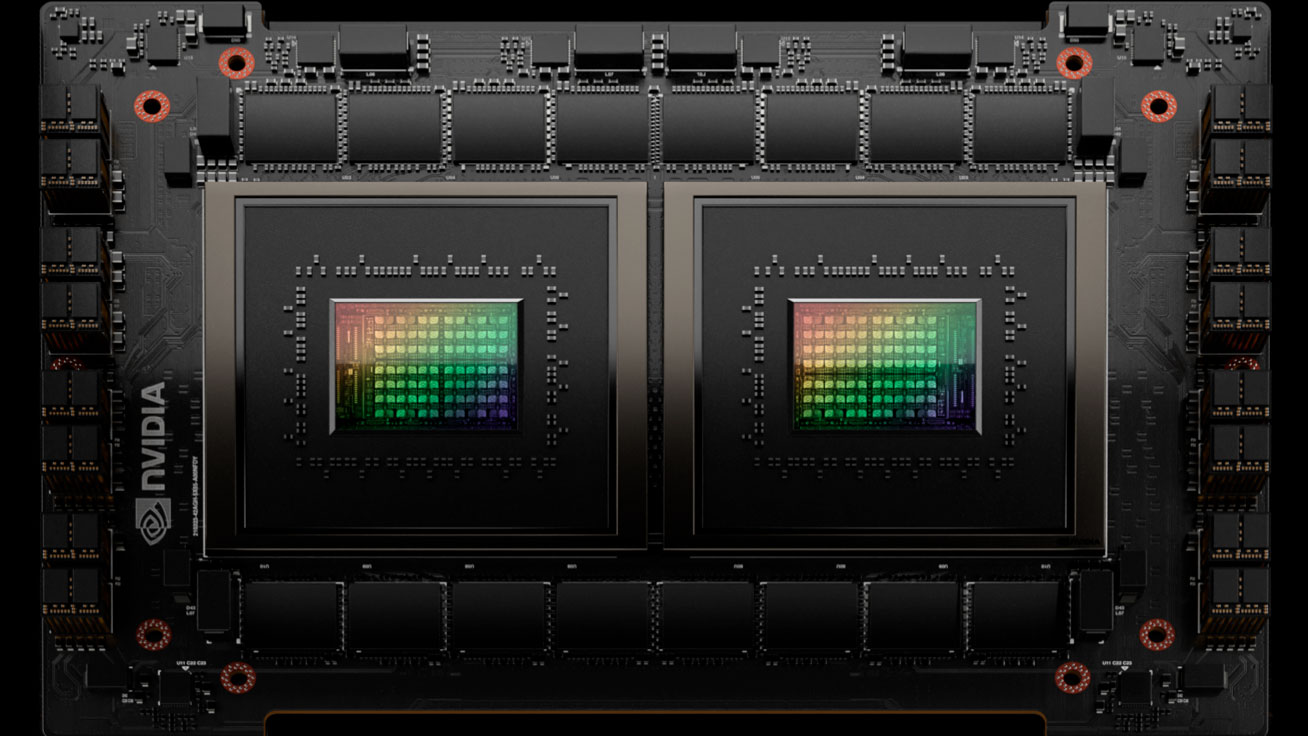

As a quick introduction, the Grace CPU Superchip features two 72-core chips on a single board, amounting to 144 Arm Neoverse V2 cores. An NVLink-C2C interface connects the two chips, enabling a bidirectional bandwidth of up to 900 GB/s. Each processor has eight dedicated LPDDR5X packages for up to 1TB. In the other two corners, we have the EPYC 9654 with 96 Zen 4 cores and the Xeon Platinum 8480+ with 56 Golden Cove cores.

The Grace CPU Superchip is a dual-chip solution; therefore, Nvidia tested the EPYC 9654 and Xeon Platinum 8480+ in a 2P platform. As a result, we have a battle of the juggernauts with 144 Neoverse V2 cores going up against 192 Zen 4 cores and 112 Golden Cove cores.

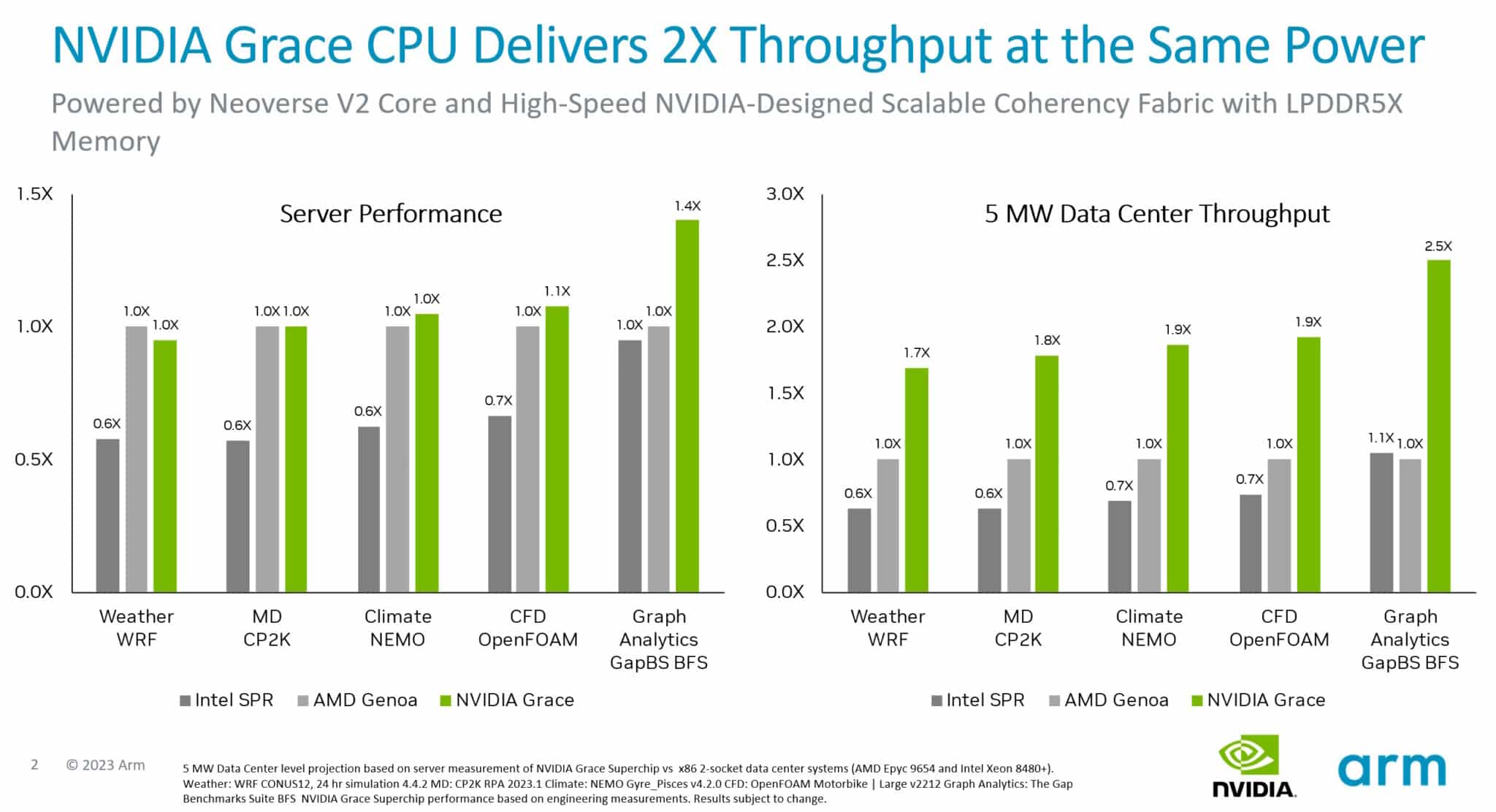

Because these are vendor-provided benchmark results, we recommend the usual amount of caution when looking at them. Nvidia chose five different specialized workloads to compare the three core-heavy processors. The list includes Weather WRF, MD CP2K, Climate NEMO, CFD OpenFOAM, and Graph Analytics GapBS BFS.

According to Nvidia's benchmark results, the Grace CPU Superchip delivered 40% higher performance than the Xeon Platinum 8480+ across the board. But it was pretty much neck and neck with the EPYC 9654, except in the Graph Analytics GapBS BFS benchmark, where the Grace CPU Superchip posted a 40% lead over the AMD processor.

The EPYC 9654 outperformed the Xeon Platinum 8480+ by a similar 40% margin in the majority of the workloads. The exceptions were CFD OpenFOAM, where the AMD chip was up to 30% faster, and Graph Analytics GapBS BFS, where it tied with its Xeon rival.

The Grace CPU Superchip's strong suit lies in the chip's power efficiency. Nvidia's charts show the Grace CPU Superchip with up to 2.5X better power efficiency than the competition in a 5 MW data center environment — it was close to 2X more efficient than the AMD EPYC 9654, while the Xeon Platinum 8480+ was the least power-efficient of the trio.

To put the numbers into perspective, the Grace CPU Superchip has a peak power draw of 500W for the package composed of the two processors and the LPDDR5X memory. The default TDP for the EPYC 9654 and Xeon Platinum 8480+ is 360W and 350W, respectively. In a dual-processor platform, the AMD and Intel chips have the potential to pull up to 720W and 700W, respectively.
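The dual-socket arithmetic above can be reproduced with a short sketch. It uses only the wattage figures quoted in this article; the names `platform_power` and `power_table` are illustrative, not from Nvidia's material. Note the comparison is rough by construction: the Grace figure covers the whole package including memory, while the x86 figures are CPU TDP only.

```python
# Figures quoted in the article, in watts.
TDP_PER_SOCKET_W = {"EPYC 9654": 360, "Xeon Platinum 8480+": 350}
GRACE_SUPERCHIP_PEAK_W = 500  # whole package: two CPUs plus LPDDR5X memory
SOCKETS = 2                   # Nvidia tested the x86 parts in 2P platforms


def platform_power(tdp_w: int, sockets: int = SOCKETS) -> int:
    """CPU power budget of a multi-socket platform (CPU TDP only, no DRAM)."""
    return tdp_w * sockets


def power_table() -> dict:
    """Map each x86 part to (2P watts, ratio versus the Grace package)."""
    rows = {}
    for name, tdp in TDP_PER_SOCKET_W.items():
        total = platform_power(tdp)
        rows[name] = (total, total / GRACE_SUPERCHIP_PEAK_W)
    return rows


if __name__ == "__main__":
    for name, (total, ratio) in power_table().items():
        print(f"{name}: {total} W in 2P, {ratio:.2f}x the Grace package")
```

On these numbers alone, the 2P x86 platforms have a CPU power budget roughly 1.4X that of the Grace package, so a power gap of that size would not by itself account for the efficiency lead Nvidia claims.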

Initially set to launch in the first half of 2023, the Grace CPU Superchip suffered a setback. However, Nvidia CEO Jensen Huang told us in March that the Grace CPU Superchip is in production, so OEM systems will likely launch in the second half of this year.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

ingtar33

correct me if i'm wrong but doesn't the Graph Analytics GapBFS bench already take into account power consumption, so if this new datacenter chip is more efficient than the AMD part but performs the same I believe the Graph Analytics bench would show the intel with a significant lead.

don't get me wrong, the intel part is impressive, i mean it has a little less than 4/5 the core count of the AMD chip and it's on par with the same performance, so this is an impressive part

PEnns

The next-generation Arm "Neoverse"

Enough with this Verse madness!

Everybody is running around with this crazy name and they think it's cool or supposed to impress simple minds: The Metaverse, Multiverse, and now Neoverse......Gah!

Kamen Rider Blade

I'll wait till Phoronix, Level1Techs, ServeTheHome, or Chips and Cheese gets ahold of these and benchmarks them.

Until then, I'm not holding my breath for any of their claims.

Makaveli

> Kamen Rider Blade said: I'll wait till Phoronix, Level1Techs, ServeTheHome, or Chips and Cheese gets ahold of these and benchmarks them.
>
> Until then, I'm not holding my breath for any of their claims.

1000% this!

bit_user

The claim is almost moot, unless/until Nvidia would sell Grace-based machines for general purpose computing, which I haven't heard them announce. Grace seems designed primarily (if not exclusively) to feed & harness their H100-class GPUs.

At best, the claim portends good things for Graviton 4, or whatever cloud processor comes along that uses Neoverse V2 cores and can be used by the general public for standard computing tasks.

bit_user

> PEnns said: The next-generation Arm "Neoverse"
>
> Enough with this Verse madness!
>
> Everybody is running around with this crazy name and they think it's cool or supposed to impress simple minds: The Metaverse, Mutiverse, and now Neoverse......Gah!

ARM's Neoverse branding was originally rolled out about 5 years ago. So, you really can't say they copied off of Facebook/Meta.

https://www.anandtech.com/show/13475/arm-announces-neoverse-infrastructure-ip-branding-future-roadmap

bit_user

> Kamen Rider Blade said: I'll wait till Phoronix, Level1Techs, ServeTheHome, or Chips and Cheese gets ahold of these and benchmarks them.
>
> Until then, I'm not holding my breath for any of their claims.

I expect someone will manage to run independent benchmarks on them, but I doubt Nvidia will be sending around review samples - or even giving reviewers free access to their cloud platform. These superchip modules are probably only usable in a DGX system (or whatever the current incarnation is called) and I'd guess the entry price on one is a lot more than the hardware budget of anyone out there doing half-decent server reviews.

Most likely, someone will rent some time on a cloud instance of one, and that's where they'll run the benchmarks. Because the machine will probably also house highly sought-after H100 chips, time will be scarce and dearly expensive.

The obvious drawback, with a cloud instance, is limited (if any) visibility into power dissipation - especially at the system level or anything that would let us estimate this "5 MW Datacenter" metric.

ikjadoon

For what it's worth, the Arm Neoverse V2 is based on the Cortex-X3, which is actually the newest Arm P-core uArch.

This acceleration means that the V2 provides a two-generation microarchitecture jump over the V1, resulting in a big boost in single-thread performance that could put it neck-and-neck with the leading x86 server CPUs.

Perf / clock, the Cortex-X3 exceeds Golden Cove & Zen4, so it's probably clocked a good bit lower than SPR & Genoa, which saves power. Notably, the Cortex-X3 below is 1MB L2, but Neoverse V2 is upgraded to 2MB L2.

GB61 1T pts per GHz

Arm Cortex-X3: ~559 (S23U)

Intel Golden Cove: ~485 (i9-12900KS)

AMD Zen4: ~510 (7950X)

As NUVIA shared, per-core, datacenter CPUs are at basically smartphone power usage. At 2.8 GHz, one whole Arm Neoverse V2 core + L2 cache uses 1.4W.

Arm discussed more on V2 at Hotchips last week.

Will wait for actual benchmarks, though, before passing judgement for / against the V2.

EDIT: V2, not N2, eghad. Thank you, @bit_user