Nvidia CEO Comments on Grace CPU Delay, Teases Sampling Silicon

New Arm-based chips have slipped, but are now 'flying through production.'

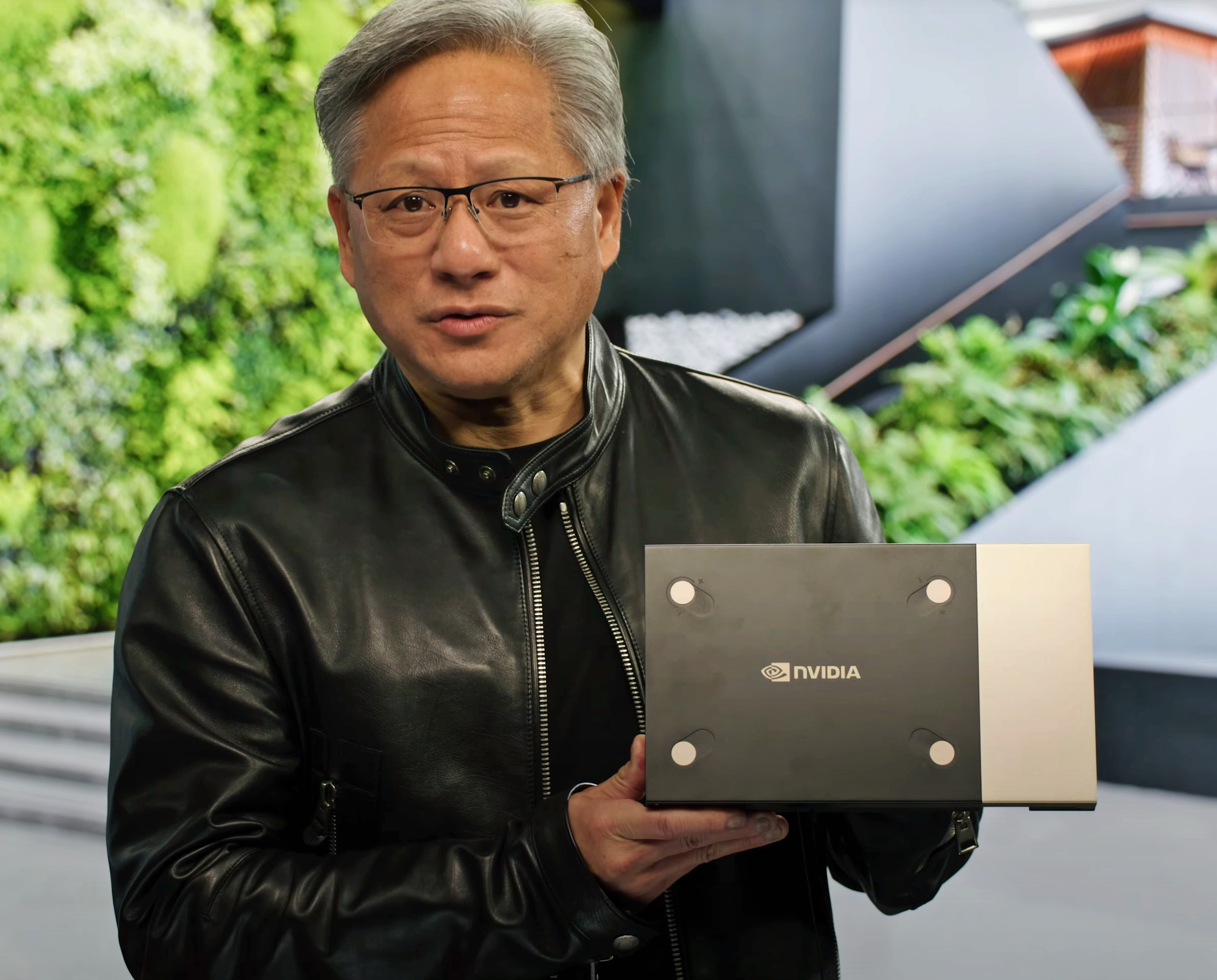

Nvidia teased its forthcoming Arm-based Grace CPU at GTC 2023, but the company's announcement that systems will now ship in the second half of this year represents a delay from its original launch timeline that targeted the first half of 2023. We asked Nvidia CEO Jensen Huang about the delay during a press question and answer session today, which we'll cover below. Nvidia also showed its Grace silicon for the first time and made plenty of new performance claims during its GTC keynote, including that its Arm-based Grace chips are up to 1.3X faster than x86 competitors at 60% of the power, which we'll also cover.

I asked Jensen Huang about the delay in delivering the Grace CPU and Grace Hopper Superchip systems to the end market. After he playfully pushed back about the expected release date (it was undoubtedly 1H23, now 2H23), he responded:

"Well, first, I can tell you that Grace and Grace Hopper are both in production, and silicon is flying through the fab now. Systems are being made, and we made a lot of announcements. The world's OEMs and computer makers are building them." Huang also remarked that Nvidia has only been working on the chips for two years, which is a relatively short time given the typical multi-year design cycle for a modern chip.

Today's definition of shipping systems can be fuzzy — the first systems from AMD and Intel often ship to hyperscalers for deployment long before the chips see general off-the-shelf availability. However, while Nvidia says it is sampling chips to customers, it hasn't said Grace is being deployed into production yet. As such, the chips are late according to the company's projections, but to be fair, perennially late chip launches from companies like Intel aren't uncommon. That highlights the difficulty of launching a new chip, even when building around the dominant x86 chips with established hardware and software platforms built upon for decades.

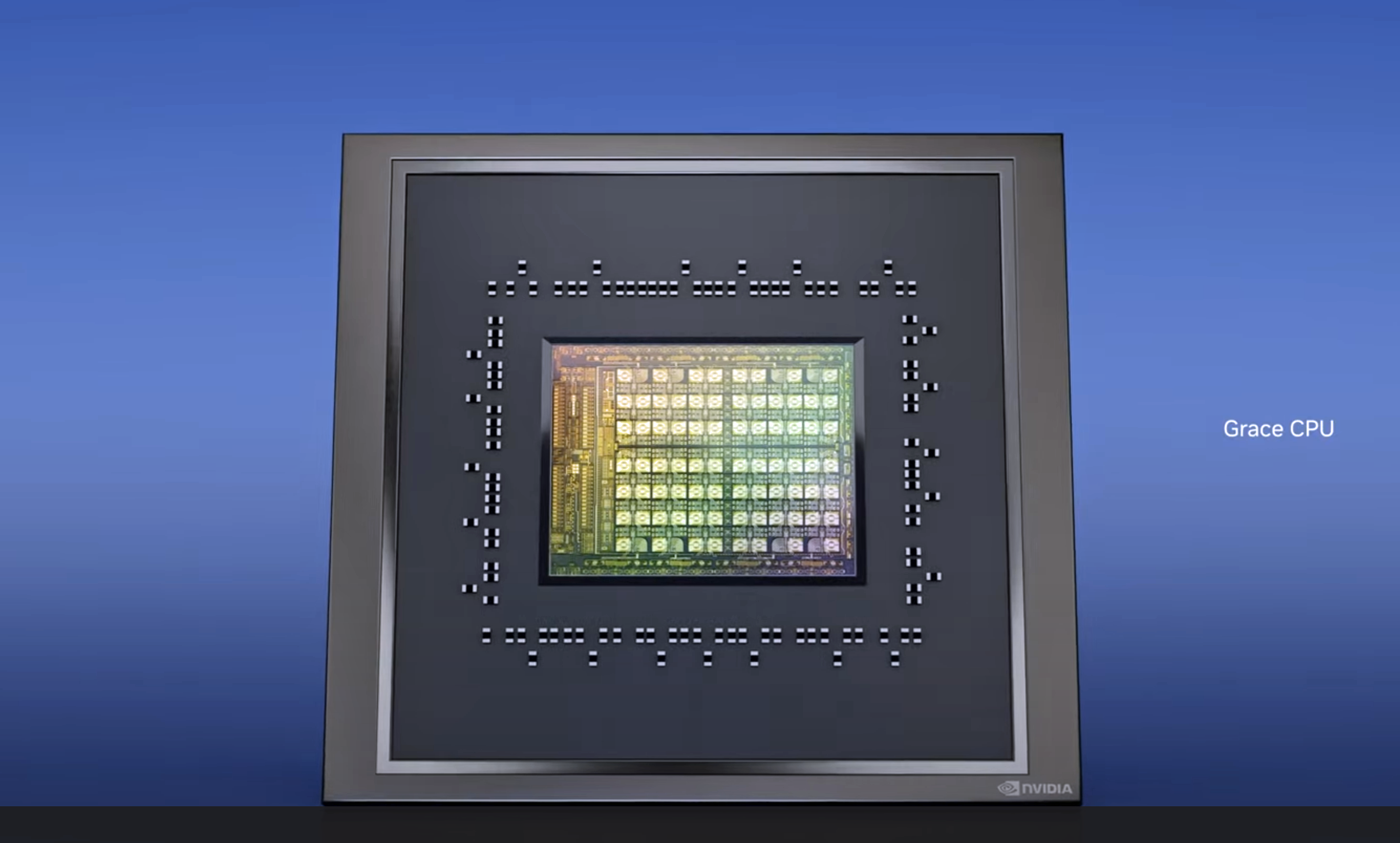

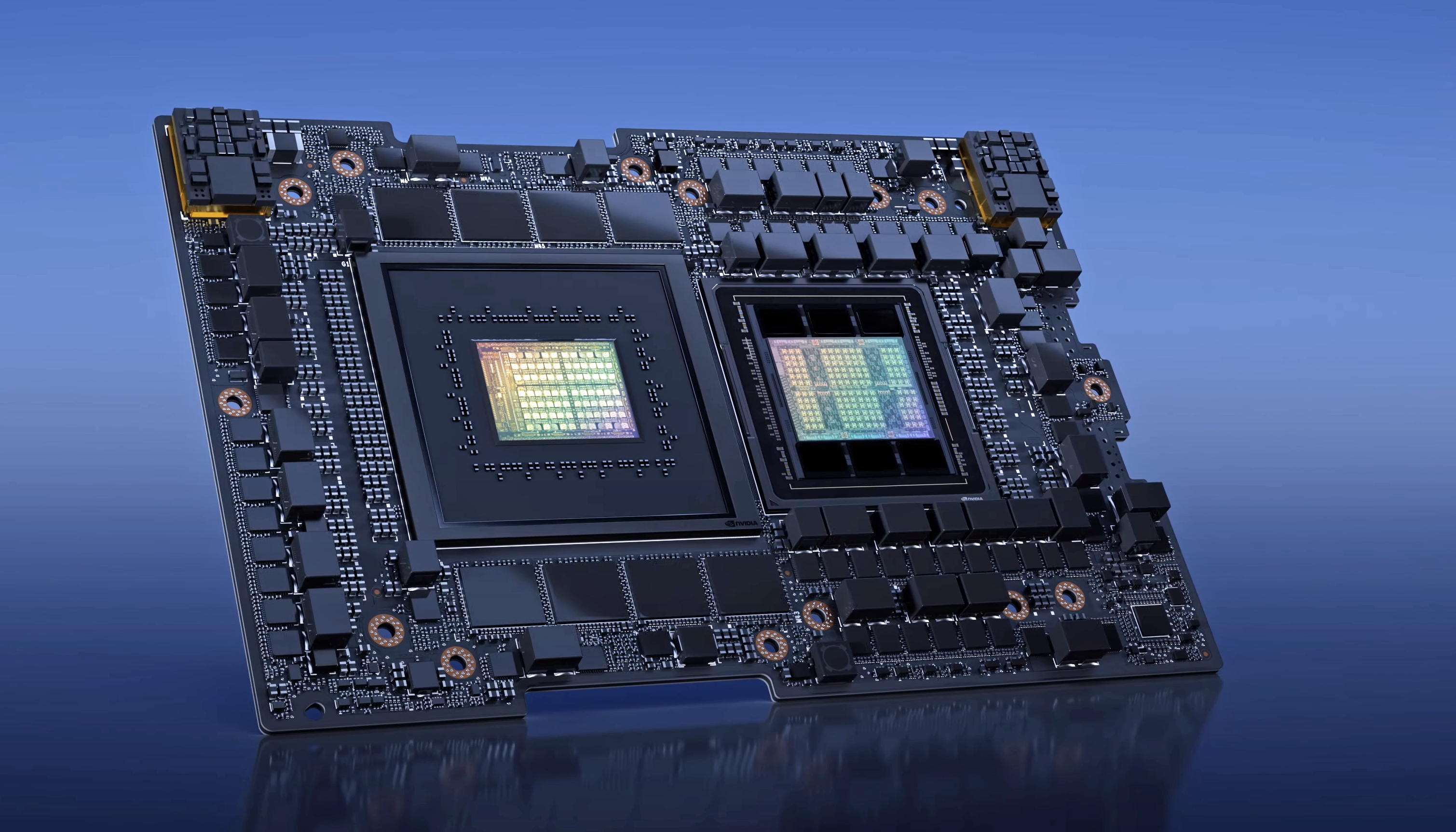

In contrast, Nvidia's Grace and Grace+Hopper chips are a ground-up rethinking of many of the fundamental aspects of chip design with an innovative new chip-to-chip interconnect. Nvidia's use of the Arm instruction set also means there's a heavier lift for software optimizations and porting, and the company has an entirely new platform to build.

Jensen alluded to some of that in his extended response, saying, "We started with Superchips instead of chiplets because the things we want to build are so big, And both of these are in production today. So customers are being sampled, the software is being ported to it, and we're doing a lot of testing. During the keynote, I showed a few numbers, and I didn't want to burden the keynote with a lot of numbers, but a whole bunch of numbers will be available for people to enjoy. But the performance was really quite terrific."

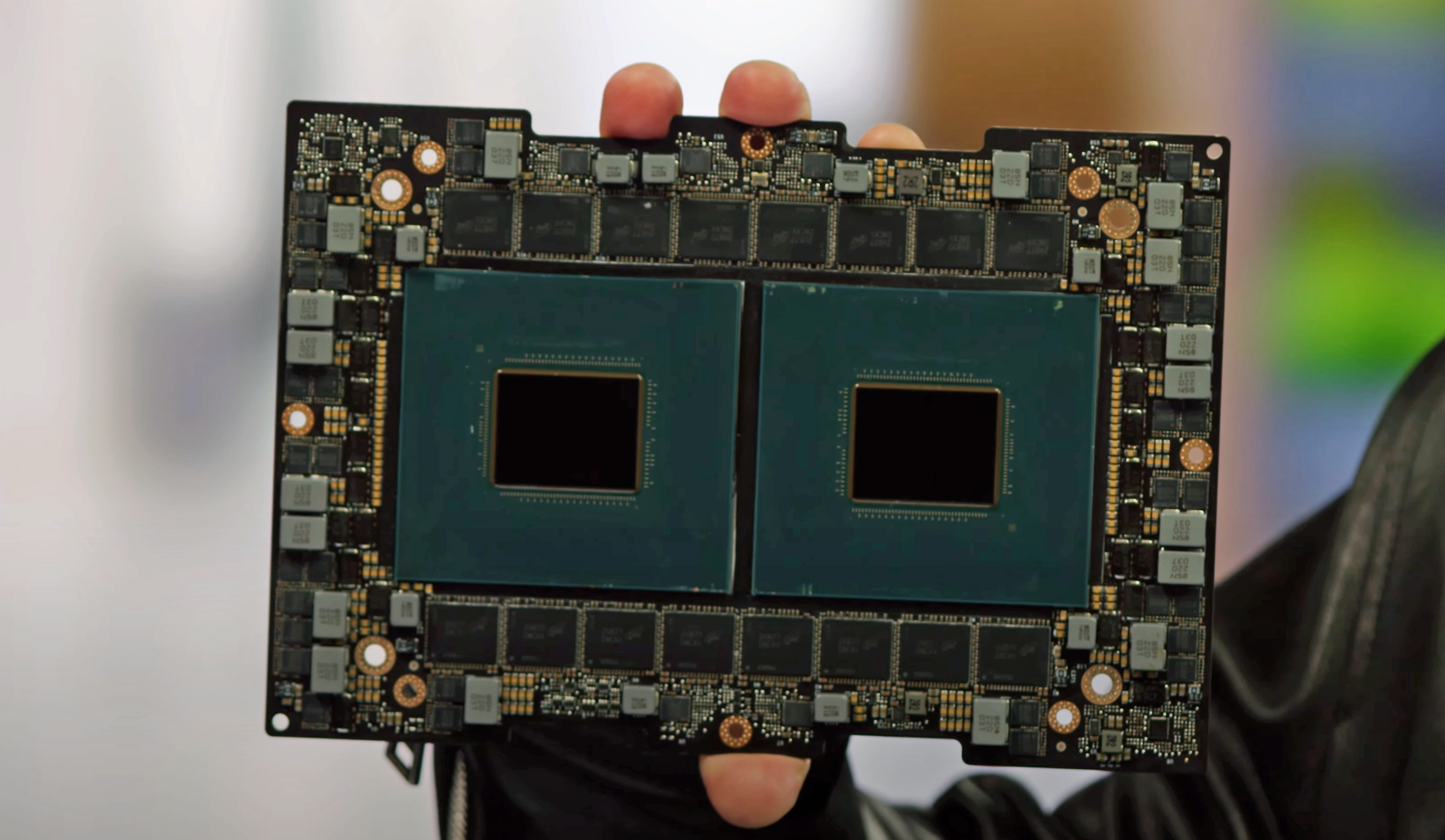

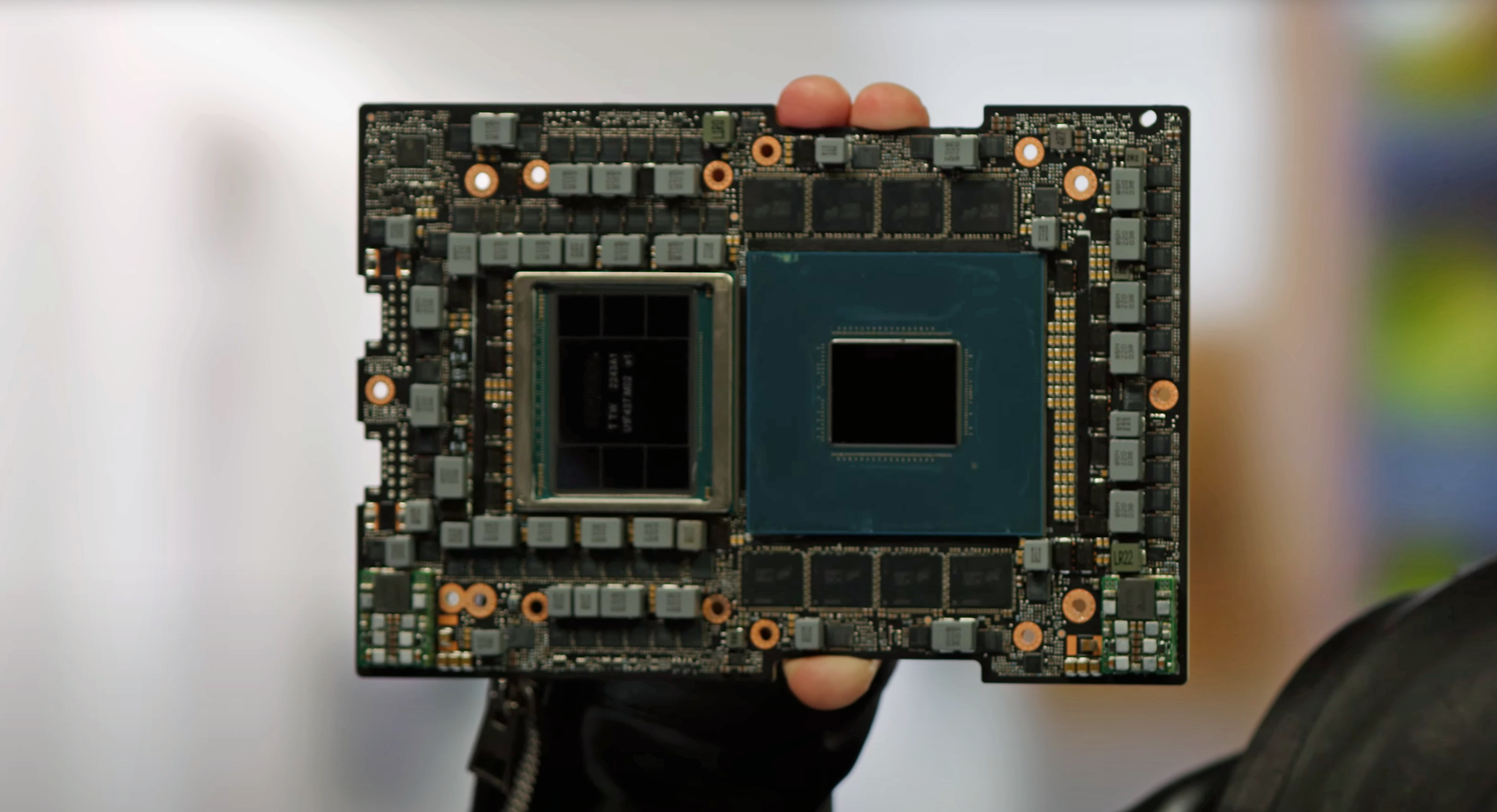

And Nvidia's claims are impressive. For example, in the above album, you can see the Grace Hopper chip that Nvidia showed in the flesh for the first time at GTC (more technical details here).

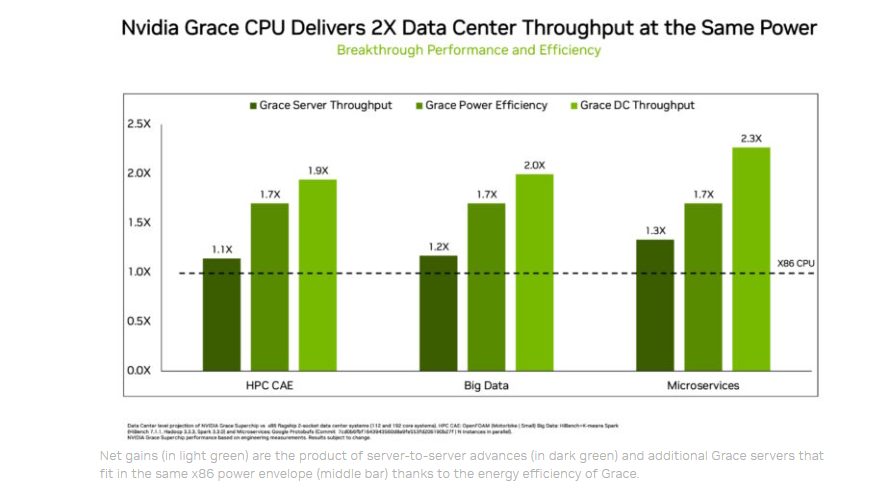

During the presentation, Huang claimed the chips are 1.2X faster than the 'average' next-gen x86 server chip in an HiBench Apache Spark memory-intensive benchmark and 1.3X faster in a Google microservices communication benchmark, all while drawing only 60% of the power.

Nvidia claims this allows data centers to deploy 1.7X more Grace servers into power-limited installments, with each providing 25% higher throughput. The company also claims Grace is 1.9X faster in computational fluid dynamics (CFD) workloads.

However, while the Grace chips are ultra-performant and efficient in some workloads, Nvidia isn't aiming them at the general-purpose server market. Instead, the company has tailored the chips for specific use cases, like AI and cloud workloads that favor superior single-threaded and memory processing performance in tandem with excellent power efficiency.

"[..]almost every single data center is now powered limited, and we designed Grace to be extraordinarily performant in a power-limited environment," Huang told us in response to our questions. "And in that case, you have to be both really high in performance, and you have to be really low in power, and just incredibly efficient. And so, the Grace system is about two times more power/performance efficient compared to the best of the latest generation CPUs."

"And it's designed for different design points, so that's very understandable," Huang continued. "For example, what I just described doesn't matter to most enterprises. It matters a lot to cloud service providers, and it matters a lot to data centers that are powered unlimited."

Energy efficiency is becoming more of a concern than ever, with chips like the AMD EPYC Genoa we recently reviewed and Intel's Sapphire Rapids now pulling up to 400 and 350 watts, respectively. That requires exotic new air cooling solutions to contain the prodigious power draw at standard settings and liquid cooling for the highest-performance options.

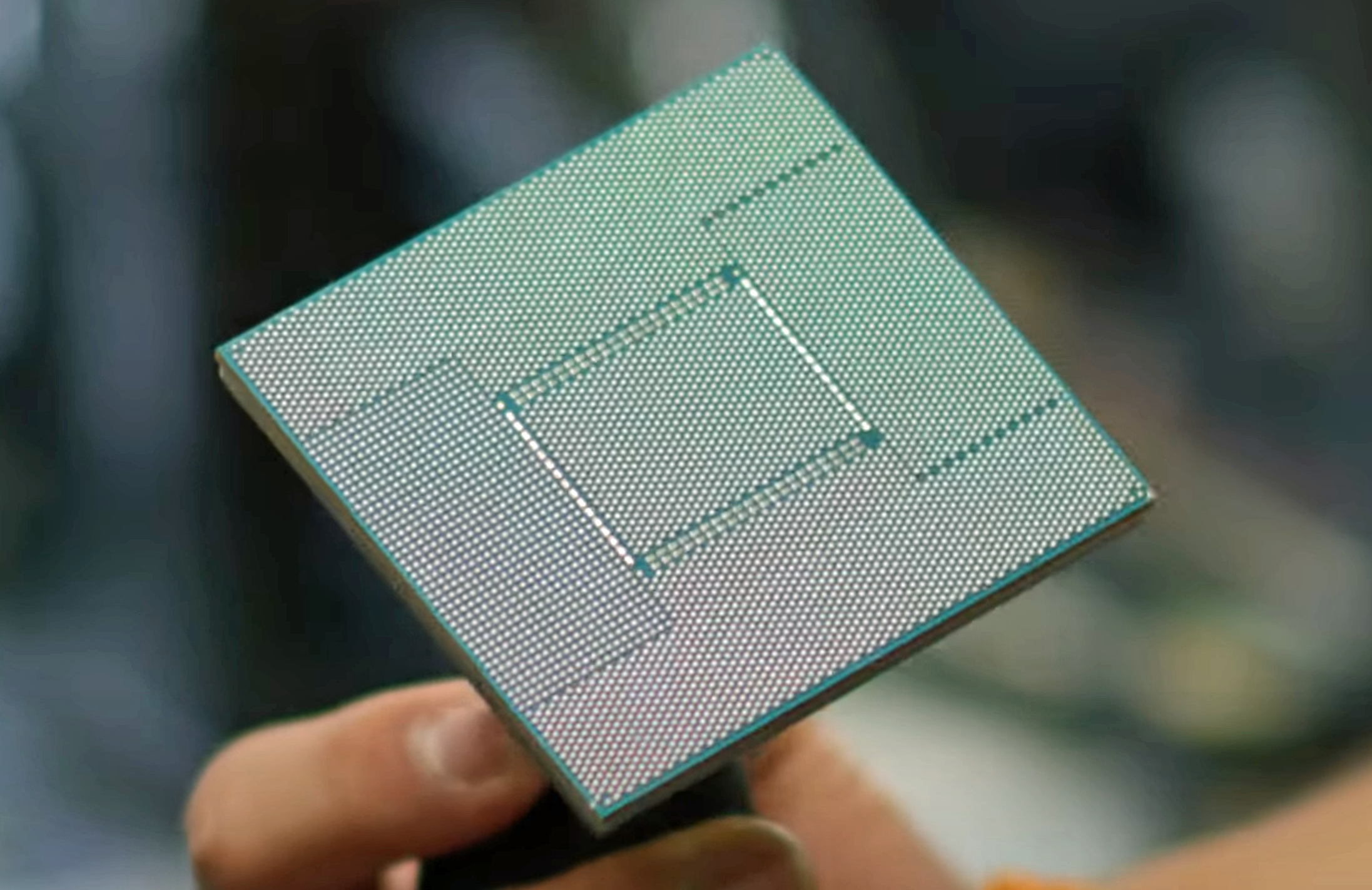

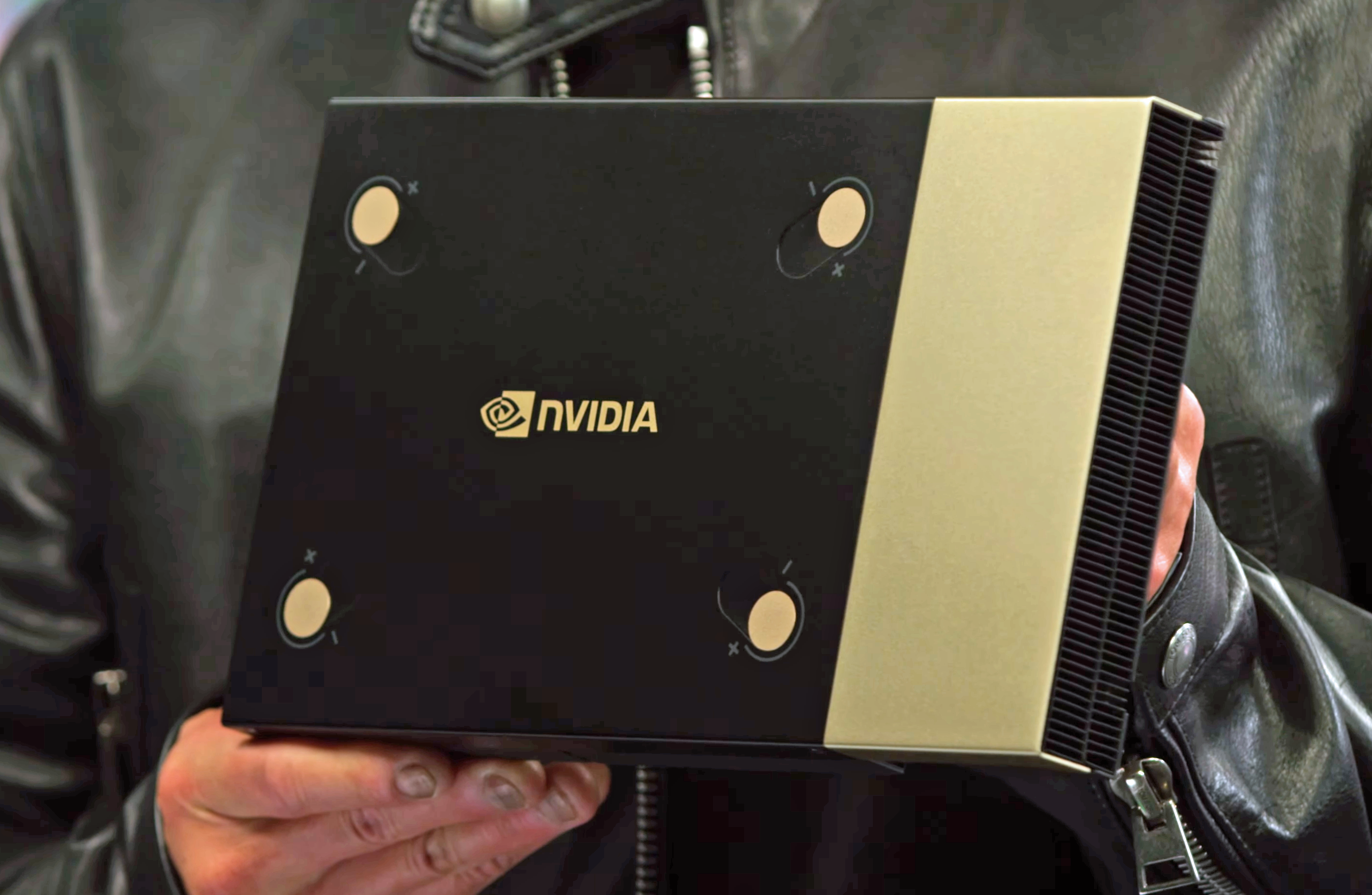

In contrast, Grace's lower power draw will make the chips more forgiving to cool. As revealed at GTC for the first time, Nvidia's 144-core Grace package is 5" x 8" and can fit into passively-cooled modules that are surprisingly compact. These modules still rely upon air cooling, but two can be air-cooled in a single slim 1U chassis.

Nvidia also showed its Grace Hopper Superchip silicon for the first time at GTC. The Superchip combines the Grace CPU with a Hopper GPU on the same package. As you can see in the album above, two of these modules can also fit into a single server chassis. You can read the deep-dive details about this design here.

The big takeaway with this design is that the enhanced CPU+GPU memory coherency, fed by a fat low-latency chip-to-chip connection that's seven times the speed of the PCIe interface, allows the CPU and GPU to share information held in memory at a speed and efficiency that's impossible with previous designs.

Huang explained that this approach is ideal for AI, databases, recommender systems, and large language models (LLM), all of which are in incredible demand. By allowing the GPU to access the CPU's memory directly, data transfers are streamlined to boost performance.

Nvidia's Grace chips may be running a bit behind schedule, but the company has a bevy of partners, with Asus, Atos, Gigabyte, HPE, Supermicro, QCT, Wiston, and Zt all preparing OEM systems for the market. Those systems are now expected in the second half of the year, but Nvidia hasn't said whether or not they will come towards the beginning or end of the second half.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

waltc3 I enjoyed reading him saying "System builders and OEMs are building systems with them right now" except for the fact that they won't be shipping until the latter half of the year. JHH is a hoot with "It just works" (marketing from Apple), and--what was the latest?--"nVidia everywhere" (previous marketing for Microsoft Windows.) JHH needs to work on his presentations a bit, imo.Reply -

ikjadoon Seems clearer the uArch, and less the nodes, is what drives perf/W/core.Reply

AMD Genoa (Zen4) on TSMC N5P still isn't a big enough efficiency leap. Frankly, I don't think Intel or AMD have a chance in perf/W/core until a clean sheet uArch.

The power consumption is higher with these new Socket SP5 processors but as shown by many of the performance-per-Watt metrics, when it comes to the power efficiency it's often ahead of AMD EPYC 7003 "Milan" or worst case was roughly similar performance-per-Watt to those prior generation parts

When NVIDIA, Amazon, Apple, Google, Microsoft, Jim Keller, and even bloody Linux are giving up on the x86 monopoly...and the only laggards are AMD & Intel...sounds pretty obvious money & perf talk.

Thank God NVIDIA couldn't buy Arm Ltd. They didn't need it.

All right, that should be enough feathers rusted. -

bit_user @PaulAlcorn , thanks for the coverage!Reply

Huang also remarked that Nvidia has only been working on the chips for two years, which is a relatively short time given the typical multi-year design cycle for a modern chip.

Nvidia has made no secret of the fact that they're using ARM's Neoverse V2 cores. That means most of their work on this was probably just a matter of integration, rather than ground-up design. ARM tries to make it as fast & easy as possible for its customers to get chips to market that use its IP.

https://developer.nvidia.com/blog/nvidia-grace-cpu-superchip-architecture-in-depth/

For reference, Amazon's Graviton 3, which launched about 15 months ago, uses ARM's Neoverse V1 cores.

Nvidia's use of the Arm instruction set also means there's a heavier lift for software optimizations and porting, and the company has an entirely new platform to build. Jensen alluded to some of that ...

This feels more like an excuse than whatever was their main issue. They've officially supported CUDA on ARM for probably 5 years, now. They've shipped ARM-based SoCs for at least 15 years. All their self-driving stuff is on ARM. All the big hyperscalers have ARM instances. And Fujitsu even launched an ARM-based supercomputer, using their A64FX, which I'm sure prompted some HPC apps to receive ARM ports & optimizations.

It's weird that their Genoa-comparison slide compares against NPS4, as if it's the only option. You don't have to use that - you could also just use NPS1 or NPS2, if you have VMs big enough. -

bit_user Reply

This was designed back before the deal fell-through. Of course they didn't need to own ARM, in order to have access to its IP, but doing so would've enabled them to effectively lower their unit costs (i.e. since they'd have been paying themselves the license fee, and probably would've been tempted to cut themselves a better deal).ikjadoon said:Thank God NVIDIA couldn't buy Arm Ltd. They didn't need it. -

That may be true however, consumer prices would not have went down, rather, they would’ve went up if they had purchased the company. Nvidia has proven they are the extreme of greed.Reply

-

bit_user Reply

My point was purely about the cost to Nvidia - not to their customers. By now, I think we all know that Nvidia will price its products in a profit-maximizing way, irrespective of their costs.Mandark said:That may be true however, consumer prices would not have went down, rather, they would’ve went up if they had purchased the company. Nvidia has proven they are the extreme of greed.