
AMD sent us a Titanite SP5 reference platform for our tests. Because this is a reference platform, not all of its specs are necessarily POR (plan of record), so refer to AMD’s official specifications for final details.

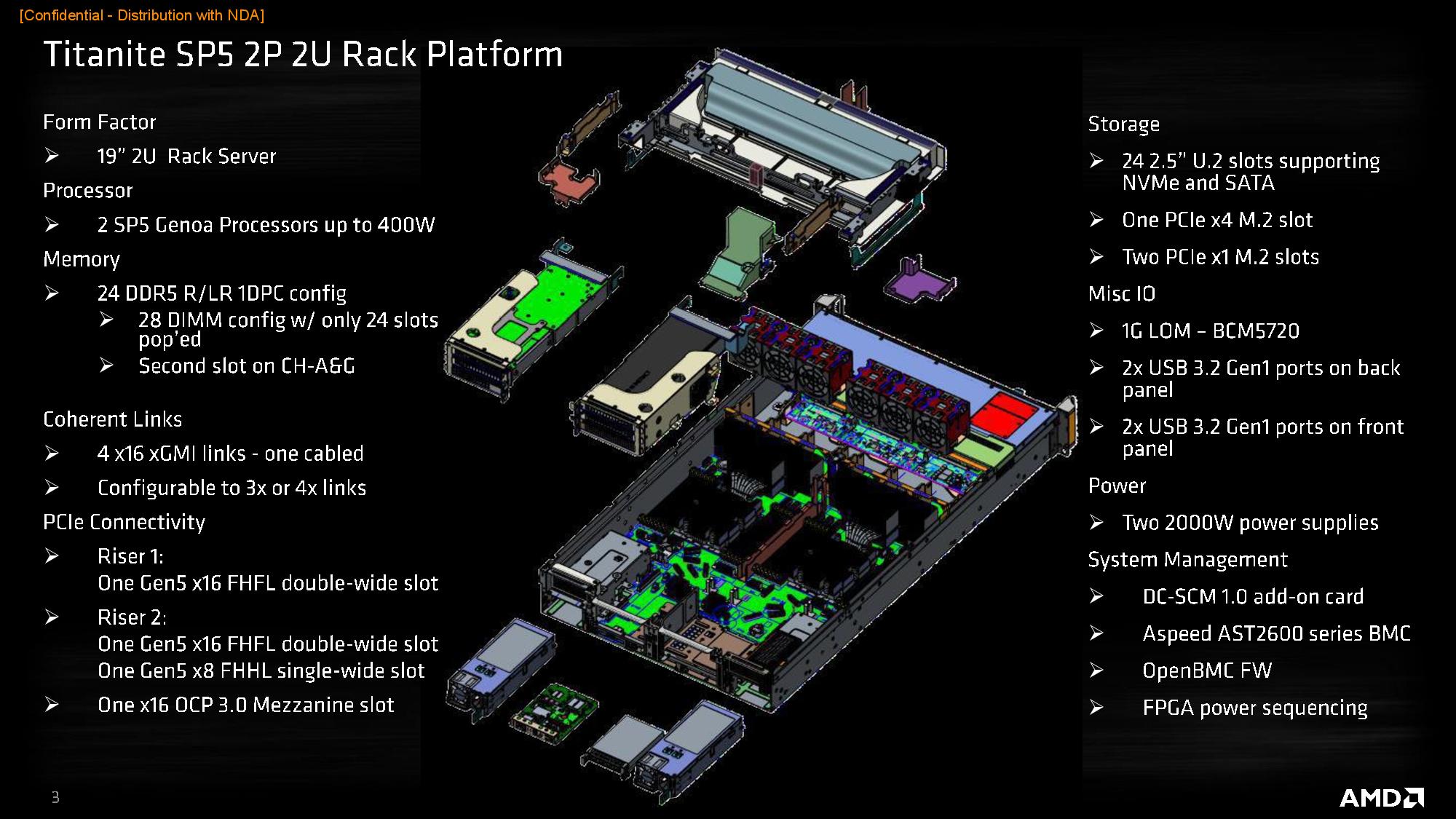

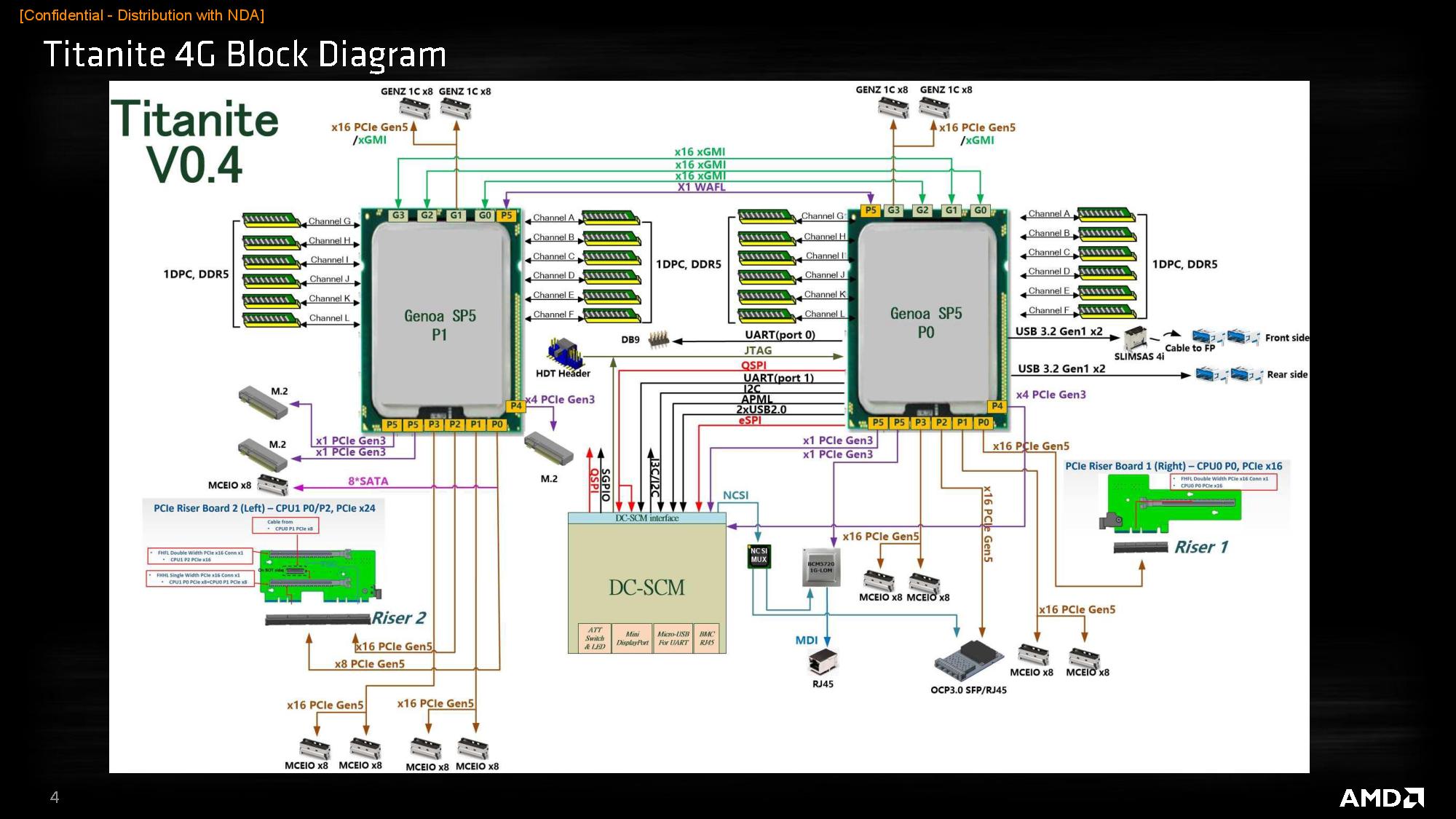

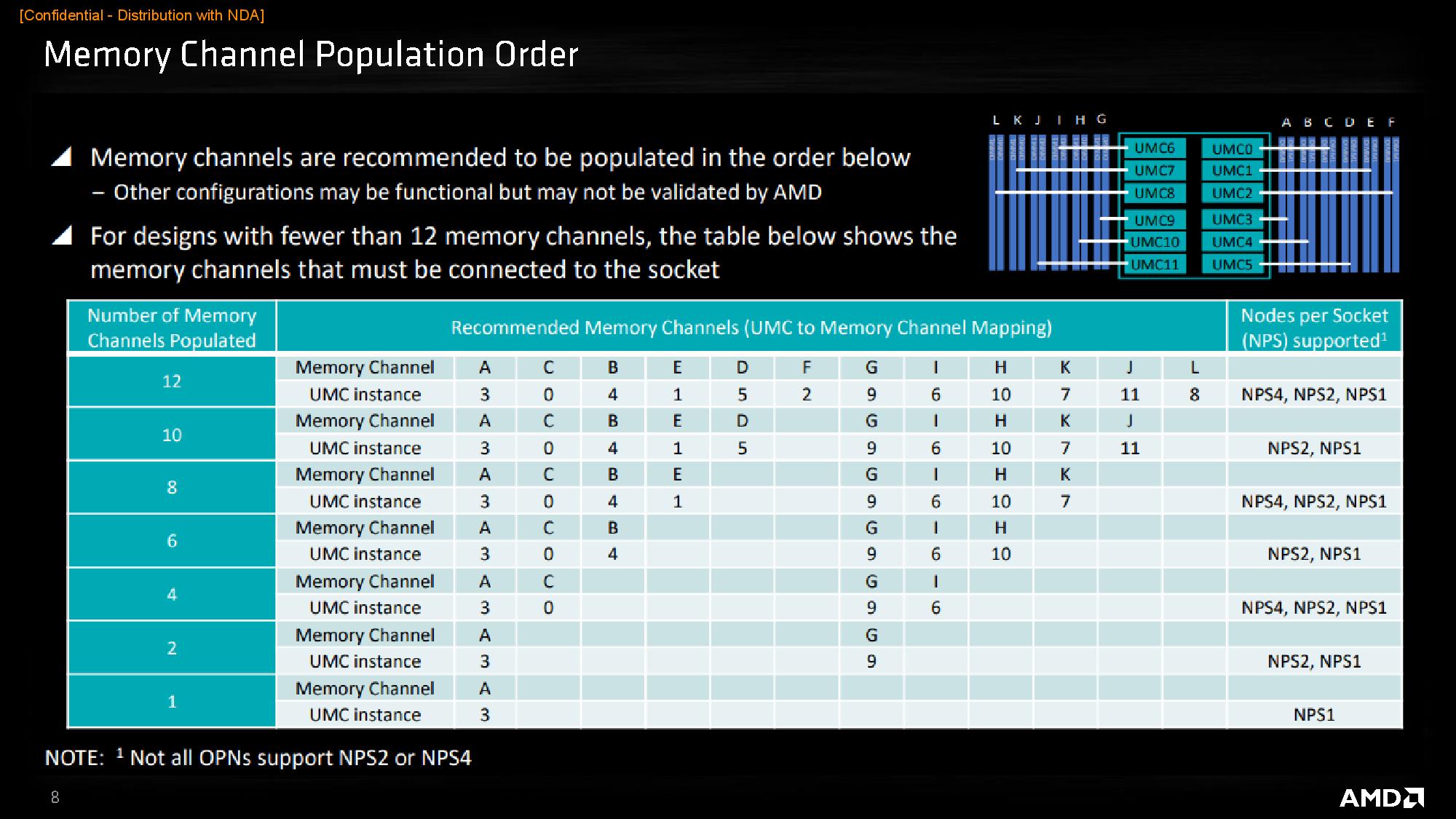

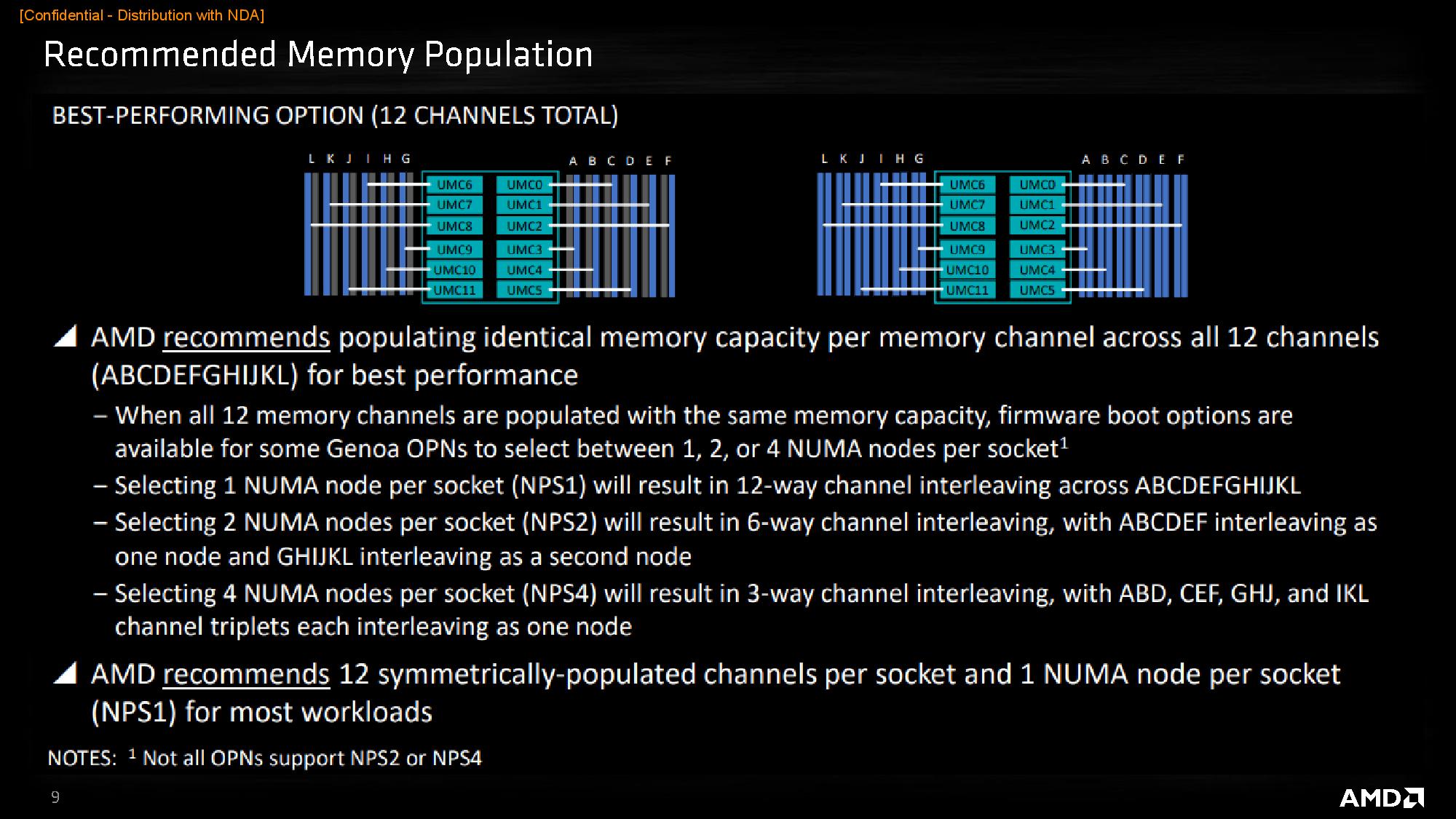

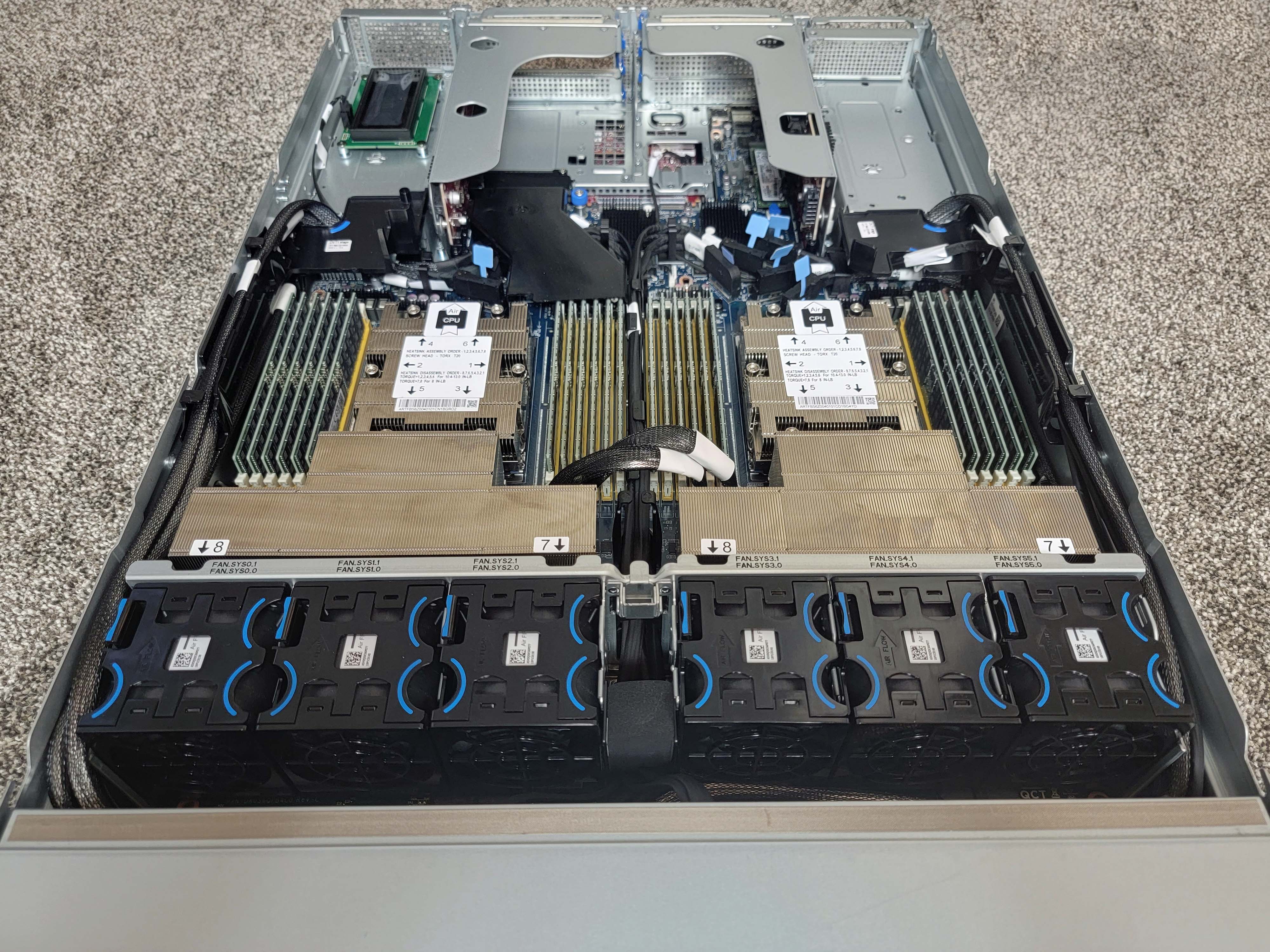

As you can see, the system is a standard 19” 2U rack server that can accommodate two Genoa processors of up to 400W each. It has 24 populated DDR5 slots, though AMD actually routed 28 slots for 2DPC (two DIMMs per channel) development purposes. The sockets are connected by four x16 xGMI links, with one cabled link enabling 3L or 4L (three- or four-link) configurations.

The system has dual 2000W redundant power supplies, but it dynamically spreads the load across both supplies during use. As such, we were advised to plug the system into two separate circuits, because peak power consumption can trip a single circuit breaker. The remaining slides are self-explanatory and show the system’s features quite well, so we won’t repeat them here.

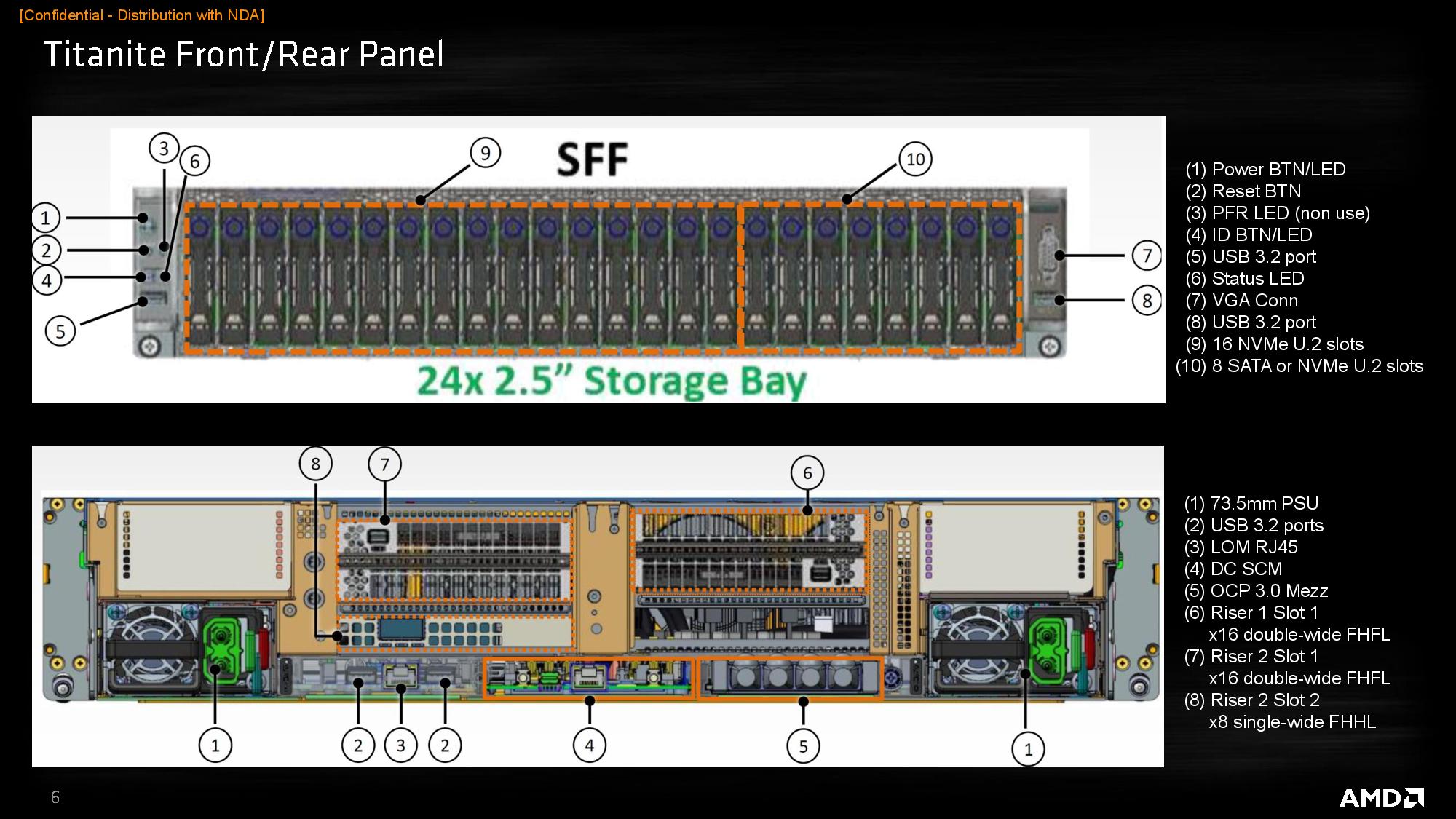

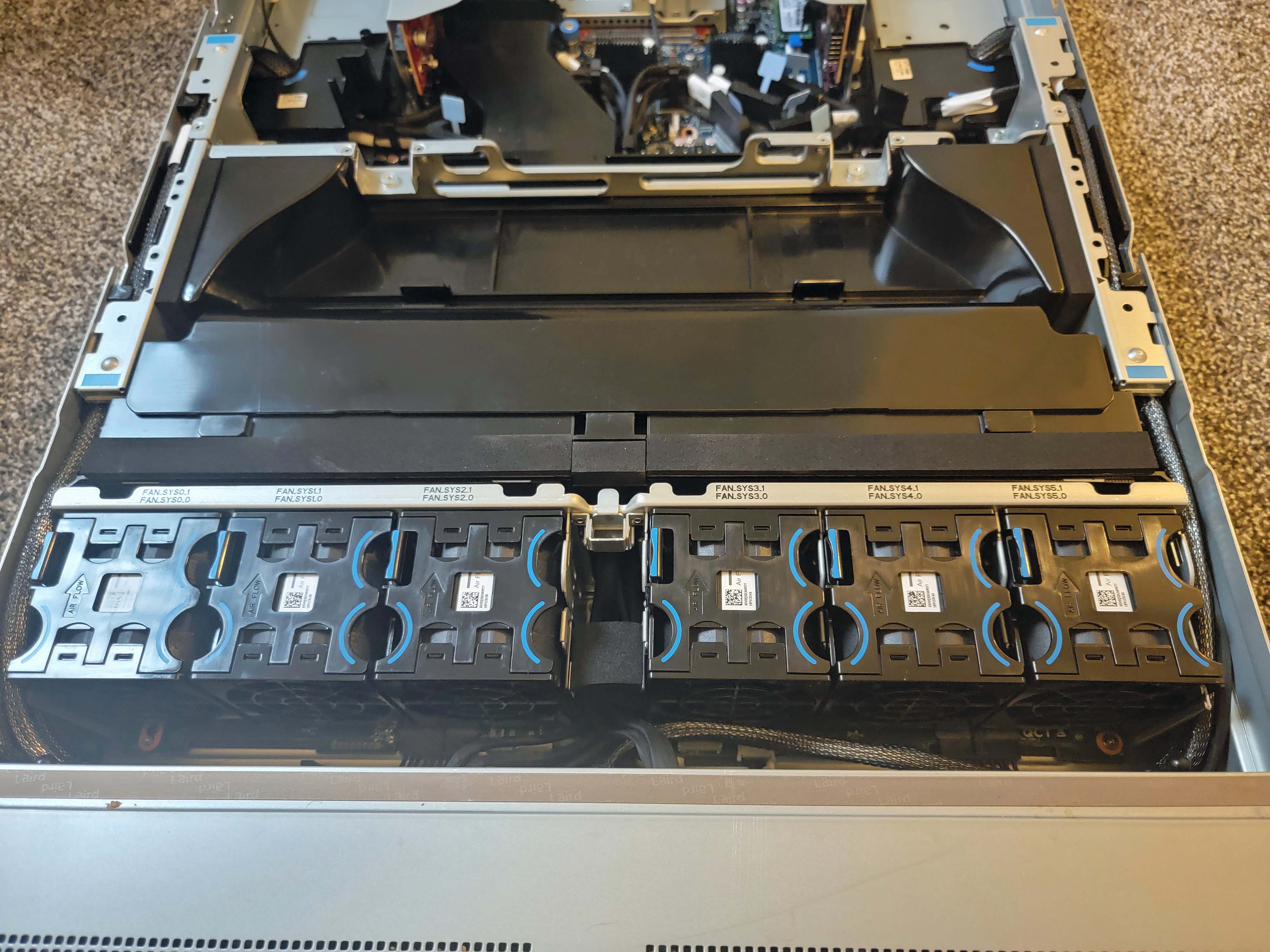

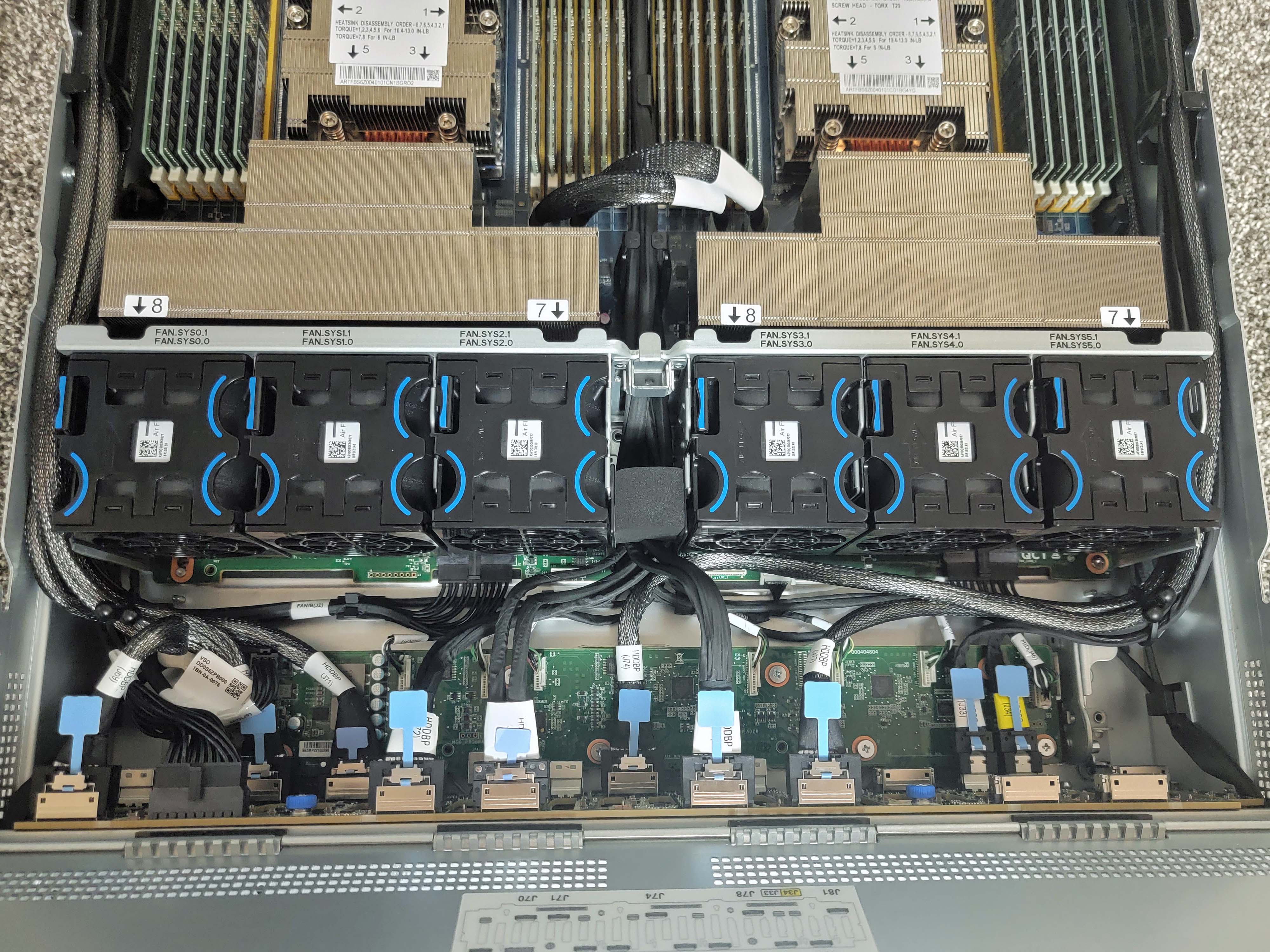

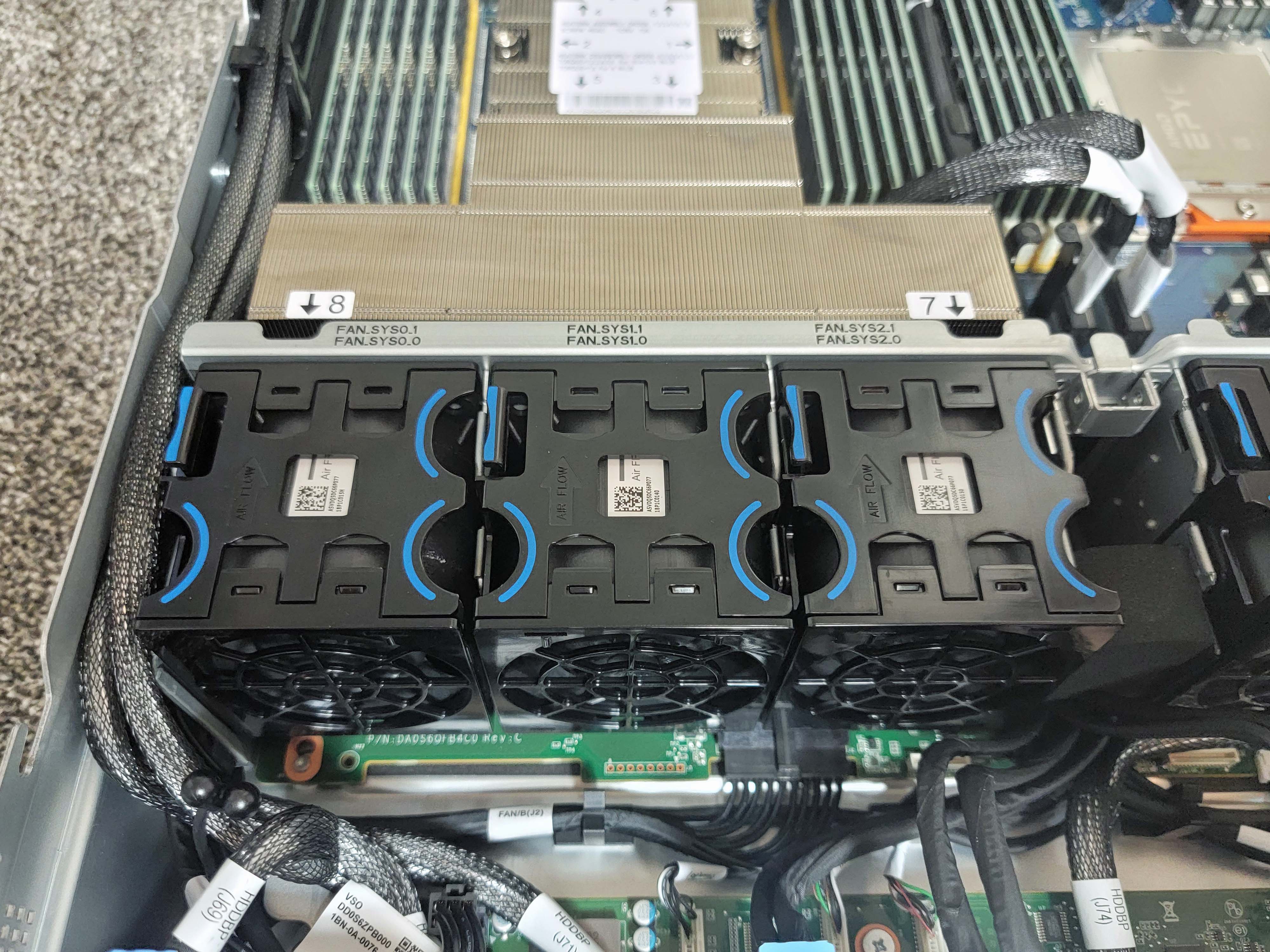

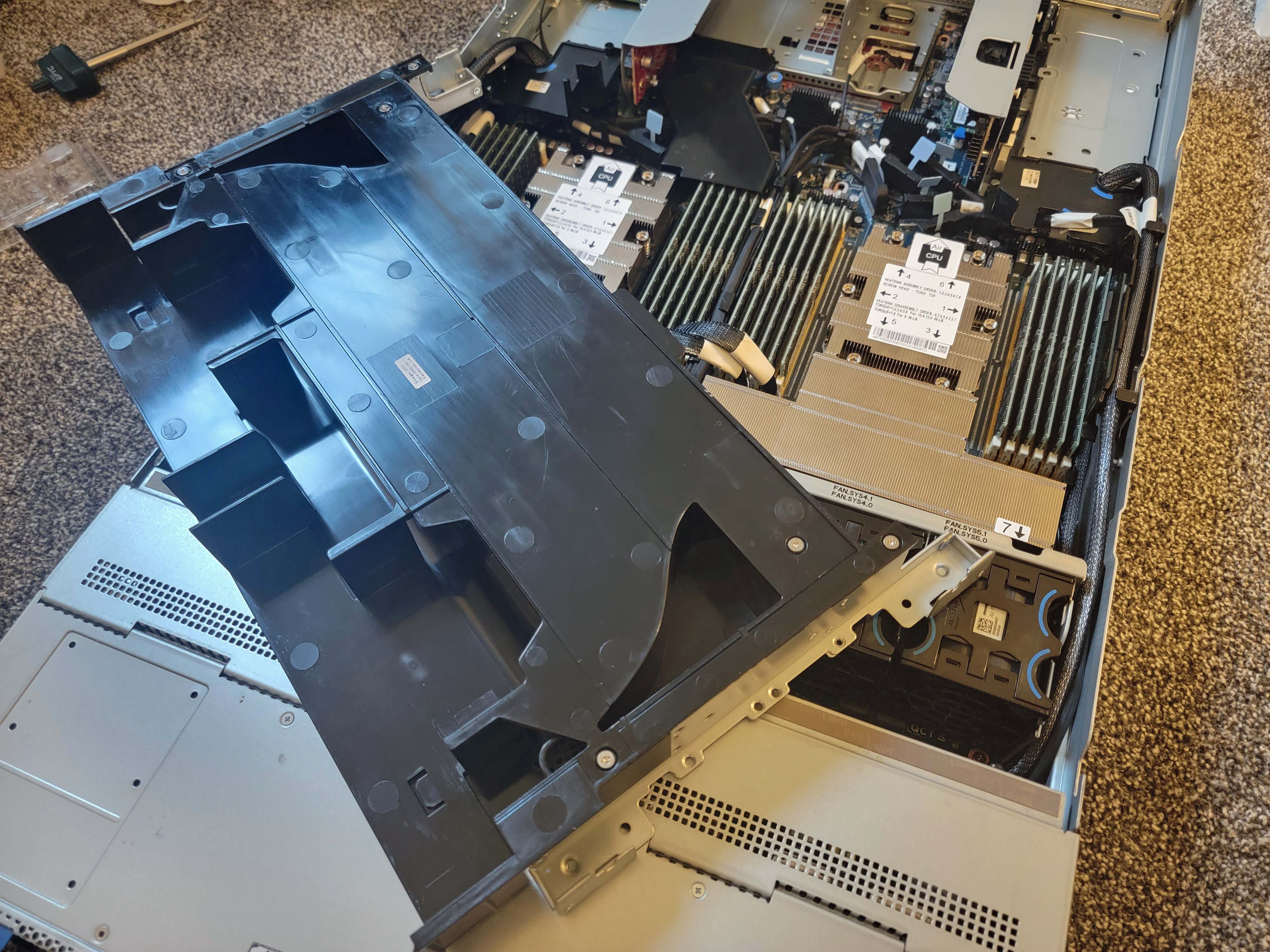

The system has twenty-four 2.5” NVMe bays up front, leveraging EPYC’s incredible PCIe connectivity, but we used the built-in M.2 slot for our tests. As you can see, a large black air baffle directs air over incredibly large heatsinks with a large frontal fin stack (more on those in a moment).

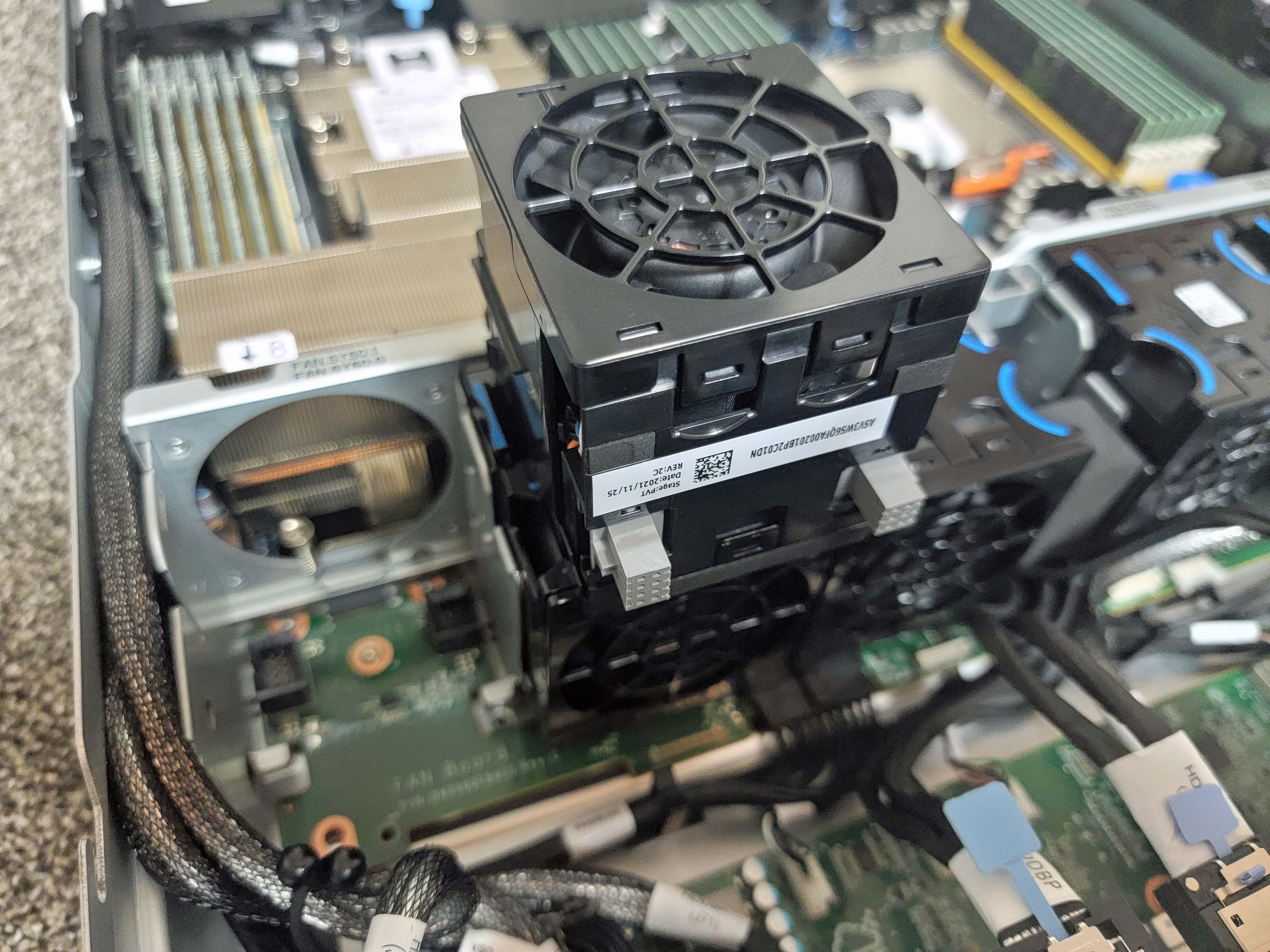

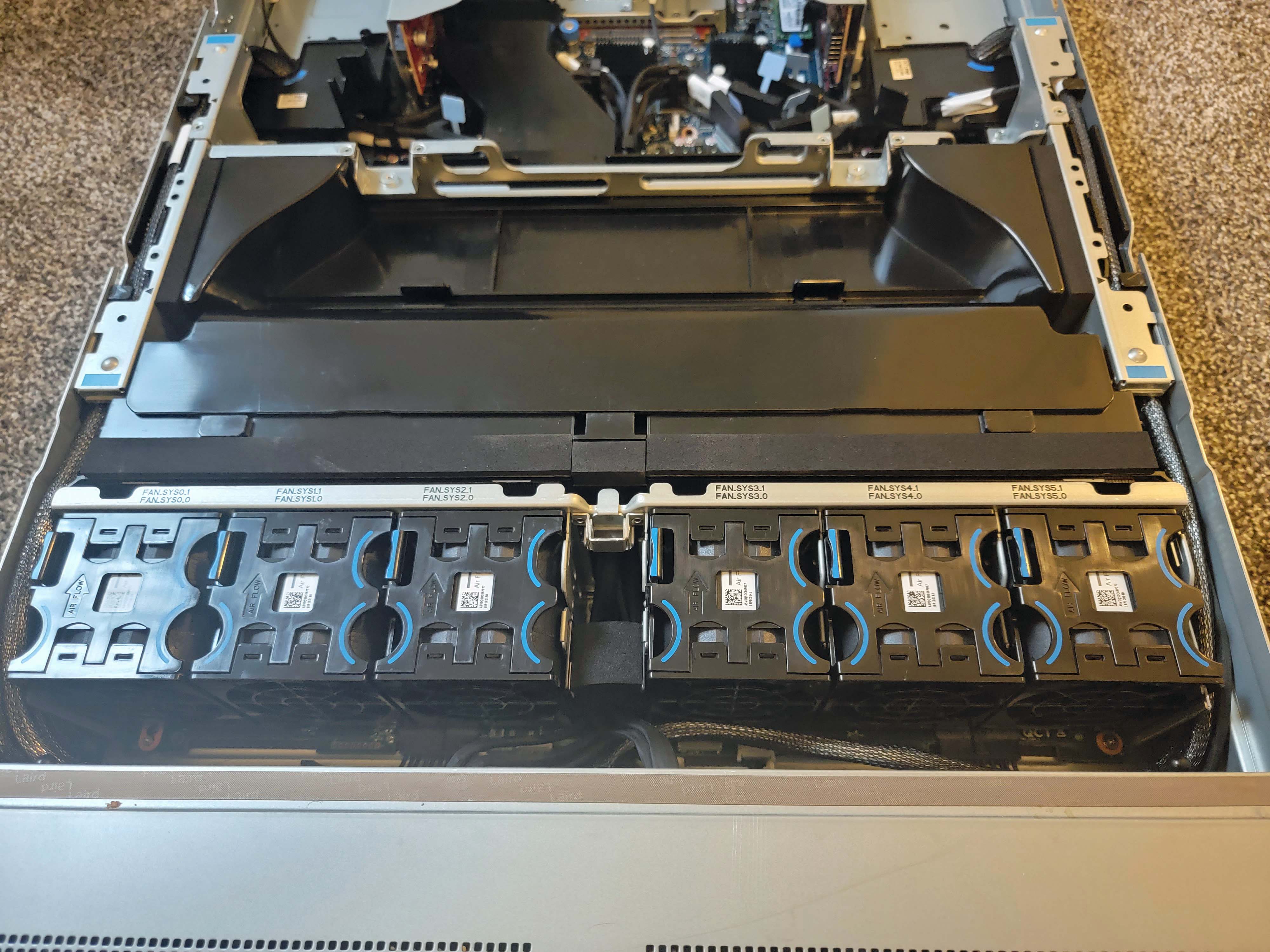

We can also see six fans arranged across the front of the system. These 20,000-RPM fans alone can draw up to 300W under full load. That airflow is necessary to cool 800W of CPU power, plus the additional ~300W consumed by the 1.5TB of DDR5 memory alone.

Because so much power goes to components other than the CPUs, measurements taken at the wall aren’t useful for precisely gauging processor power. We’re working on exposing software-based counters to quantify power consumption for this review, but that remains a work in progress.

We did see ~1800W of power draw at the wall during heavy workloads like Gromacs and OpenSSL, but again, a big portion of that is due to the fans and memory, so it will vary widely with the system and memory configuration. Notably, the 2000W power supplies are 80 Plus Titanium rated and incredibly small given their output.
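To put the wall-power figures in perspective, here is a rough power budget built from the numbers above. It is a sketch under stated assumptions, not AMD data: it treats the CPU, fan, and memory figures as simultaneous peaks (in practice they won't always coincide), reads 1.5TB as 1,536GB spread across the 24 DIMMs, lumps storage, networking, VRM, and PSU losses into a single "other" bucket, and assumes a 120V North American circuit to illustrate the breaker concern. All names in the code are illustrative.

```python
# Rough power budget for the Titanite test system, built from the article's figures.
# Assumptions (illustrative, not AMD data): every component figure is a peak that
# coincides with the others, and "other" lumps storage, NICs, VRM and PSU losses.

CPU_TDP_W = 400      # maximum TDP per socket
SOCKETS = 2
FANS_W = 300         # six 20,000-RPM fans at full load
MEMORY_W = 300       # approximate draw of the DDR5 memory
DIMMS = 24
MEMORY_GB = 1536     # 1.5TB, read as 1,536GB (assumption)
WALL_W = 1800        # observed at the wall during Gromacs and OpenSSL


def power_budget():
    """Split the observed wall power into known components and a remainder."""
    cpu_w = CPU_TDP_W * SOCKETS
    known_w = cpu_w + FANS_W + MEMORY_W
    return {
        "cpu_w": cpu_w,
        "known_w": known_w,
        "other_w": WALL_W - known_w,
        "w_per_dimm": MEMORY_W / DIMMS,
        "gb_per_dimm": MEMORY_GB / DIMMS,
    }


def amps(watts, volts):
    """Line current for a given load, ignoring power factor."""
    return watts / volts


budget = power_budget()
print(budget)
# prints: 15.0 A at 120 V, 7.8 A at 230 V
print(f"{amps(WALL_W, 120):.1f} A at 120 V, {amps(WALL_W, 230):.1f} A at 230 V")
```

Under these assumptions the ~400W remainder is only a loose estimate, but the current figure is simple arithmetic: ~1800W is about 15A at 120V, right at the rating of a common 15A branch circuit, which fits the advice to split the two supplies across separate circuits. It also works out to roughly 12.5W per 64GB DIMM.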

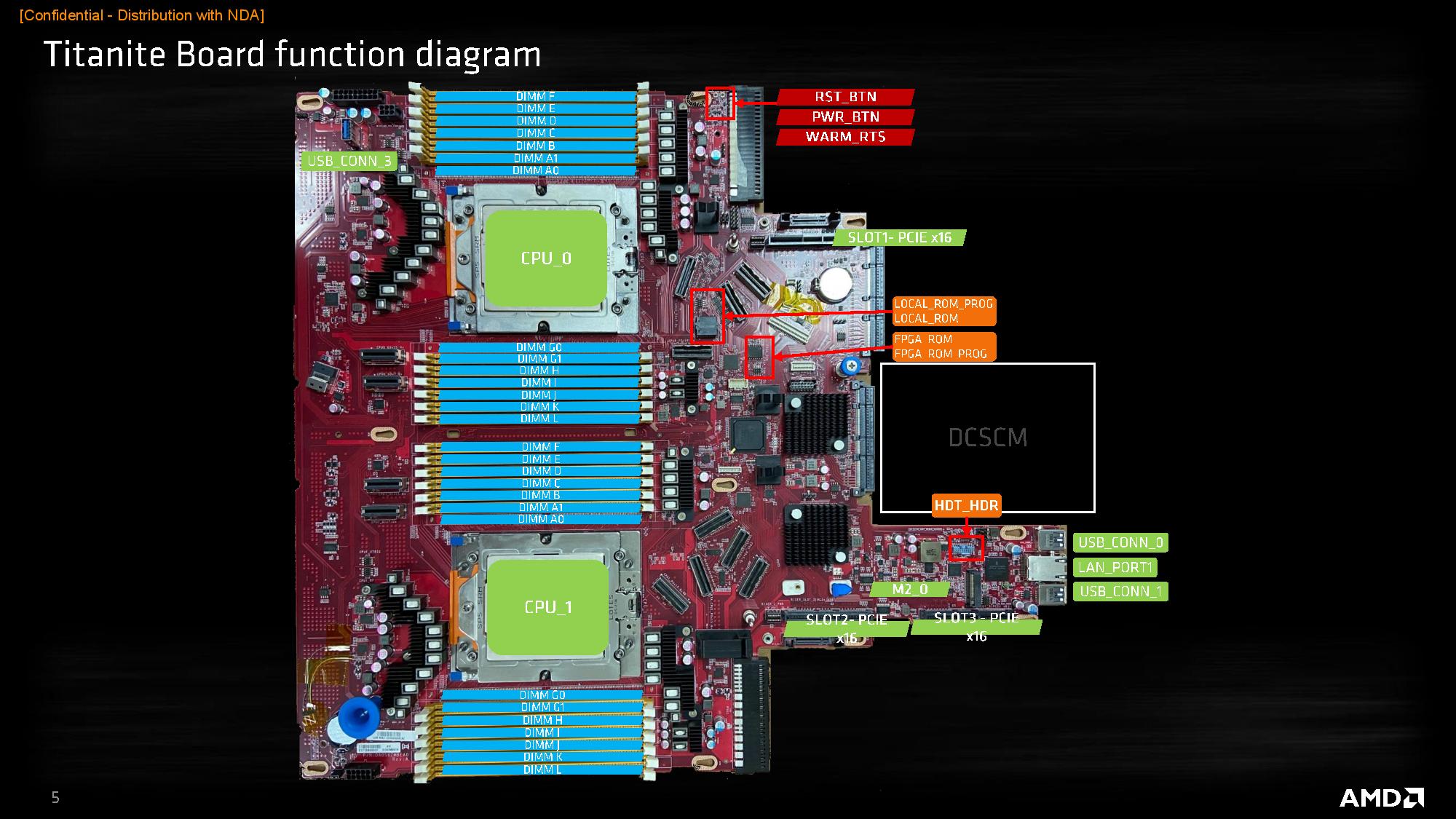

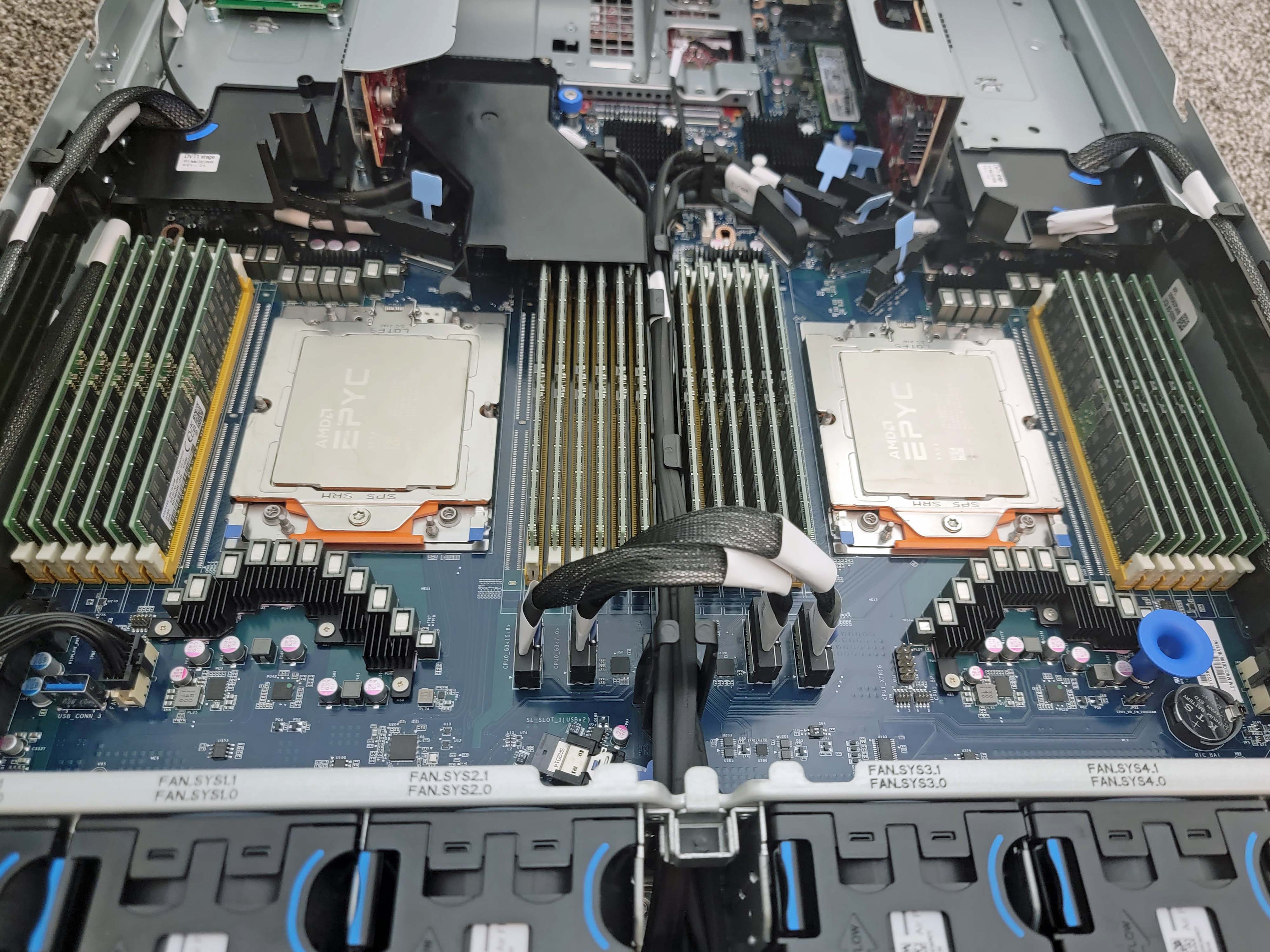

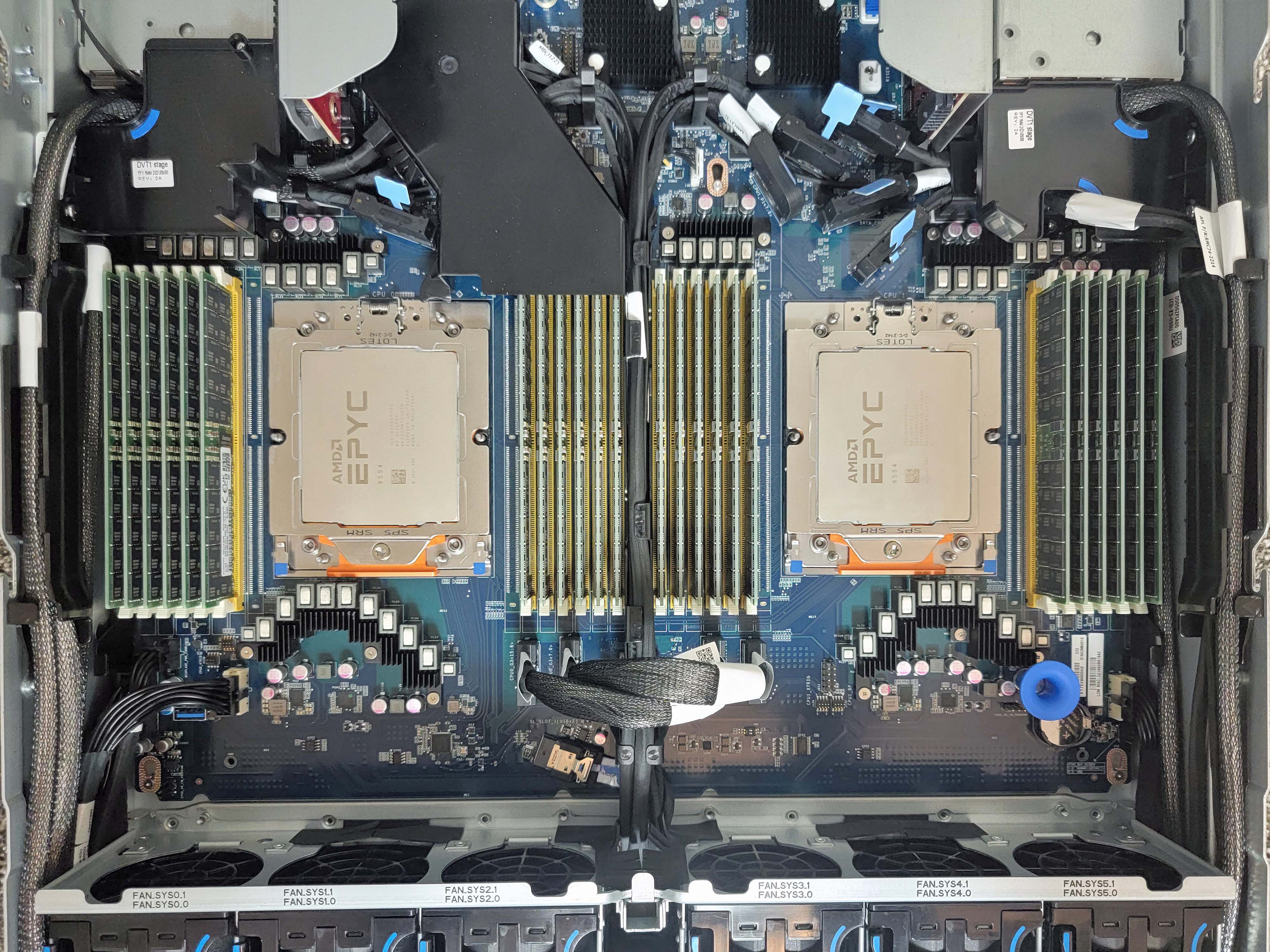

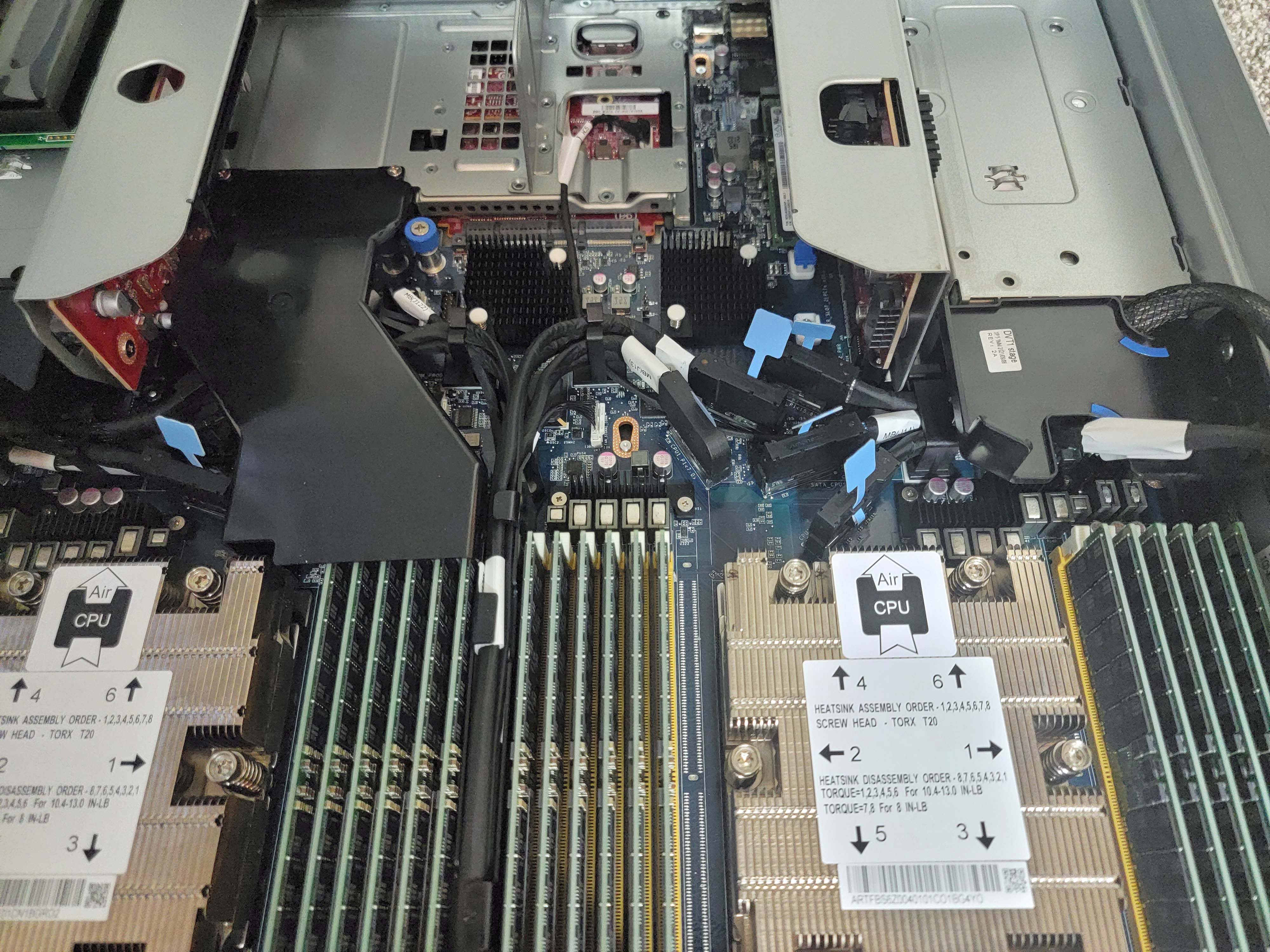

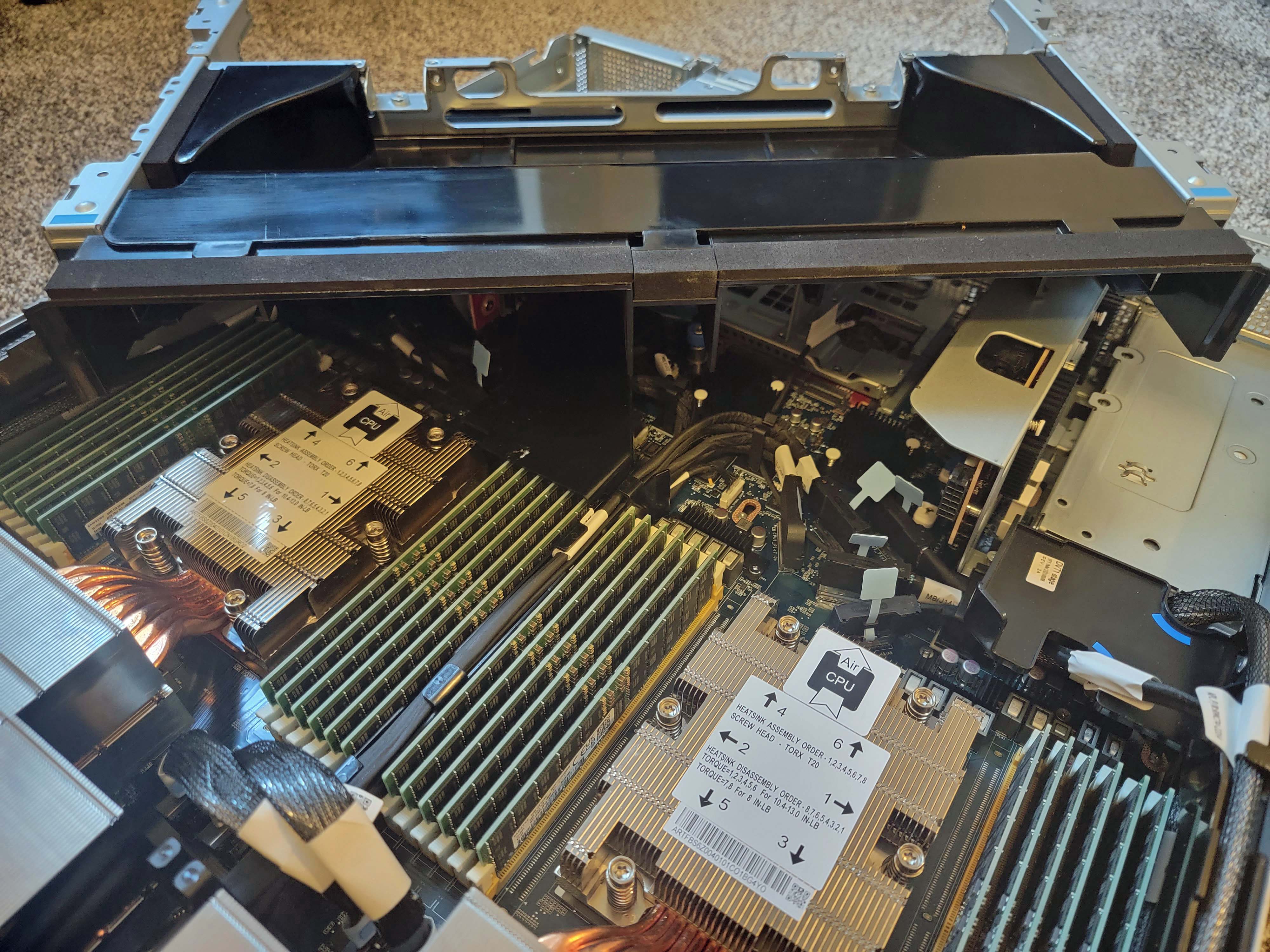

The motherboard houses multiple rows of VRMs, all dedicated to delivering power to the CPU sockets. (Remember, DDR5 has its power circuitry on the DIMM itself.) Two rows of these VRMs are arranged in a ‘flying V’-esque formation to avoid the motherboard traces on one side and the DDR5 DIMMs on the other. Board space is obviously at a premium.

We also see several cables that plug into the motherboard near the CPU sockets. These connections are splayed across the motherboard in a seemingly haphazard fashion, but this avoids cable crossovers that would otherwise make such a dense cabling arrangement impossible. You’ll see similar designs in shipping servers to provide enough cable clearance.


These cables carry the I/O interfaces to the server's front panel, which requires rather complex routing. That routing could be eased by using one of the two cabled Infinity Fabric links, which run between the fans and the DRAM slots in the center of the chassis, for I/O instead. Interestingly, AMD chose not to use a 3L implementation in this reference platform, but this cabling is a perfect example of why that option is so useful.

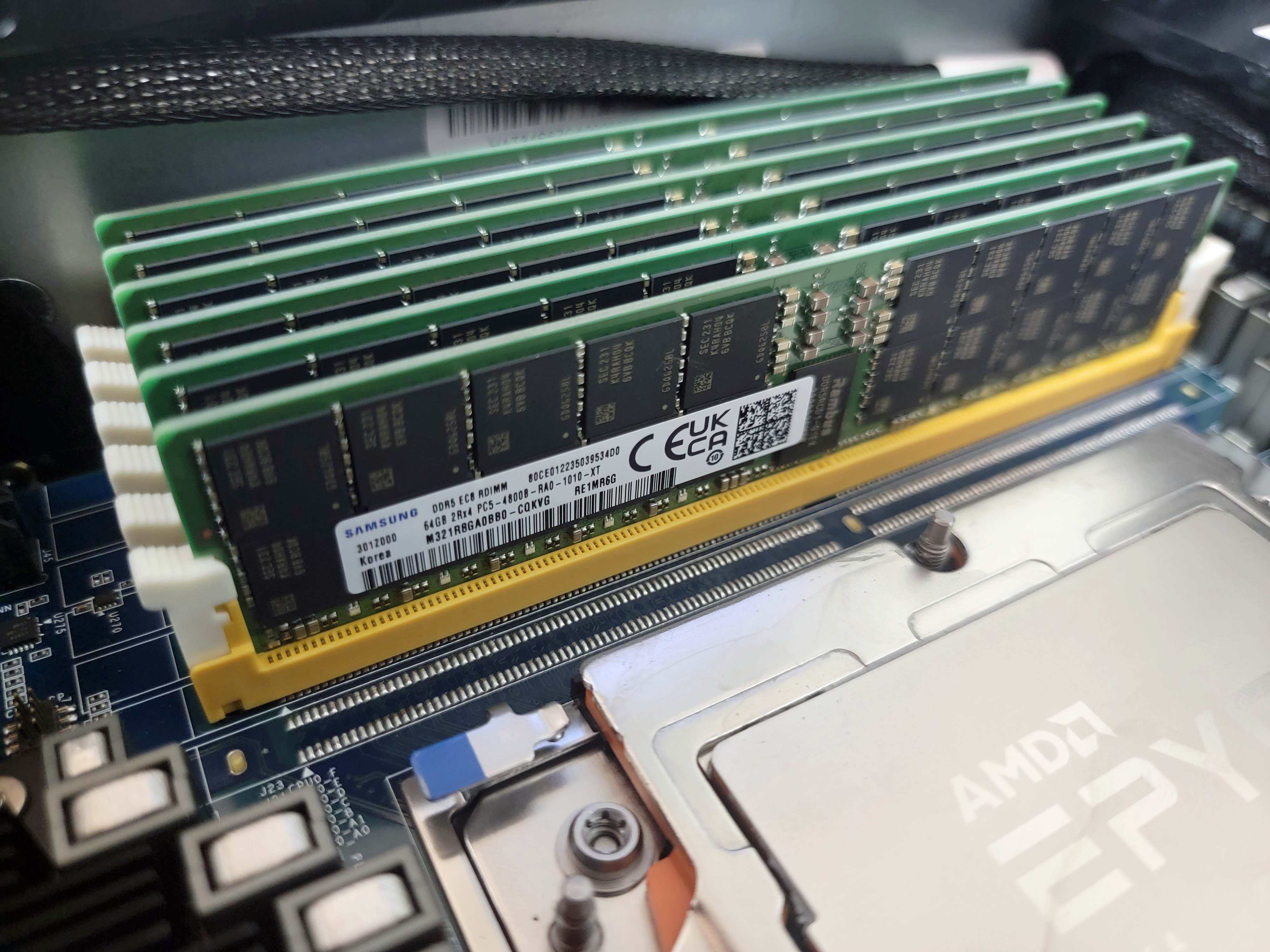

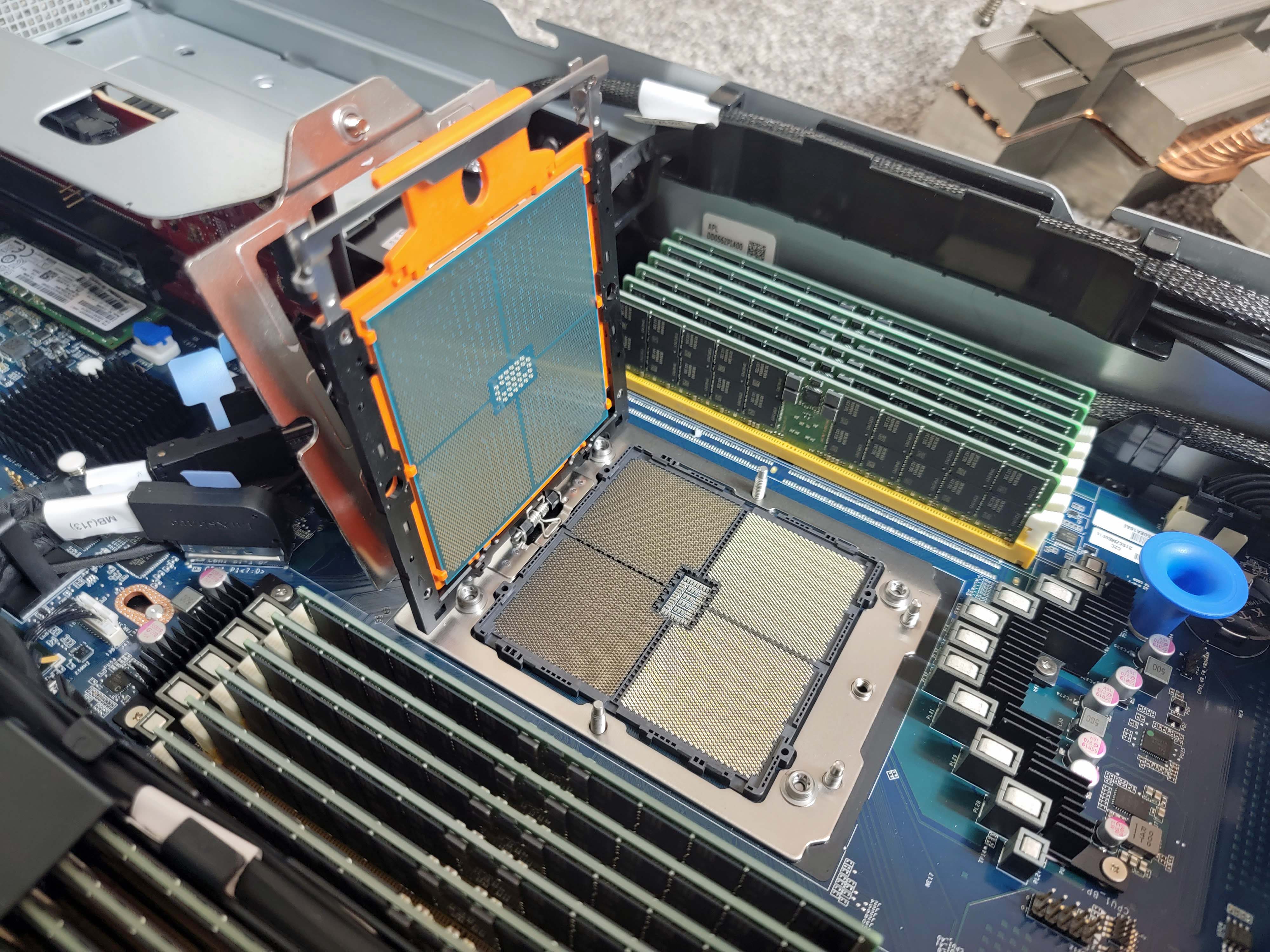

The system has 12 DRAM slots flanking each socket, for a total of 24. As you can see in the image of the DDR5 DIMMs, there are solder pads for one additional DRAM slot per side, but AMD chose not to populate them on this platform. Instead, those pads will be used to qualify 2DPC configurations.
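These slot counts line up with the 28 routed slots mentioned earlier. The quick check below assumes that "one additional slot per side" means one unpopulated footprint on each side of each socket (two per socket), and that each extra footprint adds a second slot to a distinct channel; both are our reading of the photos, not an AMD specification.

```python
# DIMM-slot arithmetic for the Titanite board (illustrative; the pad layout is assumed).
CHANNELS_PER_SOCKET = 12      # Genoa exposes 12 DDR5 channels per socket
SOCKETS = 2
EXTRA_PADS_PER_SOCKET = 2     # assumed: one unpopulated footprint on each side of a socket

populated = CHANNELS_PER_SOCKET * SOCKETS                 # 1DPC: one DIMM per channel
routed = populated + EXTRA_PADS_PER_SOCKET * SOCKETS      # slots AMD actually routed
full_2dpc = CHANNELS_PER_SOCKET * SOCKETS * 2             # slots a full 2DPC board would need
second_slot_channels = routed - populated                 # assumed: channels with a second slot

print(populated, routed, full_2dpc, second_slot_channels)  # 24 28 48 4
```

Under that reading, the extra pads would give four channels a second slot, which would let AMD exercise 2DPC on a subset of channels without the board space a full 48-slot layout would require.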

Interestingly, the DDR5 slots are soldered directly to the top of the motherboard as surface-mounted devices (SMD), whereas DDR4 slots are soldered through the board entirely. SMD mounting is needed to meet DDR5’s tighter signal integrity requirements, but it results in much 'weaker' slot attachment. Additionally, AMD has to use slimmer DDR5 slots to cram 12 channels of memory into a standard 2U server chassis.

AMD cautioned us that it has seen several incidents where lateral pressure during DIMM installation stripped the DIMM slot off the board because of this weaker connection. We were also told to take care when installing the heatsinks to avoid jostling the fragile DDR5 slots.

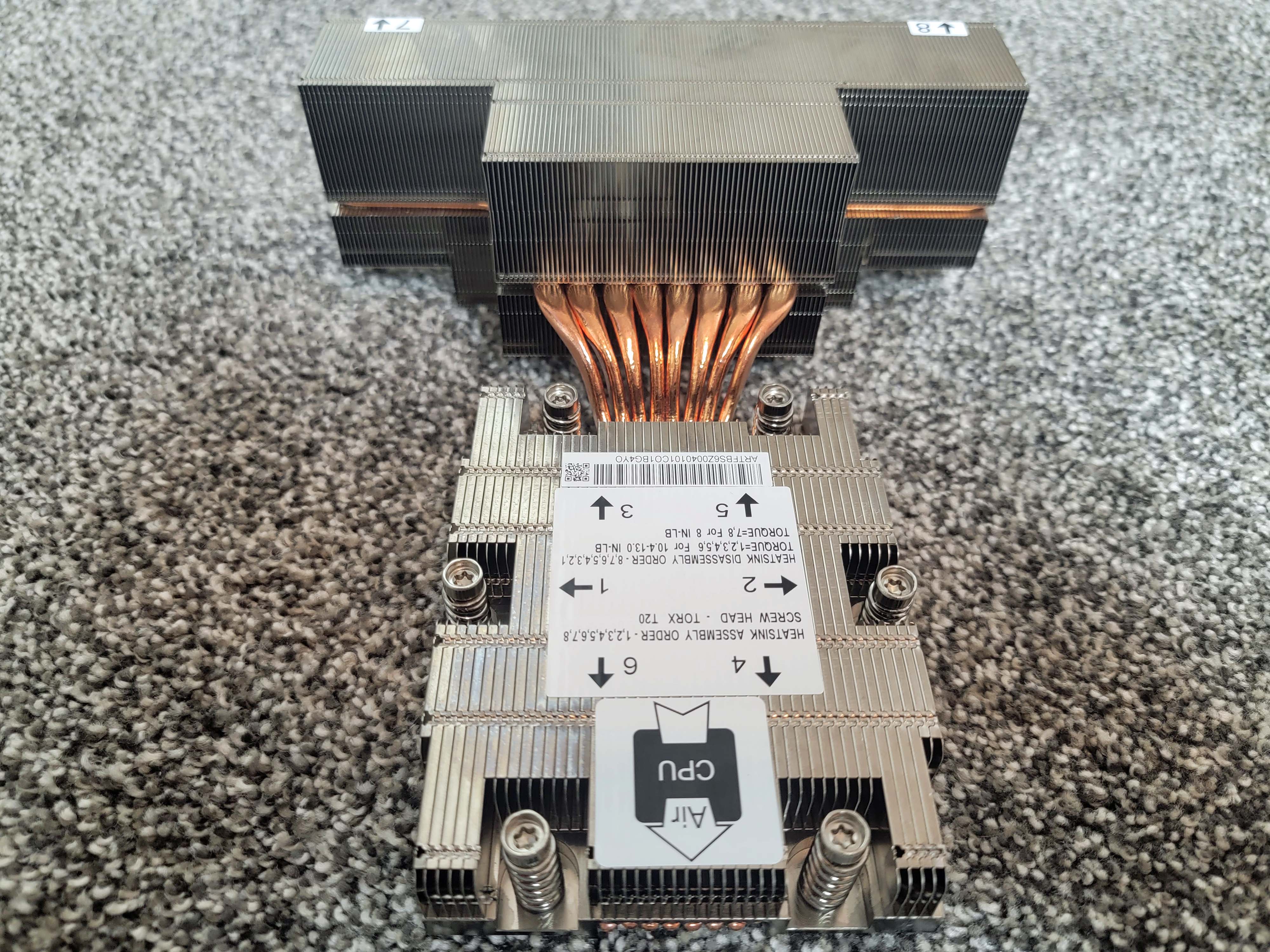

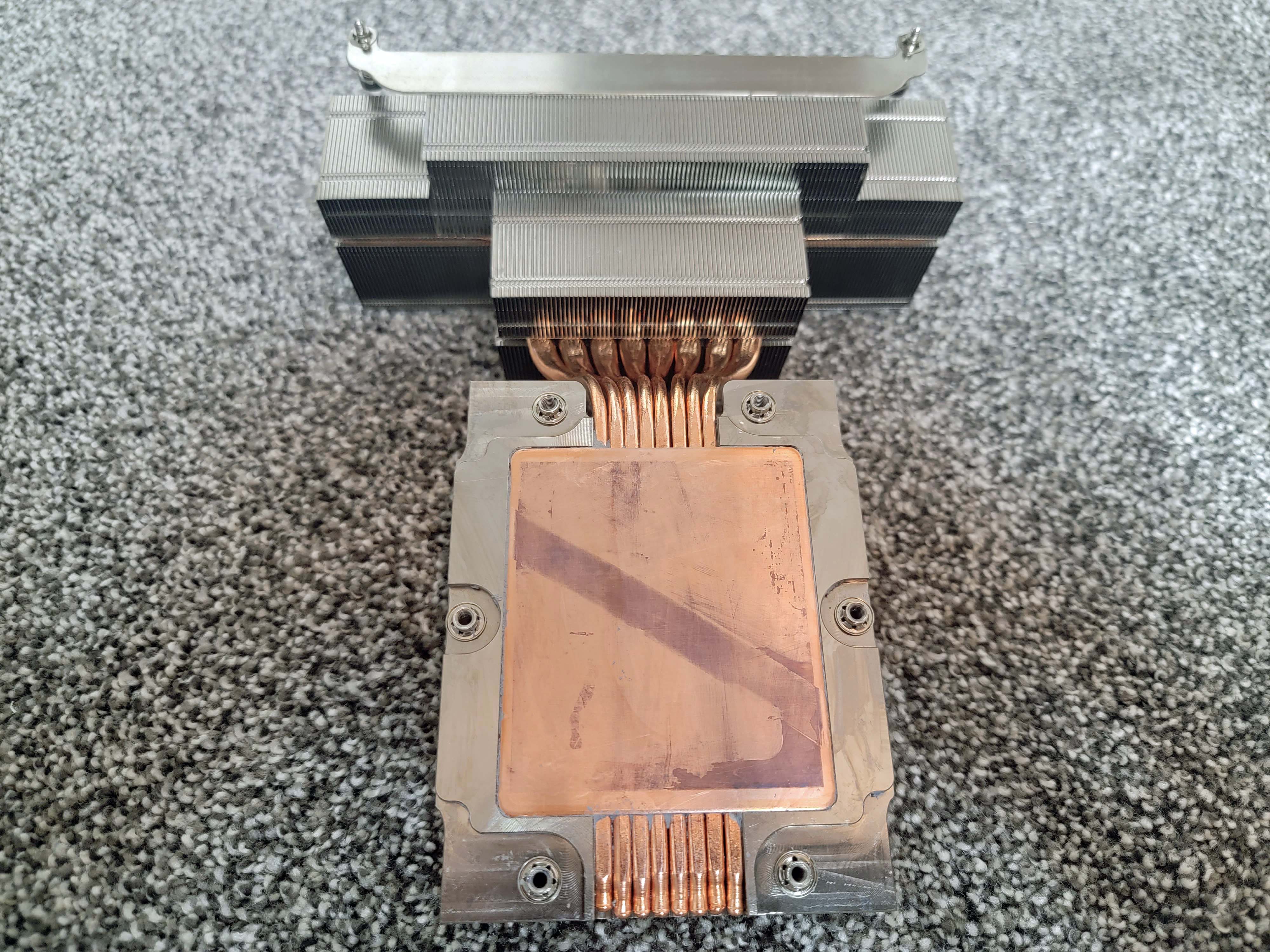

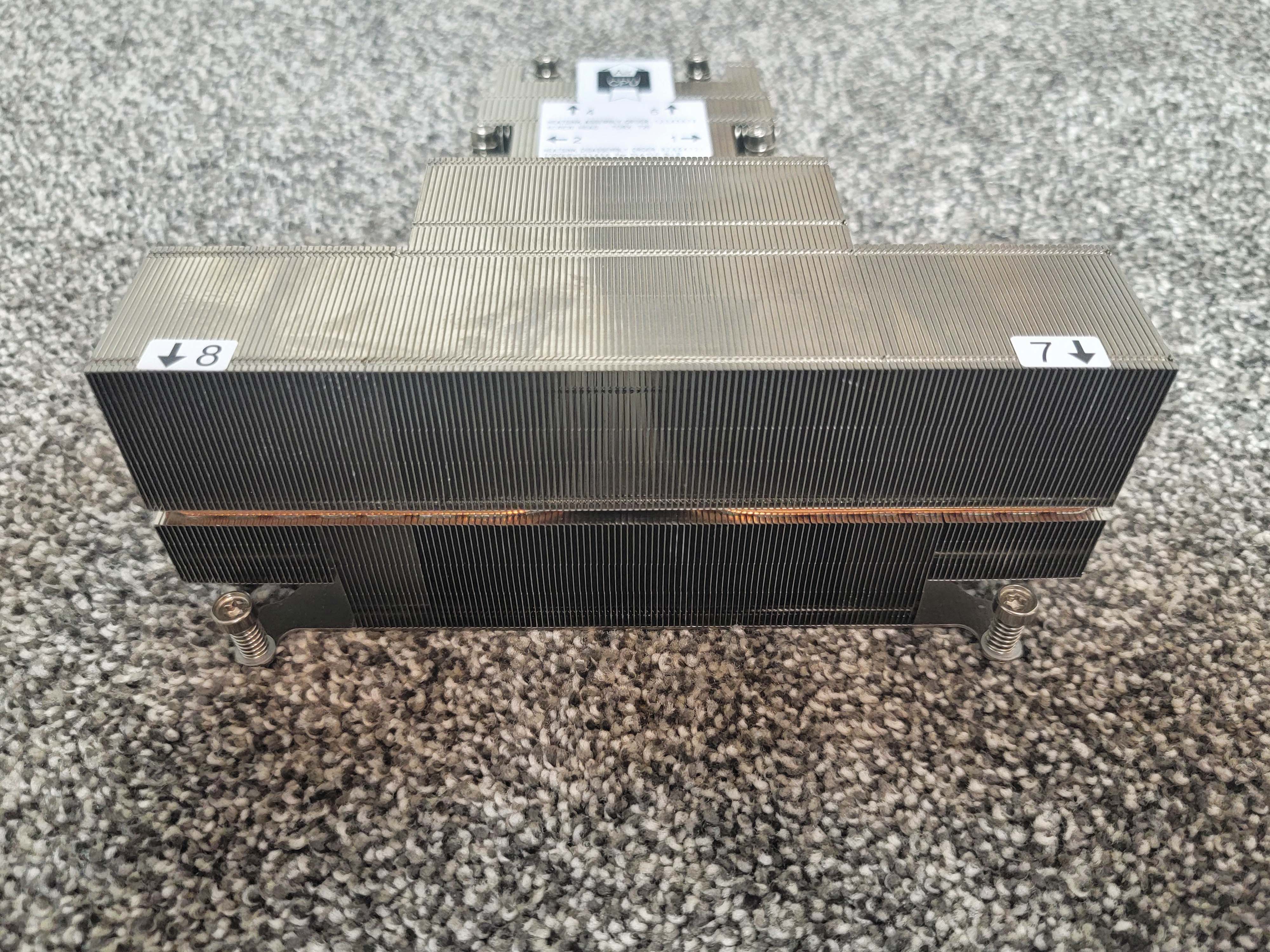

The heatsinks are massive affairs that consume far more space than we’re accustomed to seeing with previous-gen systems. As you can see, the rear of the assembly is what we would consider a ‘standard’ server CPU air cooler, much like the ones you’ll find on any AMD or Intel system. The copper cooling core mates with the CPU in standard fashion, but AMD elongated Genoa’s IHS specifically to provide more surface area to dissipate heat into the sink.

The standard portion of the heatsink has heatpipes that carry excess heat to the forward fin stack, a not-so-new but relatively rare design that will now become much more common in air-cooled servers. This forward area has two fin stacks sandwiching the heat pipes, which together provide previously unmatched cooling capabilities.

This (for lack of a better term) ‘dual-stage’ type of air cooler is largely responsible for enabling the higher level of power consumption, and thus performance, in air-cooled servers. Combined with smart system engineering to ensure tightly directed airflow over both the forward and rear fin stacks (helped along by the black air baffle), these heatsinks can dissipate up to 400W of thermal load. However, it isn’t clear whether this type of heatsink will be used only in the highest-power servers, or whether we’ll see it on lower-end servers as well. The fans are all hot-swappable, with power connectors integrated directly into the motherboard.

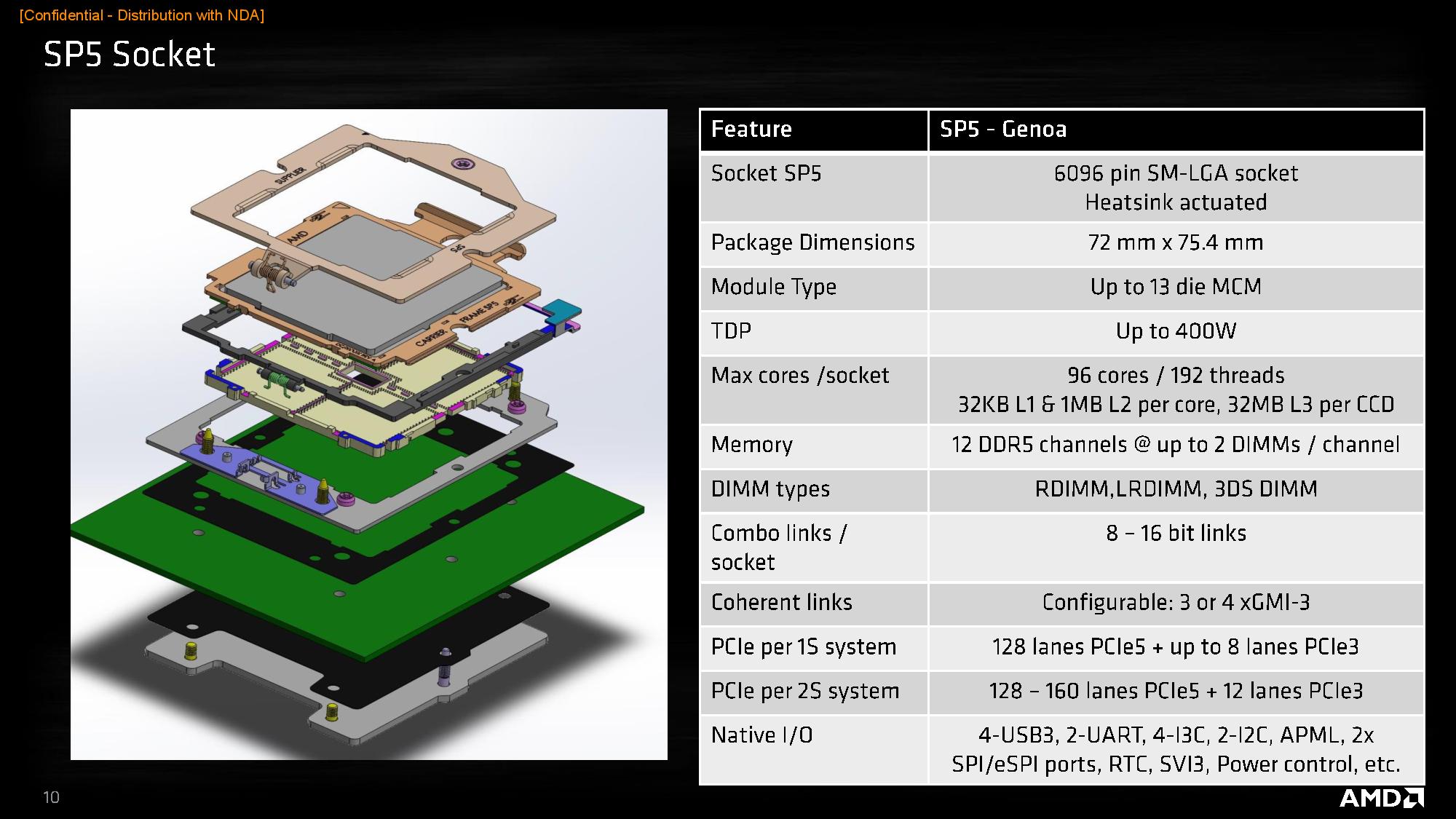

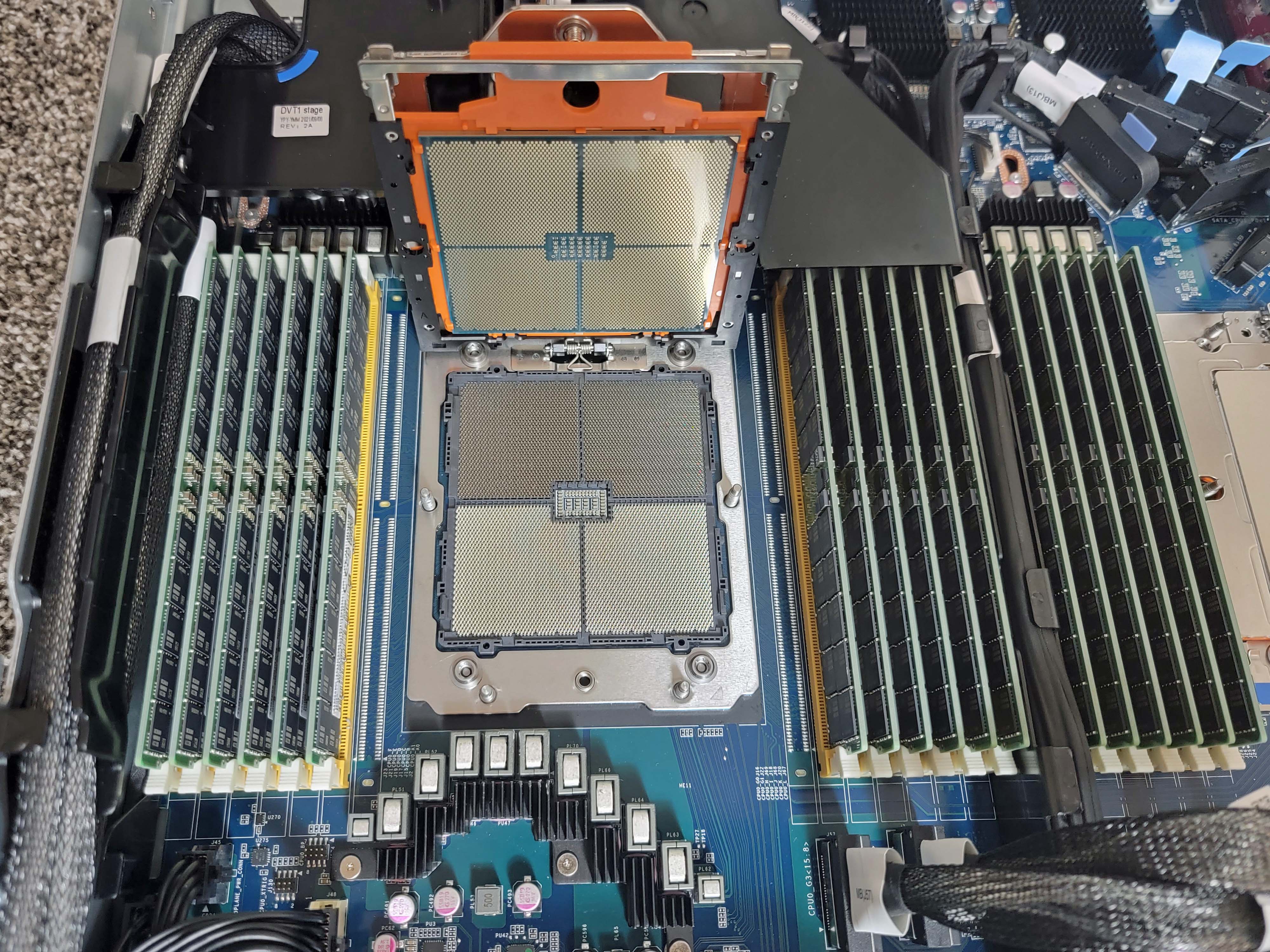

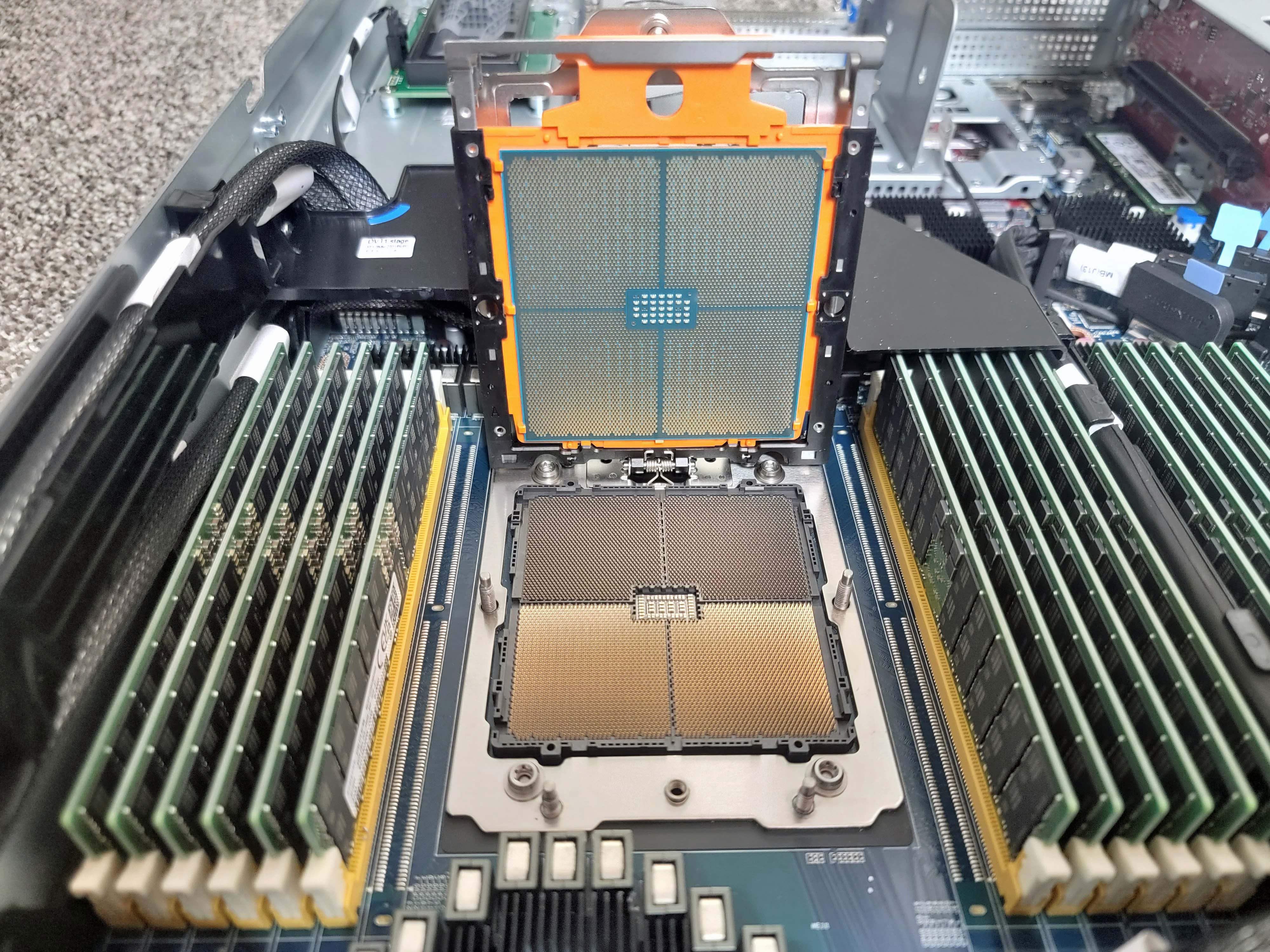

The SP5 socket is a beast of an LGA affair, housing 6,096 pins in a 72 x 75.4mm footprint and supporting processor TDPs of up to 400W.
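For a sense of how dense that contact array is, the quick calculation below divides the pin count by the quoted footprint. It averages over the whole 72 x 75.4mm outline, so it likely understates the density within the contact field itself and says nothing about the actual pin pitch; treat it as a rough figure.

```python
# Average contact density of the SP5 socket from its pin count and footprint.
PINS = 6096
WIDTH_MM, LENGTH_MM = 72.0, 75.4

area_mm2 = WIDTH_MM * LENGTH_MM     # about 5,428.8 mm^2
pins_per_mm2 = PINS / area_mm2      # averaged over the full outline, not the contact field
print(f"{area_mm2:.1f} mm^2, {pins_per_mm2:.2f} pins per mm^2")  # 5428.8 mm^2, 1.12 pins per mm^2
```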

The chip installation process will be familiar to anyone who has used AMD’s SP3 socket. However, the socket relies upon hold-down pressure from the six-fastener heatsink rather than the three screws we’re accustomed to with the SP3 socket.

Instead, one screw holds the chip down in the latching mechanism, while tightening the heatsink screws in a star pattern provides the hold-down pressure for firm mating. We found that this mounting technique is far superior to SP3 in ensuring good contact between the chip and the pins. It certainly isn’t uncommon to have memory channels disappear due to poor contact after changing chips in an SP3 socket, but we didn’t have those issues with the new SP5 mechanism after multiple chip swaps.



Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Roland Of Gilead: Okay, for those more knowledgeable than me (I'm not really into server tech), how is it that Intel is so far behind in terms of core count with these systems? Looking at some of the benches (and I might as well be blind in both eyes and using a magnifying glass to scroll through the data!), it seems to me that if Intel were able to increase core count, they would be comparable in performance to the AMD counterparts? What gives?

Intel have taken the performance crown with ADL and Raptor in the consumer market, but on the bigger scales can't get close.

rasmusdf: Well, basically AMD has mastered the art and technology of connecting lots of small chips together, while Intel still has to make chips as one big lump. Big chips are harder and more expensive to produce. Plus, when combining small chips, you can always easily add more.

Intel might be competing right now in the consumer space, but they don't earn much profit on their expensive-to-produce CPUs.

Additionally, AMD is cheating a bit and is several process nodes ahead in production: while Intel is struggling to get past 10nm, AMD is on what, 4nm? That's because of TSMC's impressive technology leadership and Intel's stubbornness.

gdmaclew: Strange that Tom's lists DDR5 support for these new data center CPUs as a "Con" but doesn't mention it in yesterday's article on Intel's new CPUs, which use the same DDR5.

But then maybe not, knowing Tom's. It's just so obvious.

SunMaster:

Roland Of Gilead said: Intel have taken the performance crown with ADL and Raptor in the consumer market, but on the bigger scales can't get close.

Rumour has it that AMD prioritizes the consumer market less and the server market more. So if it's "one core to rule them all", Zen4 is a core designed primarily for server chips. Whether Alder Lake took the "performance crown" or not is at best debatable, as is Raptor Lake vs Zen4 if power consumption is taken into consideration.

Intel does not use anything equivalent to chiplets (yet). The die size of the Raptor Lake 13900 is about 257 square mm. Each Zen4 CCD is only 70 square mm (there are two in a 7950X). That gives AMD a tremendous advantage in manufacturing and cost.

See https://www.youtube.com/watch?v=oMcsW-myRCU&t=2s for some info/estimates on yields and cost of manufacturing.

bitbucket: You have forgotten the face of your father.

Most likely multiple reasons.

1) Intel struggled for a long time trying to reach the 10nm process

This delayed entire product lines for a couple of years and ultimately led to Intel outsourcing some production to TSMC, which wasn't struggling to shrink the fabrication process

2) AMD moved to a chiplet strategy long before Intel, which I don't believe has a product for sale using chiplets yet, not sure though

AMD had already been using TSMC as AMD had sold off their manufacturing facilities years before

Large monolithic, high core-count CPUs are harder to make than smaller, lower core-count CPUs

- An example is AMD putting two 8-core chiplets in a package (plus IO die) for a product that has 16 cores

- Intel has recently countered this by going with heterogeneous cores in their CPUs; a mix of bigger/faster and smaller/slower cores

- I don't believe that the heterogeneous core strategy has been implemented in server products yet

InvalidError: The DIMM slot fragility issue could easily be solved, or at least greatly improved, by molding DIMM slots in pairs for lateral stability and sturdiness.

Roland Of Gilead:

SunMaster said: Whether Alder Lake took the "performance crown" or not is at best debatable, as is Raptor Lake vs Zen4 if power consumption is taken into consideration.

Fair point.

GustavoVanni: Is it just me, or do you guys also think that AMD could fit 24 CCDs in the same package in the not-so-distant future?

Sure, there are some small SMDs in the way, but it should be doable.

Just imagine one of those with 192 ZEN4 cores or 256+ ZEN4c cores.

Maybe with ZEN5?

-Fran-: Intel's only bastion seems to be accelerators, burning as much money as they can on their adoption, even worse than with AVX512.

I just looked at the OpenSSL numbers and just laughed... Intel is SO screwed for general-purpose machines. Their new stuff was needed in the market last year.

Regards.