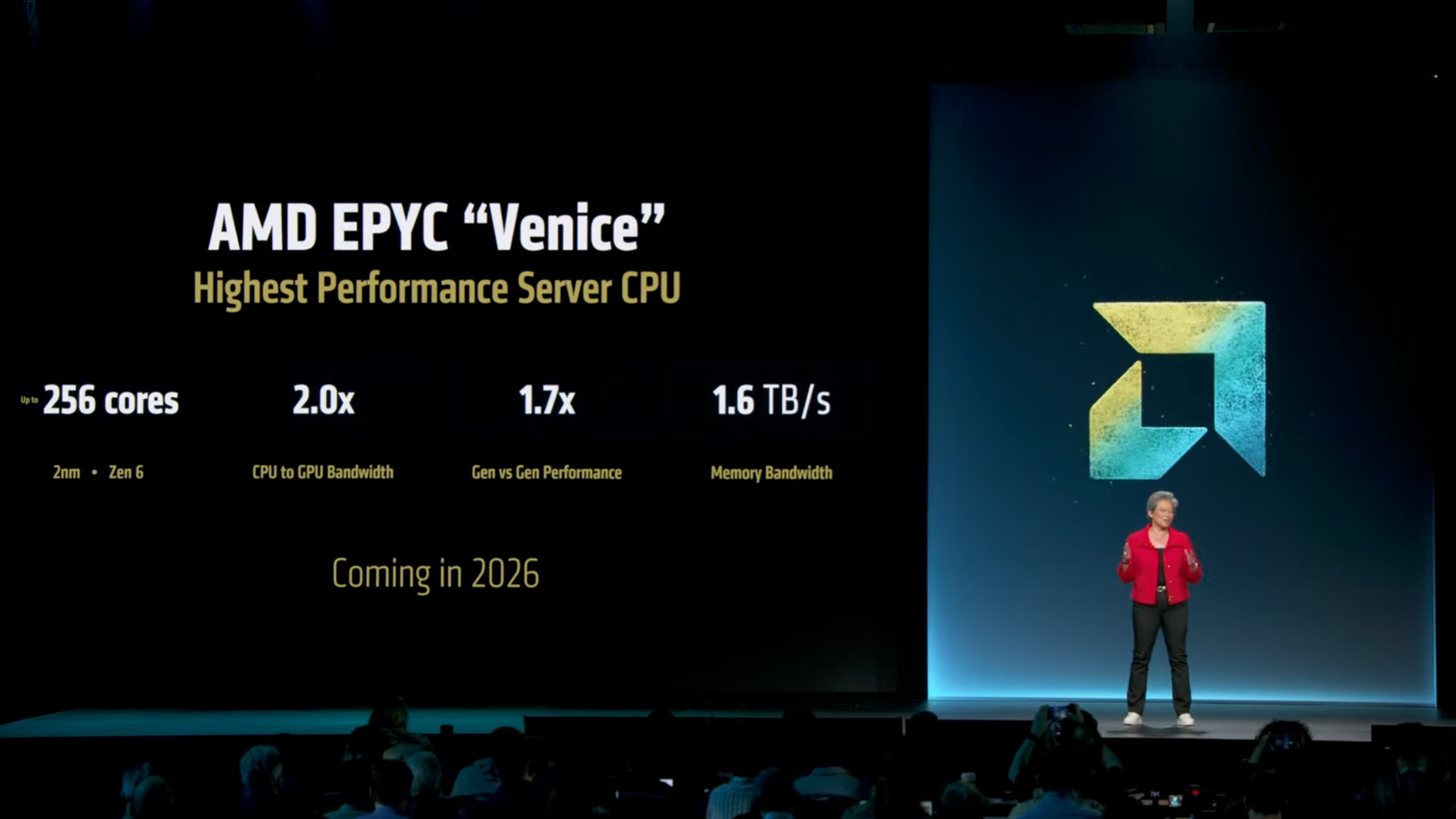

AMD EPYC Venice boasts 256 cores and bandwidth galore — next-gen server CPUs arrive in 2026

Per-socket memory bandwidth is increasing to 1.6 TB/s.

AMD on Thursday revealed some of the first technical details about its next-generation Zen 6-based EPYC 'Venice' processor at its Advancing AI event. The company disclosed that the new server CPU will feature up to 256 cores, a 33% increase over the 192 cores of the current-generation EPYC 'Turin' processor. But the new microarchitecture and the higher core count will not be the only innovations that AMD's 2026 data center CPU brings.

AMD says that with up to 256 next-generation, high-performance Zen 6 cores, the upcoming 6th Generation EPYC 'Venice' CPU will deliver up to 70% more performance than the existing 5th Generation EPYC 'Turin' 9005-series processor, though the company did not specify which workloads it used for the comparison.

Perhaps more importantly, the new EPYC 'Venice' processor will more than double per-socket memory bandwidth to 1.6 TB/s (up from 614 GB/s on the company's existing CPUs) to keep those high-performance Zen 6 cores fed with data. AMD did not disclose how it plans to achieve 1.6 TB/s, though it is reasonable to assume that the new EPYC 'Venice' CPUs will support advanced memory modules such as MR-DIMM and MCR-DIMM.
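For context, the 614 GB/s figure is consistent with simple peak-bandwidth arithmetic. The sketch below assumes (this is not stated by AMD here) that Turin's number comes from 12 channels of DDR5-6400, each with a 64-bit (8-byte) data path, and then compares it with the 1.6 TB/s Venice figure.

```python
# Theoretical peak DRAM bandwidth = channels x transfer rate x bytes per transfer.
# Assumption: Turin's 614 GB/s corresponds to 12 channels of DDR5-6400,
# each with a 64-bit (8-byte) data path.

def peak_bandwidth_gbs(channels: int, mega_transfers: int, bytes_per_transfer: int = 8) -> float:
    """Return theoretical peak bandwidth in decimal GB/s."""
    return channels * mega_transfers * bytes_per_transfer / 1000


turin_gbs = peak_bandwidth_gbs(channels=12, mega_transfers=6400)
venice_gbs = 1600.0  # AMD's stated per-socket figure for Venice

print(f"Turin peak: {turin_gbs:.1f} GB/s")                # Turin peak: 614.4 GB/s
print(f"Venice vs. Turin: {venice_gbs / turin_gbs:.2f}x")  # Venice vs. Turin: 2.60x
```

These are theoretical peaks; sustained bandwidth in real workloads is lower on both platforms.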

In addition, AMD's 6th Generation EPYC 'Venice' CPU will also double CPU-to-GPU bandwidth, which most likely means that this processor and the company's next-generation Instinct MI400X-series GPUs will use a PCIe 6.0 interface to communicate. That would mean AMD can transfer up to 128 GB of data per second in each direction over an x16 link (not counting encoding overhead). And with 128 PCIe lanes, the total amount of data that can be moved is much higher.
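The PCIe numbers follow from the per-lane signaling rate. This sketch assumes, as the article speculates, that "double CPU-to-GPU bandwidth" means moving from PCIe 5.0 (32 GT/s per lane) to PCIe 6.0 (64 GT/s per lane), and like the article it ignores FLIT, FEC/CRC, and protocol overhead.

```python
# Raw PCIe bandwidth per direction from the per-lane signaling rate.
# Ignores FLIT/FEC/CRC and protocol overhead, as the article does.
GT_PER_LANE = {5: 32, 6: 64}  # GT/s per lane, i.e. billions of raw bits per second


def pcie_gbs_per_direction(gen: int, lanes: int) -> float:
    """Raw bandwidth in GB/s for one direction of a link."""
    return GT_PER_LANE[gen] * lanes / 8  # bits -> bytes


print(pcie_gbs_per_direction(5, 16))   # PCIe 5.0 x16: 64.0
print(pcie_gbs_per_direction(6, 16))   # PCIe 6.0 x16: 128.0
print(pcie_gbs_per_direction(6, 128))  # all 128 lanes at PCIe 6.0: 1024.0
```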

"Venice extends our leadership across every dimension that matters in the data center," said Lisa Su, chief executive officer of AMD. "More performance, better efficiency, and outstanding total cost of ownership. It is built on TSMC 2nm process technology and features up to 256 high performance Zen 6 cores. It delivers 70% more compute performance than our current generation EPYC 'Turin' CPU, and to really keep feeding [the Instinct MI400X accelerators] with data at full speed, even at rack scale, we have doubled both the GPU and the memory bandwidth and optimized Venice to run at higher speeds. […] We just got 'Venice' back in the labs and it is looking fantastic."

AMD's 6th Generation EPYC processors are expected to adopt the all-new SP7 form factor, which is projected to let the company place more core complex dies (CCDs) on the package, increase the number of memory channels, and boost peak power delivery well beyond the 700W supported by the SP5 package.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user

Let's say they go to 16 memory channels and MR/MCR DIMMs. 1.6 TB/s over 16 channels gives you 100 GB/s per channel, which means it'd have to use something like DDR5-12500. The roadmap for MRDIMM supposedly goes out to 12800, so I guess it's possible.
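The commenter's estimate can be checked directly. This sketch assumes 16 channels, one DIMM per channel, and an 8-byte data path per channel; none of these are confirmed by AMD.

```python
# Back-of-the-envelope check of the comment's DDR5 data-rate estimate.
# Assumptions: 16 channels, one DIMM per channel, 8 bytes per transfer.
CHANNELS = 16
TARGET_GBS = 1600          # AMD's per-socket figure
BYTES_PER_TRANSFER = 8     # 64-bit data path per channel

per_channel_gbs = TARGET_GBS / CHANNELS                           # GB/s each channel must supply
required_mts = per_channel_gbs * 1000 / BYTES_PER_TRANSFER        # MT/s needed to hit the target
mrdimm_12800_gbs = CHANNELS * 12800 * BYTES_PER_TRANSFER / 1000   # 16 channels at 12800 MT/s

print(f"{per_channel_gbs:.0f} GB/s per channel -> DDR5-{required_mts:.0f}")  # 100 GB/s per channel -> DDR5-12500
print(f"16 x 12800 MT/s = {mrdimm_12800_gbs:.1f} GB/s")                     # 16 x 12800 MT/s = 1638.4 GB/s
```

At 12800 MT/s, 16 channels land at roughly 1.64 TB/s, which is close to the quoted 1.6 TB/s.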

256 cores / 512 threads per CPU... wow. 1024 threads in a dual-socket system. That's a lot, any way you look at it! So, if 256 cores are 1.7x as fast as 192 cores, then each Zen 6C core should be 27.5% faster than a Zen 5C core. However, maybe they're talking about the non-C version being 1.7x as fast. In that case, if they're comparing 192 cores vs. 128 cores, then Zen 6 would be only 13.3% faster than Zen 5. That's rather less impressive, but more in line with the sort of speedup we got with Zen 5.
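Both percentages come from dividing the socket-level 1.7x by the core-count ratio. The sketch below makes the same simplifying assumption as the comment: performance scales linearly with core count, with clocks and memory limits ignored.

```python
# Per-core speedup implied by a 1.7x socket-level claim, assuming performance
# scales linearly with core count (clock and memory effects are ignored).

def per_core_gain_pct(socket_speedup: float, new_cores: int, old_cores: int) -> float:
    per_core = socket_speedup * old_cores / new_cores
    return (per_core - 1) * 100


dense = per_core_gain_pct(1.7, new_cores=256, old_cores=192)    # Zen 6C vs. Zen 5C reading
classic = per_core_gain_pct(1.7, new_cores=192, old_cores=128)  # Zen 6 vs. Zen 5 reading
print(f"256 vs. 192 cores: {dense:.1f}% per core")    # 27.5% per core
print(f"192 vs. 128 cores: {classic:.1f}% per core")  # 13.3% per core
```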

Lastly, let's look at what that memory bandwidth means for your I/O capacity. PCIe 6.0 supports roughly 8 GB/s per lane per direction, so at the nominal maximum speed, you could imagine its 128 lanes of PCIe controllers wanting 1 TB/s per direction, or 2 TB/s in aggregate. Using nominal figures, the memory could supply about 80% of that, though I'm sure various bottlenecks would knock it down quite a lot. Still, perhaps you could get 1 TB/s of aggregate PCIe traffic. Remember, DRAM bandwidth is shared between reads and writes (the bus carries data in only one direction at a time), whereas PCIe bandwidth is quoted per direction.
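The ratio in that argument can be reproduced as follows. The sketch assumes raw PCIe 6.0 rates (8 GB/s per lane per direction) and the full 1.6 TB/s of DRAM bandwidth being available for I/O, both optimistic simplifications.

```python
# Compare nominal PCIe 6.0 I/O demand with the socket's DRAM bandwidth.
LANES = 128
GBS_PER_LANE_PER_DIR = 8   # PCIe 6.0, raw, each direction
MEM_GBS = 1600             # DRAM bandwidth, shared between reads and writes

per_direction = LANES * GBS_PER_LANE_PER_DIR  # GB/s in each direction
aggregate = 2 * per_direction                 # both directions combined
coverage = MEM_GBS / aggregate                # fraction of peak PCIe traffic DRAM could source/sink

print(per_direction, aggregate, f"{coverage:.0%}")  # 1024 2048 78%
```

With 1024 GB/s per direction the ratio is about 78%; rounding the per-direction figure to 1 TB/s gives the 80% quoted in the comment.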

thestryker

This sounds like they're talking about 256 Zen 6 cores rather than Zen 6c, which would be surprising. Moving to 16 memory channels ought to mean the death of 2DPC (two DIMMs per channel) for these processors, but perhaps single-socket systems could still use it. To my knowledge, JEDEC still hasn't released its MRDIMM standard, but the press release from last year stated 12800, so it seems a fair guess that this is exactly what AMD is referring to.

bit_user

thestryker said: This sounds like they're talking 256 Zen 6 cores as opposed to a Zen 6c which would be surprising.

How big are Zen 6 CCDs rumored to be? Weren't they going to 12 big cores per chiplet? In that case, it basically can't be 256 big cores.

thestryker said: Moving to 16 channels for the memory controller ought to mean death of 2DPC for these processors,

Don't MRDIMMs basically double what you get on a standard RDIMM? If so, then that plus 33% more memory channels would seem to reduce the need for 2DPC quite a lot. Plus, CXL 3.0 should offer a more viable path toward memory expansion.

thestryker

bit_user said: Don't MRDIMM's basically double-up what you get on a standard RDIMM? If so, then between that and 33% more memory channels would seem to reduce the need for 2DPC quite a lot.

I don't believe MRDIMMs inherently have higher capacity than RDIMMs, but they certainly can support it. JEDEC is working on a "Tall" specification, which I'm pretty sure would eliminate the need for 2DPC entirely. While nothing is set in stone, it didn't sound like that would launch alongside the MRDIMM spec, but perhaps AMD/Intel are pushing for it, given how much of a nightmare 2DPC already is with 12 channels.

Stomx

thestryker said: This sounds like they're talking 256 Zen 6 cores as opposed to a Zen 6c which would be surprising. Moving to 16 channels for the memory controller ought to mean death of 2DPC for these processors, but perhaps single socket could use it still. JEDEC still hasn't released their MRDIMM standard to my knowledge, but the press release from last year stated 12800 so it seems a fair guess this is exactly what AMD is referring to.

Most probably the 256-core parts will use Zen 6c, unless they increase TDP to the 650-700W needed to jump from 128 to 256 cores. Here is what TSMC claimed in April:

"The company expects its manufacturing technology to offer either a 24% to 35% reduction in power consumption or a 15% increase in performance at constant voltage, along with a 1.15X boost in transistor density compared to the previous N3 (3nm-class) generation. These gains are primarily driven by the new type of transistors and the N2 NanoFlex design-technology"
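A rough version of the power argument can be sketched from the quoted 24% to 35% reduction. All inputs here are assumptions for illustration: a 500 W baseline for a 128-core part, per-core power falling by exactly the quoted range, and nothing else (I/O die, clocks, memory) changing. The quoted gains are also stated relative to N3, so applying them to an existing part is a loose approximation.

```python
# Crude estimate of socket power when doubling the core count on a denser node.
# Assumptions (illustrative only): 500 W baseline for a 128-core part,
# per-core power reduced by the quoted 24-35%, everything else unchanged.
BASELINE_W = 500
CORE_RATIO = 256 / 128


def estimate_w(reduction: float) -> float:
    """Estimated socket power after doubling cores with a given per-core power cut."""
    return BASELINE_W * CORE_RATIO * (1 - reduction)


for reduction in (0.24, 0.35):
    print(f"{reduction:.0%} less power per core -> ~{estimate_w(reduction):.0f} W")
# 24% less power per core -> ~760 W
# 35% less power per core -> ~650 W
```

Under these assumptions the estimate lands at roughly 650-760 W, in the same range as the comment's 650-700W.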

But maybe Zen 5c/Zen 6c cores don't lose much compared to Zen 5/Zen 6 if the tasks you mostly run are bound by memory bandwidth rather than by the processor itself. In my case, these are particle-in-cell (PIC) codes, but unfortunately I haven't seen any benchmarks of PIC codes; the closest were molecular dynamics ones.
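The "memory-bound, so the smaller cores lose little" reasoning is essentially a roofline argument. The sketch below uses purely hypothetical numbers (peak GFLOP/s, bandwidth, and arithmetic intensities are made up, not measurements of any EPYC part or of PIC codes) to show why two core types with different compute peaks can perform identically when bandwidth is the limit.

```python
# Roofline-style bound: a kernel runs no faster than the lower of the compute
# peak and (memory bandwidth x arithmetic intensity).
# All numbers are hypothetical and chosen only to illustrate the shape.

def attainable_gflops(peak_gflops: float, mem_gbs: float, flops_per_byte: float) -> float:
    return min(peak_gflops, mem_gbs * flops_per_byte)


MEM_GBS = 600.0  # same memory system for both core types (hypothetical)
for name, peak in (("full-size cores", 10_000.0), ("dense cores", 8_000.0)):
    low = attainable_gflops(peak, MEM_GBS, flops_per_byte=0.5)    # bandwidth-bound kernel
    high = attainable_gflops(peak, MEM_GBS, flops_per_byte=50.0)  # compute-bound kernel
    print(f"{name}: {low:.0f} GFLOP/s (low intensity), {high:.0f} GFLOP/s (high intensity)")
```

With a low-intensity kernel both configurations hit the same 300 GFLOP/s bandwidth ceiling; only the compute-bound case exposes the gap between them.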

Does anyone have a 192-core Turin Zen 5c processor to try in a Gigabyte MZ73 (which works with Turin) and compare to Genoa Zen 4? Or even better, can anybody add a PIC code to the benchmark test suite? There are very simple ones on GitHub that are easy to compile and run (EPOCH, for example; see its one-page "Getting Started"). Chip manufacturers would be happy to see that, as PIC codes scale pretty well under both strong and weak scaling. Test suites full of old, poorly scaling tests like the ones on this site, PassMark, or even Phoronix, I suspect, make manufacturers more furious than happy :)

jeremyj_83

I hope that AMD decides to offer more than 128 PCIe lanes (up to 160 in 2-CPU configurations). IMO, ideally they should have 192 lanes. That would allow for 32 E3.S NVMe drives with a direct link to the CPU plus 4 NICs using full x16 links. At worst, they should have 160 lanes to allow for 24 U.3 drives with a direct link to the CPU plus 4 NICs.
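The 192- and 160-lane figures follow from a simple lane budget. This sketch assumes each NVMe drive (E3.S or U.3) uses an x4 link and each NIC an x16 link, which matches the comment's description but is an assumption about the specific hardware.

```python
# Lane budget for the configurations in the comment.
# Assumption: each NVMe drive (E3.S or U.3) uses x4 and each NIC uses x16.
LANES_PER_DRIVE = 4
LANES_PER_NIC = 16


def lanes_needed(drives: int, nics: int) -> int:
    return drives * LANES_PER_DRIVE + nics * LANES_PER_NIC


print(lanes_needed(drives=32, nics=4))  # 32 E3.S drives + 4 NICs -> 192
print(lanes_needed(drives=24, nics=4))  # 24 U.3 drives + 4 NICs -> 160
```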

bit_user

jeremyj_83 said: I hope that AMD decides to give more than 128 PCIe (up to 160 in 2 CPU situations) lanes. IMO ideally they should have 192 lanes.

That's not what the article says. CXL 3.0 supports switched fabrics, though. I think that's a better bet, since the CPU packages are already big enough and they don't even have enough memory bandwidth to feed all 128 PCIe 6.0 lanes.

jeremyj_83 said: That would allow for 32 E3.S NVMe drives with direct link to the CPU and 4x NIC using the full x16 lanes. At worst they should have 160 lanes to allow for 24 U.3 drives with direct link to the CPU and 4x NICs.

Here's where a switch would probably make a lot of sense. If you multiplexed 64 device lanes over 16 CPU lanes, that would probably work all right. It's unlikely that more than 4 out of 16 drives would be running at the maximum PCIe 6.0 x4 rate at any given point in time. Some may prefer just 2:1 multiplexing.
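The switch arithmetic can be made explicit. This sketch assumes every downstream device is an x4 NVMe drive; the function name and its return layout are illustrative, not part of any switch vendor's tooling.

```python
# Oversubscription of a PCIe switch that fans device lanes out from fewer CPU lanes.
# Assumption: every downstream device is an x4 NVMe drive.
LANES_PER_DRIVE = 4


def switch_summary(device_lanes: int, upstream_lanes: int) -> tuple[float, int, int]:
    ratio = device_lanes / upstream_lanes          # oversubscription, e.g. 4.0 means 4:1
    drives = device_lanes // LANES_PER_DRIVE       # drives the switch can host
    full_rate = upstream_lanes // LANES_PER_DRIVE  # drives that can run at full x4 simultaneously
    return ratio, drives, full_rate


print(switch_summary(64, 16))  # the comment's 64-over-16 example: (4.0, 16, 4)
print(switch_summary(32, 16))  # 2:1 alternative: (2.0, 8, 4)
```

In both cases only 4 drives can run at full x4 speed at once; the 2:1 option simply hosts fewer drives behind the same uplink.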

abufrejoval

thestryker said: This sounds like they're talking 256 Zen 6 cores as opposed to a Zen 6c which would be surprising.

All I'd like to say at this point is that "c" vs. "non-c" for AMD isn't as clear-cut as P vs. E cores with Intel.

From a functional or RTL perspective, the two are identical (not counting cache size or V-Cache layers); the difference is mostly a matter of how densely you lay out the physical cells to trade heat against clocks. And every new process node means a physical redesign and a new set of options, so you can't just extrapolate current c vs. non-c performance gaps to newer nodes.

And while AMD tries to keep CCD variants to a minimum (only one for a long time, currently two), that's mostly an economic decision driven by competitive pressure, not a technological constraint.

So as the competitive landscape shifts and their market share and scale increase, so do their options, perhaps including using different process nodes for different variants: they only need to ensure I/O die (IOD) interoperability.

bit_user

abufrejoval said: All I'd like to say at this point is that "c" vs "non-c" for AMD isn't as clear cut as P vs E cores with Intel.

From a functional or RTL perspective the two are identical (not counting cache size or V-cache layers) and mostly a matter of just how dense you make the physical cells to trade heat vs clocks. And every new process node means a physical redesign and a new set of options, you can't just interpolate current c vs non-c performance gaps to newer nodes.

Has anyone confirmed whether Zen 5C server cores have half- or full-width AVX-512? Their laptop Zen 5 cores use half-width.

Also, I think we don't know whether Zen 6C will remain as similar to Zen 6 as C-cores have traditionally been. AMD could always decide to do further perf/W or perf/mm^2 optimizations on them.