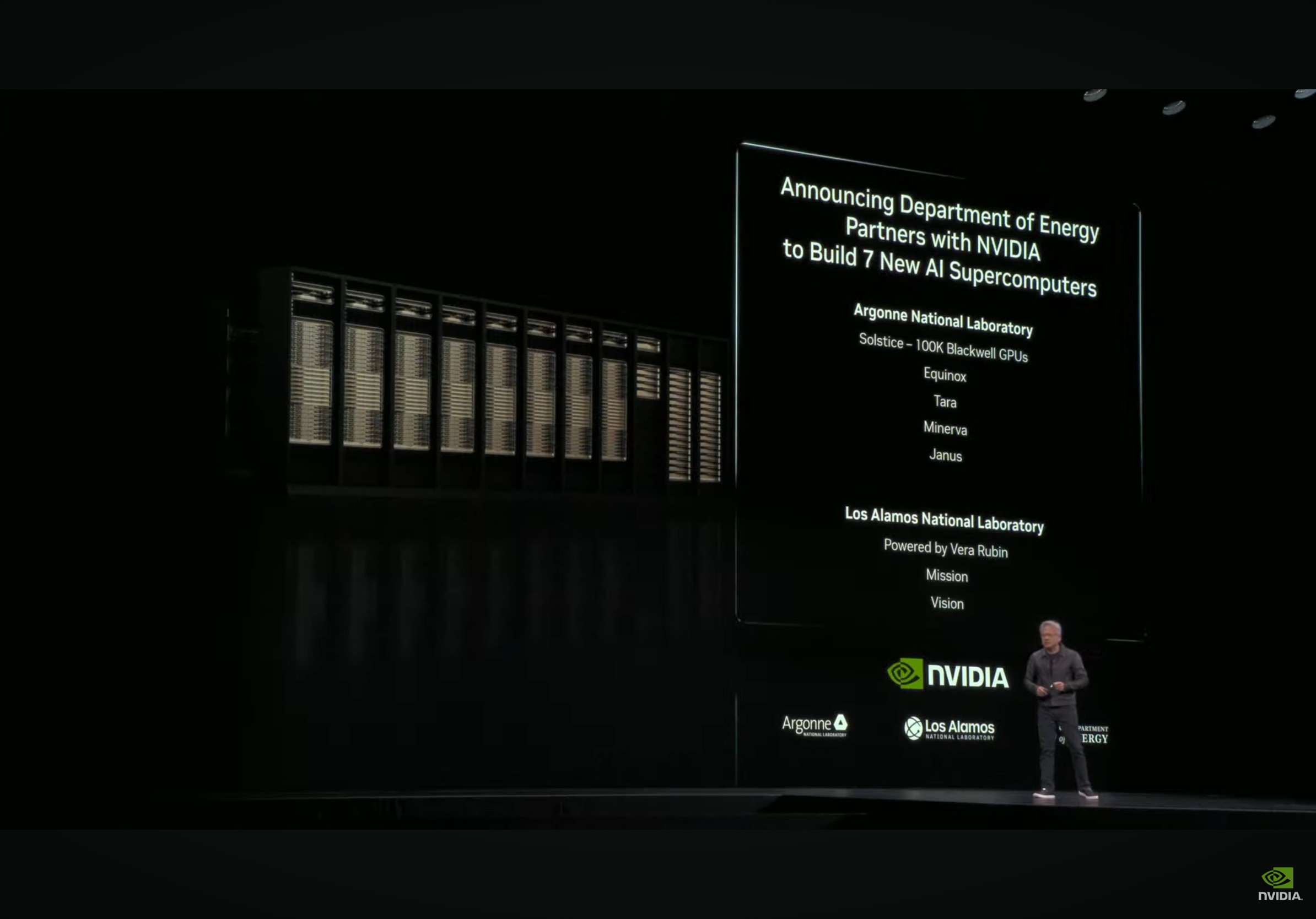

Nvidia and partners to build seven AI supercomputers for the U.S. gov't with over 100,000 Blackwell GPUs —combined performance of 2,200 ExaFLOPS of compute

And even more.

Coming on the heels of the Vera Rubin-based supercomputers for Los Alamos National Laboratory, Nvidia announced on Tuesday at GTC 2025 that, together with partners, it would build seven ExaFLOPS-class AI supercomputers for the U.S. government, five of them for Argonne National Laboratory. Two of those five systems will be built by Oracle and will use over 100,000 Blackwell GPUs, delivering a combined performance of up to 2,200 FP4 ExaFLOPS.

The first of five AI supercomputers for Argonne National Laboratory is Equinox, which will pack 10,000 Blackwell GPUs and serve as the first phase of the project, coming online in 2026. The second phase of the project — called Solstice — will be a 200 MW system packing over 100,000 Blackwell GPUs. The two systems will be connected to deliver an aggregate performance of 2,200 FP4 ExaFLOPS for AI computations.
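As a rough sanity check on these figures, the short sketch below divides the quoted aggregate throughput and power budget by the quoted GPU counts. It assumes exactly 100,000 GPUs for Solstice (the article says "over 100,000", so the per-GPU results are upper bounds) and treats the 200 MW figure as covering the whole facility, not just the GPUs. The function names are illustrative only.

```python
EXA = 10**18
PETA = 10**15

def per_gpu_petaflops(aggregate_exaflops, gpu_count):
    """Average FP4 throughput per GPU, in PetaFLOPS."""
    return aggregate_exaflops * EXA / gpu_count / PETA

def per_gpu_kilowatts(facility_megawatts, gpu_count):
    """Average facility power per GPU, in kilowatts (includes CPUs, networking, cooling)."""
    return facility_megawatts * 1000 / gpu_count

# Figures quoted in the article; the Solstice count is a lower bound (assumption).
equinox_gpus = 10_000
solstice_gpus = 100_000
total_gpus = equinox_gpus + solstice_gpus

fp4_pflops_each = per_gpu_petaflops(2200, total_gpus)
kw_each = per_gpu_kilowatts(200, solstice_gpus)

print(f"~{fp4_pflops_each:.0f} FP4 PetaFLOPS per GPU")
print(f"~{kw_each:.0f} kW of facility power per Solstice GPU")
```

Under these assumptions, the aggregate works out to roughly 20 FP4 PetaFLOPS and about 2 kW of facility power per GPU. That suggests the 2,200 ExaFLOPS headline number is a peak low-precision figure rather than a measure of FP64 scientific throughput.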

"We are proud to announce that Nvidia, the U.S. Department of Energy and Oracle are partnering to build two AI factories at Argonne National Laboratories featuring Blackwell," said Dion Harris, the head of data center product marketing at Nvidia. "This collaboration aims to significantly boost America's scientific research and development productivity and establish U.S. leadership in AI. Phase one features the Equinox system, which is 10,000 Blackwell GPUs; phase two, providing 200 MW of AI infrastructure, totaling 2,200 ExaFLOPS of AI performance."

The systems will be used to build three-trillion-parameter AI simulation models as well as for classic scientific computing.

One interesting detail is that Equinox and Solstice will be built by Oracle, a company that is not widely known for designing and building bespoke supercomputers for customers, as traditional HPC vendors like Atos, Dell, or HPE do. Oracle's primary business is cloud infrastructure for AI/HPC workloads rather than custom HPC system integration from the ground up. Oracle does offer its Cloud@Customer option, but those machines run Oracle's software and are managed by the company. Whether Equinox and Solstice will be managed by Oracle remains to be seen.

In addition, the Argonne National Laboratory will expand its Argonne Leadership Computing Facility — which will be available to researchers and scientists through competitive national programs — with Nvidia-based supercomputers, including Tara, Minerva, and Janus. For now, it is unclear which platform these systems will use or whether they will be built by HPE or Oracle, but we can be sure they will deliver formidable performance.

"Argonne's collaboration with Nvidia and Oracle represents a pivotal step in advancing the nation's AI and computing infrastructure," said Paul K. Kearns, director of Argonne National Laboratory. "Through this partnership, we are building platforms that redefine performance, scalability and scientific potential. Together, we are shaping the foundation for the next generation of computing that will power discovery for decades to come."


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


usertests

> The systems will be used to build three-trillion-parameter AI simulation models as well as for classic scientific computing.

Before anyone complains, these are dual-use systems with FP64 capability. And machine learning has been effectively used for scientific discoveries for years. For example, AlphaFold, which got its creators a Nobel Prize in Chemistry.

JRStern

Yeah but you know they're just doing this because everybody's doing this and it sounds good. You ask me we are already at about 300% overcapacity moving fast for 2500% overcapacity.

Need a lot more R&D which will do two things, first it will fix some of the major shortcomings of current LLMs, and second it will find a way to make current technology work about 1000x cheaper.

Then we'll be able to build 100x current capacity for less than 10% of what we've spent so far.

jp7189

JRStern said:

> Yeah but you know they're just doing this because everybody's doing this and it sounds good. You ask me we are already at about 300% overcapacity moving fast for 2500% overcapacity.
>
> Need a lot more R&D which will do two things, first it will fix some of the major shortcomings of current LLMs, and second it will find a way to make current technology work about 1000x cheaper.
>
> Then we'll be able to build 100x current capacity for less than 10% of what we've spent so far.

Let's hope these aren't used for LLMs. That's been done and there is plenty of commercial competition to push that concept forward. Massive simulations should be the focus for these... nuke power (hopefully), weapons (unfortunately), signals (generation, propagation, interference), medicine.. just to name a few.

All of these AI models benefit from more parameters and more precision, are important to our future, and don't have immediate ROI (meaning it's in the realm of gov research rather than commercial interest)

JRStern

jp7189 said:

> Let's hope these aren't used for LLMs.

100,000 B200's is optimized for LLMs and only LLMs.

I suppose it's also good for keeping your coffee warm but perhaps not optimal for that purpose.

jp7189

JRStern said:

> 100,000 B200's is optimized for LLMs and only LLMs.
>
> I suppose it's also good for keeping your coffee warm but perhaps not optimal for that purpose.

Nvidia is in 8 of the top 10 supercomputers and not one of those has a stated purpose of training LLMs. They're also at #7 on the green list for energy efficiency.

Not even Nvidia is pushing LLMs that hard. Check out the recent GTC conference. LLMs were mentioned of course, but the majority of use cases are non-LLM.