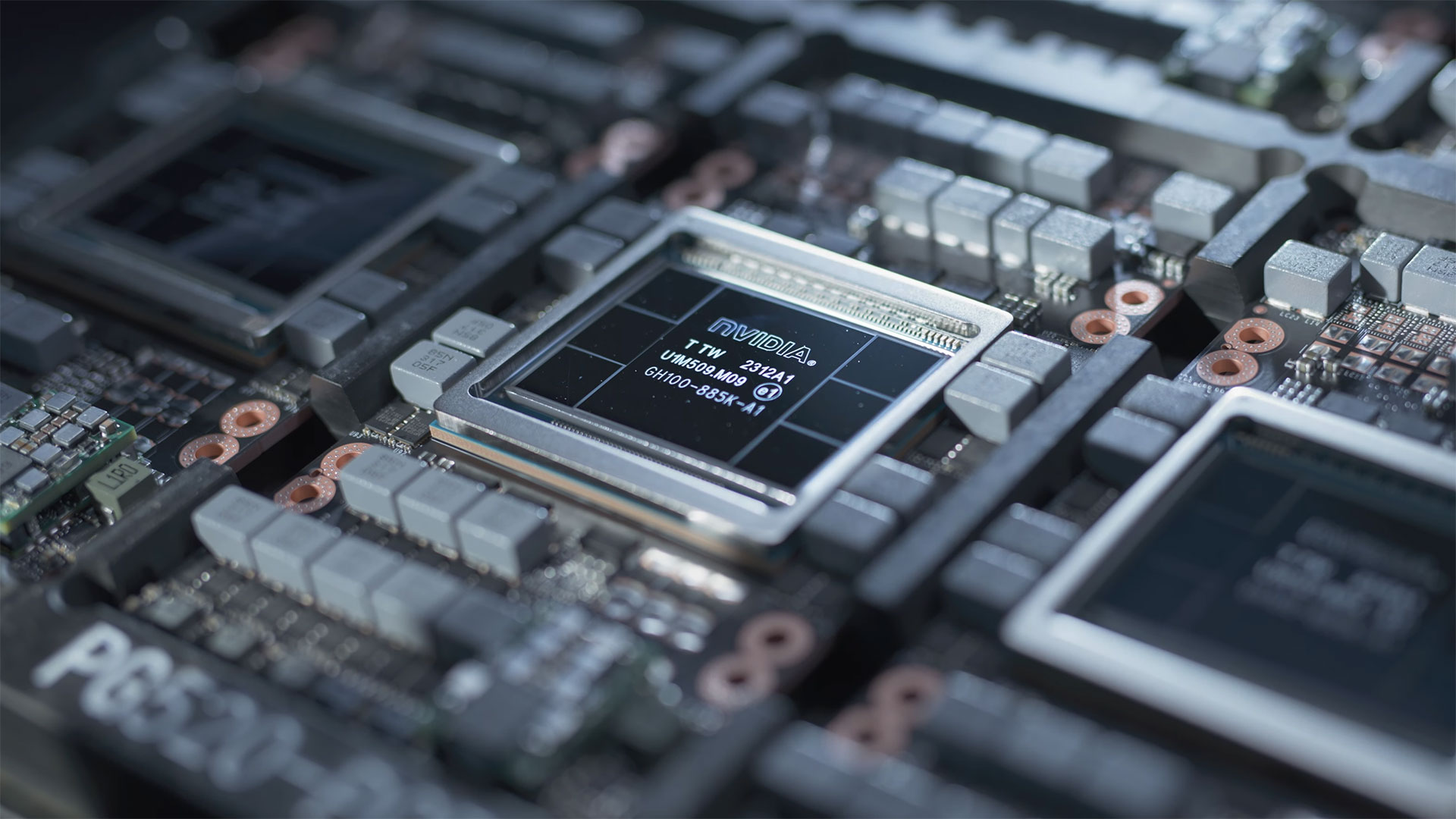

Nvidia's next-gen AI GPUs could draw an astounding 1000 Watts each, a 40 percent increase — Dell spills the beans on B100 and B200 in its earnings call

A lot of power for an AI and HPC processor.

Dell, one of the world's largest server makers, has spilled the beans on Nvidia's upcoming AI GPUs, codenamed Blackwell. Apparently, these processors will consume up to 1000 Watts, an increase of more than 40% over the prior generation's 700W, requiring Dell to use its engineering ingenuity to cool these GPUs down. Dell's comments might also hint at some of the architectural peculiarities of Nvidia's upcoming compute GPUs.

"Obviously, any line of sight to changes that we are excited about what's happening with the H200 and its performance improvement," said Yvonne McGill, Dell's chief financial officer. "We are excited about what happens at the B100 and the B200, and we think that's where there's actually another opportunity to distinguish engineering confidence. Our characterization in the thermal side, you really don't need direct liquid cooling to get to the energy density of 1,000 watts per GPU."

| Specification | Nvidia H100 (current) | Nvidia B100 (Dell est.) | AMD MI300X | Nvidia H200 (current) |
| --- | --- | --- | --- | --- |
| FP16/bf16 TFLOPS | 989 | ? | 1307 | 989 |
| Power Consumption | 700W | 1000W | 750W | 700W |
| Die Size (sq mm) | 814 | ? | 1017 | 814 |

Being unaware of Nvidia's plans regarding its Blackwell architecture, we can only refer to the basic rule of thumb with heat dissipation, which says that thermal dissipation typically tops out around 1W per square millimeter of the chip die area.

This is where it becomes interesting from a chip manufacturing point of view: Nvidia's H100, built on a custom performance-enhanced TSMC 4nm-class process technology, already dissipates around 700W, albeit with the power of HBM memory included. Its die measures 814 square millimeters, so it falls under 1W per square millimeter.
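As a quick check of that arithmetic, the sketch below divides the power figures by the die sizes listed in the table. The dictionary layout and function name are illustrative only, and because the listed power includes HBM and other board components, the results should be read as rough upper bounds for the die itself.

```python
# Power density (W per mm^2) computed from the figures in the article's table.
# The listed power includes HBM memory, so these overstate the die's own share.
chips = {
    "Nvidia H100": {"power_w": 700, "die_mm2": 814},
    "AMD MI300X": {"power_w": 750, "die_mm2": 1017},
}

RULE_OF_THUMB_W_PER_MM2 = 1.0  # the article's rule of thumb


def power_density(power_w, die_mm2):
    """Return watts dissipated per square millimeter of die area."""
    return power_w / die_mm2


densities = {name: power_density(**spec) for name, spec in chips.items()}

for name, density in densities.items():
    status = "under" if density < RULE_OF_THUMB_W_PER_MM2 else "at or over"
    print(f"{name}: {density:.2f} W/mm^2 ({status} the rule of thumb)")
```

With the table's numbers, the H100 works out to about 0.86 W per square millimeter and the MI300X to about 0.74.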

Nvidia's next-generation GPU will probably be built on another performance-enhanced process technology, and we can only guess that it will be a 3nm-class node. Given the amount of power the chip consumes and the thermal dissipation it requires, it's reasonable to think that Nvidia's B100 might be a dual-die design, the company's first, giving it a larger surface area to deal with the heat generated. We've already seen AMD and Intel adopt GPU architectures with multiple dies, so this would track with other industry trends.
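The dual-die reasoning can be sketched with the same rule of thumb. The B100's die size is unknown, so the example below assumes, purely for illustration, dies the size of the H100's; the 1000W figure is Dell's, and nothing here reflects a confirmed Nvidia design.

```python
# Rough area estimate for a 1000W GPU under the 1 W/mm^2 rule of thumb.
# ASSUMPTION: H100-sized dies are used only as a stand-in; the real B100
# die size and die count have not been disclosed.
B100_POWER_W = 1000.0          # per Dell's comments
H100_DIE_MM2 = 814.0           # from the article's table
RULE_OF_THUMB_W_PER_MM2 = 1.0  # the article's rule of thumb

# Minimum total silicon area needed to stay at or below the rule of thumb.
min_area_mm2 = B100_POWER_W / RULE_OF_THUMB_W_PER_MM2

# Power density if all 1000W went through one H100-sized die vs. two.
single_die_density = B100_POWER_W / H100_DIE_MM2
dual_die_density = B100_POWER_W / (2 * H100_DIE_MM2)

print(f"Minimum area at 1 W/mm^2: {min_area_mm2:.0f} mm^2")
print(f"One H100-sized die:  {single_die_density:.2f} W/mm^2")
print(f"Two H100-sized dies: {dual_die_density:.2f} W/mm^2")
```

Under these assumptions a single H100-sized die would run at roughly 1.23 W per square millimeter, above the rule of thumb, while two such dies would sit near 0.61.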

When it comes to high-performance AI and HPC applications, we need to consider the performance measured in FLOPS, the power it takes to achieve those FLOPS, and the cooling needed to dissipate the resulting heat. What matters for software developers is how to use those FLOPS efficiently. What matters for hardware developers is how to cool the processors producing those FLOPS. This is where Dell says its technologies are poised to outperform those of its rivals, which is why Dell's CFO spoke out about Nvidia's next-generation Blackwell GPUs.
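To put FLOPS and power on the same scale, the sketch below computes TFLOPS per watt from the table's figures. The B100's throughput is not public, so the last step only works out the FP16/bf16 throughput a 1000W part would need to match the H100's efficiency; it is a break-even illustration, not a prediction.

```python
# (FP16/bf16 TFLOPS, power in watts) as listed in the article's table.
accelerators = {
    "Nvidia H100": (989, 700),
    "Nvidia H200": (989, 700),
    "AMD MI300X": (1307, 750),
}


def tflops_per_watt(tflops, power_w):
    """Return throughput per watt of listed power."""
    return tflops / power_w


efficiency = {name: tflops_per_watt(*spec) for name, spec in accelerators.items()}

for name, value in efficiency.items():
    print(f"{name}: {value:.2f} TFLOPS/W")

# Throughput a 1000W GPU would need just to match the H100's efficiency.
B100_POWER_W = 1000  # per Dell's comments
breakeven_tflops = efficiency["Nvidia H100"] * B100_POWER_W
print(f"Break-even for a 1000W GPU: {breakeven_tflops:.0f} TFLOPS")
```

On these figures the H100 and H200 deliver about 1.41 TFLOPS per watt and the MI300X about 1.74, so a 1000W GPU would need roughly 1413 FP16/bf16 TFLOPS simply to hold the H100's efficiency.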

"That happens next year with the B200," said McGill, referring to Nvidia's next AI and HPC GPU. "The opportunity for us really to showcase our engineering and how fast we can move and the work that we've done as an industry leader to bring our expertise to make liquid cooling perform at scale, whether that's things in fluid chemistry and performance, our interconnect work, the telemetry we are doing, the power management work we're doing, it really allows us to be prepared to bring that to the marketplace at scale to take advantage of this incredible computational capacity or intensity or capability that will exist in the marketplace."


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


FunSurfer: Well, they can't cool the new AI processors in Antarctica after Iran claimed it as its property...

Fruban: Yikes. I hope they power all the new AI data centers with solar, wind, and battery... Unsustainable power draw.

Alvar "Miles" Udell: Simple, oil immersion cooling with a double heat exchanger, with the waste heat being used to perform work, much as some cryptominers and server operators already do.

https://www.tomshardware.com/news/japanese-data-center-starts-eel-farming-side-hustle

PEnns:

HopefulToad said: How do I unsubscribe from "AI" news?

The first person to create a script to hide / block any article or nugget of useless info about AI should win the Nobel Prize or something similar.

Enough already!

usertests:

HopefulToad said: How do I unsubscribe from "AI" news?

Turn off your computer.

PEnns said: The first person to create a script to hide / block any article or nugget of useless info about AI should win the Nobel Prize or something similar. Enough already!

Use AI to do it.

bit_user:

Alvar Miles Udell said: Simple, oil immersion cooling with a double heat exchanger, with the waste heat being used to perform work, much as some cryptominers and server operators already do.

Immersion cooling is expensive, but probably something we're going to see more of. From Dell's perspective, requiring immersion cooling in their mainstream products would be a fail, as it would greatly restrict their market.

Also, there are limited opportunities to harness waste heat, especially in the places where datacenters exist or where it makes sense to build them. It's great if you can do it, but it certainly won't be the norm.

JTWrenn: It's only astounding if you are thinking about this being in a desktop. For a high end server system, as long as the perf per watt and size per watt are better than the last gen, it's good. The question is are they doing that or are they just cranking up the clocks to push off AMD for a while as they prep their next big architecture jump. I hope that is not the case but sometimes performance is king no matter what... really just depends on the customer.

Let's hope it is a step in the right efficiency direction and not a ploy for fastest card.