Nvidia Readies New AI and HPC GPUs for China Market: Report

Hopper and Ada Lovelace-based solutions that comply with U.S. export requirements.


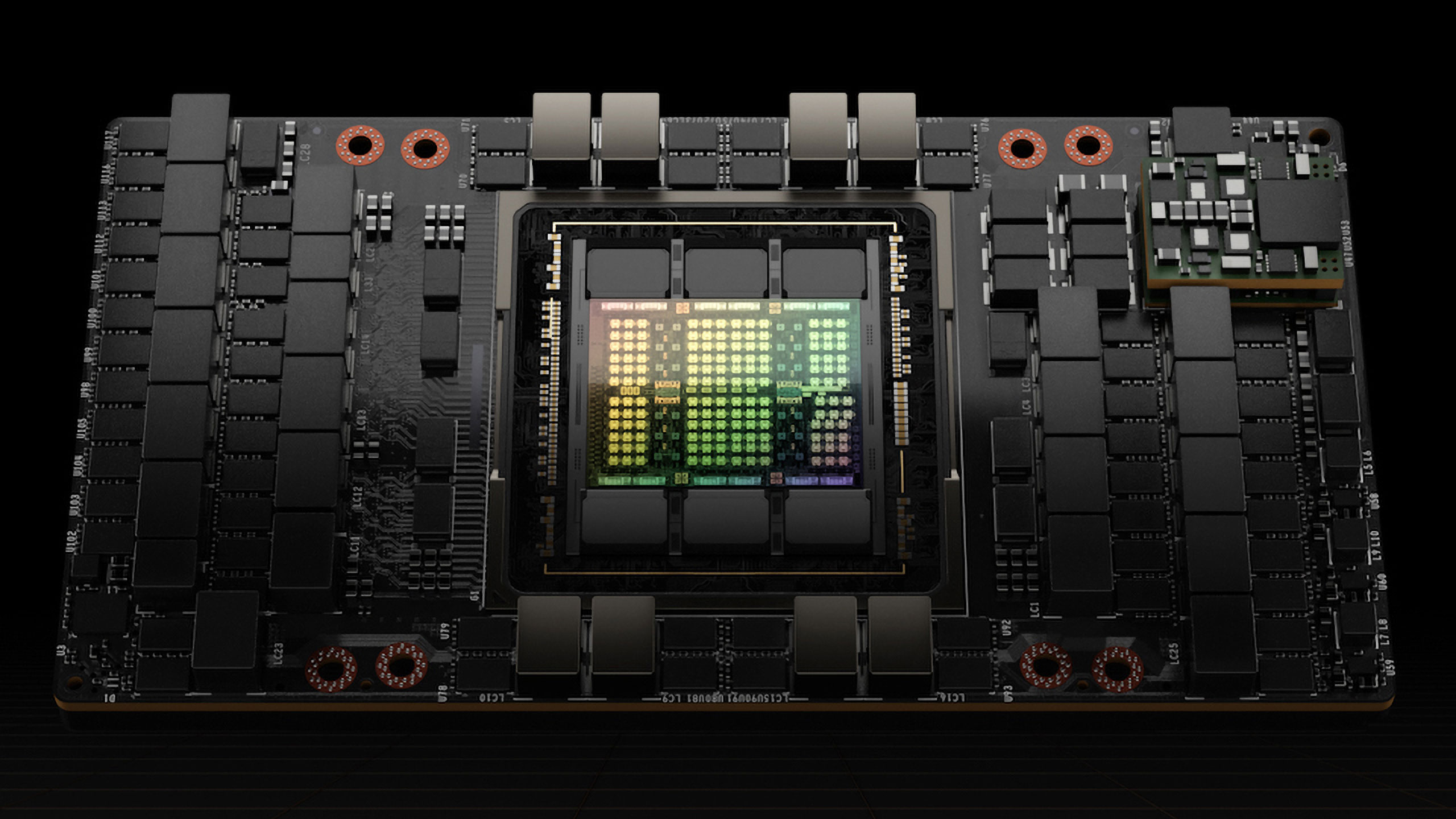

Nvidia is prepping three new GPUs for artificial intelligence (AI) and high-performance computing (HPC) applications that are tailored for the Chinese market and designed to comply with U.S. export requirements, according to ChinaStarMarket.cn. The new units will be based on the Ada Lovelace and Hopper architectures, according to the leaked information.

The AI and HPC products in question are the HGX H20, L20 PCIe, and L2 PCIe GPUs, and all of them are already heading to Chinese server makers, the report claims. Meanwhile, HKEPC has published a slide which claims that the new HGX H20 with 96 GB of HBM3 memory is based on the Hopper architecture and uses either severely cut-down flagship H100 silicon or a new Hopper-based AI and HPC GPU design. Since this is an unofficial piece of information, take it with a pinch of salt.

| GPU | HGX H20 | L20 PCIe | L2 PCIe |
|---|---|---|---|
| Architecture | Hopper | Ada Lovelace | Ada Lovelace |
| Memory | 96 GB HBM3 | 48 GB GDDR6 w/ ECC | 24 GB GDDR6 w/ ECC |
| Memory Bandwidth | 4.0 TB/s | 864 GB/s | 300 GB/s |
| INT8 / FP8 Tensor | 296 / 296 TFLOPS | 239 / 239 TFLOPS | 193 / 193 TFLOPS |
| BF16 / FP16 Tensor | 148 / 148 TFLOPS | 119.5 / 119.5 TFLOPS | 96.5 / 96.5 TFLOPS |
| TF32 Tensor | 74 TFLOPS | 59.8 TFLOPS | 48.3 TFLOPS |
| FP32 | 44 TFLOPS | 59.8 TFLOPS | 24.1 TFLOPS |
| FP64 | 1 TFLOPS | N/A | N/A |
| RT Core | N/A | Yes | Yes |
| MIG | Up to 7 MIG | N/A | N/A |
| L2 Cache | 60 MB | 96 MB | 36 MB |
| Media Engine | 7 NVDEC, 7 NVJPEG | 3 NVENC (+AV1), 3 NVDEC, 4 NVJPEG | 2 NVENC (+AV1), 4 NVDEC, 4 NVJPEG |
| Power | 400 W | 275 W | TBD |
| Form Factor | 8-way HGX | 2-slot FHFL | 1-slot LP |
| Interface | PCIe Gen5 x16: 128 GB/s | PCIe Gen4 x16: 64 GB/s | PCIe Gen4 x16: 64 GB/s |
| NVLink | 900 GB/s | - | - |
| Samples | November 2023 | November 2023 | November 2023 |
| Production | December 2023 | December 2023 | December 2023 |

When it comes to performance, the HGX H20 offers 1 TFLOPS of FP64 for HPC (vs. 34 TFLOPS on the H100) and 148 TFLOPS of FP16/BF16 (vs. 1,979 TFLOPS on the H100). Nvidia's flagship compute GPU would seem too expensive to cut down this far and sell at a steep discount, though there may be other explanations. The company already has a lower-end A30 AI and HPC GPU with 24 GB of HBM2 that is based on the Ampere architecture, and it is cheaper than the A100. In fact, the A30 is faster than the HGX H20 in both FP64 and FP16/BF16 formats.
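The gap to the H100 is easier to judge as a ratio. The following is a minimal sketch that uses only the figures quoted in this article; the dictionary and function names are illustrative, not part of any Nvidia tool.

```python
# Throughput figures in TFLOPS, exactly as quoted in the article.
h20 = {"FP64": 1.0, "FP16/BF16 Tensor": 148.0}
h100 = {"FP64": 34.0, "FP16/BF16 Tensor": 1979.0}


def fraction_of_h100(fmt: str) -> float:
    """Return HGX H20 throughput as a fraction of H100 throughput for one format."""
    return h20[fmt] / h100[fmt]


if __name__ == "__main__":
    for fmt in h20:
        print(f"{fmt}: HGX H20 delivers {fraction_of_h100(fmt):.1%} of H100")
```

With the quoted numbers, the HGX H20 lands at roughly 3% of the H100 in FP64 and roughly 7.5% in FP16/BF16.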

As for the L20 and L2 PCIe AI and HPC GPUs, these seem to be based on cut-down versions of Nvidia's AD102 and AD104 GPUs and will address the same markets as the L40 and L40S products.

In the last couple of years, the U.S. has imposed stiff restrictions on high-performance hardware exports to China. The U.S. controls for the Chinese supercomputer sector imposed in October 2022 are focused on preventing Chinese entities from building supercomputers with performance of over 100 FP64 PetaFLOPS within 41,600 cubic feet (1,178 cubic meters). To comply with U.S. export rules, Nvidia had to cut inter-GPU connectivity and GPU processing performance for its A800 and H800 GPUs.
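To put the October 2022 supercomputer threshold in perspective, here is a rough sketch that checks the volume conversion and counts how many GPUs it would take to reach 100 FP64 PetaFLOPS. It uses only the per-GPU FP64 figures quoted in this article and ignores interconnect, CPUs, and real-world efficiency, so treat the counts as a back-of-the-envelope estimate. The helper name `gpus_to_reach` is hypothetical.

```python
import math

# 1 ft is defined as exactly 0.3048 m, so the cubic conversion is exact.
CUBIC_FEET_TO_CUBIC_METERS = 0.3048 ** 3

THRESHOLD_PFLOPS = 100       # FP64 limit quoted in the article
VOLUME_CUBIC_FEET = 41_600   # volume quoted in the article

volume_m3 = VOLUME_CUBIC_FEET * CUBIC_FEET_TO_CUBIC_METERS


def gpus_to_reach(threshold_pflops: float, tflops_per_gpu: float) -> int:
    """Smallest GPU count whose combined peak FP64 rate meets the threshold."""
    return math.ceil(threshold_pflops * 1000 / tflops_per_gpu)


if __name__ == "__main__":
    print(f"Volume: {volume_m3:.0f} cubic meters")
    print("H100 (34 TFLOPS FP64):", gpus_to_reach(THRESHOLD_PFLOPS, 34.0))
    print("HGX H20 (1 TFLOPS FP64):", gpus_to_reach(THRESHOLD_PFLOPS, 1.0))
```

On paper, that is about 2,942 H100 GPUs versus 100,000 HGX H20 GPUs for the same peak FP64 rate.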

Now, the limitations set in November 2023 require export licenses on all hardware that achieves a certain total processing performance and/or performance density, no matter whether the part can efficiently connect to other processors (using NVLink in Nvidia's case) or not. As a result, Nvidia can no longer sell A100, A800, H100, H800, L40, L40S, and GeForce RTX 4090 to Chinese entities without an export license from the U.S. government. To comply with the new rules, HGX H20, L20 PCIe, and L2 PCIe GPUs for AI and HPC compute will not only come with reduced NVLink connectivity, but also with reduced performance.
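The article does not spell out how total processing performance (TPP) is calculated. The sketch below assumes the commonly cited reading of the U.S. rule: TPP is peak dense throughput in TFLOPS or TOPS multiplied by the bit length of the operation, taking the highest result across formats, with 4,800 as the headline license threshold. Both the formula and the threshold are assumptions that do not come from this article, and the sketch skips the performance density tiers because die areas are not given here. The throughput values are the leaked figures from the table above, assumed to be dense (non-sparse) rates.

```python
# Leaked tensor throughput from the table above, keyed by operation bit length.
# Assumption: these are dense (non-sparse) figures.
specs = {
    "HGX H20": {8: 296.0, 16: 148.0},
    "L20 PCIe": {8: 239.0, 16: 119.5},
    "L2 PCIe": {8: 193.0, 16: 96.5},
}

# Assumption: headline TPP license threshold, not stated in the article.
ASSUMED_TPP_LICENSE_THRESHOLD = 4800


def tpp(throughput_by_bits: dict[int, float]) -> float:
    """Assumed TPP: the maximum of (bit length x peak throughput) over all formats."""
    return max(bits * rate for bits, rate in throughput_by_bits.items())


if __name__ == "__main__":
    for name, throughput in specs.items():
        score = tpp(throughput)
        status = "below" if score < ASSUMED_TPP_LICENSE_THRESHOLD else "at or above"
        print(f"{name}: TPP {score:.0f} ({status} the assumed threshold)")
```

Under these assumptions, all three parts score well under 4,800. A part below that figure can still be restricted if its performance density is high enough, so this check alone does not establish compliance.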

Interestingly, Nvidia recently launched its A800 and H800 AI and HPC GPUs in the U.S., formally targeting small-scale enterprise AI deployments and workstations. Keeping in mind that the company can no longer sell these units to companies in China, Saudi Arabia, the United Arab Emirates, and Vietnam, this is a good way to clear out the inventory. We wouldn't expect these parts to be interesting for large cloud service providers in the U.S. and Europe, but smaller deployments may find them useful.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


elforeign

Can someone help me understand the logic here? So they will produce H100 GPUs destined for the Chinese market with cut-down performance, I'm guessing by having to cut down the hardware itself and not just software-coded limitations. But isn't the real problem here that these will still be designed and manufactured by TSMC with the latest technologies? How's that going to bypass the restrictions meant to keep the Chinese from obtaining cutting-edge manufacturing tech and designs?

They could just reverse engineer the chip to view how it was made. Not that they couldn't find ways to do that already, but this trade war seems a lot more problematic than it's worth?

JarredWaltonGPU

The current export controls mostly target computational potential within a given volume of space, not the tech used to make the parts. RTX 4080 and below, all made using the same TSMC 4N process as the RTX 4090 and H100, can still be sold in China. It seems these new parts come in below the current limit and are thus allowable.

There are two main questions now, however. First, will Chinese companies even be interested in these gimped parts? Maybe, maybe not. But the bigger issue is that even if companies in China do start buying these GPUs, what's to prevent the U.S. government from lowering the limit yet again? The H800 and A800 after all were made to comply with the 2022 restrictions. If someone thinks the L20 or H20 are "too fast" in the coming months, we'll see the same scenario play out yet again.

There's also the question of clocks and whether some of these could be tweaked to regain lost performance. We don't have exact specs yet, but I wouldn't put it past certain players over in China to try to alter the hardware to get performance back closer to H800 levels.

elforeign

I see, thank you for that explanation. I could certainly see the bar being lowered yet again. I guess we'll have to wait and see what the specs for the revised chips will be and what headroom that will afford those possessing them to extract more performance.

Another question I have is, what is the underlying fear? So far I have understood the export controls as meant to significantly slow down the Chinese market's ability to be a player in manufacturing and production of advanced lithography chips, which can then be used for defense purposes. But is it to slow down their progress in AI? To slow down their ability to innovate their technology to then threaten the West vis-à-vis computational power to what, hack infrastructure? Defeat security to intrude into sensitive systems?

Obviously IP theft and using technology to engage in cyberwarfare is bad, but I don't yet understand what we are really trying to slow down the Chinese from doing, and how slow do we want/hope them to be moving? It doesn't seem likely we will stop them from achieving their goals, but slow them down long enough for us to do what exactly?

thisisaname

Either allow them to have them or not. Giving them a cut-down version does little if the "cut downness" can be "fixed", which the Chinese may be able to do. After all, they are quite bright and they have the resources to do it!

JarredWaltonGPU

That is the billion dollar question. Certainly, there are elements within the US gov't that feel China is a threat. I think that's not just militarily, but economically, technologically, etc. AI isn't even fully understood by most people working in the field, never mind politicians, so it feels to me like there's an "AI = BAD!" mentality and "AI in the hands of our enemies = REALLY BAD!"

I'm not sure those people are wrong, but I'm also quite sure that, given time, China is likely to overcome most of the hurdles being thrown up by these export controls. The best-case scenario is that if China is ten years behind the US, it will take ten years to get to where we are now. And then hopefully we'd be ten years further ahead! But I suspect it won't work out quite like that in practice.

elforeign

It's going to be interesting to see how it plays out in the next few years. The U.S. and E.U. will need to coordinate closely for the export controls to be even marginally successful. R&D for advanced lithography and semiconductor design, and the resulting products (CPU/GPU), are where we are still ahead of the efforts of the Chinese et al. But manufacturing and raw material sourcing will be hampered on our end because of the historical offshoring of the supply chain to these countries, and it's only a matter of days before we hear what the response from China will be with respect to their added export controls.

I wonder if/when there will be a noticeable change in International Relations and Globalism when military power isn't so much measured in how many nukes you can shoot off at once, but rather by whose FLOPS determine the flow of money and power.

Looks like the 5080 will be coming at $1,999 - and you better like it! :D