AMD GPUOpen: Doubling Down On Open-Source Development

Last week, AMD began what will undoubtedly prove to be a slow trickle of information leading up to its next-generation graphics architecture launch.

In AMD GPUs 2016: HDR, FreeSync Over HDMI And New Standards, we discussed the company’s plans to implement DisplayPort 1.3 and HDMI 2.0a support, expand FreeSync availability with extensions that enable it over HDMI interfaces, and pave the way for HDR playback through its display pipeline.

Today’s software-oriented disclosures are far less tangible to PC enthusiasts, but arguably more critical to AMD’s long-term prospects, because they affect the way developers interact with hardware.

Introducing GPUOpen

Both AMD and Nvidia claim to have their finger on the pulse of software development, regularly pulling in members of the community willing to stand behind their respective philosophies. It follows, then, that the two tend to tell hand-picked stories. Nvidia likes to advocate the advantages of its ready-to-integrate middleware, which is optimized for the company’s hardware but proprietary in nature, often causing issues for the competition. Conversely, AMD rallies behind the open source banner, promoting accessibility and the benefits of collaboration. That’s really what developers want more of, AMD argues.

According to Nicolas Thibieroz, senior manager of the RTG’s ISV engineering team, porting games between consoles and PCs is one of the most identifiable challenges facing developers (of course, when your graphics technology powers the PlayStation 4, Xbox One and millions of PCs, that would be at the top of the list, right?). Fueled by an incentive to facilitate cross-platform development, AMD set out to solve some of the problems it was seeing.

Nicolas identified two principal issues that hamper progress: limited access to the GPU itself and black-box effects/tools/libraries/SDKs. Nvidia addressed the former through its NVAPI, which transcends the scope of DirectX and OpenGL to facilitate more granular control over the graphics subsystem. The latter complaint, of course, is aimed at Nvidia’s GameWorks program.

AMD’s response is called GPUOpen, and it’s being framed as a continuation of the work that went into Mantle. In an unapologetic shot across Nvidia’s bow, AMD’s Thibieroz said GPUOpen includes visual effects, driver utilities, troubleshooting tools, libraries and SDKs—all of which will be open source. The idea is that developers should have code-level access to the effects they use, an opportunity to make modifications, and the freedom to share their efforts. The implied win for AMD would be that the company benefits from improvements made along the way.


GPUOpen will become accessible through an online portal with links to open source content, blog posts written by AMD staff and guest writers, and industry updates. All of the linked code will be hosted on GitHub, enabling the benefits of that site’s collaborative functionality.

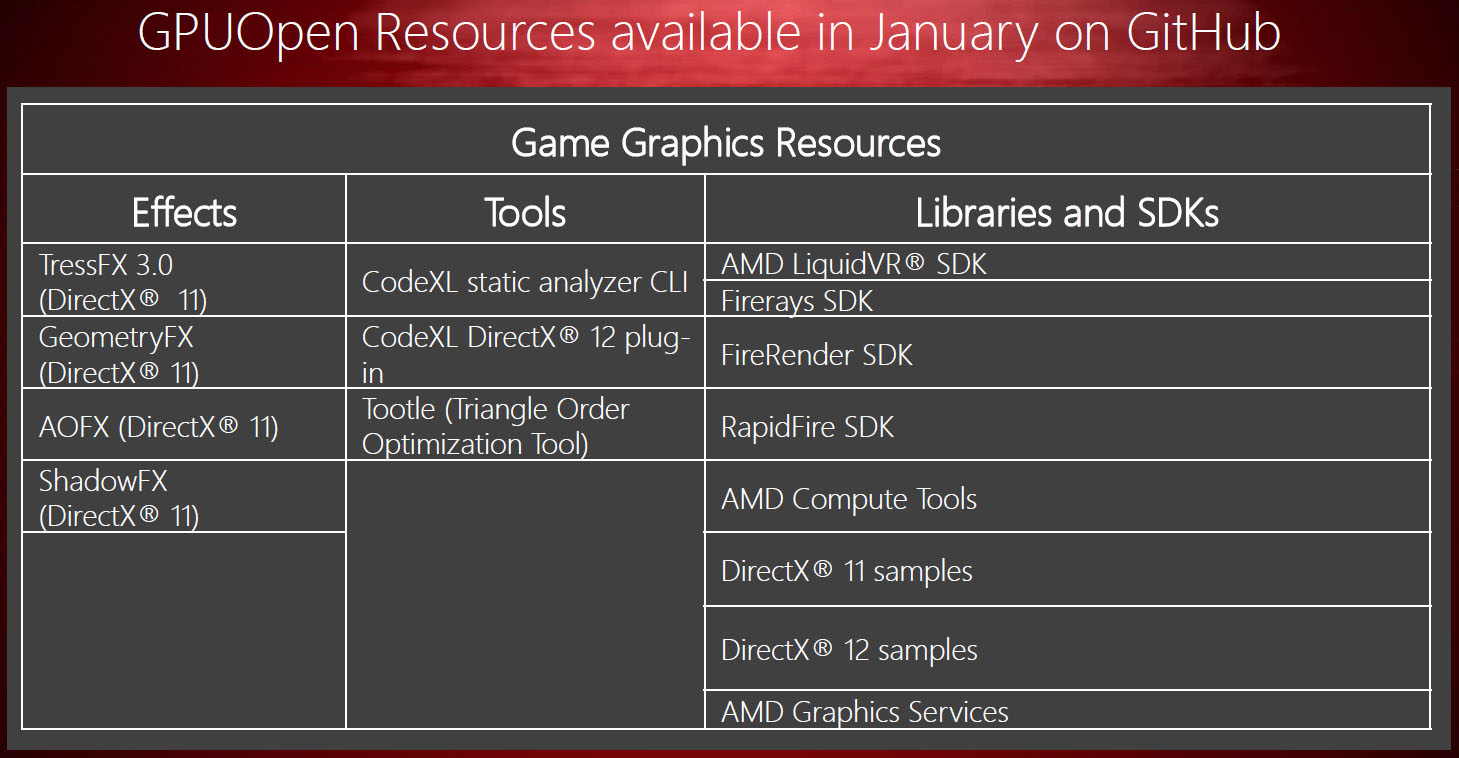

When AMD launches GPUOpen this coming January, it plans to provide access to TressFX 3.0, GeometryFX, AOFX, ShadowFX, a handful of tools, the LiquidVR SDK, DirectX 11 and 12 code samples, compute tools, and several other SDKs. The company is emphatic about its dedication to open source, too. All of the software available through GPUOpen is provided under the permissive MIT License, which allows use, modification and redistribution with few conditions.

Extending GPUOpen To Compute

Back in November, AMD announced its Boltzmann initiative, which included a headless 64-bit Linux driver focused on the HPC market, a heterogeneous compute compiler aimed at the same space, and a toolset for converting CUDA code into portable C++.

AMD acknowledged that it previously took its graphics driver and essentially repackaged it for HPC server use, creating a workable but not entirely optimized compute solution. Now it’s redesigning the driver, pulling focus from the graphics pipeline and addressing the HPC market’s greater sensitivity to latency.

The Boltzmann driver simultaneously enables a superset of the official HSA standard. Much of the discourse we’ve had with AMD regarding compute involved its APUs. However, the company is now putting emphasis on enabling HSA functionality through discrete graphics cards (specifically, its FirePro boards)—a must if it wants to gain ground on Nvidia’s incumbent compute ecosystem.

Of course, it’s all well and good to tune AMD’s dGPUs for compute workloads. But without traction on the development side, the company knows it can’t make inroads. OpenCL is notoriously challenging, so AMD wanted a more accessible tool for programming its host and graphics processors. The open source Heterogeneous Compute Compiler leverages C++, so it’s higher-level than writing for OpenCL. And as C++ evolves, the HCC will incorporate the latest standards. It already contains an early implementation of a feature called Parallel STL for parallelizing execution across available resources.

Lastly, AMD will make it possible to convert CUDA code to portable C++ through HIP, its Heterogeneous-compute Interface for Portability. The potential implications of HIP are huge, as developers already familiar with CUDA can extend their reach to AMD hardware fairly painlessly. Nvidia has the head start in this segment, though we’ve heard there are customers who don’t necessarily like being locked down to a single source, particularly as Nvidia explores licensing its software. AMD’s open approach offers a way out of the proprietary ecosystem.

Fascinated by the possibilities, we asked AMD senior fellow Ben Sander about the legal ramifications of facilitating the translation of CUDA code, and he assured us that HIP’s capabilities are based on publicly-available documentation; the CUDA API isn’t implemented anywhere. There’s no inherent performance loss either, though any specific tuning for an Nvidia architecture’s cache sizes or registers would need to be revisited.
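To make the idea of source-level conversion concrete, here is a minimal sketch of the renaming step, assuming a purely textual translator. The function `toy_hipify` and the `kRenames` table are hypothetical names invented for this illustration. The HIP identifiers in the table are real counterparts of the CUDA runtime calls shown, but AMD's actual tooling covers far more of the API and does much more than string replacement.

```cpp
#include <cstddef>
#include <string>

// Hypothetical, deliberately tiny rename table: a handful of CUDA runtime
// names and their HIP counterparts. Longer names are listed first so that
// a shorter name never rewrites part of a longer one.
struct Rename {
    const char* from;
    const char* to;
};

const Rename kRenames[] = {
    {"cudaMemcpyHostToDevice", "hipMemcpyHostToDevice"},
    {"cudaDeviceSynchronize", "hipDeviceSynchronize"},
    {"cudaMemcpy", "hipMemcpy"},
    {"cudaMalloc", "hipMalloc"},
    {"cudaFree", "hipFree"},
};

// Replaces every occurrence of each known CUDA name with its HIP name.
// This is plain text substitution, so it would also rewrite matches inside
// comments, strings or longer identifiers; a real tool parses the source.
std::string toy_hipify(std::string source) {
    for (const Rename& r : kRenames) {
        const std::string from = r.from;
        const std::string to = r.to;
        std::size_t pos = 0;
        while ((pos = source.find(from, pos)) != std::string::npos) {
            source.replace(pos, from.size(), to);
            pos += to.size();  // continue searching after the inserted text
        }
    }
    return source;
}
```

Passing `"cudaMalloc(&p, n); cudaFree(p);"` through `toy_hipify` yields `"hipMalloc(&p, n); hipFree(p);"`, while code that uses none of the listed names comes back unchanged. Any tuning for a specific Nvidia architecture would still need to be revisited by hand, as Sander noted.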

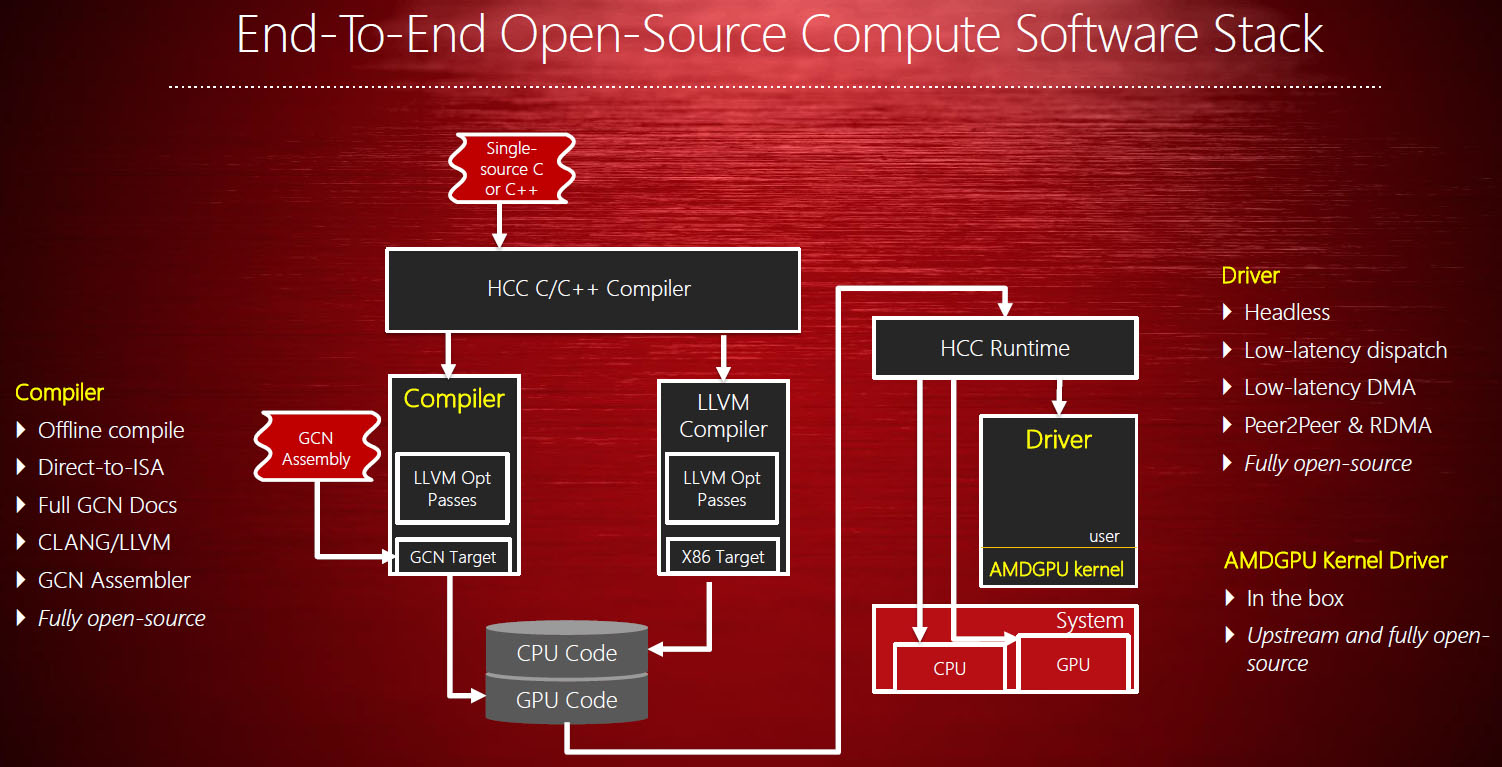

All of this was introduced at SC15 in Austin, Texas. At its recent technology summit in Sonoma, AMD went into more detail on the software stack, including what it calls its Lightning compiler, the previously-discussed Boltzmann driver, and the AMDGPU kernel driver (highlighted in yellow in the chart above). The Lightning compiler is responsible for generating GPU code all the way down to the ISA—a boon, AMD said, compared to solutions that go through intermediate languages.

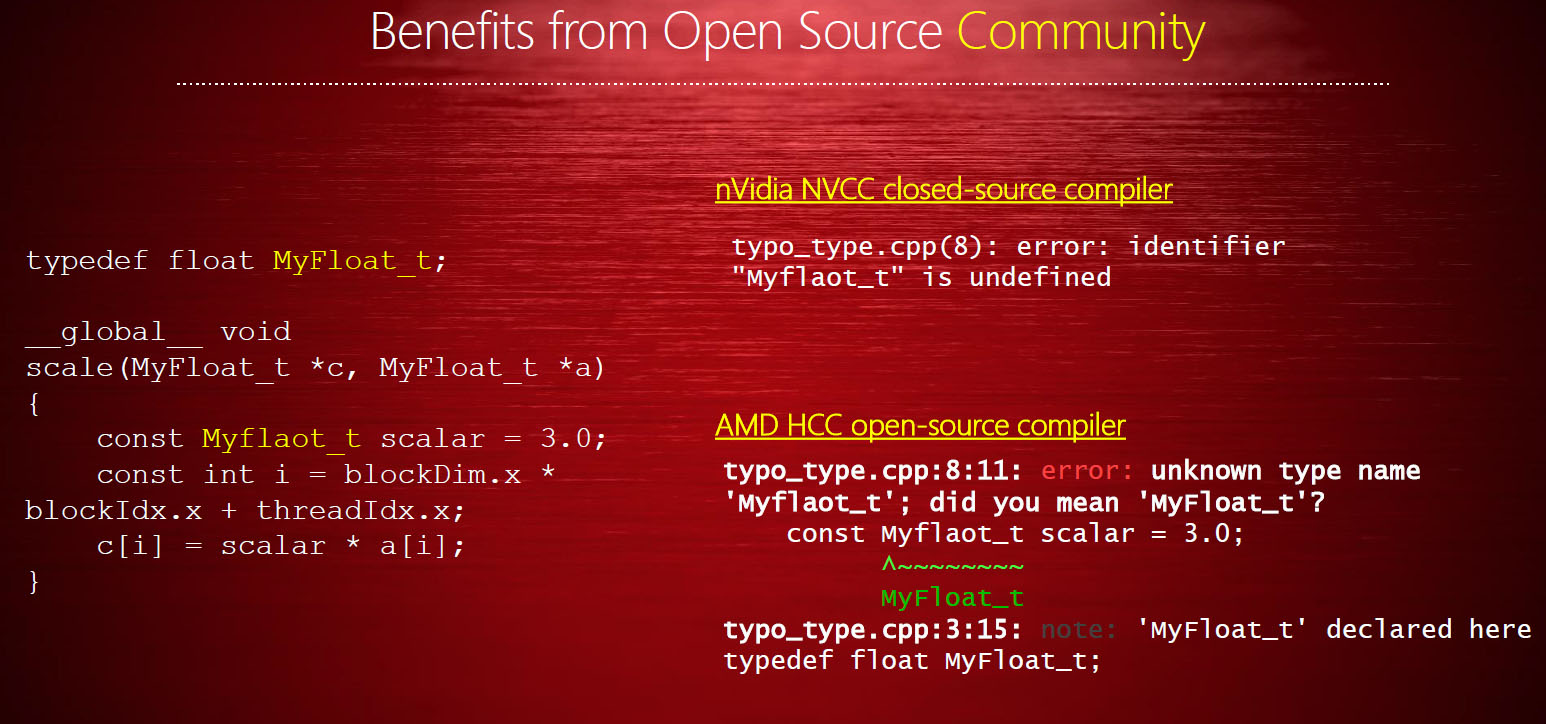

The compiler and drivers have open source accessibility in common. To illustrate the community’s collaborative power, AMD presented an example of how Nvidia’s NVCC and its own HCC handle the same error: a single typo that prevented a code snippet from compiling. NVCC flagged the error but left it to the developer to figure out what went wrong. Because HCC employs the open source Clang compiler front-end, which has its own spell-checker, it also suggests a fix.
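The kind of "did you mean" hint described here can be approximated with an edit-distance search over known identifiers. The sketch below is a simplified illustration of that idea, not Clang's actual implementation; the function names `edit_distance` and `suggest` and the default threshold of 2 are assumptions made for this example.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Classic Levenshtein distance: the minimum number of single-character
// insertions, deletions and substitutions needed to turn a into b.
std::size_t edit_distance(const std::string& a, const std::string& b) {
    std::vector<std::size_t> prev(b.size() + 1), cur(b.size() + 1);
    for (std::size_t j = 0; j <= b.size(); ++j) prev[j] = j;
    for (std::size_t i = 1; i <= a.size(); ++i) {
        cur[0] = i;
        for (std::size_t j = 1; j <= b.size(); ++j) {
            const std::size_t subst = prev[j - 1] + (a[i - 1] == b[j - 1] ? 0 : 1);
            cur[j] = std::min({prev[j] + 1, cur[j - 1] + 1, subst});
        }
        std::swap(prev, cur);
    }
    return prev[b.size()];
}

// Returns the known identifier closest to the unknown one, or an empty
// string when nothing lies within max_distance edits (assumed threshold).
std::string suggest(const std::string& unknown,
                    const std::vector<std::string>& known,
                    std::size_t max_distance = 2) {
    std::string best;
    std::size_t best_dist = max_distance + 1;
    for (const std::string& candidate : known) {
        const std::size_t d = edit_distance(unknown, candidate);
        if (d < best_dist) {
            best_dist = d;
            best = candidate;
        }
    }
    return best;
}
```

Given the known identifiers `vector`, `string` and `size`, a mistyped `vectr` maps back to `vector`, whereas an unrelated name gets no suggestion at all. A compiler front-end can attach a hint like this to its "undeclared identifier" error instead of reporting the error alone.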

It’s easy to see how developers might benefit from the open source nature of these tools. By extension, the applications being written can be more granularly optimized, yielding better performance. There’s also an element of transparency that AMD said is important to certain customers in the HPC space. Access to the code can be useful for validating security and fixing bugs.

AMDGPU: Rethinking AMD’s Approach To Linux

As part of GPUOpen, AMD is discussing changes to the way it approaches Linux support, from the embedded and client markets up to HPC applications. The foundation of its strategy is AMDGPU, a base kernel driver that supports all of AMD’s 2015 GPUs/APUs. One of its purposes, according to senior fellow Christopher Smith, is enabling broader support for Linux distributions. A fully validated version of the driver is already integrated in Ubuntu and Fedora. AMD is also working to accelerate access to products and features so that when new hardware launches, it’s already supported. That’s something the company says is particularly difficult when you’re working with a proprietary Linux stack.

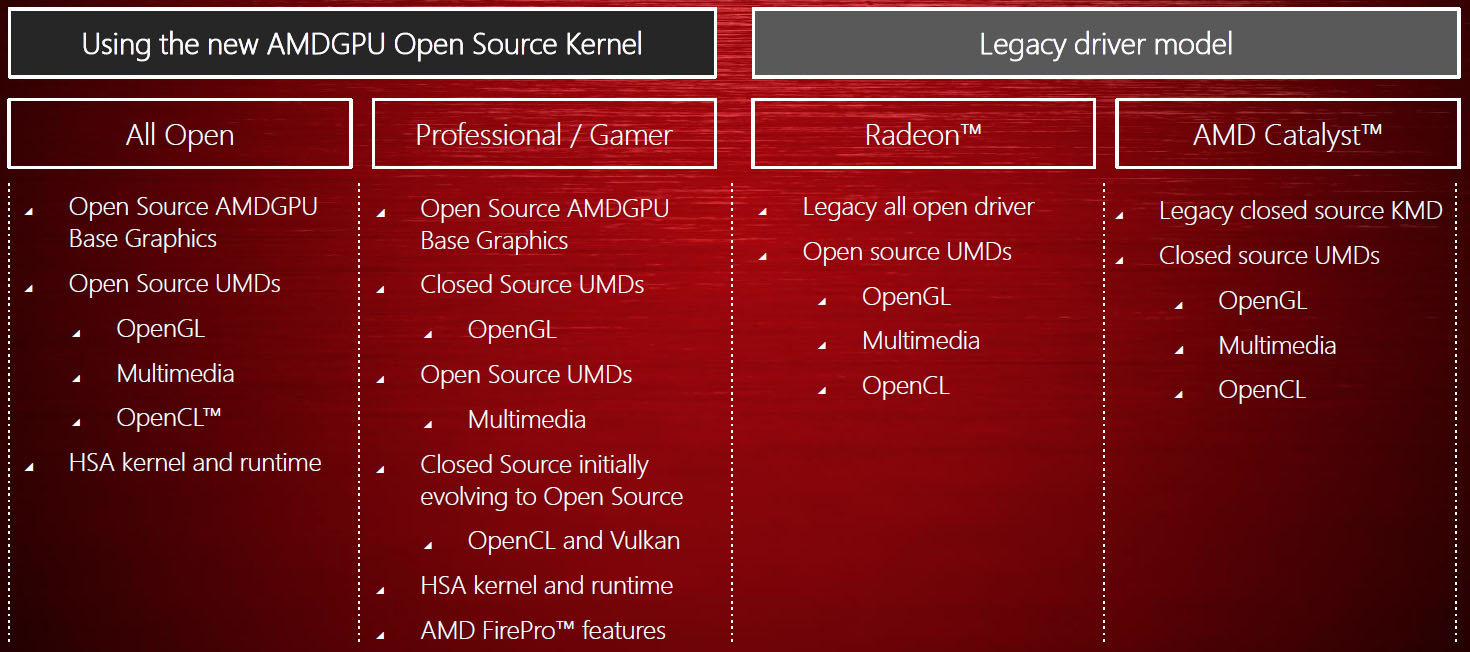

Currently, AMD offers an open source Radeon driver and a closed source Catalyst driver, both built on separate kernel-mode drivers. Smith said that AMDGPU consolidates their advantages, allowing closed and open source user-mode drivers to run on top of it. The above chart illustrates how that will look compared to AMD’s legacy model. At the far left, you have the all-open option with an AMDGPU base, open-source user-mode drivers built in Mesa, and HSA kernel and runtime, available upstream as an integrated package to distributions. The version aimed at professionals and gamers starts with the same AMDGPU base, incorporates both closed- and open-source UMDs, and will eventually offer open OpenCL and Vulkan drivers, as well.

There’s More To Come

We’re fairly certain that the next bit of news from AMD will start the buzz that grows to a roar ahead of new hardware. For now, the spotlight is on GPUOpen, the company’s playbook for accelerating cross-platform game development, adding substance to a stalled run at the HPC market and refocusing its Linux strategy. The initiative should help AMD exploit the range of hardware housing its IP and shore up other corners of its portfolio in need of reinforcement. The brilliance, of course, is that an upfront investment in open source pays dividends later when the community gets more involved. Will it be months before we start seeing the products of today’s announcements? Years? As with so many initiatives before it, we’ll have to wait to gauge the impact of GPUOpen.

-

jimmysmitty I can understand why AMD is going more towards an open software agenda. It is hard to work with big companies and develop your own proprietary software with low R&D budgets.

I am all for it but I do think in the end they are expecting companies to actually pick up and do this more on their own instead of nVidia who tends to work with the companies.

One downside I see is the more access you give companies to your GPU itself, the more issues that are bound to come up. Yes you can get more performance but there is a reason why most everything in Windows runs through the API layer first, it prevents the hardware from crashing and taking Windows with it like it used to back in the Windows 9x days.

Maybe this will work out for the best, maybe it won't. Only time will tell.

-

firefoxx04 I'll believe the Linux support when I see it. People seem to have good luck with AMD Linux support but I don't. I have yet to have an install go well when using the official drivers.

-

waltsmith This article is just one more example of why people need to stand behind AMD, we need their competition in the marketplace.

A monopoly by INTEL or NVIDIA is something that is good for no one except INTEL or NVIDIA.

-

Foe West, replying to firefoxx04:

Same here, but I hear that the unofficial drivers are better, I haven't gotten a chance to try them yet.

-

royalcrown More likely open source because they can't seem to write good drivers lately! No, I am not an AMD hater either; they just have the stupidest bugs that shouldn't make it past QA, like the MS Surface 4 does!

-

hannibal, replying to royalcrown:

Both companies, Nvidia and AMD, have had very bad drivers from time to time... So that is not the reason for the open source push this time.

I think the real reason is that FreeSync, aka Adaptive-Sync, can win because AMD and Intel both support it, and so can every other company if they want to.

GPUOpen can win if AMD, Intel and all the other GPU makers support it. AMD is in such a tight spot that it cannot build a big closed system without being cornered.

-

cats_Paw, replying to jimmysmitty:

This was also one of the things that separated good companies from bad ones (in gaming that is).

9x Win crashes were very common but there were companies that had constantly stable products, and those products could be quite amazing.

I don't like the idea of limiting the devs through a barrier because it creates a lazy mindset for companies (kinda like FIFA from EA).

Today we have a lot of games going through the Win API and still being unplayable anyway, so why not reward those companies that want to do things well?

-

Bloob, replying to jimmysmitty:

Modern OSs isolate the display driver in such a way that it is extremely rare for it to affect system stability; mostly your screens just go black for a moment until the driver is reloaded (and failing that, a generic display driver is loaded).

edit: it's why, when installing / updating your display driver you don't necessarily have to reboot your system, and when you do have to, you only do it once... it was different back in the day...

-

gggplaya I don't see a problem with this, today's games have to be released on such a tight schedule with a crazy amount of detail in their graphics which only adds to the workload as compared to a decade ago.

The only real solution for many companies is to use a pre-made graphics engine which has taken much of the work out of the graphics side, and allows you to focus on the actual game and content.

So having full access to the video card is a great idea as these graphics engines may be able to squeeze out a few more fps compared to your competition on the same 3-4 graphics engines on the market which most games are based on.