AMD releases open-source GIM driver aimed at GPU virtualization, support for mainstream Radeon GPUs coming later

Tiny Corp's prodding has paid off.

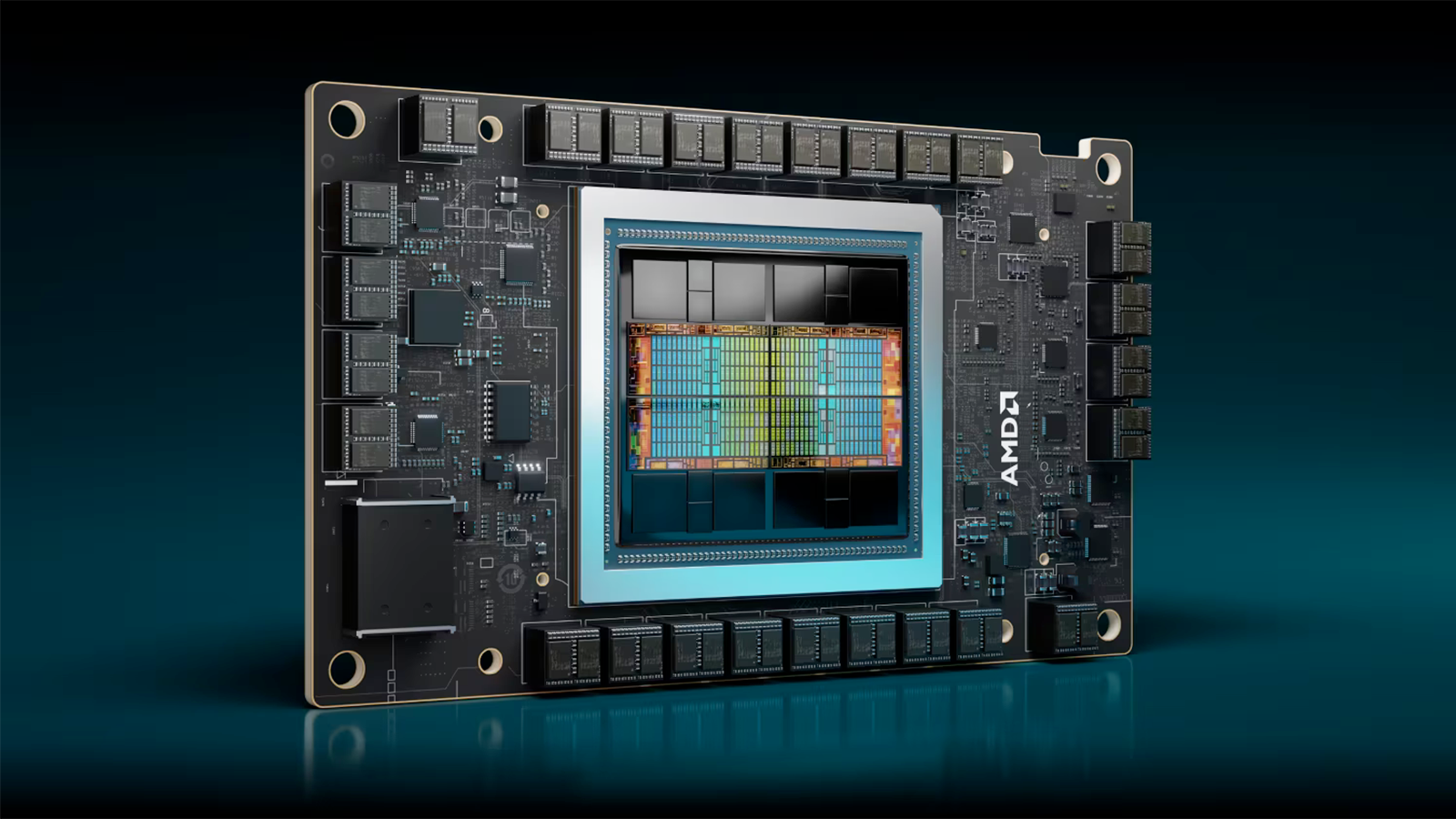

In continued efforts to open-source its software stack, AMD has made its GIM driver open-source for developers, according to Phoronix. The driver is used for virtualization with AMD's Instinct accelerators, but could be coming to Radeon desktop GPUs in the future, according to an AMD roadmap.

The AMD GPU-IOV (GIM) driver enables the various functions needed to ensure full virtualization support for the host GPU.

Currently, the GIM driver is compatible exclusively with the Instinct MI300X running on Ubuntu 22.04 LTS and using ROCm 6.4.

There is no information on when compatibility will expand or when AMD's open-source GIM driver will make its way into the mainline Linux kernel. The only detail given on the GIM driver's future is the upcoming support for Radeon desktop GPUs.

Regardless, it's a start. AMD is pushing forward with plans to open-source its software stack, something it has only seriously started doing this month. The push began back in February, when performance computing provider Hot Aisle lit a fire under AMD to start focusing on developers and making its hardware more accessible to AI devs.

This led to AMD finally broadening its AMD developer credit program, and giving devs a chance to try AMD's MI300X and Dell hardware for free.

Later, AMD started focusing on making its software open-source after AI hardware company Tiny Corp started pushing AMD to do so. Tiny Corp was having trouble adapting AMD's datacenter GPUs to its "TinyBox" servers, noting the GPUs were not behaving as they should. Having open-source software, firmware, and drivers would have enabled the server manufacturer to rectify these issues and customize AMD's datacenter GPUs to suit their needs.

The GIM driver is one of AMD's first drivers to be made open-source. The company has also confirmed it is making its Micro Engine Scheduler (MES) open-source, but documentation and source code for that won't be released until late May.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


TJ Hooker

If this pans out for consumer cards, it could be pretty handy for any Linux users with AMD GPUs who have GPU-intensive apps (like games) that don't work well under Linux. AFAIK the functionality being discussed here would let you get the near-native performance of doing full GPU-passthrough into a Windows VM, without the usual drawback of having to give the VM exclusive access to the GPU (meaning you have no display from the host unless you have a 2nd GPU).

palladin9479

People need to comprehend how huge this is. Currently the only way to do GPU virtualization is to buy very expensive datacenter GPUs, then load the driver within the hypervisor. It's not that desktop GPUs can't do virtualization, it's that there is no driver that supports it, since both were closed-source black boxes. Now AMD open-sourcing their driver will make cheap virtualization possible.

DS426

Awesome! Good call, AMD. The sooner they can support and publish the documentation going all the way down to the consumer GPUs, the better.

abufrejoval

> palladin9479 said: People need to comprehend how huge this is. Currently the only way to do GPU virtualization is to buy very expensive datacenter GPUs, then load the driver within the hypervisor. It's not that desktop GPUs can't do virtualization, it's that there is no driver that supports it, since both were closed-source black boxes. Now AMD open-sourcing their driver will make cheap virtualization possible.

I'm not sure I follow.

First of all: GPU pass-through is generally not an issue any more, not even with Nvidia, which used to block driver installs on passed-through GPUs but eliminated those restrictions once they wanted their consumer GPUs to be usable for AI. I've run Windows VMs with games and CUDA having full access to the full GPU inside a VM on Proxmox or oVirt for many years, even before Nvidia removed the restrictions, because KVM included "cheats" to circumvent them.

What's meant with "GPU virtualization" is typically slicing the GPU into smaller bits so they can be allocated to distinct VMs.

That can be useful for some workloads, e.g. VDI, but the main issue is that it's really slicing and dicing the GPU into fixed-size partitions, not the ability to "multi-task" or overcommit GPU resources as if they were CPUs.

The trouble is that while CPUs are designed for multi-tasking and rapid context switches, both on a single core and on different cores in a CPU die, that's not the case for GPUs: GPU workloads are designed to run on a fixed number of GPU cores in matchingly sized wavefronts, with the collective size of register files probably approaching megabytes, rather than, say, the 16 registers in a VAX. So a task switch would take ages, while e.g. gaming workloads on a GPU tend to be really sensitive to latencies.
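To put rough numbers on that comparison, here is a back-of-the-envelope sketch in Python. The VAX figure (16 general registers, 32 bits each) follows the comment; the GPU figures (`GPU_COMPUTE_UNITS`, `GPU_REGISTER_FILE_KIB_PER_CU`) are hypothetical placeholders chosen only to land in the "megabytes" range and do not describe any real product.

```python
# Rough comparison of the register state a context switch has to save.
# GPU numbers below are HYPOTHETICAL placeholders, not real hardware specs.

VAX_REGISTERS = 16        # general registers, as cited in the comment
VAX_REGISTER_BYTES = 4    # 32-bit registers

GPU_COMPUTE_UNITS = 32              # assumption for illustration
GPU_REGISTER_FILE_KIB_PER_CU = 128  # assumption for illustration


def cpu_context_bytes(registers: int = VAX_REGISTERS,
                      width_bytes: int = VAX_REGISTER_BYTES) -> int:
    """Bytes of register state for a simple CPU context."""
    return registers * width_bytes


def gpu_context_bytes(compute_units: int = GPU_COMPUTE_UNITS,
                      kib_per_cu: int = GPU_REGISTER_FILE_KIB_PER_CU) -> int:
    """Bytes of register-file state across all compute units."""
    return compute_units * kib_per_cu * 1024


cpu_bytes = cpu_context_bytes()
gpu_bytes = gpu_context_bytes()
ratio = gpu_bytes // cpu_bytes

print(f"CPU context: {cpu_bytes} bytes")
print(f"GPU context: {gpu_bytes / 2**20:.1f} MiB")
print(f"GPU state is {ratio:,}x larger")
```

Even with these modest made-up figures, the GPU state is several orders of magnitude larger than the CPU state, which is the gist of the argument above.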

But to do anything but partitioning would require the hypervisor and perhaps the guest OS to really understand the nature and limitations of GPU...which they don't today. Most operating systems treat GPUs little better than any other I/O device.

And when it comes to the ability to partition GPUs between VMs, I can't see that the ability to split an RTX 5090 into say two RTX 5080 or four RTX 5060 equivalents would be extremely popular with PC owners.

It's nice to have a feature Nvidia charges extra for without the premium, and perhaps another way for AMD to kick the competition, but unless they start getting ROCm ready for business on current consumer GPUs, it's little more than a PR stunt.

abufrejoval

I've always wondered when we'll see them sold on mobile-on-desktop boards for a penny: I guess it's sooner now rather than later.

Phoenix based Mini-ITX boards with a nice 8-core 5.1GHz Ryzen 7 are currently €300 with a similarly potent 780m iGPU (not that either is really great at gaming).

And for €500 you'll get a 16 core Dragon Range, just in case you'd prefer a beefier dGPU.

Those probably aren't making big bucks for AMD either, but at least they aren't selling them at a huge loss, unlike these Intel parts, which have cost a ton to make, but can't deliver any meaningful value advantage.

Still, scrapping them would be even more expensive, so I am keeping a lookout on AliExpress.

palladin9479

> abufrejoval said: I'm not sure I follow.

PCI pass-through and GPU virtualization are entirely different things. PCI pass-through just passes a physical port into a VM. GPU virtualization is when you do GPU HW acceleration inside VMs by using a host GPU. Currently this is only possible with expensive data center GPUs because there is no hypervisor driver for consumer GPUs that supports this.

We have hundreds of VDI desktops with virtualized GPUs by putting a bunch of A100s in our APEX stack.

abufrejoval

> palladin9479 said: PCI pass-through and GPU virtualization are entirely different things. PCI pass-through just passes a physical port into a VM. GPU virtualization is when you do GPU HW acceleration inside VMs by using a host GPU. Currently this is only possible with expensive data center GPUs because there is no hypervisor driver for consumer GPUs that supports this.
>
> We have hundreds of VDI desktops with virtualized GPUs by putting a bunch of A100s in our APEX stack.

Perhaps you can enlighten us on how this works in your environment and what exactly the VMs get to see in terms of virtual hardware?

The ability to partition a GPU into smaller chunks has been around for a long time and across vendors. Even Intel iGPUs on "desktop" Xeons supported this, at least according to press releases of the time. And those partitioned vGPUs would then be passed through as distinct (smaller) GPUs to the VMs.

I actually still have a Haswell Xeon somewhere, which should be able to do that, but with an iGPU so weak I never felt like sharing it: it got a GTX 980ti instead, which had all Quadro features fused off or disabled in drivers.

That's the classic domain of Nvidia's Quadro series cards and good enough for some security sensitive CAD work, rather common in the defense sector from what I heard.

To my understanding this partitioning is what Nvidia also does for their cloud gaming with GeForce Now.

Of course you could also add an abstraction layer which uses GPU resources on the host to accelerate GPU operations inside a VM for VDI purposes. As far as I know this has been done at one time on Microsoft Servers for RDP sessions under the name RemoteFX.

I remember playing around with that: it was complex to set up and yielded modest gains at DirectX 10-level abstractions, targeting AutoCAD or similar, with nothing involving modern facilities like programmable shaders.

I've also used that approach on Linux using VirtualGL and run some graphics benchmarks (Univention Singularity among them) on V100 GPUs sitting in a data center 600 km away from the notebook it rendered to via VNC: since the V100 is a compute device, it was actually the only way to get graphics out of it, and performance wasn't bad... with exclusive access for a single client.

When it comes to CUDA workloads or DirectX 12 or Vulkan games with programmable shaders and massive textures, to the best of my knowledge they can't deal with dynamic or overcommitted resources: they'll ask about the number of GPU cores and their share of VRAM available on startup and expect to have those resources permanently available until they finish. They organize their wavefronts all around those GPU cores, and if some of those cores were to go missing or get pre-empted for somebody else's application, that could bring entire wavefronts to a stall.

And the memory pressure on VRAM would be even worse: you can't just swap gigabytes of textures (or machine learning model data) over a PCIe bus and get acceptable performance levels.
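A quick arithmetic sketch makes the scale of that problem concrete. Both inputs are assumptions picked for illustration: `WORKING_SET_GIB` is a hypothetical amount of texture or model data, and `LINK_GIB_PER_S` is a round figure roughly in line with the theoretical peak of a PCIe 4.0 x16 link; real sustained throughput would be lower.

```python
# How long does it take to move a VRAM working set over the bus,
# compared with the time budget of a single frame?
# All inputs are illustrative assumptions, not measurements.

WORKING_SET_GIB = 8.0    # hypothetical texture / model data to swap
LINK_GIB_PER_S = 32.0    # assumed round figure for a PCIe 4.0 x16 link
TARGET_FPS = 60          # assumed interactive frame rate


def transfer_ms(size_gib: float, bandwidth_gib_per_s: float) -> float:
    """Milliseconds needed to move size_gib at the given bandwidth."""
    return size_gib / bandwidth_gib_per_s * 1000.0


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at the given frame rate."""
    return 1000.0 / fps


swap_ms = transfer_ms(WORKING_SET_GIB, LINK_GIB_PER_S)
budget_ms = frame_budget_ms(TARGET_FPS)
frames_missed = swap_ms / budget_ms

print(f"swap time:    {swap_ms:.0f} ms")
print(f"frame budget: {budget_ms:.1f} ms")
print(f"one swap costs about {frames_missed:.0f} frames")
```

Under these assumptions a single swap of the working set takes far longer than one frame, even before counting the cost of swapping the previous tenant's data back out.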

If I'm wrong I'm happy to learn about it, but I just can't see several thousand GPU cores task switch dynamically, while the near lock-step wavefront nature of GPGPU code simply can't deal with those stepping out of line.

palladin9479

> abufrejoval said: Perhaps you can enlighten us on how this works in your environment and what exactly the VMs get to see in terms of virtual hardware?

In the case of the A40s each VM is a Windows desktop complete with full DX11 3D acceleration. This is used to provide acceleration to a host of applications that are designed to run on real GPUs.

https://www.nvidia.com/en-us/drivers/vgpu-software-driver/

Inside vmware we installed the nvidia vGPU drivers then configured Horizon to spin the desktops with the appropriate Windows vGPU driver.

Currently the only vGPU drivers that exist are for data center GPUs. Installing a commodity 4080 or 9070 XT into a server won't matter because there is no hypervisor vGPU driver nor a guest vGPU driver that could use it. AMD releasing theirs open source means the FOSS community can find ways to incorporate commodity AMD GPUs into it allowing for cheap desktop virtualization.

I had to look up our system RQ and I believe we are using A40 cards. They are the headless server add-in GPUs. Here is the documentation for driver support.

https://docs.nvidia.com/vgpu/13.0/grid-vgpu-release-notes-vmware-vsphere/index.html

abufrejoval

I carefully explained why this isn't nearly as huge a thing as many might be led to believe, and you try to refute that by arguing that it can't be true, because you're doing it.

But you fail to realize that your use case isn't what a TH audience would expect, while I patiently try to explain that the use cases they are more likely to expect, won't be supported the way they do, because it's impossible to do so.

> palladin9479 said: In the case of the A40s each VM is a Windows desktop complete with full DX11 3D acceleration. This is used to provide acceleration to a host of applications that are designed to run on real GPUs.

DX11 should be a first hint, because that's not DX12 or CUDA.

> palladin9479 said: https://www.nvidia.com/en-us/drivers/vgpu-software-driver/
>
> Inside vmware we installed the nvidia vGPU drivers then configured Horizon to spin the desktops with the appropriate Windows vGPU driver.
>
> Currently the only vGPU drivers that exist are for data center GPUs. Installing a commodity 4080 or 9070 XT into a server won't matter because there is no hypervisor vGPU driver nor a guest vGPU driver that could use it. AMD releasing theirs open source means the FOSS community can find ways to incorporate commodity AMD GPUs into it allowing for cheap desktop virtualization.
>
> I had to look up our system RQ and I believe we are using A40 cards. They are the server headless add in GPUs. Here's is the documentation for driver support.
>
> https://docs.nvidia.com/vgpu/13.0/grid-vgpu-release-notes-vmware-vsphere/index.html

So I've looked up your references and they pretty much confirm my theories.

You can use three basic ways to virtualize GPUs:

1. partition with pass-through of the slices

2. time-slice

3. new accelerated abstraction

Option #3 is historically perhaps the oldest variant, where hypervisor vendors implement a new abstract 3D-capable vGPU, which uses host GPU facilities to accelerate 3D primitives inside the VMs. I believe Nvidia called that vSGA, KVM has VirGL, VMware has its own 3D-capable VGA, Microsoft has RemoteFX, and there is the VirtualGL I already mentioned.

I'm sure there is more but the main problem there remains that those abstractions can't keep up with the evolution of graphics hardware or overcome the base issues of context switch overhead and VRAM pressure. They lag far behind in API levels and performance, while in theory they can evenly distribute the resources of the host GPU among all VMs.

Option #2 and option #1 are the classic alternatives for slicing and dicing any multi-core computing resource. For a single core, #2 is the only option, and CPUs have used it pretty much from the start. But as I tried to point out, the overhead of time-slicing is dictated by the context size. A few CPU registers are fine, but the register files of thousands of SIMD GPU cores measure in megabytes, and context switch overhead grew with the power of the GPUs. What appeared acceptable at first, and might even still work for vector-type CAD work that doesn't involve complex shaders or GPGPU code, may no longer be practical for interactive (game) use with modern APIs. And then you'd still have to partition the VRAM, because even if paging of VRAM is technically supported, the performance penalty would be prohibitive for anything except some batch-type HPC.
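The time-slicing trade-off can be sketched with a simple ratio: the share of wall-clock time spent on useful work is the slice length divided by slice length plus switch cost. The function name `useful_fraction` and all numbers below are hypothetical and serve only to show how the ratio degrades as the context to save and restore grows.

```python
# Share of time spent on real work when a device is time-sliced.
# Slice lengths and switch costs are HYPOTHETICAL illustration values.


def useful_fraction(slice_ms: float, switch_ms: float) -> float:
    """Fraction of wall-clock time left for work after switch overhead."""
    if slice_ms <= 0 or switch_ms < 0:
        raise ValueError("slice_ms must be > 0 and switch_ms must be >= 0")
    return slice_ms / (slice_ms + switch_ms)


# A cheap switch (small context) barely dents throughput.
cheap = useful_fraction(slice_ms=4.0, switch_ms=0.01)

# An expensive switch (large context) at the same slice length hurts a lot.
expensive = useful_fraction(slice_ms=4.0, switch_ms=1.0)

print(f"cheap switch:     {cheap:.1%} useful")
print(f"expensive switch: {expensive:.1%} useful")
```

Lengthening the slice recovers throughput, but only by making each tenant wait longer for its turn, which is exactly what latency-sensitive interactive workloads cannot tolerate.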

And that leads to option #1 as the most typical implementation in cases like cloud gaming.

But that isn't "huge", actually what each VM would get can only be smaller slices. And that won't be very popular when most people here actually yearn for the bigger GPU.
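As a minimal sketch of what option #1 means in practice, the snippet below splits a GPU's compute units and VRAM into equal fixed slices. The `Slice` type, the `partition` helper, and the 96-unit / 24 GiB card are all hypothetical; real partitioning schemes expose vendor-defined profiles rather than arbitrary divisions.

```python
# Fixed partitioning: every VM gets a permanently smaller GPU.
# Types, helper, and hardware figures are HYPOTHETICAL illustrations.
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    vm: str
    compute_units: int
    vram_gib: float


def partition(total_cus: int, total_vram_gib: float, vms: list[str]) -> list[Slice]:
    """Split compute units and VRAM into equal, non-overlapping slices."""
    if not vms:
        raise ValueError("need at least one VM")
    if total_cus % len(vms) != 0:
        raise ValueError("compute units must divide evenly across VMs")
    n = len(vms)
    return [Slice(vm, total_cus // n, total_vram_gib / n) for vm in vms]


slices = partition(total_cus=96, total_vram_gib=24.0,
                   vms=["vm0", "vm1", "vm2", "vm3"])
for s in slices:
    print(f"{s.vm}: {s.compute_units} CUs, {s.vram_gib:.1f} GiB VRAM")
```

Nothing is overcommitted here: the slices always sum to the physical card, so adding a VM can only shrink what every other VM receives.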

Long story short: when a vendor like AMD is giving something away for free, it's mostly because they can't really monetize it for much as it has low value. If there is a chance to make a buck they are much inclined to take it, even if sometimes they'll forgo a penny if they can make the competition lose a dime with it.

But here it's mostly marketing shenanigans and an attempt to raise misleading expectations that I don't want people to fall for.

If you didn't, that's great. But I had the impression that you were actually helping AMD in pushing a fairy tale, even if unintentionally.

TJ Hooker

> palladin9479 said: PCI pass-through and GPU virtualization are entirely different things. PCI pass-through just passes a physical port into a VM. GPU virtualization is when you do GPU HW acceleration inside VMs by using a host GPU. Currently this is only possible with expensive data center GPUs because there is no hypervisor driver for consumers GPUs that supports this.

PCIe passthrough gets you GPU HW acceleration within the VM, on regular hardware with regular drivers. E.g. for my RX 6600, I pass it through to the VM, then install the regular AMD desktop graphics drivers from within the guest OS. Running Ubuntu with the standard amdgpu driver on the host.

> palladin9479 said: Currently the only vGPU drivers that exist are for data center GPUs. Installing a commodity 4080 or 9070 XT into a server won't matter because there is no hypervisor vGPU driver nor a guest vGPU driver that could use it. AMD releasing theirs open source means the FOSS community can find ways to incorporate commodity AMD GPUs into it allowing for cheap desktop virtualization.