Nvidia engineer breaks and then quickly fixes AMD GPU performance in Linux

FOSS unites Red and Green.

In a surprising turn of events, an Nvidia engineer pushed a fix to the Linux kernel that resolves a performance regression on AMD integrated and dedicated GPUs (via Phoronix). As it turns out, the same engineer inadvertently introduced the problem in the first place with a set of kernel changes last week that attempted to increase the PCI BAR space to more than 10TiB. The change ended up incorrectly flagging the GPU as limited in the memory it can address, hampering performance, but thankfully the problem was quickly picked up and fixed.

In the open-source paradigm, it's an unwritten rule to fix what you break. The Linux kernel is open-source and accepts contributions from everyone, which are then reviewed. Responsible contributors are expected to help fix issues that arise from their changes. So, despite their rivalry in the GPU market, FOSS (Free and Open Source Software) is an avenue that bridges the chasm between AMD and Nvidia.

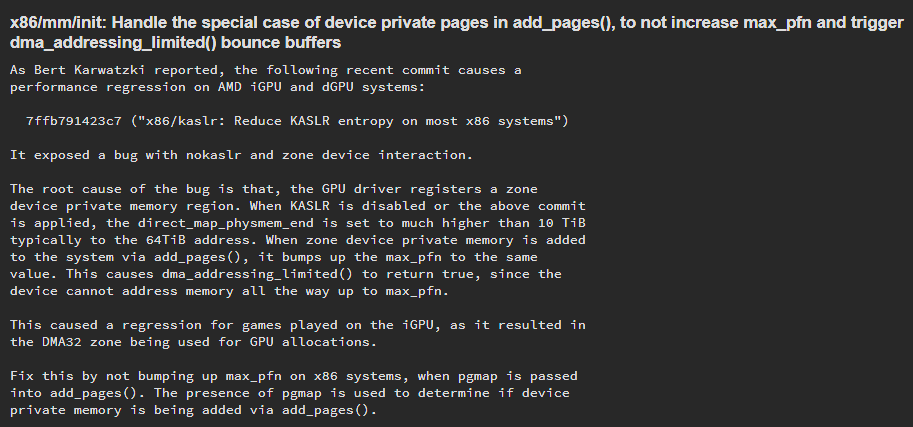

The regression was caused by a commit that was intended to increase the PCI BAR space beyond 10TiB, likely for systems with large memory spaces. This indirectly reduced KASLR (Kernel Address Space Layout Randomization) entropy on consumer x86 devices, which determines how random the location of the kernel's data in memory is on each boot, a security measure. At the same time, it artificially inflated the upper bound of the kernel's directly mapped memory (direct_map_physmem_end), typically to 64TiB.

In Linux, memory is divided into zones, one of which is the device zone (ZONE_DEVICE), which can be associated with a GPU. The problem is that when the kernel initialized zone device memory for Radeon GPUs, an associated variable (max_pfn), which represents the top of the RAM addressable by the kernel, would artificially increase to 64TiB.

Since the GPU likely cannot address the entire 64TiB range, dma_addressing_limited() would return true. That result essentially restricts the GPU to the DMA32 zone, which offers only 4GB of memory and explains the performance regression.
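The chain of events described above can be sketched as a simplified model. This is not the kernel's code: the helper names `required_mask` and `addressing_limited` are hypothetical, and the 44-bit GPU DMA mask and the 64GiB of installed RAM are assumptions chosen purely for illustration.

```python
PAGE_SHIFT = 12  # 4 KiB pages, as on x86-64
GIB = 1 << 30
TIB = 1 << 40


def required_mask(max_pfn: int) -> int:
    """All-ones mask wide enough to cover the highest address implied by max_pfn."""
    highest_addr = (max_pfn - 1) << PAGE_SHIFT
    return (1 << highest_addr.bit_length()) - 1


def addressing_limited(device_mask: int, max_pfn: int) -> bool:
    """Simplified stand-in for the kernel check: can the device reach all of memory?"""
    return device_mask < required_mask(max_pfn)


gpu_mask = (1 << 44) - 1                # assumption: GPU can address 16 TiB
real_ram_pfn = (64 * GIB) >> PAGE_SHIFT  # assumption: 64 GiB of installed RAM
inflated_pfn = (64 * TIB) >> PAGE_SHIFT  # max_pfn inflated to 64 TiB

print("limited with real RAM size:", addressing_limited(gpu_mask, real_ram_pfn))
print("limited with inflated max_pfn:", addressing_limited(gpu_mask, inflated_pfn))
```

Under these assumptions, the GPU only looks limited once max_pfn is inflated, even though the installed RAM has not changed.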

The good news is that the fix should take effect as soon as the pull request lands, right before the Linux 6.15-rc1 merge window closes today. With new kernels generally following six to eight weeks after that, we can expect the stable 6.15 release around late May or early June.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

-

qxp

> Rob1C said: You'd need an 8-way CPU to practically have enough slots for 64TiB.

Not necessarily. On newer systems you can expand RAM by using PCIe 5.0 slots. You could also memory map SSDs, it takes only 8 8TB SSDs to need more than 64TB addressing space.

-

USAFRet

So he didn't "improve performance".

Rather, he undid the performance limiter he pushed last week.

It was fine, before he screwed with it.

-

bit_user

> The article said: In the open-source paradigm, it's an unwritten rule to fix what you break. The Linux kernel is open-source and accepts contributions from everyone, which are then reviewed. Responsible contributors are expected to help fix issues that arise from their changes. So, despite their rivalry in the GPU market, FOSS (Free Open Source Software) is an avenue that bridges the chasm between AMD and Nvidia.

It's not just about good manners. If a contributor is found to behave in a malicious or excessively reckless manner, they could face a ban. I'm not aware of a case where this has happened, but I think the potential is real.

-

bkuhl

> bit_user said: It's not just about good manners. If a contributor is found to behave in a malicious or excessively reckless manner, they could face a ban. I'm not aware of a case where this has happened, but I think the potential is real.

It's happened:

https://www.tomshardware.com/news/university-researchers-apologize-linux-community

-

bit_user

> bkuhl said: Its happened:
>
> https://www.tomshardware.com/news/university-researchers-apologize-linux-community

Yeah, I knew of that incident. What I meant was one vendor acting maliciously towards another, in a strictly anti-competitive fashion.

-

Rob1C

> Rob1C said: You'd need an 8-way CPU to **practically** have enough slots for 64TiB.

> qxp said: Not necessarily. On newer systems you can expand RAM by using PCIe 5.0 slots. You could also memory map SSDs, it takes only 8 8TB SSDs to need more than 64TB addressing space.

I did say to be practical.

Using your suggested ideology you say we wouldn't need 8-way CPUs to get enough slots for the DIMM, because we could use 8 SSDs. With modern SSDs you'd only need one. Access and execution speed would be slow.

Similarly we could use fewer DIMM slots by simply using larger DIMMs:

https://www.tomshardware.com/news/samsung-talks-1tb-ddr5-modules-ddr5-7200

Large DIMMs like that are reserved for preferred customers, and available at eye watering prices.

So, going the extreme either way isn't practical, we'd need to land somewhere near the middle ground.

More to the point of the comment, which you completely missed, they submitted a change to support a configuration which is as unlikely for Intel systems as it is impossible for AMD systems.

-

nogaard777

> USAFRet said: So he didn't "improve performance".
>
> Rather, he undid the performance limiter he pushed last week.
>
> It was fine, before he screwed with it.

And it was better after he fixed it. Don't let your hatred of a corporation make brainless assumptions of the individuals that work there. A large portion of Linux exists because of Nvidia engineers' contributions, and I'd wager far more than from AMD's much smaller team.

-

bit_user

> nogaard777 said: A large portion of Linux exists because of Nvidia engineers' contributions, and I'd wager far more than from AMD's much smaller team.

Why wager? If you know, you know. If you don't, well...

The latest data I found was from 2022:

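By changesets

| Employer | Number of changesets | Percentage of total |
| --- | --- | --- |
| Huawei Technologies | 1281 | 9.2% |
| Intel | 1254 | 9.0% |
| (Unknown) | 1097 | 7.9% |
| Google | 917 | 6.6% |
| Linaro | 837 | 6.0% |
| AMD | 750 | 5.4% |
| Red Hat | 672 | 4.8% |
| (None) | 564 | 4.0% |
| Meta | 414 | 3.0% |
| NVIDIA | 389 | 2.8% |

By lines changed

| Employer | Number of lines | Percentage of total |
| --- | --- | --- |
| Oracle | 91852 | 12.0% |
| AMD | 89761 | 11.7% |
| Google | 56504 | 7.4% |
| Intel | 44062 | 5.8% |
| (Unknown) | 33765 | 4.4% |
| Realtek | 33277 | 4.3% |
| Linaro | 31234 | 4.1% |
| Huawei Technologies | 27856 | 3.6% |
| NVIDIA | 25441 | 3.3% |
| Red Hat | 24073 | 3.1% |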

Source: https://lwn.net/Articles/915435/

So, AMD changed about 3.53 times as many lines as Nvidia, in 1.93 times as many changesets.

-
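The two ratios in the comment above can be reproduced from the quoted 2022 figures. The snippet below is only a quick arithmetic check; the dictionary layout and the `ratio` helper are illustrative, not taken from the thread.

```python
# Figures copied from the 2022 statistics quoted in the comment.
changesets = {"AMD": 750, "NVIDIA": 389}
lines_changed = {"AMD": 89761, "NVIDIA": 25441}


def ratio(counts: dict, a: str, b: str) -> float:
    """How many times larger employer a's count is than employer b's."""
    return counts[a] / counts[b]


changeset_ratio = ratio(changesets, "AMD", "NVIDIA")
lines_ratio = ratio(lines_changed, "AMD", "NVIDIA")

print(f"AMD vs Nvidia: {changeset_ratio:.2f}x changesets, {lines_ratio:.2f}x lines changed")
```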

qxp

> Rob1C said: I did say to be practical.
>
> Using your suggested ideology you say we wouldn't need 8-way CPUs to get enough slots for the DIMM, because we could use 8 SSDs. With modern SSDs you'd only need one. Access and execution speed would be slow.

If you use 8x 8TB PCIe 4.0 SSDs you get read bandwidth of at least 56 GB/s - not stellar, but definitely usable. Using Sabrent Rocket 8TB, this will set you back less than $10K.

> Rob1C said: Similarly we could use fewer DIMM slots by simply using larger DIMMs:
>
> https://www.tomshardware.com/news/samsung-talks-1tb-ddr5-modules-ddr5-7200
>
> Large DIMMs like that are reserved for preferred customers, and available at eye watering prices.
>
> So, going the extreme either way isn't practical, we'd need to land somewhere near the middle ground.
>
> More to the point of the comment, which you completely missed, they submitted a change to support a configuration which is as unlikely for Intel systems as it is impossible for AMD systems.

The change was likely in response to a customer request, as there are plenty of people who need systems with lots of RAM. And, of course, we will see these in wider use as prices drop, and by that time the issue will have been worked out.
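For readers following the capacity and bandwidth figures in this thread, here is a rough arithmetic sketch. The 7 GB/s per-drive read rate is an assumption back-derived from the 56 GB/s total quoted above, not a measured figure, and the variable names are illustrative. Note that drive capacities are given in decimal terabytes (TB), while the article's 64TiB limit uses binary tebibytes.

```python
TB = 10**12   # decimal terabyte, as used for SSD capacities
TIB = 2**40   # binary tebibyte, as used for the 64TiB figure in the article

drives = 8
capacity_per_drive_tb = 8
read_gb_per_s_per_drive = 7  # assumption: implied by 56 GB/s across 8 drives

total_bytes = drives * capacity_per_drive_tb * TB
total_tib = total_bytes / TIB
aggregate_read_gb_per_s = drives * read_gb_per_s_per_drive
full_read_seconds = total_bytes / (aggregate_read_gb_per_s * 10**9)

print(f"Total capacity: {total_bytes // TB} TB = {total_tib:.1f} TiB")
print(f"Aggregate read bandwidth: {aggregate_read_gb_per_s} GB/s")
print(f"Time to read everything once: {full_read_seconds / 60:.0f} minutes")
```

Under these assumptions, eight 8TB drives come to a little over 58 TiB, so they approach the 64TiB boundary discussed in the article without quite reaching it.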