FSR 4 modded to run on RDNA 2 GPUs improves image quality by "leaps and bounds," but carries 10% performance penalty — AMD's leaked source code turns into modding frenzy

Tested by multiple sources.

Last month, AMD accidentally made FSR 4 open source by publishing the entire source code on GitHub as part of its FidelityFX SDK. That pushed modders to quickly reverse-engineer how to run FSR 4 on previously incompatible hardware, but the hacks were limited to Linux. That changed just last week when u/AthleteDependent926 on Reddit figured out how to make it work on Windows. We saw a 12-20% performance decrease with it, and today new findings on older RDNA GPUs corroborate our testing.

There are actually three aspects to this story: first, we have an RX 6800 XT that showed a noticeable uptick in visual fidelity at the cost of FPS; second, ComputerBase tested a bunch of GPUs that saw similar declines in performance; lastly, a Reddit user also tried FSR 4 on their RX 6950 XT and praised its image quality while noting fewer frames achieved compared to XeSS. The focal point of the story, though, is the large overhead FSR 4 brings with it, even if it offers a much better-looking image than its predecessor.

User kkrace on Chiphell managed to get FSR 4 running on an RX 6800 XT, an RDNA 2 graphics card that lacks the hardware FSR 4 is designed for. As a result, they saw only 100-107 FPS in Stellar Blade when using FSR 4, compared to 110+ FPS with FSR 3. Even though those figures work out to only a ~3-10% decrease, the user claims they saw 10-20% worse frame rates; however, the image quality was significantly better. They therefore suggest switching to FSR 4 regardless because, at triple-digit FPS, you might as well take the slight performance hit for substantially upgraded visuals.
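As a rough check on the ~3-10% figure, the relative drop can be worked out directly from the reported frame rates. This is a minimal sketch: the function name `percent_drop` is ours, and it assumes the "110+ FPS" FSR 3 baseline is exactly 110, which the source does not state.

```python
def percent_drop(baseline_fps: float, new_fps: float) -> float:
    """Return the percentage decrease from baseline_fps to new_fps."""
    return (baseline_fps - new_fps) / baseline_fps * 100.0


# Assumption: treat the "110+ FPS" FSR 3 result as exactly 110 FPS.
FSR3_BASELINE = 110.0

best_case = percent_drop(FSR3_BASELINE, 107.0)   # upper end of the FSR 4 range
worst_case = percent_drop(FSR3_BASELINE, 100.0)  # lower end of the FSR 4 range

print(f"{best_case:.1f}% to {worst_case:.1f}%")  # prints "2.7% to 9.1%"
```

Because the baseline is a floor rather than an exact value, the real drop may sit somewhat above these numbers.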

The OP on Chiphell modded FSR 4 into Stellar Blade, a game that only supports FSR 3 natively, using a tweaked DLL that allowed it to work with OptiScaler. A guide to the process was later posted on Reddit by u/NaM_77, who listed an older driver as a prerequisite. They tested it on their RX 6950 XT and, while they provided no comparison numbers for FSR 3, the card still gained about 10% more frames with FSR 4 compared with native (TAA) results. Intel's XeSS, on the other hand, performed even better, but the user noted that it was unstable and not as good-looking.

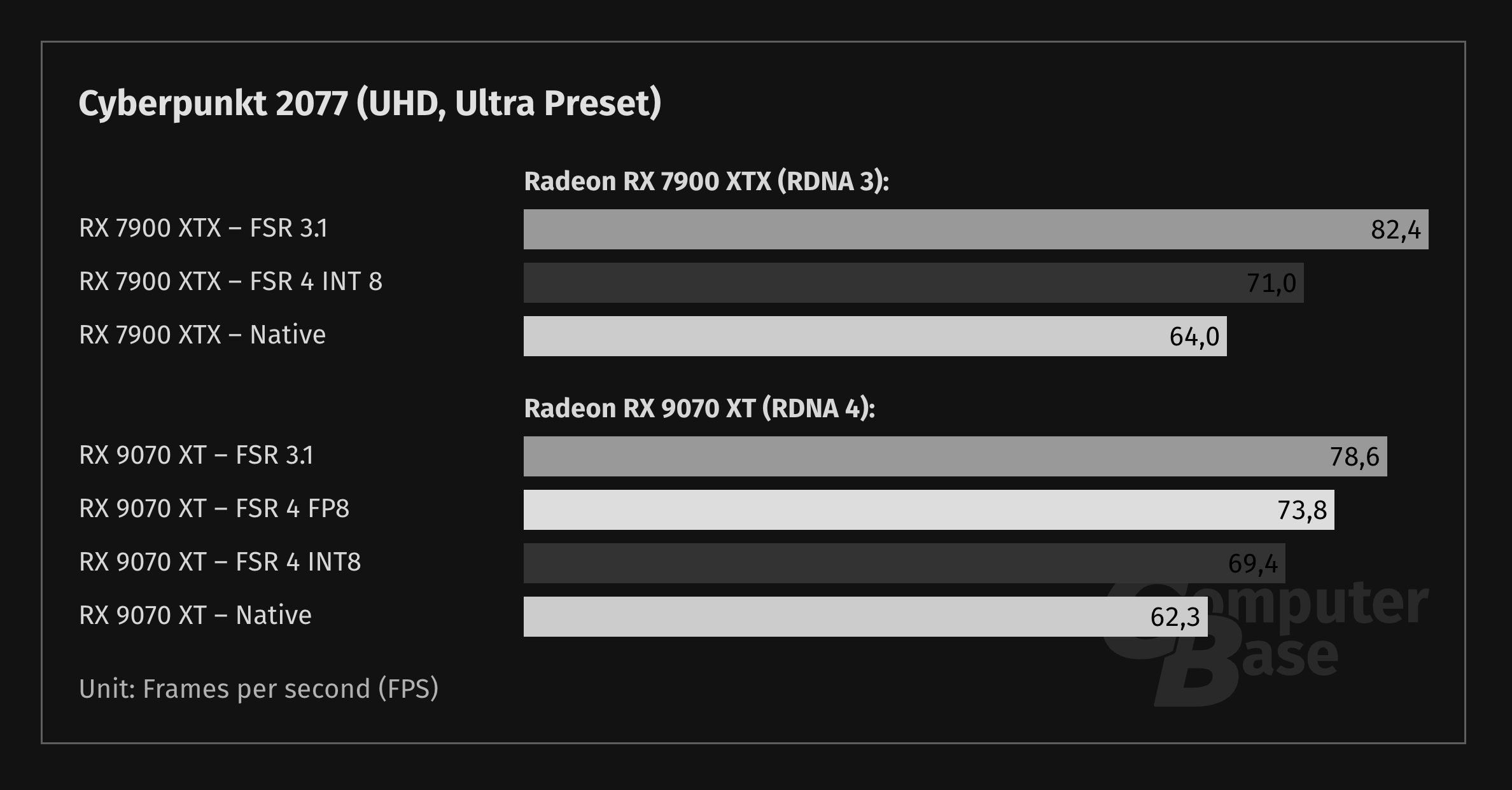

These findings are echoed by ComputerBase's testing, which didn't use an RDNA 2 GPU. Instead, the outlet pitted an RX 7900 XTX against an RX 9070 XT, AMD's latest flagship and a card purpose-built with FSR 4 in mind. Surprisingly, even the 9070 XT ran slower with FSR 4 than with FSR 3.1. In Cyberpunk 2077, tested at 4K Ultra settings, the 9070 XT netted 77 FPS using FSR 3.1 and only 74 FPS using FSR 4. More importantly, though, the RDNA 3-based 7900 XTX saw 16% fewer frames with FSR 4 than with FSR 3.1, a trade-off again justified by markedly better visuals.
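Another way to read the 9070 XT numbers is as extra time per frame rather than lost frames. The sketch below converts the two reported frame rates to frame times; the helper names are ours, and attributing the whole difference to the upscaler is an assumption, since the source gives only the FPS figures.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a frame rate in FPS to the time spent on one frame, in milliseconds."""
    return 1000.0 / fps


def added_frame_time_ms(fps_before: float, fps_after: float) -> float:
    """Extra milliseconds per frame when the frame rate falls from fps_before to fps_after."""
    return frame_time_ms(fps_after) - frame_time_ms(fps_before)


# Reported Cyberpunk 2077 results on the RX 9070 XT: 77 FPS (FSR 3.1) vs 74 FPS (FSR 4).
extra = added_frame_time_ms(77.0, 74.0)
print(f"{extra:.2f} ms per frame")  # prints "0.53 ms per frame"
```

The same calculation cannot be repeated for the 7900 XTX, because only its relative drop is given.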

All of this is to say that FSR 4 seems worth it for its image quality, though it demands significant compute regardless of the hardware it's running on. Modders were able to tweak FSR 4 to run using INT8 libraries, which themselves differ noticeably from one another. FP8 support is only available on RDNA 4, so RDNA 2 GPUs need to fall back on slower instructions like DP4a to run FSR 4, which explains the missing FPS.
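For readers unfamiliar with DP4a, it is generally described as a dot product of four packed 8-bit integers added to a 32-bit accumulator. The sketch below models that arithmetic in plain Python for illustration only. The function names are ours, it does not model 32-bit overflow, and it says nothing about how FSR 4's INT8 path actually uses the instruction.

```python
import struct


def pack_int8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word (little-endian)."""
    return struct.unpack("<I", struct.pack("<4b", *values))[0]


def unpack_int8x4(word):
    """Unpack a 32-bit word into a tuple of four signed 8-bit integers."""
    return struct.unpack("<4b", struct.pack("<I", word))


def dp4a(a_word, b_word, acc):
    """Return acc plus the dot product of the four int8 lanes in a_word and b_word.

    Illustrative only: a real GPU accumulator is 32 bits wide, and this
    sketch does not model overflow.
    """
    a = unpack_int8x4(a_word)
    b = unpack_int8x4(b_word)
    return acc + sum(x * y for x, y in zip(a, b))


a = pack_int8x4([1, 2, 3, 4])
b = pack_int8x4([5, 6, 7, 8])
print(dp4a(a, b, 10))  # 10 + (5 + 12 + 21 + 32) = 80
```

Each call handles four multiply-adds, so a network layer with many weights needs a long chain of such operations.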

It's important to note that AMD is also working on FSR Redstone as we speak; it's the company's next-gen upscaler designed to work on a myriad of GPUs, including non-AMD ones, which is perhaps why the Red Team hasn't extended FSR 4 support beyond the RX 9000 series yet. Though it's clear that if you're dedicated enough, that's not a hurdle — as long as you can live with worse performance in exchange for sharper fidelity.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

-

-Fran-

But the DLSS 4.0 upscaler can't run in older hardware! How is this possible for AMD to do?!

Well, I'm being obviously sarcastic, because we all know why.

I wish we didn't need to depend on upscalers for anything, but here we are, thanks to nVidia.

Regards. -

The Historical Fidelity

I second this comment!

-Fran- said: But the DLSS 4.0 upscaler can't run in older hardware! How is this possible for AMD to do?!

Well, I'm being obviously sarcastic, because we all know why.

I wish we didn't need to depend on upscalers for anything, but here we are, thanks to nVidia.

Regards. -

Hella_D

Id accept the FPS decrease if it means better visuals, even as a gamer ill take image quality over FPS anyday. Im weird like that.

Provided im getting a solid 45+ FPS with 1% lows higher than, say... 25FPS with no load-hitching, im happy. -

ezst036

There is of course a give and a take to it.

Any company, any chip maker (in this case AMD) wants to promote its newest products and comes up with ways to make those new products more attractive. So it makes sense that they may not backport their tech all the way to Polaris GPUs. Or in this case, RDNA 2.

However if some user is crafty enough to get it to work, then having the source code they can (if they think they can) make some attempts to get it working even if that carries a performance penalty.

AMD actually benefits from this. In contrast to an Nvidia product which "what you get" is "what you get", users actually control their own destiny when the source code is open and people unaffiliated with the company can add their changes accordingly.

(A small note - I think this code was leaked, not actually faithfully provided by AMD. That is an important item to add context) -

-Fran-

The code leaked already implemented the FSR4 passes using INT8, but this still hasn't been included in the main drivers for whatever reason (well, we know why).

ezst036 said: There is of course a give and a take to it.

Any company, any chip maker (in this case AMD) wants to promote its newest products and comes up with ways to make those new products more attractive. So it makes sense that they may not backport their tech all the way to Polaris GPUs. Or in this case, RDNA 2.

However if some user is crafty enough to get it to work, then having the source code they can (if they think they can) make some attempts to get it working even if that carries a performance penalty.

AMD actually benefits from this. In contrast to an Nvidia product which "what you get" is "what you get", users actually control their own destiny when the source code is open and people unaffiliated with the company can add their changes accordingly.

(A small note - I think this code was leaked, not actually faithfully provided by AMD. That is an important item to add context)

This was just grabbing the code, pretty much as it was exposed, then compiling it to produce the necessary libraries and then creating the switcheroo of it so the GPU could use the new path.

Regards. -

-Fran-

Relevant to the discussion:

https://www.youtube.com/watch?v=gTrfnLvZbu4

Regards. -

Moores_Ghost

Most of us are weird like that. 1-10fps hit for better visuals? All day.

Hella_D said: Id accept the FPS decrease if it means better visuals, even as a gamer ill take image quality over FPS anyday. Im weird like that.

Provided im getting a solid 45+ FPS with 1% lows higher than, say... 25FPS with no load-hitching, im happy. -

mitch074

The code was published by AMD, but they pulled it right after. Someone managed to fork it first, so it's officially out but unsupported.

Moores_Ghost said: Most of us are weird like that. 1-10fps hit for better visuals? All day.

Tests show that it works well on RDNA3, but the performance impact on RDNA2 is significant and, depending on the game, it may lead to artifacting.