AMD GPUs 2016: HDR, FreeSync Over HDMI And New Standards

AMD launched its Fiji GPU—the company’s newest design—about six months ago. It fared well, besting Nvidia’s GeForce GTX 980 Ti gaming card at 3840x2160 across our benchmark suite. But AMD’s management knows that there’s still a lot of work to do. Most of the Radeon lineup is based on much older silicon, and although those GPUs remain competitive through strategically timed price cuts, they’re also showing their age. For instance, Nvidia’s second-gen Maxwell processors dating back over a year support HDMI 2.0, whereas all of the 300-series Radeons are limited to HDMI 1.4.

That’s going to change in 2016. Over the next few months, AMD plans to divulge more details about its next-generation architecture, including improvements made to the graphics processing, fixed-function media blocks, memory subsystem and compute capabilities. For now, though, the emphasis is on a beefier display controller and what it enables. Not only will the next-gen GPU incorporate HDMI 2.0a, but it’ll also get DisplayPort 1.3.

New Standards

HDMI 2.0 increases the interface’s maximum pixel clock to 600 MHz (from 340 MHz in version 1.4), pushing peak throughput to 18 Gb/s (from 10.2 Gb/s). That’s enough bandwidth to facilitate 4096x2160 at 60 Hz. HDMI 2.0a builds on the 2.0 specification by adding support for HDR formats. You can expect HDR to be a big bullet point for AMD’s next-generation GPUs as a result of their augmented display controller.
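For readers who want to check the arithmetic, here is a rough sketch of where those throughput figures come from. It assumes the usual TMDS arrangement of three data channels carrying 10-bit characters, and it uses the CTA-861 total timing of 4400x2250 for 4096x2160 at 60 Hz; the function names are ours, not part of any specification.

```python
# HDMI sends video over three TMDS data channels. Each 8-bit value is
# encoded as a 10-bit character, so raw link rate = clock * 3 * 10.
TMDS_CHANNELS = 3
BITS_PER_CHARACTER = 10


def tmds_link_rate_gbps(clock_mhz: float) -> float:
    """Raw link rate in Gb/s for a given TMDS clock in MHz."""
    return clock_mhz * 1e6 * TMDS_CHANNELS * BITS_PER_CHARACTER / 1e9


def required_clock_mhz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixel clock in MHz for a mode, counting active plus blanking pixels."""
    return h_total * v_total * refresh_hz / 1e6


hdmi14_gbps = tmds_link_rate_gbps(340)
hdmi20_gbps = tmds_link_rate_gbps(600)

# Assumed timing: 4096x2160 at 60 Hz with 4400x2250 total pixels per frame.
clock_4k60 = required_clock_mhz(4400, 2250, 60)

print(f"HDMI 1.4: {hdmi14_gbps:.1f} Gb/s, HDMI 2.0: {hdmi20_gbps:.1f} Gb/s")
print(f"4096x2160 at 60 Hz needs about {clock_4k60:.0f} MHz at 8 bits per channel")
```

Under those assumptions, the 60 Hz mode lands just under the 600 MHz ceiling and well above the 340 MHz limit of version 1.4, which matches the article's point about why HDMI 2.0 is needed.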

As mentioned, Nvidia’s Maxwell-based GPUs support HDMI 2.0; they cannot be retrofitted to support 2.0a. Additionally, only certain models include HDCP 2.2 support for playback of protected 4K content. Although none of AMD’s current processors incorporate HDCP 2.2, its next-gen GPUs will.

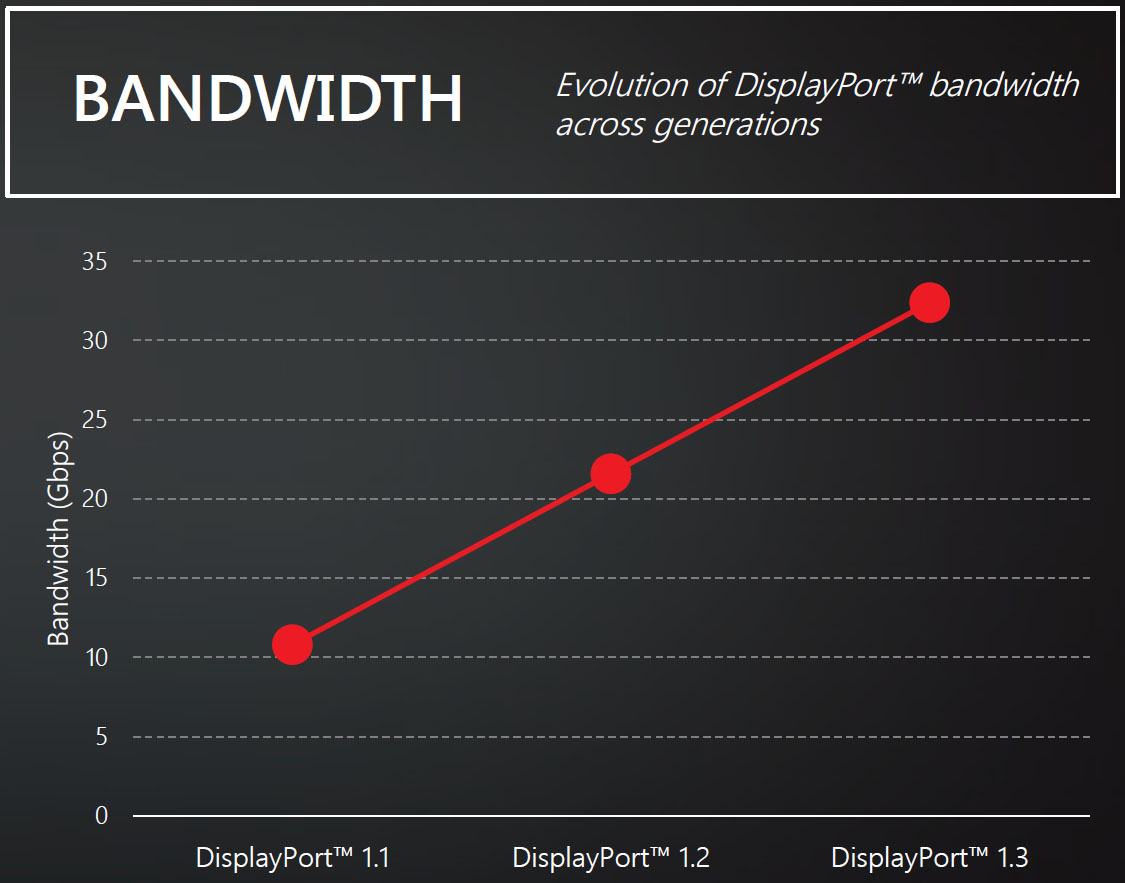

The DisplayPort 1.3 specification is even more aggressive than HDMI. Its High Bit Rate 3 mode pushes up to 8.1 Gb/s per lane, or a total of 32.4 Gb/s across four lanes. With that much available bandwidth, the output options start looking pretty wild. How does 5120x2880 at 60 Hz sound? Or 4K on a 120 Hz panel? Those aren’t hypotheticals; AMD expects single-cable 5K screens by mid-2016, and 2160p120 displays supporting dynamic refresh rates (FreeSync) by the end of 2016.
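A back-of-the-envelope check shows why those modes become plausible. The sketch below assumes 8b/10b channel coding (so 80 percent of the raw rate carries payload) and 24 bits per pixel, and it counts only active pixels, so real-world requirements with blanking are somewhat higher. The helper names are illustrative.

```python
LANES = 4
HBR3_GBPS_PER_LANE = 8.1
ENCODING_EFFICIENCY = 8 / 10  # assumed 8b/10b channel coding


def raw_link_gbps() -> float:
    """Total raw link rate across all lanes."""
    return LANES * HBR3_GBPS_PER_LANE


def payload_gbps() -> float:
    """Usable rate after channel-coding overhead."""
    return raw_link_gbps() * ENCODING_EFFICIENCY


def active_pixel_gbps(width: int, height: int, refresh_hz: int, bpp: int = 24) -> float:
    """Data rate of the visible pixels only (blanking ignored)."""
    return width * height * refresh_hz * bpp / 1e9


def fits(width: int, height: int, refresh_hz: int, bpp: int = 24) -> bool:
    """True if the active-pixel rate is below the usable payload rate."""
    return active_pixel_gbps(width, height, refresh_hz, bpp) <= payload_gbps()


for mode in [(5120, 2880, 60), (3840, 2160, 120), (7680, 4320, 60)]:
    rate = active_pixel_gbps(*mode)
    print(mode, f"{rate:.2f} Gb/s", "fits" if fits(*mode) else "does not fit")
```

With these assumptions, both 5K at 60 Hz and 4K at 120 Hz fall under the roughly 25.9 Gb/s of usable bandwidth, while uncompressed 8K at 60 Hz does not.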

Doubling Down On FreeSync

Although it took longer for the FreeSync ecosystem to coalesce around AMD’s vision, the technology is certainly building momentum.

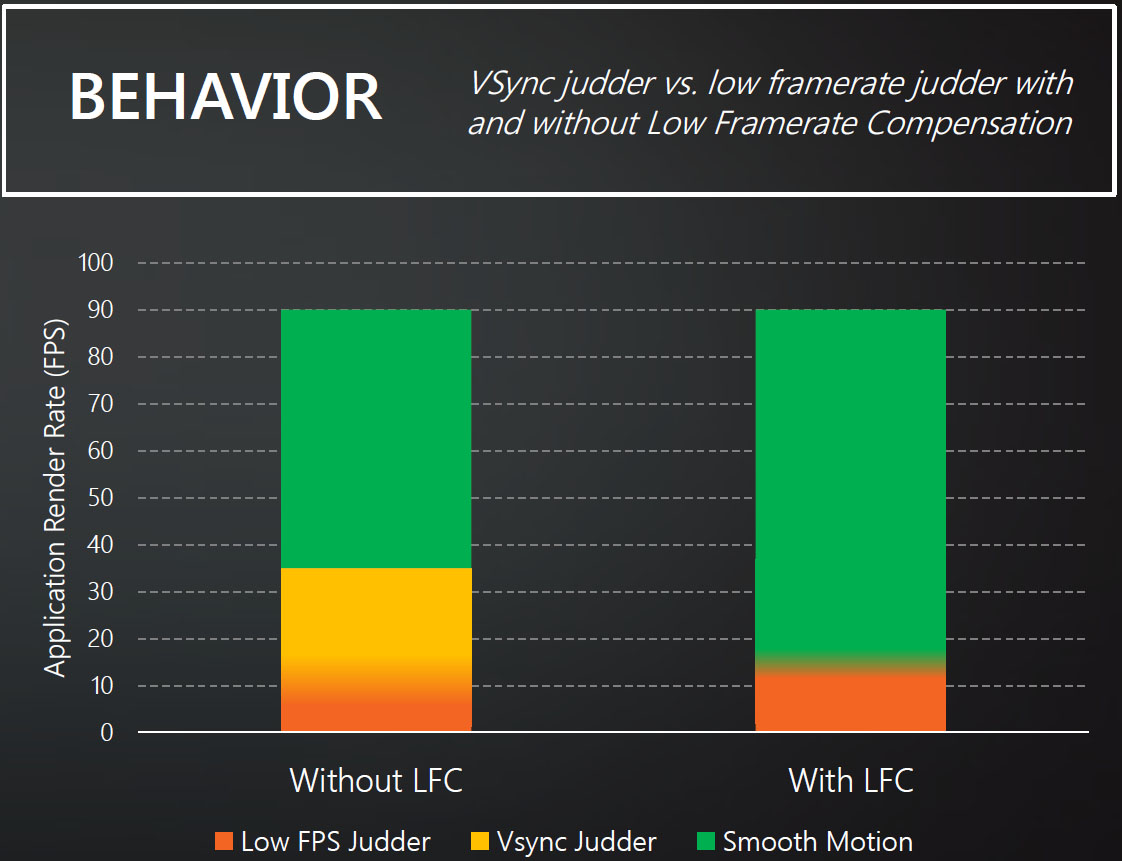

The addition of low-framerate compensation in its Radeon Software Crimson Edition driver should ameliorate the tearing/judder issues previously experienced on FreeSync-capable systems that dropped below the display’s minimum dynamic refresh rate. In short, the feature employs an algorithm that monitors application performance. Should the output fall below the variable refresh floor, frames are inserted to maintain smoothness (with V-sync on) and reduce tearing (with V-sync off). LFC is automatically enabled on existing FreeSync-capable displays with maximum refresh rates greater than 2.5x their minimum.
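To make the idea concrete, here is a minimal sketch of the logic described above. It is not AMD's driver algorithm, which the company has not detailed here; it assumes the simplest possible approach of repeating each frame a whole number of times so the effective refresh rate lands back inside the panel's variable range. The function names and the example panel ranges are ours.

```python
import math


def lfc_supported(min_hz: float, max_hz: float, ratio: float = 2.5) -> bool:
    """Eligibility rule from the article: max refresh must exceed 2.5x min."""
    return max_hz > ratio * min_hz


def lfc_refresh(frame_rate: float, min_hz: float, max_hz: float):
    """Return (multiplier, refresh_hz) for a given application frame rate.

    Assumption: each rendered frame is shown `multiplier` times so that
    frame_rate * multiplier falls inside [min_hz, max_hz].
    """
    if frame_rate <= 0:
        raise ValueError("frame_rate must be positive")
    if frame_rate >= min_hz:
        # Already inside (or above) the range: no repetition needed.
        return 1, min(frame_rate, max_hz)
    multiplier = math.ceil(min_hz / frame_rate)
    refresh = frame_rate * multiplier
    if refresh > max_hz:
        raise ValueError("refresh range too narrow for frame repetition")
    return multiplier, refresh


# A 35 Hz to 90 Hz panel qualifies because 90 > 2.5 * 35 = 87.5.
print(lfc_supported(35, 90))
# At 20 FPS, showing each frame twice gives a 40 Hz refresh.
print(lfc_refresh(20, 35, 90))
# A hypothetical 40 Hz to 60 Hz panel would not qualify.
print(lfc_supported(40, 60))
```

The 2.5x rule leaves headroom: once the ceiling is more than double the floor, some whole-number multiple of any low frame rate is guaranteed to fit inside the range.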


The corner case where performance drops too low didn’t affect our FreeSync Vs. G-Sync event, because the Asus MG279Qs we used had a 35 Hz minimum that was never breached. Rather, the issue identified by several of our readers was the panel’s 90 Hz VRR ceiling, above which they experienced tearing with V-sync turned off. Even this should become less of an issue moving forward, though; Nixeus recently launched its NX-VUE24, a 1920x1080 monitor with a 30 Hz to 144 Hz variable refresh range. We haven’t tested it yet, and the 24” TN-based panel doesn’t sound like our ideal solution. But by bringing it up, AMD clearly knows it needs to push for VRRs that match G-Sync’s capabilities.

As it looks to expand refresh ranges, AMD is also trying to make FreeSync more accessible by enabling the technology over HDMI. According to David Glen, senior fellow in the RTG’s display technologies group, scaler vendors MStar, Novatek and Realtek are already onboard. How is AMD accomplishing this when HDMI does not support variable refresh? “The HDMI specification permits something called vendor-specific extensions,” Glen stated. “They are fully compliant with the HDMI standard. We’ve used this aspect of HDMI to enable FreeSync over (the interface)… If at some future time the HDMI spec allows variable refresh rate, our graphics products, we fully expect, will be able to support both the HDMI standard method and the method we’re introducing now.”

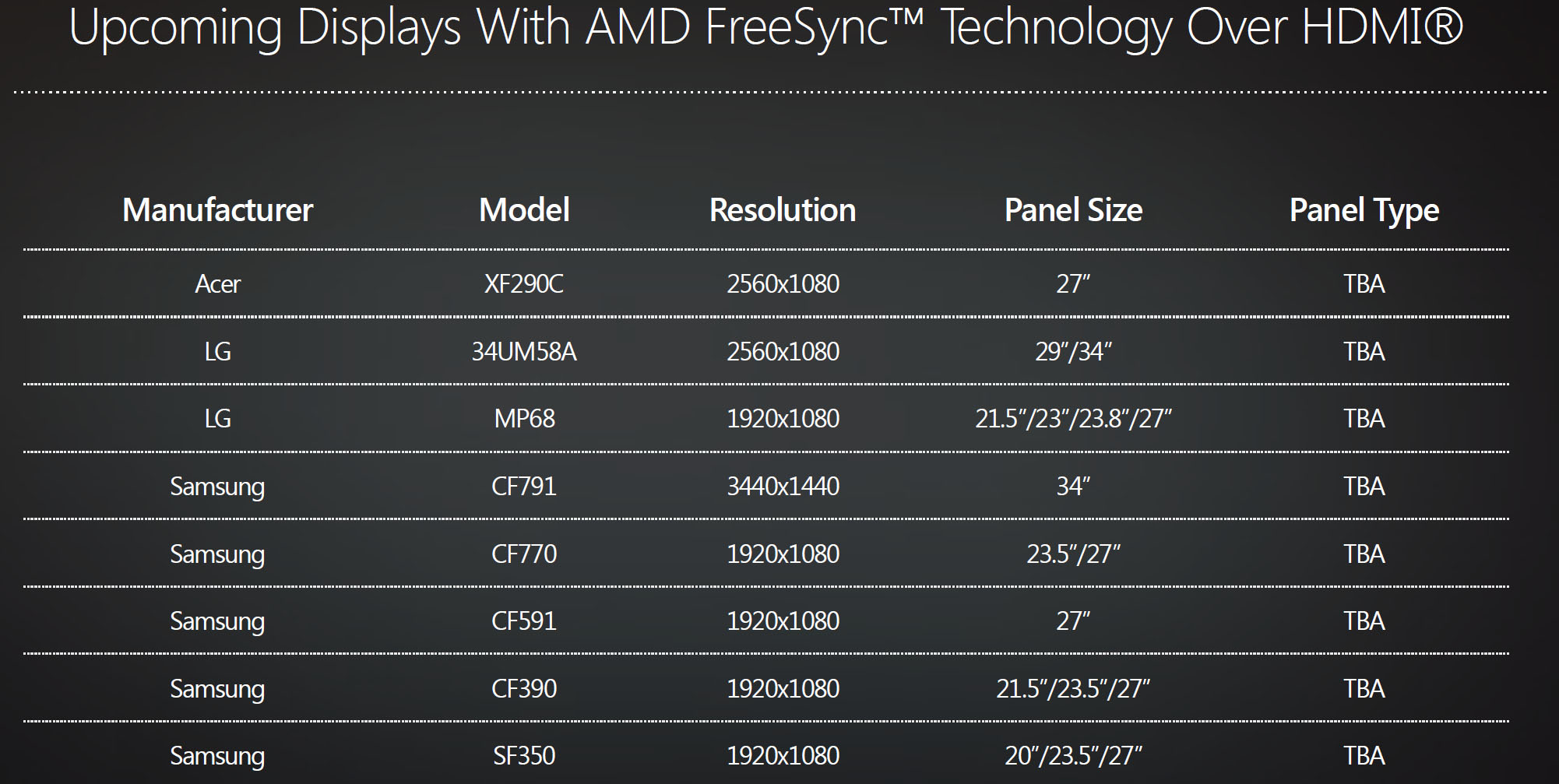

Compatible monitors are expected to share the graphics hardware’s flexibility. Acer, LG and Samsung are already announcing a combined eight models supporting FreeSync over HDMI, ranging from 1920x1080 to 3440x1440. Although we don’t have specifics on the panel types being used, AMD’s David Glen told us to expect availability starting in Q1 2016.

The bad news, of course, is that existing FreeSync-capable displays with HDMI ports probably won’t acquire this functionality. Although it doesn’t sound like there’s anything preventing firmware updates with the vendor-specific extensions, AMD’s Glen suggests that it’s more likely we’d see current models revised to include the feature.

On the GPU side, FreeSync over HDMI will work on any Radeon card capable of variable refresh over DisplayPort, including the full lineup of GCN 1.1- and 1.2-based processors. Tahiti, Pitcairn, Cape Verde and their rebranded derivatives aren’t compatible.

High Dynamic Range Is A Go

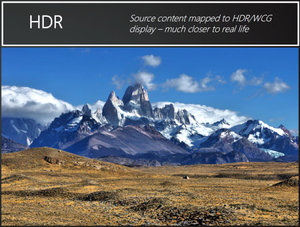

The consumer electronics industry is in the throes of embracing high dynamic range as “the next big thing” for reproducing more lifelike images, and AMD wants everyone to know that it’s onboard as well.

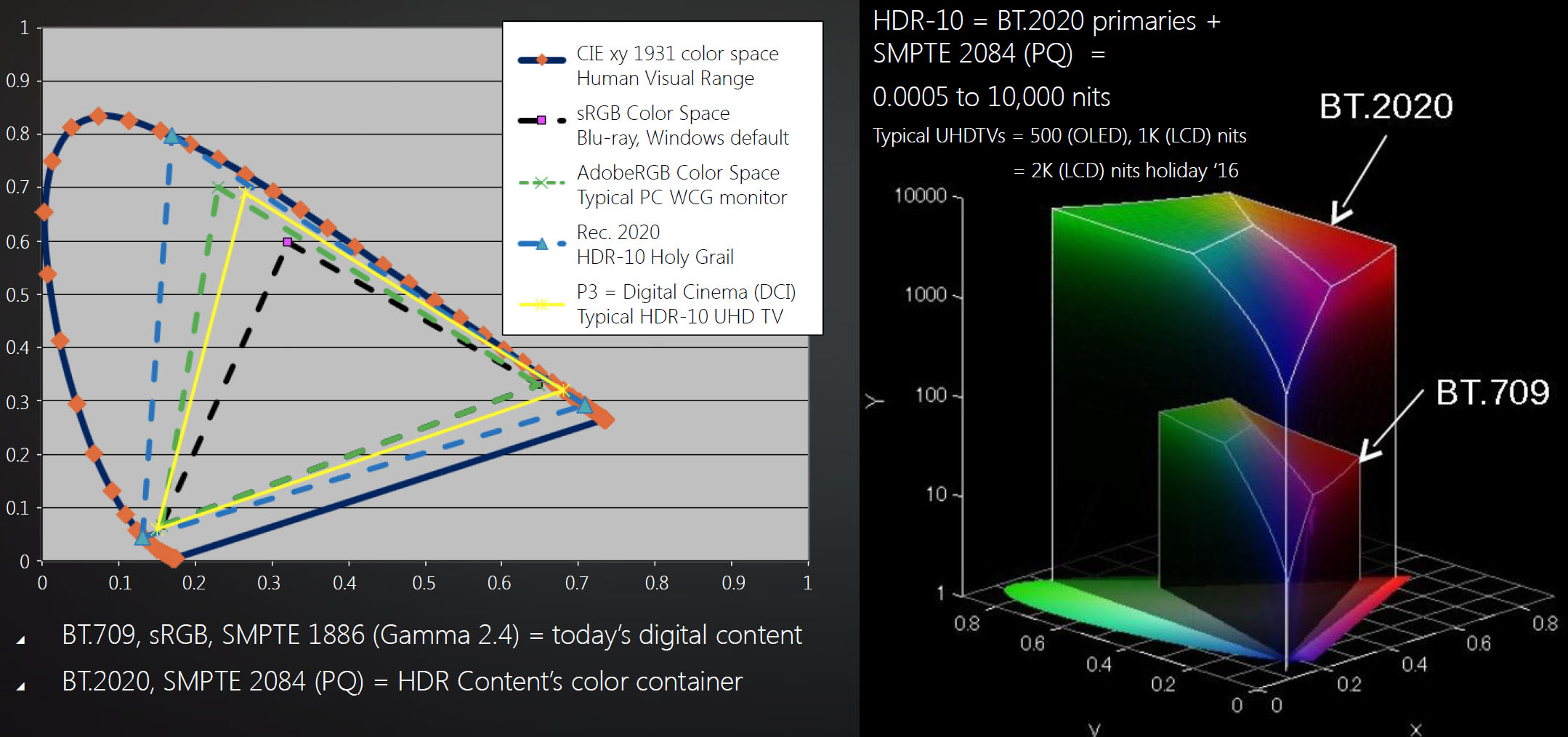

Getting there is going to take a lot of effort. Content needs to be mastered, distributed and displayed differently than what we’re accustomed to. This time next year, we’ll hopefully see LCD-based screens able to hit 2000 nits of luminance—a big improvement over the 471 cd/m² attained by the brightest UHD monitor we’ve reviewed thus far. But even that’s a far cry from the BT.2020 color space.

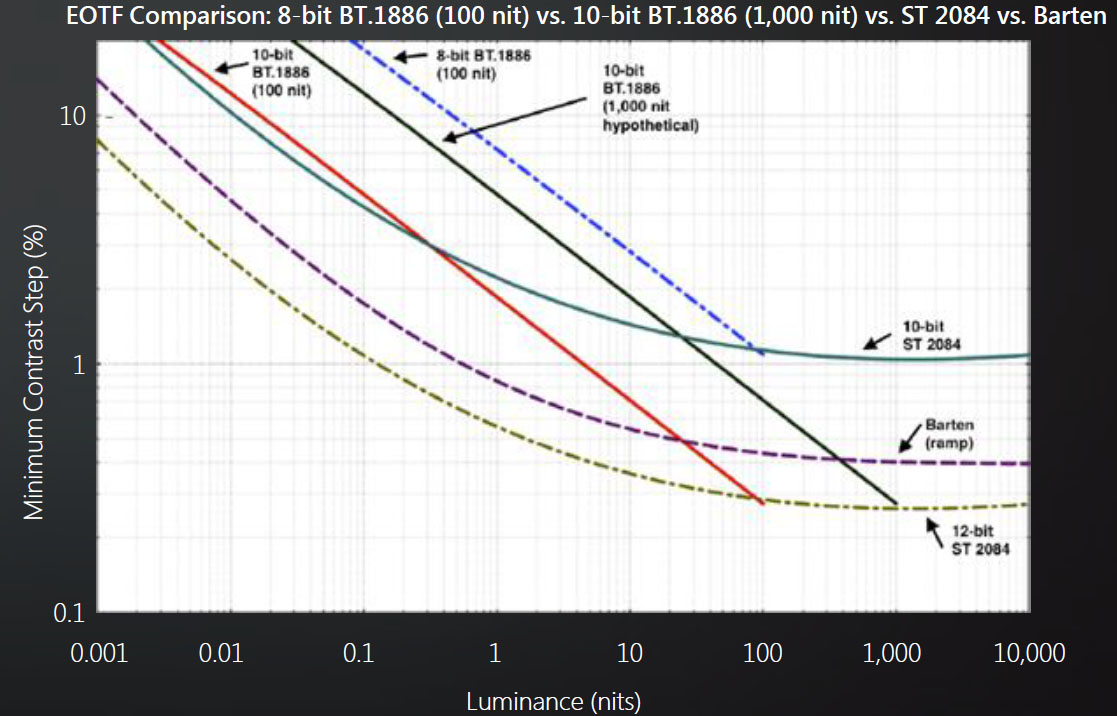

Still, Kim Meinerth, senior fellow and system architect, assured us that AMD put significant effort into building a display pipeline that supports the Society of Motion Picture and Television Engineers’ 10-bit ST 2084 electro-optical transfer function, which is much better at mimicking human vision than today’s BT.1886 curve.
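For the curious, the ST 2084 curve (often called PQ, for perceptual quantizer) can be written in a few lines. The sketch below uses the constants published in the standard and maps a normalized signal value between 0 and 1 to absolute luminance in nits, up to a 10,000-nit peak. It says nothing about how AMD's display pipeline implements the curve in hardware, and the function names are ours.

```python
# Constants from SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ signal in [0, 1] to luminance in cd/m^2 (nits)."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be in [0, 1]")
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Map luminance in nits back to a normalized PQ signal in [0, 1]."""
    if not 0.0 <= nits <= PEAK_NITS:
        raise ValueError("nits must be in [0, 10000]")
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


for nits in (100.0, 471.0, 2000.0, 10000.0):
    print(f"{nits:>7.0f} nits -> signal {pq_inverse_eotf(nits):.3f}")
```

Running the loop shows how the curve spends its code values: roughly half of the signal range covers luminance up to 100 nits, where the eye is most sensitive to small steps, and the remainder stretches across the highlights.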

Existing Radeon R9 300-series cards can be made to support HDR gaming and photos with 10 bits per channel at 1080p120, 1440p60 and 2160p30. Playing back HDR content at 2160p60 is going to require HDMI 2.0a or DisplayPort 1.3, though, and for that you’ll need to wait for AMD’s next-gen GPUs. Not that we’re holding our breath—Meinerth expects the bring-up for mass market HDR-capable displays in the second half of 2016.

For what it’s worth, Nvidia’s GM2xx processors can also be made to support HDR gaming and photos at 10 bits per component, though it remains to be seen how the company sets itself up against AMD in 2016.

-

red77star I am still gaming on 1080p which I found more than enough. 4K monitors are too expensive and not really ready for gamng yet but again why do I need 4K on screen where 1080p is just enough. -

Larry Litmanen (quoting red77star): "I am still gaming on 1080p which I found more than enough. 4K monitors are too expensive and not really ready for gamng yet but again why do I need 4K on screen where 1080p is just enough."

My college professor once said, if you feed a man a burger his entire life he will be perfectly happy, but if you give him fillet mignon he will never go back to regular burger meat.

-

gggplaya (quoting red77star): "I am still gaming on 1080p which I found more than enough. 4K monitors are too expensive and not really ready for gamng yet but again why do I need 4K on screen where 1080p is just enough."

4k is not needed unless the actual textures are higher than 1080p density. Otherwise, all you'll get are scaled up textures with less aliasing. It'll look a little cleaner in that respect, but not "better" enough to kill your fps performance and spend big bucks on a monitor. -

2Be_or_Not2Be (quoting red77star): "I am still gaming on 1080p which I found more than enough. 4K monitors are too expensive and not really ready for gamng yet but again why do I need 4K on screen where 1080p is just enough."

(quoting Larry Litmanen): "My college professor once said, if you feed a man a burger his entire life he will be perfectly happy, but if you give him fillet mignon he will never go back to regular burger meat."

Sure he will, when he only has $5 to spend. Your professor might have caviar tastes, but sometimes you only have a beer budget. -

cats_Paw I am gaming on 1080p and will most likely stay there for quite a while.

This is because Plasma color fidelity is too good compared to LEDs (OLEDs are not yet reliable) to use LEDs, even if 4k vs 1080p.

That being said, its hard to even get a plasma nowdays so im guessing the push will be for 4K.

This is AMAZINGLY good for me. While ppl will have to buy the most expensive GPUs to play 4k ULTRA details Ill be buying budget GPUs and still playing on ULTRA.

It took years to go from 720p to 1080p as a standard (TV still did not catch up, but thats nothing surprising as public administrations always stay behind decades of the mainstream).

Looks good so far to me, but untill AMD and nVidia come up with something incredible (VR maybe?) Im not jumping of the hype train. -

mosc 4k is 4x 1080P which requires no interpolation. 4K displays work great as general purpose high res desktops and game with zero interpolation at 1080P. -

Wisecracker It's just a struggle to bring everything up to speed for 4K@60 4:4:4 with HDCP 2.2 support.

Keep pounding the good work THG in trying to educate folks. There will still be many angry, confused campers but they'll simply have to get over it.

Read and understand the specs!

-

2Be_or_Not2Be red77star: "I am still gaming on 1080p which I found more than enough. 4K monitors are too expensive and not really ready for gamng yet but again why do I need 4K on screen where 1080p is just enough."

17090332 said:You don't need it anymore than you need 1080p over 720p or 1368x768.

I think you might agree with red77star's comment if we include the following context: at this *current* point in time, with today's cost of 4K monitors & the cost of GPU power to run at 4k res w/60fps, he's fine with 1080p.

I would agree. When 4k gaming (monitor + GPU) sell at a much lower price point and games themselves commonly have detailed 4k graphics (not just low-detail rendered at 4k), then you will have the incentive to go 4k over 1080p.