HDMI 2.2 is here with new 'Ultra96' cables: up to 16K resolution, a 96 Gbps maximum bandwidth that beats DisplayPort, backwards compatibility, and more

Full finalized spec released.

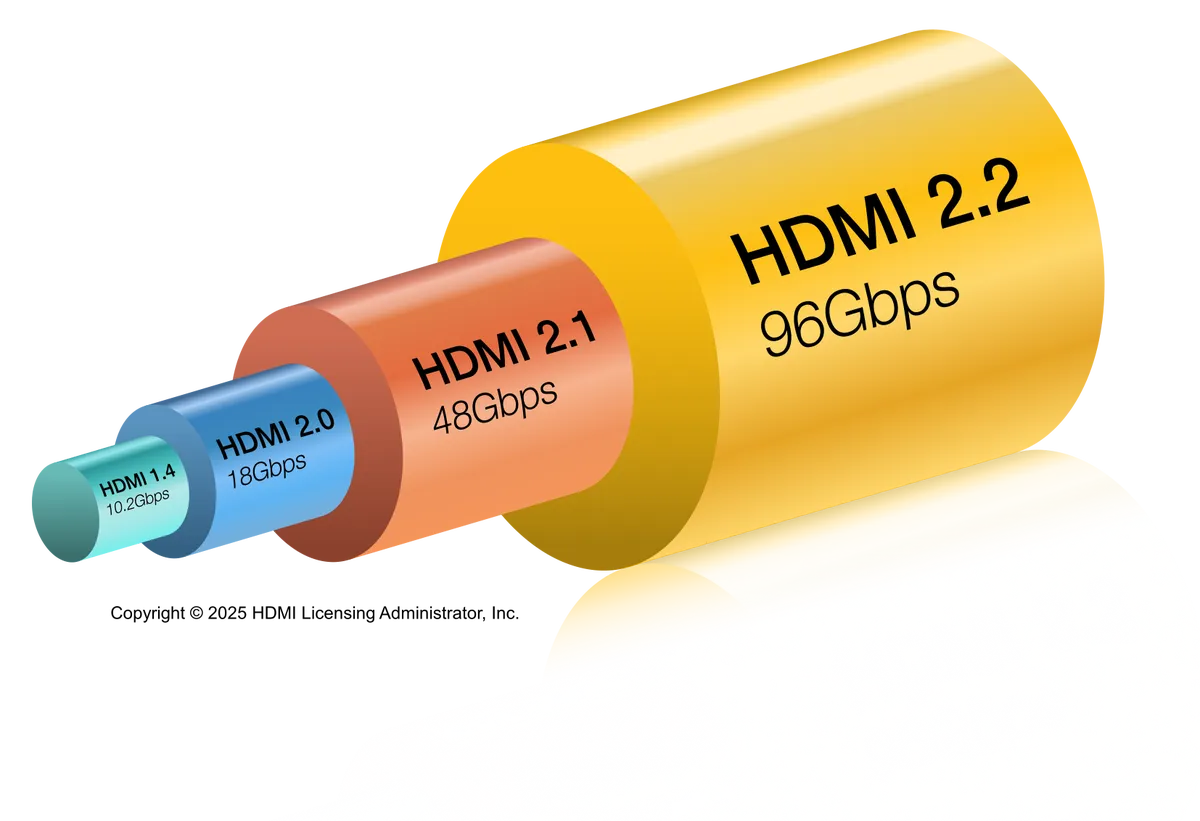

The HDMI Forum has officially finalized HDMI 2.2, the next generation of the video standard, rolling out to devices throughout the rest of this year. We already saw a bunch of key announcements at CES in January, but now that the full spec is here, it's confirmed that HDMI 2.2 will eclipse DisplayPort in maximum bandwidth support thanks to the new Ultra96 cables.

What the heck is an "Ultra96" cable?

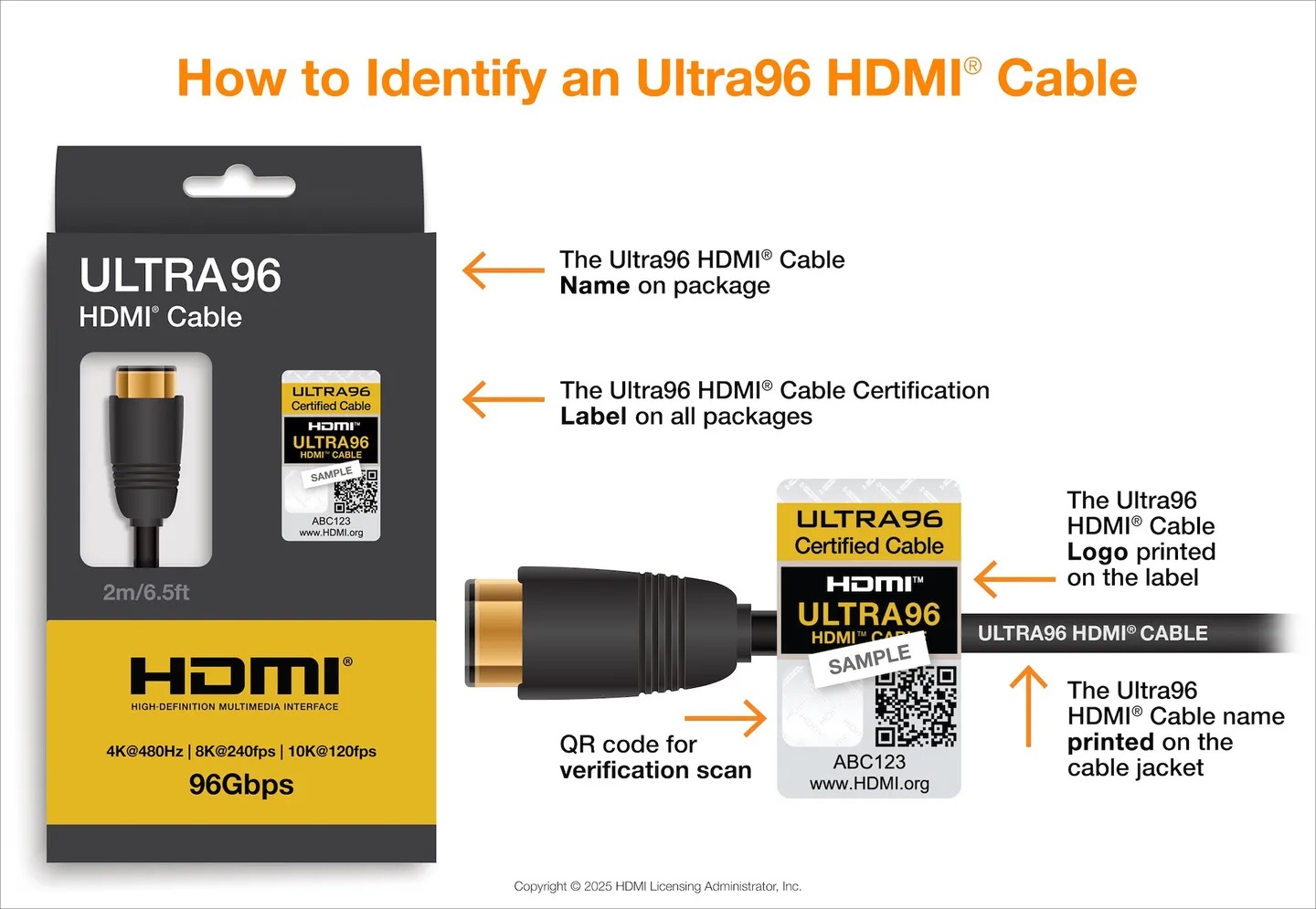

The key improvement with HDMI 2.2 over its predecessor, HDMI 2.1, is the doubling of bandwidth from 48 Gbps to 96 Gbps. To ensure a consistent experience across all HDMI 2.2 devices, you'll be seeing new HDMI cables with an "Ultra96" label denoting that transfer rate. These cables will be certified by the HDMI Forum with clear branding that should make them easy to identify.

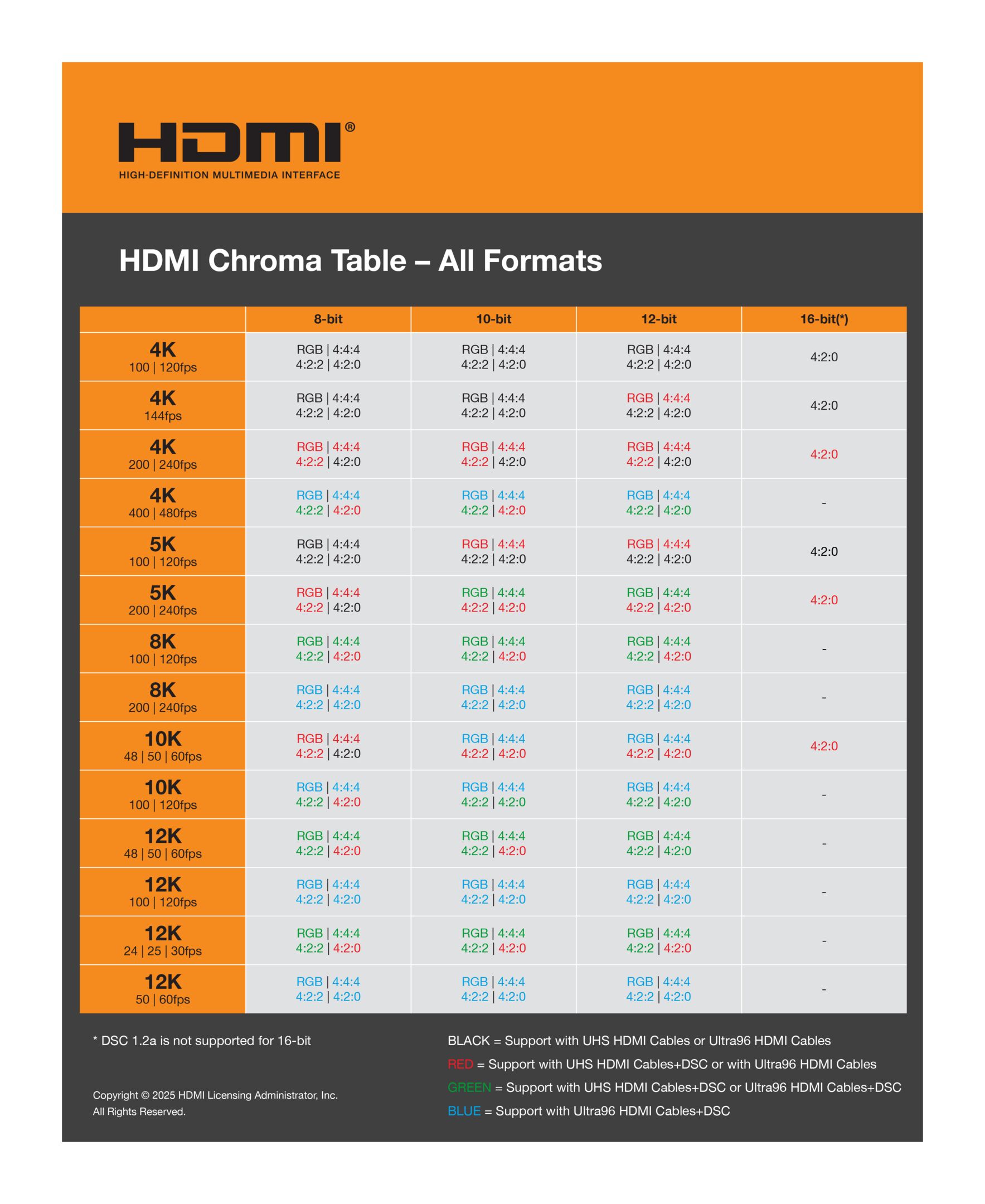

This new bandwidth unlocks 16K resolution at 60 Hz and 12K at 120 Hz, though only with chroma subsampling. That said, you can expect 4K at 240 Hz with up to 12-bit color depth without any compression. DisplayPort 2.1b UHBR20 was the first to do this, with some monitors already available on the market, but that standard is limited to 80 Gbps. HDMI 2.2's 96 Gbps is 20% higher, which even allows for uncompressed 8K at 60 Hz.
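To get a feel for these numbers, here is a rough back-of-the-envelope sketch of the raw data rate for a few video modes. It counts active pixels only and ignores blanking intervals and link-encoding overhead, so real requirements are somewhat higher and the usable payload of a 96 Gbps link is somewhat lower. The function name is illustrative, and the 15360×8640 pixel count for "16K" is an assumption.

```python
# Lower-bound estimate of video data rate: active pixels only.
# Assumption: blanking and link-encoding overhead are ignored.

HDMI_2_2_GBPS = 96  # maximum link rate quoted for HDMI 2.2

# Average samples per pixel for common chroma formats (3 = no subsampling).
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_pixel_rate_gbps(width, height, refresh_hz, bits_per_sample, chroma="4:4:4"):
    """Return the active-pixel data rate in Gbps for one video mode."""
    bits_per_pixel = bits_per_sample * SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_pixel / 1e9


modes = [
    ("4K 240 Hz, 12-bit", 3840, 2160, 240, 12),
    ("8K 60 Hz, 12-bit", 7680, 4320, 60, 12),
    ("16K 60 Hz, 8-bit", 15360, 8640, 60, 8),  # assumed 16K pixel count
]

for name, width, height, hz, bits in modes:
    rate = active_pixel_rate_gbps(width, height, hz, bits)
    verdict = "under" if rate <= HDMI_2_2_GBPS else "over"
    print(f"{name}: {rate:.2f} Gbps ({verdict} the 96 Gbps link rate)")
```

4K at 240 Hz and 8K at 60 Hz have the same pixel rate, which is why both land at roughly 72 Gbps with 12-bit color, while full-color 16K at 60 Hz comes out near 191 Gbps and needs chroma subsampling or compression to get anywhere close.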

It's important to keep in mind that only cables explicitly labeled Ultra96 can allow for all this video goodness. As always, the HDMI Forum will allow manufacturers to make the claim that their devices are HDMI 2.2 compliant, but without actually enforcing the bandwidth rule. Therefore, it's important to look for the Ultra96 label so you know you're getting the real deal.

Thankfully, though, if you don't care about the super high resolutions or frame rates, HDMI 2.2 will be backwards compatible. That means you can use the new cables with older ports (or new ports with older cables) and get the lowest common denominator experience. For instance, if you plug an HDMI 2.2 Ultra96 cable into a TV with only HDMI 2.1 support, you should still get HDMI 2.1 features and speeds without any issues.
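The "lowest common denominator" idea can be sketched in a couple of lines: the slowest element in the chain caps the whole link. This is a simplification for illustration only. The function name is hypothetical, and real devices negotiate among a set of discrete link rates instead of taking a plain minimum.

```python
def effective_link_gbps(source_gbps, cable_gbps, sink_gbps):
    """The slowest of source, cable, and display limits the link."""
    return min(source_gbps, cable_gbps, sink_gbps)


# HDMI 2.2 source and Ultra96 cable, but an HDMI 2.1 TV (48 Gbps).
print(effective_link_gbps(96, 96, 48))

# HDMI 2.2 source and TV joined by an older 48 Gbps cable.
print(effective_link_gbps(96, 48, 96))
```

Both cases come out at 48 Gbps, which matches the HDMI 2.1 experience described above.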

Apart from backwards compatibility, HDMI 2.2 will bring another comfort feature called "Latency Indication Protocol" (LIP) that will help keep audio and video in sync. This only really matters for large, complicated home theater setups incorporating a lot of speaker channels with receivers and projectors (or screens). If you're part of that crowd, expect reduced lip-sync issues across the board.

AMD Might Be the First to Adopt HDMI 2.2

The first HDMI 2.2 devices will likely start to roll out toward the last quarter of the year, with AMD's upcoming UDNA GPUs rumored to be among the first to adopt the standard. Little is known about the next-gen Radeon cards, but an earlier leak pointed to limited HDMI 2.2 support for UDNA that would restrict the maximum bandwidth on most models.


As it stands right now, AMD's Radeon Pro cards are the only ones that support the full DisplayPort 2.1b UHBR20 standard, with the RX 9000 series being limited to only 54 Gbps (down from the 80 Gbps maximum). It remains to be seen if AMD pulls something similar with UDNA and only offers the full HDMI 2.2 bandwidth with its workstation GPUs.
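For context, the 54 and 80 Gbps figures line up with a four-lane DisplayPort link running at the UHBR13.5 and UHBR20 per-lane rates. The article does not name UHBR13.5, so that mapping is added here as background, and the helper below is only an illustrative sketch with hypothetical names.

```python
# DisplayPort UHBR modes, expressed as raw Gbps per lane.
UHBR_GBPS_PER_LANE = {"UHBR10": 10.0, "UHBR13.5": 13.5, "UHBR20": 20.0}
DP_LANES = 4  # a full DisplayPort main link uses four lanes


def dp_link_rate_gbps(mode, lanes=DP_LANES):
    """Raw link rate in Gbps: per-lane rate times the number of lanes."""
    return UHBR_GBPS_PER_LANE[mode] * lanes


for mode in UHBR_GBPS_PER_LANE:
    print(f"{mode}: {dp_link_rate_gbps(mode):.0f} Gbps")
```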


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


setx

This garbage should just die.

"As it stands right now, AMD's Radeon Pro cards are the only ones that support the full DisplayPort 2.1b UHBR20 standard"

Are you sure? I think they were forbidden to support it on Linux.

Pierce2623

Out curiosity, does anybody here look at a game in 4k and say “that’s just ugly. It needs more resolution.” ?

newtechldtech

Pierce2623 said: Out curiosity, does anybody here look at a game in 4k and say “that’s just ugly. It needs more resolution.” ?

It is not about games, as a matter of fact no hardware can play games at 16K resolution ... but Movies will come at that resolution soon.

bit_user

setx said: This garbage should just die. Are you sure? I think they were forbidden to support it on Linux.

Yeah, probably this. AMD tried, for years, to work with the HDMI consortium to find some way to support HDMI 2.1+ in their open source drivers, but got shut down at every attempt. So, it's probably only by AMD maintaining a closed-source driver option (with only their workstation cards being "officially" supported) that they can offer higher.

Someone else (I think Intel?) did manage to support HDMI 2.1 with open source drivers, so the issue appears to be how the display controller is designed and how much of its functionality gets handled in closed-source firmware vs. open source drivers.

Basically, what the HDMI consortium wants to avoid is open source drivers containing too many details of the specification, that other implementers could study and replicate, without themselves having to pay a license fee.

And, you know, this all comes down to patents. If HDMI were patent-free, then there wouldn't be a need for such onerous royalties and then there would be no need for such secrecy.

bit_user

newtechldtech said: It is not about games, as a matter of fact no hardware can play games at 16K resolution ... but Movies will come at that resolution soon.

The digital cinemas I've gone to are still using 4k and that seemed like enough resolution to me. I can't imagine any benefit for them going above 8k, unless we're talking about something like Sphere.

I think it's more about wall-sized displays for visualization and realtime monitoring. Think: lots of charts, or maybe lots of video feeds from security cameras, etc.

As a matter of fact, I know some security cameras are already doing 8k, so maybe 16k isn't far off?

John Nemesh

newtechldtech said: It is not about games, as a matter of fact no hardware can play games at 16K resolution ... but Movies will come at that resolution soon.

Nope, they won't. while 4k obtained widespread adoption by both consumers and the movie industry, there is no push whatsoever to go beyond 4k. Hell, even most "4k" movies are just upscaled 2k!

Additionally, the benefits of 8k are only really visible on TVs over 85", 16k would require at LEAST a 120" screen to see any noticeable difference over 4k!

Now for gaming and/or other computing applications, there is a need for higher resolution support. You will sit MUCH closer to a monitor than a TV/movie screen, and for certain applications (medical for one), every pixel counts!

You have to also take into consideration, especially for gaming, that this is not only "16k" capable, but will enable 4k at high refresh rates.

bit_user

John Nemesh said: Hell, even most "4k" movies are just upscaled 2k!

Depends, but not many recent movies, or true classics that are based on new transfers.

https://forum.blu-ray.com/showthread.php?t=270798

Here's another good resource:

https://www.digiraw.com/DVD-4K-Bluray-ripping-service/4K-UHD-ripping-service/the-real-or-fake-4K-list/

John Nemesh said: Additionally, the benefits of 8k are only really visible on TVs over 85", 16k would require at LEAST a 120" screen to see any noticeable difference over 4k!

It all depends on how far you sit. I sit about 10 feet away from my 65" screen and I think even 4k is overkill for me.

Alex/AT

Pierce2623 said: Out curiosity, does anybody here look at a game in 4k and say “that’s just ugly. It needs more resolution.” ?

Yes. Me. 4K->8K DLSS looks much (and yes I mean a lot, these crisp thin edges do matter) better than raw 4K in static / close to static / slowmo scenes (fast scenes look just the same as 4K due to DLSS dropping resolution).

8K 65" screen @ 3 meters (LG QNED 96) / nV 4090.

I was just "YAY!!!!!!" when they added DLSS to Trails into Daybreak and I could go full throttle maxed 8K there.

This made not a day but my whole month.

Anime upscaling with nV superres actually also looks better in 8K than 4K. Same reason again: the edge lines and corners are ultimately smooth and upscale pixelation becomes unnoticeable.

Alex/AT

Having 8K@60 without DSC would actually be a paramount. Pity it would require a new screen and gpu though, so probably not until 2.3/2.4's out :D Would also be a matter of availability of 96G compatible optical cables as large screens are usually mounted at a distance from PC and even at just little >3 meters even 48G stability starts to suck badly on copper (a proud owner of two 5m copper cables = fail, 7m optical cable = huge success).

Alex/AT

John Nemesh said: Additionally, the benefits of 8k are only really visible on TVs over 85", 16k would require at LEAST a 120" screen to see any noticeable difference over 4k!

For me personally, there is a huge difference between 8K and 4K on 65" @ 3 meters.

@ 8K pixelated edges can't be seen while @ 4K they are clearly present everywhere.