iPhone 6s: Samsung And TSMC A9 SoCs Tested

The A9 SoC inside the iPhone 6s comes from two different vendors (Samsung and TSMC) using two different FinFET processes (14nm and 16nm, respectively). We test both versions to see if there are any power or performance differences.

Now that the veil of secrecy surrounding the iPhone 6s and 6s Plus has lifted, some interesting details have emerged. One of the more tantalizing discoveries was made by Chipworks, when it revealed that Apple is dual-sourcing its A9 SoC (System on a Chip) from Samsung and TSMC.

Sourcing components from multiple vendors is a common practice among OEMs, especially for memory chips such as RAM and NAND. Apple routinely dual-sources the IPS screens for its iPhones, and Samsung frequently fits its smartphones with entirely different SoCs, camera sensors, and baseband processors, which differ in performance and features, depending on the region where they're sold.

What's unusual in this case, however, is that Samsung is using its 14nm LPE (Low Power Early) FinFET process, which it also uses for the Exynos 7420 SoC, while TSMC is using its own 16nm FinFET process, resulting in two different versions of the A9 SoC with different die sizes.

It's generally undesirable to manufacture a processor using two different technologies because it adds to the engineering cost. So why would Apple choose this dual-sourcing strategy for its A9 SoC? In one word: supply. Apple derives up to 70 percent of its total revenue from iPhone sales, so any component shortages for its flagship product would seriously compromise its financial performance. Because sub-20nm FinFET is a new technology for both Samsung and TSMC, it's likely neither has the capacity or yields to satisfy Apple's demand. Having a second supplier also provides a safety net in case one of the chip makers encounters a production problem and cannot meet its quota.

With two different versions of the A9 floating around and no way to tell beforehand which one comes packed inside a new iPhone, it's understandable that some people may be concerned that one chip holds a performance or battery life advantage over the other. Because both versions share the same architecture and run at the same clock frequencies, there should be no difference in peak performance. Where they could differ, however, is the voltage required to meet those frequencies. A processor using a higher voltage consumes more power, thereby reducing battery life, and produces more heat, which could degrade performance if the processor needs to throttle back clock speed to keep from overheating.

Before we explore the performance and battery life of the different A9 versions, it's important to remember that even processors using the same process technology, or even cut from the same wafer, require different voltages to meet the same clock frequency target due to natural manufacturing variability. A few extra atoms here or a thinner deposition layer there can be the difference between an efficient, lower-voltage processor and a more power-hungry, higher-voltage one.

Rather than scrapping processors that do not meet power/performance targets, manufacturers sort them into different frequency bins and price them accordingly. For example, there are three different versions of Qualcomm's Snapdragon 801 with integrated LTE baseband with maximum frequency ratings between 2.26 GHz and 2.45 GHz. Because every A9 CPU core is required to run at the same max clock frequency, Apple's tolerance range for core voltage will necessarily be tighter.
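The binning idea can be illustrated with a minimal sketch. The bin floors below use the 2.26 GHz and 2.45 GHz endpoints mentioned above; the middle value, the function name, and the sample chip frequencies are assumptions made purely for illustration and do not describe any vendor's actual sorting procedure.

```python
import bisect

# Frequency bin floors in GHz, sorted ascending. The endpoints come from the
# text; the middle value is a hypothetical placeholder.
BIN_FLOORS_GHZ = [2.26, 2.36, 2.45]


def assign_bin(max_stable_ghz):
    """Return the highest bin floor a chip can meet, or None if it meets none."""
    idx = bisect.bisect_right(BIN_FLOORS_GHZ, max_stable_ghz)
    return BIN_FLOORS_GHZ[idx - 1] if idx else None


# Hypothetical maximum stable frequencies for four chips from one wafer.
chips = [2.50, 2.40, 2.30, 2.10]
bins = [assign_bin(f) for f in chips]
print(bins)
```

With a single required frequency, as with the A9, there is effectively one bin, so chip-to-chip variability shows up as a spread in required voltage rather than in rated clock speed.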


Performance And Battery Life

This topic has already sparked discussion on several Internet forums, with users posting results from various benchmarks (primarily the Geekbench battery test) showing a definitive battery life advantage for the TSMC version, prompting Apple to issue the following statement:

With the Apple-designed A9 chip in your iPhone 6s or iPhone 6s Plus, you are getting the most advanced smartphone chip in the world. Every chip we ship meets Apple's highest standards for providing incredible performance and deliver [sic] great battery life, regardless of iPhone 6s capacity, color, or model. Certain manufactured lab tests which run the processors with a continuous heavy workload until the battery depletes are not representative of real-world usage, since they spend an unrealistic amount of time at the highest CPU performance state. It's a misleading way to measure real-world battery life. Our testing and customer data show the actual battery life of the iPhone 6s and iPhone 6s Plus, even taking into account variable component differences, vary within just 2-3% of each other.

While Apple's assertion that the benchmarks being used to compare the two A9 versions do not represent real-world usage is accurate, that's not really the problem here. The benchmarks themselves are perfectly valid tools for probing power efficiency. The real issue is how the tests were conducted. Were the screens calibrated to the same brightness level for each iPhone 6s? Did each phone have the same apps installed and use the same operating system settings? What background processes were running on each phone? We've seen firsthand how changing just a few settings can affect benchmark scores by up to 15 percent, and that's before any apps such as Facebook, Instagram, or Twitter are installed that can wreak havoc with CPU benchmarks.

For this reason, Tom's Hardware has developed a rigorous testing methodology for obtaining accurate, repeatable performance results. Using these procedures, we tested two iPhone 6s Plus phones, one with a Samsung-made A9 (which identifies internally as model N66AP) and one with the TSMC A9 (model N66mAP).

Apple A9 SoC Comparison: Performance

| Benchmark | Test | Samsung A9 Result | TSMC A9 Result | % Difference (Samsung vs. TSMC) |
|---|---|---|---|---|
| Basemark OS II Full | Overall | 2408 | 2433 | -1.03% |
| | System | 5030 | 5127 | -1.89% |
| | Memory | 1270 | 1319 | -3.72% |
| | Graphics | 4355 | 4310 | 1.03% |
| | Web | 1209 | 1203 | 0.54% |
| Geekbench 3 Single-Core | Geekbench Score | 2545 | 2529 | 0.63% |
| | Integer | 2559 | 2544 | 0.57% |
| | Floating Point | 2526 | 2503 | 0.92% |
| | Memory | 2558 | 2554 | 0.18% |
| Geekbench 3 Multi-Core | Geekbench Score | 4455 | 4419 | 0.81% |
| | Integer | 5000 | 4947 | 1.07% |
| | Floating Point | 4873 | 4829 | 0.91% |
| | Memory | 2533 | 2546 | -0.51% |
| 3DMark: Ice Storm Unlimited | Score | 27958 | 27768 | 0.68% |
| | Graphics | 41780 | 41872 | -0.22% |
| | Physics | 12957 | 12744 | 1.67% |
| GFXBench 3.0 | Manhattan Offscreen | 2452 frames (40.2 fps) | 2455 frames (40.2 fps) | -0.10% |
| | T-Rex Offscreen | 4430 frames (79.1 fps) | 4473 frames (79.9 fps) | -0.97% |

As expected, there's no discernible peak performance difference between the two A9 models. All of the CPU, GPU, and system performance scores show less than a 2 percent difference, which lies within the margin of error for these tests. The only small outlier is the Basemark OS II Memory test, but this has more to do with RAM performance and how the operating system caches disk I/O than SoC speed.
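As a rough check on the comparison above, the sketch below recomputes the percent-difference column for a few rows and flags any row at or beyond the 2 percent margin of error. It assumes the column is calculated as (Samsung − TSMC) / TSMC, which appears consistent with the published figures; the function and variable names are illustrative. Recomputed values can differ from the published column in the last digit, presumably because the published scores are rounded.

```python
MARGIN_OF_ERROR_PCT = 2.0  # margin of error stated in the text


def percent_difference(samsung, tsmc):
    """Samsung score relative to the TSMC score, in percent (assumed formula)."""
    return (samsung - tsmc) / tsmc * 100.0


# (Samsung score, TSMC score) pairs copied from the performance table.
scores = {
    "Basemark OS II Overall": (2408, 2433),
    "Basemark OS II Memory": (1270, 1319),
    "Geekbench 3 Single-Core": (2545, 2529),
    "Geekbench 3 Multi-Core": (4455, 4419),
    "3DMark Ice Storm Unlimited": (27958, 27768),
}


def outliers(table, margin=MARGIN_OF_ERROR_PCT):
    """Names of tests whose absolute difference reaches the margin of error."""
    return [
        name
        for name, (samsung, tsmc) in table.items()
        if abs(percent_difference(samsung, tsmc)) >= margin
    ]


for name, (samsung, tsmc) in scores.items():
    print(f"{name}: {percent_difference(samsung, tsmc):+.2f}%")
print("Outside margin of error:", outliers(scores))
```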

| Apple A9 SoC Comparison: Battery Life and Thermal Throttling | ||||

|---|---|---|---|---|

| Benchmark | Samsung A9Result | TSMC A9Result | % DifferenceSamsung vs. TSMC | |

| Basemark OS II Full | Battery Score | 951 | 879 | 8.13% |

| Battery Lifetime | 167 min | 151 min | 10.76% | |

| GFXBench 3.0 | Battery Performance | 2866 frames (51.2 fps) | 2857 frames (51.0 fps) | 0.32% |

| Battery Lifetime | 149 min | 144 min | 3.47% | |

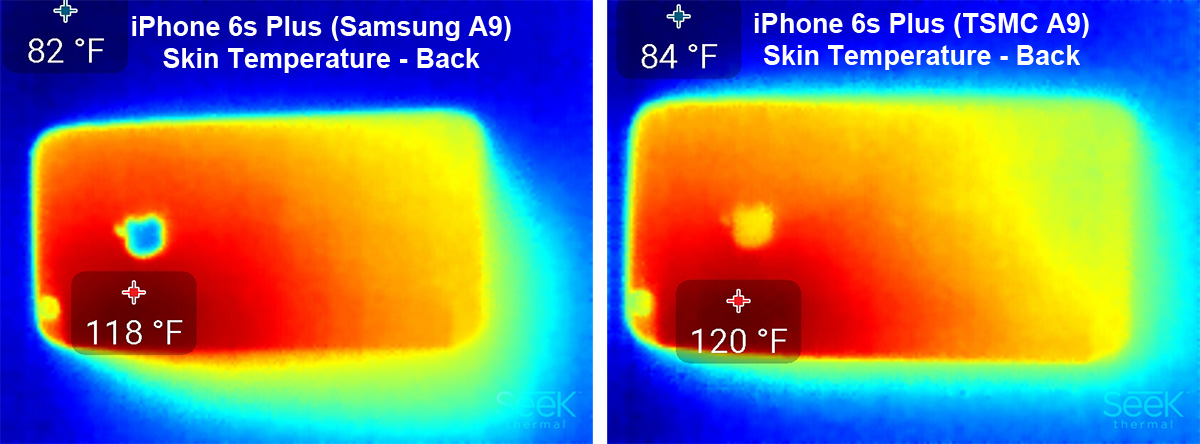

| Skin Temperature | 118 ºF | 120 ºF | -1.67% |

The Basemark OS II battery score accounts for both battery life and performance under CPU-intensive workloads. It's a good stress test for determining CPU efficiency and seeing if the SoC can maintain peak performance levels without thermal throttling. In this case, we see Samsung's 14nm FinFET process has a slim but noticeable advantage, lasting 10.8 percent longer (16 minutes) than the iPhone 6s Plus with the TSMC-made A9.

The GFXBench battery life test is the GPU equivalent of Basemark OS II, providing a worst-case battery life based primarily on GPU, memory, and display power consumption that is similar to what you might see while playing an intense 3D game. In this scenario, both the performance and the skin temperature on the back of each iPhone are nearly identical, indicating that both A9 SoCs generate a similar amount of heat and exhibit minimal thermal throttling. The Samsung-made A9 manages to last 3.5 percent longer when pushing the GPU hard, which equates to a meager five minutes and is barely outside the margin of error in this test.

Conclusion

Based on the results of our testing, it's clear that both versions of Apple's A9 SoC deliver the same level of performance, but Samsung's 14nm FinFET process appears to offer slightly better power efficiency, extending battery life by 3.5 to 10.8 percent. This is a little more than the 2-3 percent quoted by Apple, but not much, and it equates to only about 5-15 minutes of runtime under the most extreme conditions.

Real-world use cases other than intense gaming do not run the CPU and GPU at 100 percent for extended periods. Instead, the CPU and GPU run at much lower voltages and frequencies the majority of the time and only ramp up to their maximum clock speeds for short bursts of activity. Because an SoC's power versus frequency relationship is nonlinear (meaning each additional 100 MHz of frequency requires larger and larger increases in core voltage), Samsung's advantage during normal use will be less than what we measured in our tests and is likely to be very close to Apple's 2-3 percent figure.
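The nonlinearity described above can be illustrated with the classic CMOS switching-power approximation, P ≈ C·V²·f. The sketch below is only an illustration under stated assumptions: the frequency and voltage pairs are hypothetical, not measured A9 operating points, and the model ignores leakage and every other component in the phone.

```python
def dynamic_power(voltage_v, frequency_ghz, capacitance=1.0):
    """CMOS switching-power approximation P ~ C * V^2 * f, in arbitrary units."""
    return capacitance * voltage_v ** 2 * frequency_ghz


# Hypothetical (frequency in GHz, core voltage in V) pairs in which each step
# up in frequency needs a progressively larger voltage increase.
OPERATING_POINTS = [(0.4, 0.65), (0.9, 0.75), (1.4, 0.90), (1.85, 1.05)]


def relative_scaling(points):
    """Frequency and power at each point, relative to the lowest point."""
    base_f, base_v = points[0]
    base_power = dynamic_power(base_v, base_f)
    return [
        (f, f / base_f, dynamic_power(v, f) / base_power)
        for f, v in points
    ]


for f, freq_ratio, power_ratio in relative_scaling(OPERATING_POINTS):
    print(f"{f:.2f} GHz: {freq_ratio:.2f}x frequency, {power_ratio:.2f}x power")
```

Under these assumed numbers, power grows much faster than frequency at the top of the range, which is why a voltage difference between two chips matters most when the CPU is pinned at its maximum clock.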

Although we believe our results are accurate, they are derived from a sample size of one. We do not know Apple's allowable core voltage range for the A9 or where our particular samples fall within this tolerance band. Our tests also fail to capture the small variability in power consumption for other components like the RAM and display. Therefore, other iPhones may show more or less variance in runtime than our samples.

Even after taking this into account, the extra few minutes of battery life you may get from a phone using the Samsung-made A9 will hardly be noticeable.

If you want a new iPhone 6s, buy it, use it, and don't worry about who made the processor.


Pei-chen: Good review and write up. I have the same exactly thought when "the news break" that some YouTuber find one phone with a TSMC A9 lasts longer than the one with Samsung A9. Putting aside the problem with sample-size-of-1, those YouTuber have no idea how binning works and assumes all TSMC/Samsung chips are exactly the same. Those of us that've been around Tom's and Anand's a long time knows even two identically binned chips would show difference in power draw and those clueless YouTuber have no idea.

Add in the fact the boards are full of chips, battery at 100% still draw power and screen at same brightness will have different power draw it is safe to say difference between TSMC and Samsung A9s are overblown.

nitrium: "extending battery life between 3.5-10.8 percent. This is a little more than the 2-3 percent quoted by Apple, but not much, and it equates to only about 5-15 minutes of runtime under the most extreme conditions."

Well it's roughly 100-200% more than what Apple quoted - that is quite a lot different isn't it? And 5-15% under extreme conditions doesn't seem like much, but how much more is it under typical workloads? Wouldn't that equate to be as much as an hour of extra battery over the day if you get a Samsung manufactured A9?

hannibal: Also one other site did test that TSMC battery lasted longer, so there is definitely differences between CPUs. So silicon lottery...

Just what I needed to know.

iPhone 4: if u hold it a certain way, u lost most of ur signal.

iPhone 6: bends if u put it in ur jean pocket.

iPhone 6s: CPU-maker luck-of-the-draw affects battery life.

MobileEditor (replying to the comment above): Well, it's still better than the Samsung camera lottery :) All of the latest Galaxy phones come with either a Sony or Samsung made rear camera sensor that are supposed to be equivalent. In our Galaxy S6 review, however, we show that there's a notable difference between them.

- Matt Humrick, Mobile Editor, Tom's Hardware

MobileEditor (replying to nitrium): No, I do not consider 5-15 minutes after 80-100% discharge to be a lot. Also, this is a worst-case scenario where the CPU or GPU runs at max frequency all the time. During normal use, the CPU/GPU will be at their idle frequencies most of the time. Because of the nonlinear relationship between voltage and frequency, the difference should be less than what we measured during normal operation, which is the scenario Apple claims only shows a 2-3% difference. If that's true, then a use case where the battery lasts 8 hours would show about a 15 minute advantage. In the Basemark OS II test where the CPU is at max frequency for the duration of the test, there's only a 20 minute difference until the battery is depleted (extrapolating from where the test ends with 20% battery remaining).

Unfortunately, our iOS test suite does not contain any tests that mimic a real-world usage scenario. I did perform a simple 1080p H.264 video playback test and saw a 2-3% advantage for the TSMC A9. This is not necessarily applicable to the CPU, however, because I believe the A9 uses a fixed-function hardware block for video decoding that would use a different transistor type optimized for lower frequency operation.

- Matt Humrick, Mobile Editor, Tom's Hardware

Chris Droste (replying to nitrium): most smartphones average between 5.5 and 6.5hours of on screen time with app, text, email, and talk usage in the real world. even if you had a "golden sample" you're not likely to get more than 7hours in practical use, and that will vary downward in most cases. The result? you'd have to use your "special" iPhone 6S like your grandparents who don't even know what a mobile app is to reap anything truly noteworthy. If you really must have battery life, Verizon will be happy to sell you a Droid XXX Turbo/super/special/R/S MAXX phone with massive battery.

Sywofp: One thing - why didn't you guys test using the Geekbench battery life benchmark?

Considering it is the one that started this whole debate, it seems like it would be the first test to run...

Arstechnica found similar results to yours, except for Geekbench, where the Samsung performed a lot worse.

So the important question IMO, is why that benchmark gets such a big difference, and does it reflect any real world usage scenarios - even if not experience by most people.

MobileEditor (replying to Sywofp): We did not run the Geekbench battery test because it's not part of our usual test suite, and we're not familiar enough with how it works or with the accuracy of its results.

I saw the Ars Technica article. It seems a bit odd that every test except Geekbench battery shows minimal difference, while Geekbench shows a 20+% gap. This is a red flag to me that something might not be working right with this test and is another reason we did not include those results.

- Matt Humrick, Mobile Editor, Tom's Hardware

![Two different model numbers for the Apple A9 SoC. [CREDIT: Chipworks]](https://cdn.mos.cms.futurecdn.net/isCjQwm9e4pHRKeMVPQBtT.jpg)