AMD FreeSync Versus Nvidia G-Sync: Readers Choose

We set up camp at Newegg's Hybrid Center in City of Industry, California to test FreeSync against G-Sync in a day-long experiment involving our readers.

Test Results: FreeSync or G-Sync?

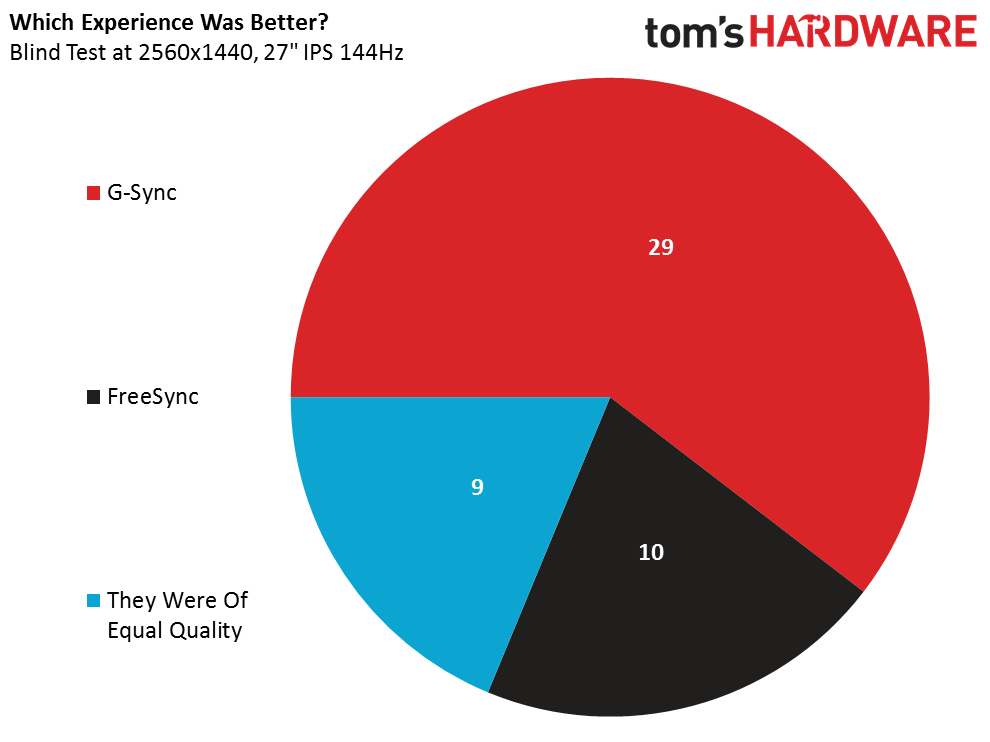

We begin with the overall result, and then drill down from there. Resist the temptation to take this first chart and use it exclusively as the basis for your next purchasing decision. After all, there were a couple of different factors in play that need to be discussed in greater depth, and this outcome cannot capture their subtleties.

Twenty-nine (or 60%) of our participants chose G-Sync based on their experience in our experiment. Ten (or ~21%) of the attendees picked FreeSync. And nine (or almost 19%) considered both solutions of equal quality. Given the price premium on G-Sync, you can almost count an undecided vote for AMD, since smart money often goes to the cheaper hardware if it delivers a comparable experience.
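For anyone who wants to check our rounding, the percentages above follow directly from the three vote counts. This is only a quick sketch of the arithmetic; the names `votes` and `vote_shares` are ours for illustration and aren't part of any tooling we used at the event.

```python
# Vote counts as reported in the article (48 participants in total).
votes = {"G-Sync": 29, "FreeSync": 10, "Equal quality": 9}


def vote_shares(counts):
    """Return each option's share of the total vote as a percentage."""
    total = sum(counts.values())
    return {option: 100 * n / total for option, n in counts.items()}


shares = vote_shares(votes)
for option, pct in shares.items():
    print(f"{option}: {votes[option]} votes, {pct:.1f}% of {sum(votes.values())}")
```

The shares work out to roughly 60.4%, 20.8% and 18.75%, which is where the rounded figures in the text come from.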

We expected Nvidia to secure the majority in our overall tally for a couple of reasons. First, Borderlands, which half of our respondents played, ran outside of the FreeSync-equipped Asus monitor’s variable refresh range. So we need to assess how many surveys cited this title as their reason for choosing G-Sync. Second, we created a side experiment on two computers using Battlefield 4, which allowed FreeSync to remain under 90 FPS (in its variable refresh range) while Nvidia’s hardware pushed higher frame rates through dialed-back quality options. One-quarter of our attendees would have unknowingly participated in this one, and we’ll separately compare their responses to the folks who played at the Ultra preset on both technologies.

To be clear, the MG279Q’s variable range of 35 to 90Hz is not a limitation of FreeSync, but rather a consequence of the scaler Asus chose to outfit its monitor with. We wanted to compare similar-looking panels and had to make some compromises to minimize the variables in play. There was an alternate combination that could have stepped us back to TN technology with a range of 40 to 144Hz, but we do not regret running our tests using IPS. Universally, the audience commented on how gorgeous the screens looked, and we suspect that most of the gamers with Asus’ MG279Q on their list have it there because it offers a native QHD resolution, that AU Optronics AHVA panel and refresh rates up to 144Hz. Regardless of whether you enable FreeSync or not, that’s one heck of a gaming product for $600, or $150 less than the G-Sync-equipped equivalent, as priced on Newegg at the time of this writing. [Editor's note: At publishing time, the price of the Acer monitor increased $50, so the price difference grew. This is reflected in the pricing buttons that link to Newegg (see page 2). Those buttons are dynamic and pull directly from Newegg, so they could also change over time.]
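To make the range discussion concrete, here is a small sketch of how a frame rate relates to the MG279Q's 35 to 90Hz window. The function name `vrr_state` is hypothetical, and treating both ends of the window as inclusive is our assumption, not something taken from Asus or AMD documentation.

```python
# Variable refresh window of the Asus MG279Q as stated in the article.
VRR_MIN_HZ = 35
VRR_MAX_HZ = 90


def vrr_state(fps, low=VRR_MIN_HZ, high=VRR_MAX_HZ):
    """Classify a frame rate relative to a monitor's variable refresh window.

    Assumption: the window is inclusive at both ends.
    """
    if fps < low:
        return "below range"
    if fps > high:
        return "above range"
    return "in range"


for fps in (30, 60, 90, 120):
    print(f"{fps} FPS -> {vrr_state(fps)}")
```

A game that lands in either out-of-range bucket no longer benefits from variable refresh on that display, which is why the frame rates each title produced matter so much to the results.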

Asus further shared its plans to launch a TN-based FreeSync-capable screen in early September with a 1ms response time and VRR between 40 and 144Hz. The company will also introduce its G-Sync-equipped IPS line in September, including a 4K/60Hz screen and a QHD/144Hz model.

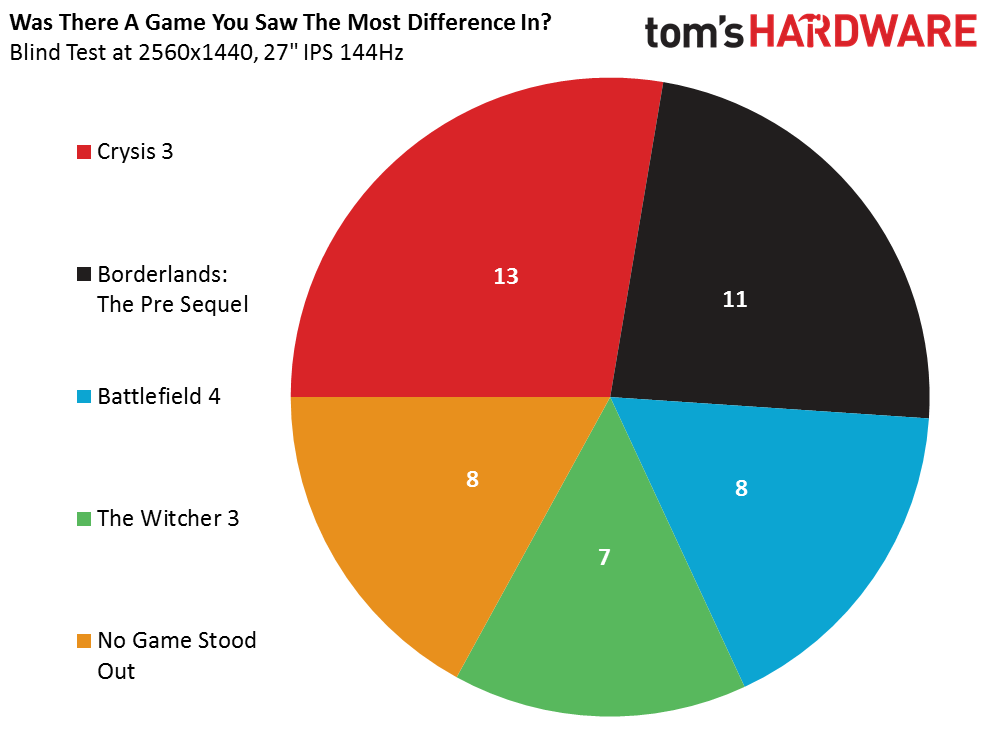

Eight respondents said there was no single game that stood out to them, and of those, five answered that the two technologies were of equal quality. One respondent made comments, but didn’t cite a specific game that affected him and gave both technologies equal marks.

Crysis was most often cited as the game where a difference was observed, which is difficult to explain technically because it should have been running in a variable refresh range on both competing technologies. Four of those who mentioned Crysis preferred their experience on AMD’s hardware, eight chose Nvidia’s and one said the two technologies were of equal quality, though his Nvidia-based platform did stutter during an intense sequence.

Next was Borderlands, which 11 participants said showed the most difference. Nine of those picked G-Sync as their preference. One chose FreeSync, noting the 390X-equipped machine felt smoother in both Borderlands and The Witcher. And one said they were of equal quality after noticing the tearing on FreeSync, but mentioned a different experience in The Witcher. While we hadn't anticipated it during our planning phase, Borderlands turned out to be a gimme for Nvidia since the AMD setups were destined to either tear (if we left v-sync off) or stutter/lag (if we switched v-sync on). What we confirmed was that a majority of our respondents could see the difference. It's a good data point to have.

Battlefield demonstrated the most variance for eight attendees. Six folks favored what they saw from G-Sync, one picked FreeSync and the last noted that he experienced smoother performance in this game (perhaps compared to Crysis?), but said the two systems were of equal quality.

The Witcher seemed least influential: seven participants saw the biggest difference in it. Three of those thought the 390X delivered better responsiveness, while four favored the GTX 970 and G-Sync. As with Crysis, both G-Sync and FreeSync should have been running within their respective variable refresh ranges based on the settings we chose. So, it’s hard to say with certainty what other factors would be affecting gameplay.
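The per-game counts above are easier to compare side by side. The sketch below simply tabulates them; the tuple layout and the helper name `g_sync_share` are our own choices for illustration.

```python
# Per-game preference counts as reported above: (FreeSync, G-Sync, equal).
game_votes = {
    "Crysis": (4, 8, 1),
    "Borderlands": (1, 9, 1),
    "Battlefield": (1, 6, 1),
    "The Witcher": (3, 4, 0),
}


def g_sync_share(counts):
    """Percentage of a game's mentions that came with a G-Sync preference."""
    freesync, g_sync, equal = counts
    return 100 * g_sync / (freesync + g_sync + equal)


for game, counts in game_votes.items():
    print(f"{game}: {sum(counts)} mentions, "
          f"G-Sync preferred by {g_sync_share(counts):.0f}%")

# Participants who named a specific game as the one that stood out.
named_a_game = sum(sum(counts) for counts in game_votes.values())
print("Named a game:", named_a_game)
```

Adding the nine respondents who didn't single out a game to the 39 who did accounts for all 48 participants.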

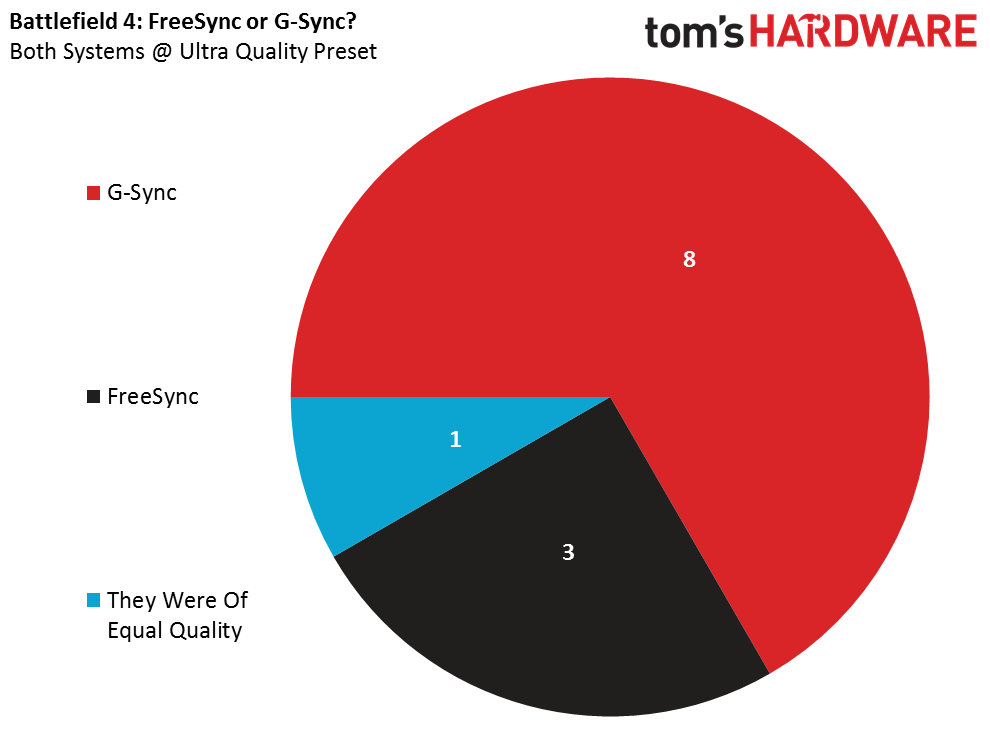

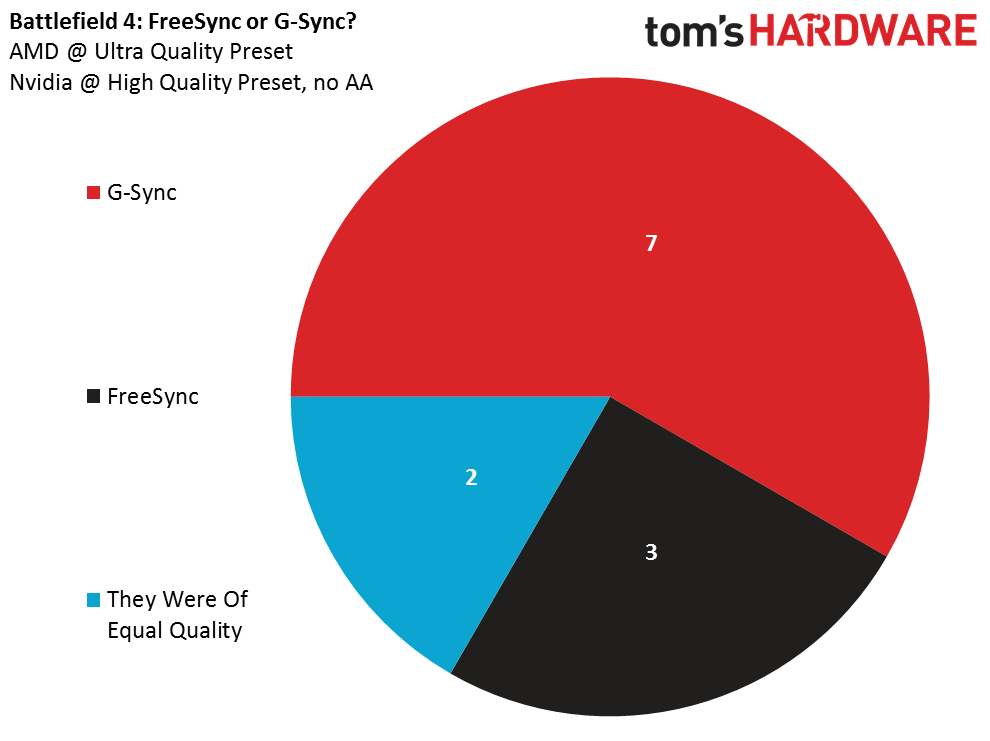

How about that mini-experiment we did with Battlefield 4? One pair of workstations ran both cards at the Ultra preset in the same sub-90 FPS range. The other pair used different settings, keeping FreeSync at its target performance level with higher quality while Nvidia dropped to the High preset for faster frame rates.

Well, of the folks who played on the machines set to Battlefield's Ultra quality preset, three chose the AMD-equipped system, eight went with Nvidia’s hardware and one put them on equal footing. Notably, even when a respondent picked one technology over the other, it was mentioned that they were quite similar and free from tearing. Other in-game differences like perceived frame rates seemed to affect the choices.

Right next to them, we had another AMD machine at Ultra settings and an Nvidia box dialed down to the High preset. Again, three respondents picked AMD’s hardware. Seven went with Nvidia, while two said they were of equal quality. Three participants specifically called out smoothness in Battlefield 4 as something they noticed, and nobody reported lower visual quality, despite the dialed-back quality preset and lack of anti-aliasing on the GeForce-equipped machine. Overall, we'd call those results comparable.
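To put "comparable" in numbers, the sketch below compares the two Battlefield 4 groups. The variable and function names are hypothetical, and with only 12 respondents per group this is a sanity check, not a statistical test.

```python
# Battlefield 4 votes from the two pairs of machines, as reported above.
ultra_vs_ultra = {"AMD": 3, "Nvidia": 8, "Equal": 1}  # both cards at Ultra
ultra_vs_high = {"AMD": 3, "Nvidia": 7, "Equal": 2}   # AMD at Ultra, Nvidia at High


def shares(votes):
    """Return each option's share of a group's votes as a percentage."""
    total = sum(votes.values())
    return {option: 100 * n / total for option, n in votes.items()}


def share_shift(before, after):
    """Percentage-point change in each option's share between two groups."""
    s_before, s_after = shares(before), shares(after)
    return {option: s_after[option] - s_before[option] for option in before}


shift = share_shift(ultra_vs_ultra, ultra_vs_high)
one_vote = 100 / sum(ultra_vs_ultra.values())  # weight of a single respondent

for option, delta in shift.items():
    print(f"{option}: {delta:+.1f} percentage points")
print(f"One vote is worth {one_vote:.1f} points in a group of 12")
```

The only movement between the groups is a single respondent shifting from Nvidia to "equal," and one vote is worth about 8.3 percentage points at this sample size.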

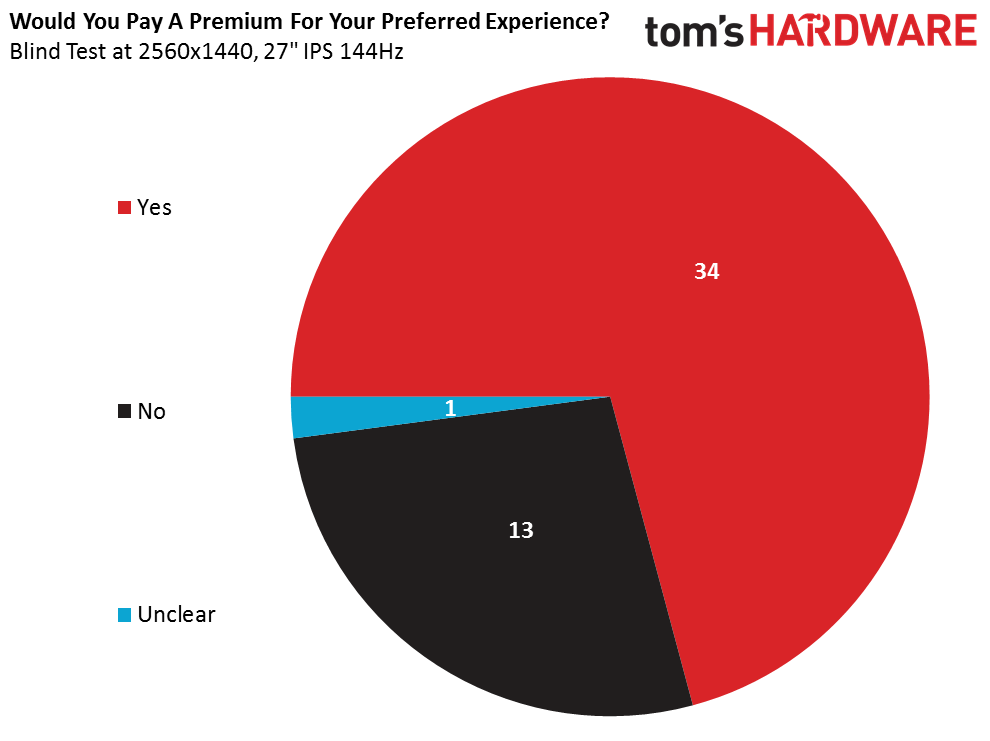

Thirty-four of our 48 participants said that yes, they would pay a premium for the experience they preferred over the other configuration. Thirteen wouldn’t, and one answer wasn’t clear enough to categorize.

Interestingly, nine of those who said they’d spend more on the better experience ended up picking the Radeon R9 390X/Asus MG279Q combination. Twenty-three picked the G-Sync-capable hardware. So, 79% of our respondents who preferred the G-Sync experience would be willing to pay extra for it. How much, though?
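The 79% figure comes from dividing the G-Sync fans willing to pay more by everyone who preferred G-Sync. The sketch below repeats that calculation for both camps, using only counts reported in this article; the dictionary names are ours.

```python
# Overall preference counts and the subset willing to pay a premium.
preferred = {"G-Sync": 29, "FreeSync": 10}
would_pay = {"G-Sync": 23, "FreeSync": 9}
total_yes = 34  # all participants who said they would pay a premium

rates = {tech: 100 * would_pay[tech] / preferred[tech] for tech in preferred}
for tech, pct in rates.items():
    print(f"{tech}: {would_pay[tech]} of {preferred[tech]} would pay more ({pct:.0f}%)")

# "Yes" answers not attributed to either camp in the text.
unaccounted = total_yes - sum(would_pay.values())
print("Yes answers outside both camps:", unaccounted)
```

Nine of the 10 FreeSync fans (90%) also said they'd pay more. Two "yes" answers remain outside both camps; presumably they came from respondents who rated the technologies equal, though our tally above doesn't say so explicitly.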

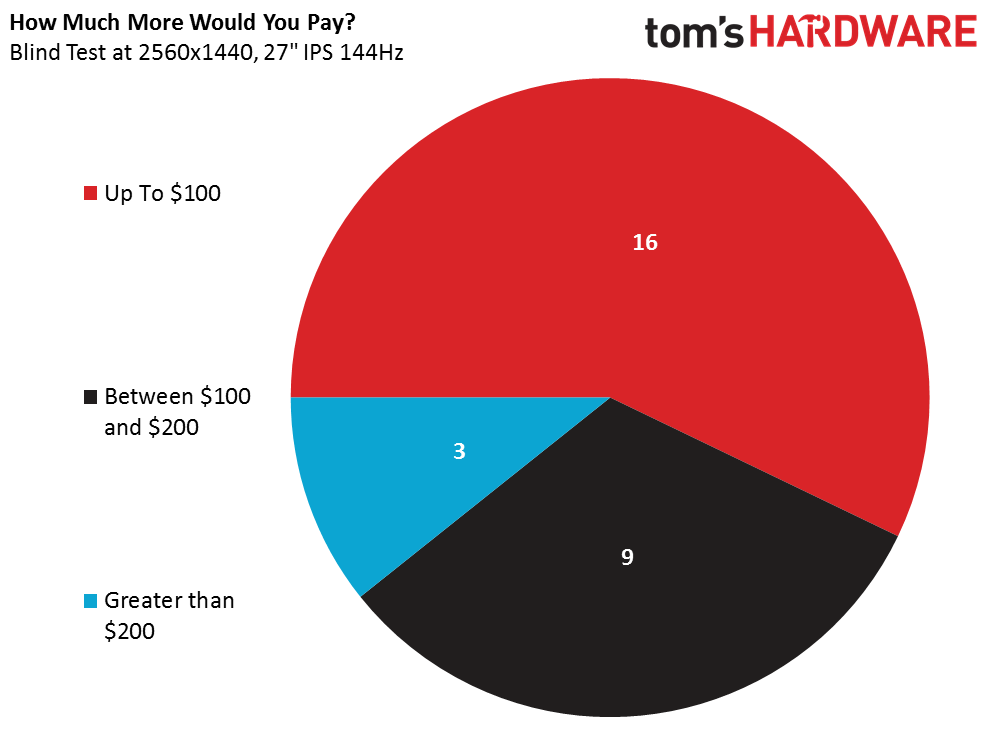

We asked our participants to quantify the degree to which they favored one solution over the other, if indeed they had a preference. Not all of the answers we received were usable, but of those that were, 16 said they’d be willing to spend up to $100 more for the better experience. Nine capped the premium around $200. And three felt strongly enough to budget significantly more—one gamer committed to up to twice the spend, one said an extra $200-$300 per year and a third would budget up to $500 more for a high-end gaming PC.


-

Wisecracker With your wacky variables, and subsequent weak excuses, explanations and conclusions, this is not your best work.

-

NethJC Both of these technologies are useless. How bout we start producing more high refresh panels ? It's as simple as that. This whole adaptive sync garbage is exactly that: garbage.

I still have a Sony CPD-G500 which is nearing 20 years old and it still kicks the craps out of every LCD/LED panel I have seen.

-

AndrewJacksonZA Thank you for the event and thank you for your write up. Also, thank you for a great deal of transparency! :-)

-

Vlad Rose So, 38% of your test group are Nvidia fanboys and 8% are AMD fanboys. Wouldn't you think the results would be skewed as to which technology is better? G-Sync may very well be better, but that 30% bias makes this article irrelevant as a result.

-

jkrui01 as always on toms, nvidia and intel win, why bother making these stupid tests, just review nvidia and intel hardware only, or better still, just post the pics and a "buy now" link on the page.

-

loki1944

> Both of these technologies are useless. How bout we start producing more high refresh panels ? It's as simple as that. This whole adaptive sync garbage is exactly that: garbage.
>
> I still have a Sony CPD-G500 which is nearing 20 years old and it still kicks the craps out of every LCD/LED panel I have seen.

Gsync is way overhyped; I can't even tell the difference with my ROG Swift; games definitely do not feel any smoother with Gsync on. I'm willing to bet Freesync is the same way.

-

Traciatim It seems with these hardware combinations and even through the mess of the problems with the tests that NVidia's G-Sync provides an obviously better user experience. It's really unfortunate about the costs and other drawbacks (like only full screen).

I would really like to see this tech applied to larger panels like a TV. It would be interesting considering films being shot at 24, 29.97, 30, 48 and 60FPS from different sources all being able to be displayed at their native frame rate with no judder or frame pacing tools (like 120hz frame interpolation) that change the image.

-

cats_Paw "Our community members in attendance now know what they were playing on."

That's when you lost my interest.

It is proven that if you give a person this information they will be affected by it, and that unfortunately defeats the whole purpose of using subjective opinions of test subjects to evaluate real life performance rather than scientific facts (as frames per second).

Too bad, since the article seemed to be very interesting.

-

omgBlur

> Both of these technologies are useless. How bout we start producing more high refresh panels ? It's as simple as that. This whole adaptive sync garbage is exactly that: garbage.
>
> I still have a Sony CPD-G500 which is nearing 20 years old and it still kicks the craps out of every LCD/LED panel I have seen.
>
> Gsync is way overhyped; I can't even tell the difference with my ROG Swift; games definitely do not feel any smoother with Gsync on. I'm willing to bet Freesync is the same way.

Speak for yourself, I invested in the ROG Swift and noticed the difference. This technology allows me to put out higher graphics settings on a single card @ 1440p and while the fps bounces around worse than a Mexican jumping bean, it all looks buttery smooth. Playing AAA games, I'm usually in the 80s, but when the game gets going with explosions and particle effects, it bounces to as low as 40. No stuttering, no screen tearing.