Intel Plays Defense: Inside Its EPYC Slide Deck

Memory & Bandwidth Projections

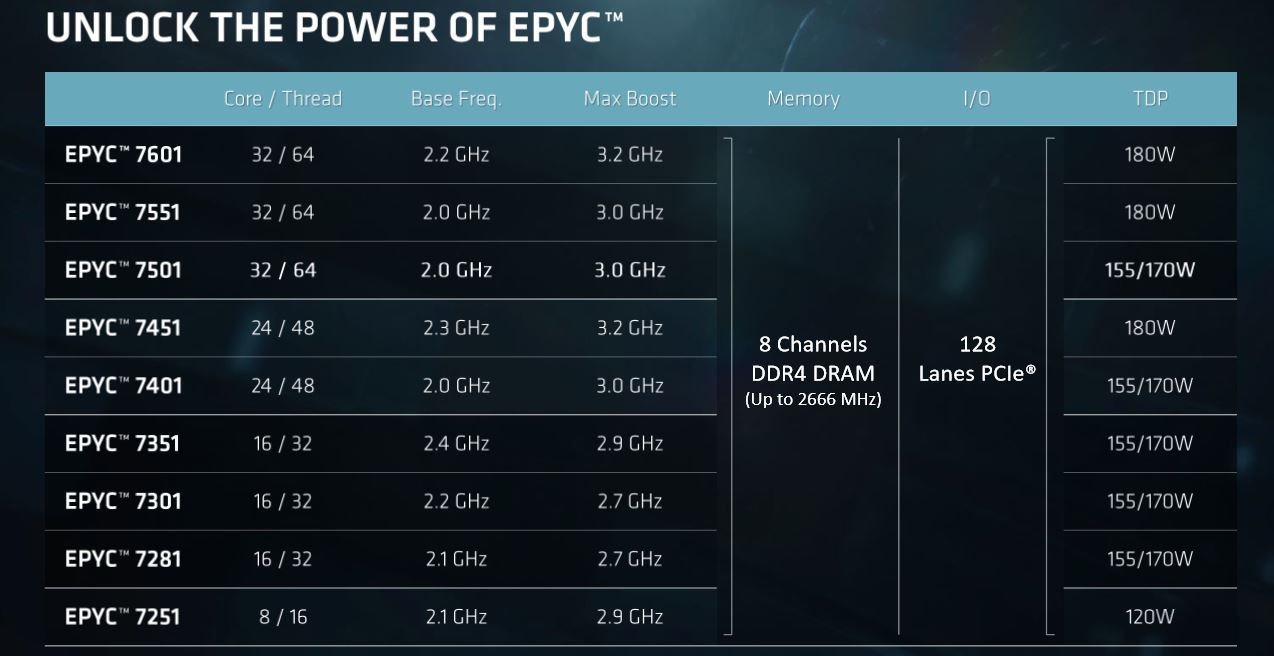

EPYC has eight memory channels, outnumbering Purley's six, and supports up to 2TB of memory per processor. If you go the Intel route, you'll need a more expensive "M" SKU to accommodate up to 1.5TB. Most mainstream Xeons only support up to 768GB of memory.

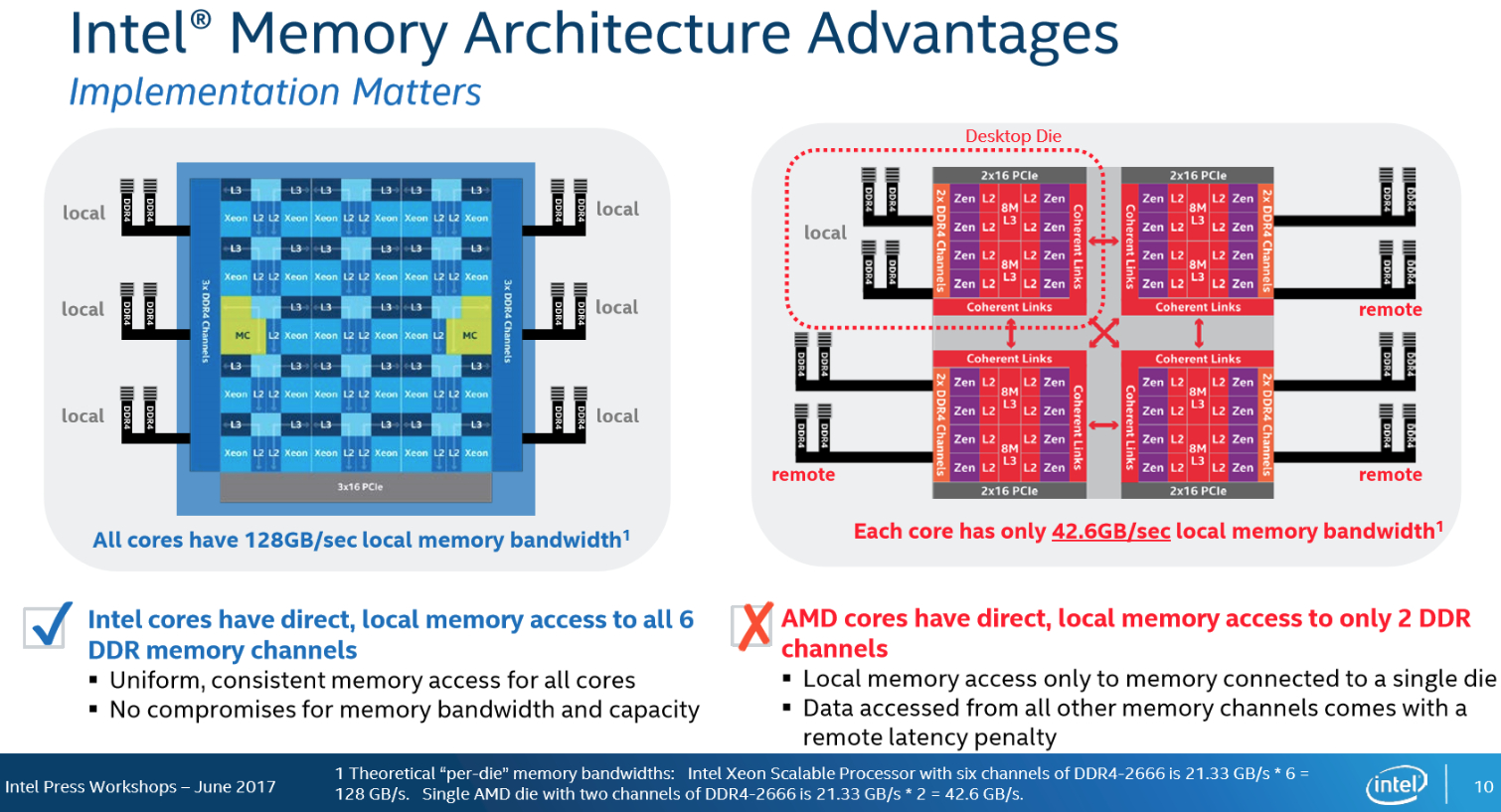

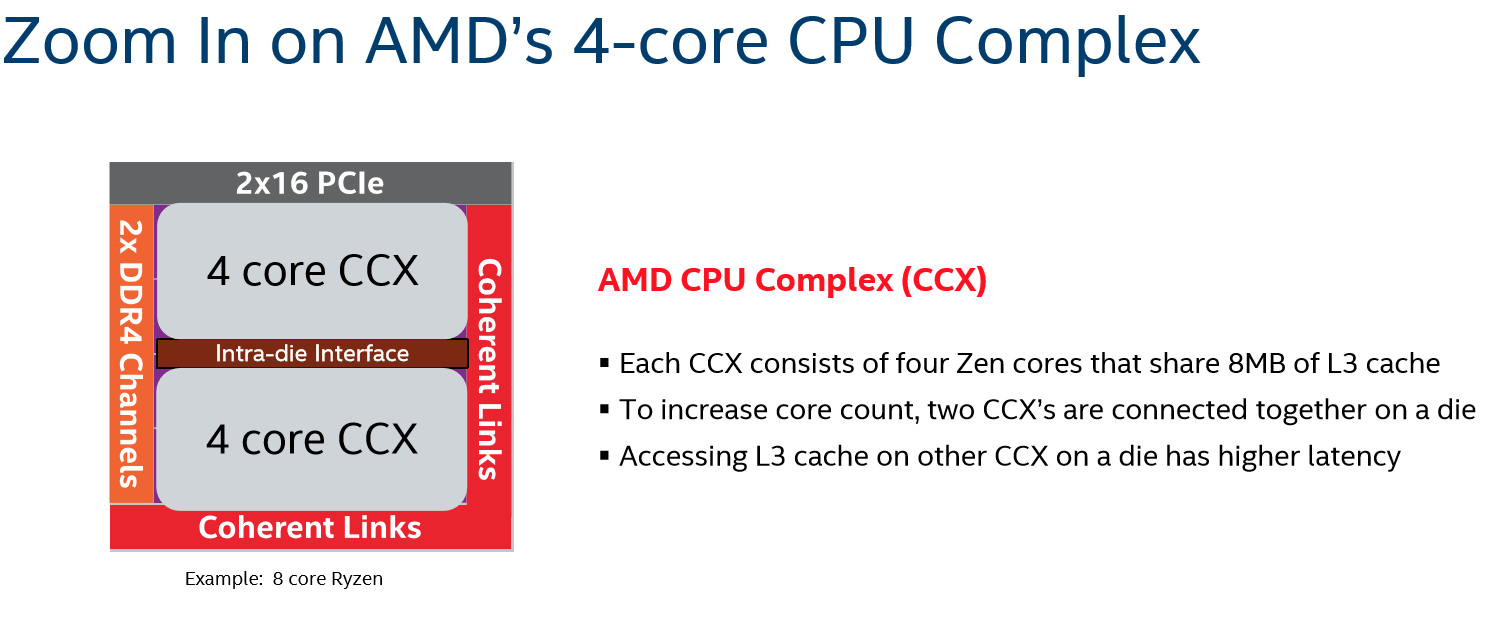

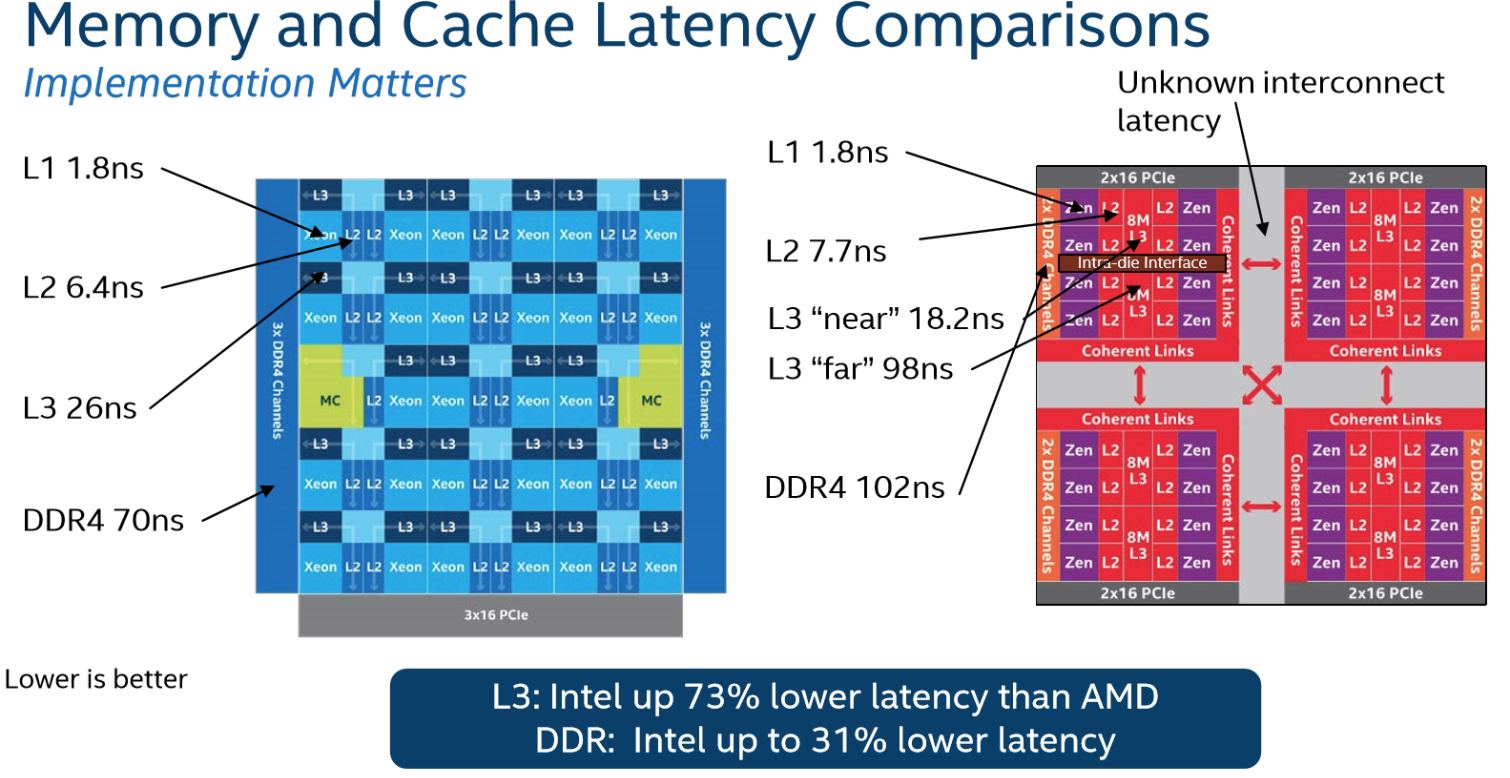

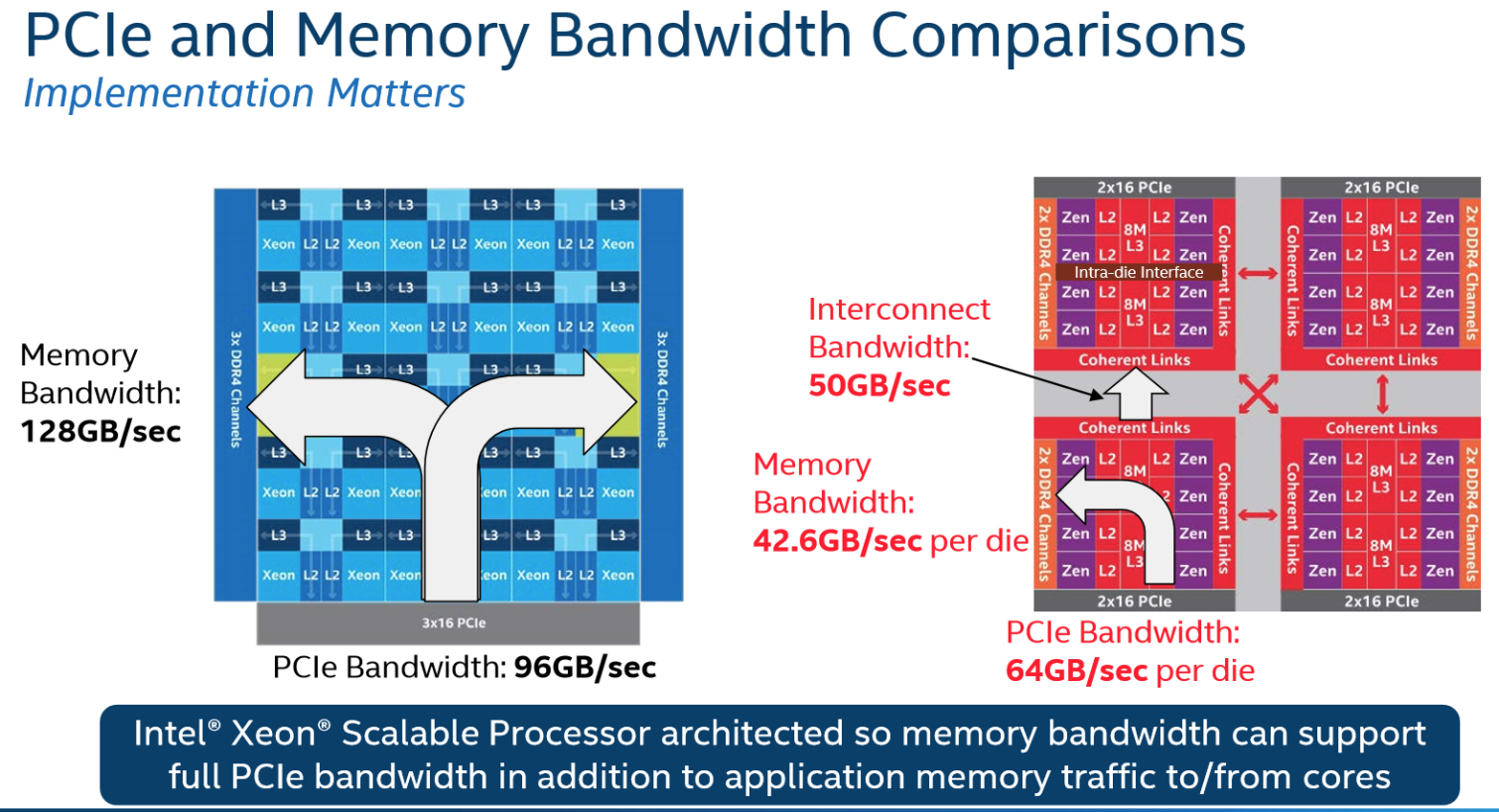

Intel claims that EPYC's memory access will suffer due to AMD's multi-die implementation. Each Zeppelin die, which houses two CCXes (Core Complexes), comes armed with two channels of DDR4. Access to the rest of the channels crosses to another die and incurs a latency penalty. According to Intel, that limits local memory bandwidth to 42.6 GB/s per die, which is in line with AMD's per-channel 21.3 GB/s bandwidth specification (roughly 170 GB/s per socket across all eight channels).
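The bandwidth figures in that claim are simple multiples of AMD's per-channel number. A minimal sketch of the arithmetic, using only the values quoted above (the variable names are ours, not Intel's or AMD's):

```python
# Back-of-the-envelope check of the bandwidth figures quoted above.
PER_CHANNEL_GBS = 21.3   # AMD's per-channel specification
LOCAL_CHANNELS = 2       # channels reachable without a cross-die hop
SOCKET_CHANNELS = 8      # channels per EPYC socket

local_gbs = PER_CHANNEL_GBS * LOCAL_CHANNELS
socket_gbs = PER_CHANNEL_GBS * SOCKET_CHANNELS

print(f"local bandwidth:  {local_gbs:.1f} GB/s")
print(f"socket bandwidth: {socket_gbs:.1f} GB/s")
```

In other words, Intel's 42.6 GB/s is not a measured result. It is the theoretical peak of the two local channels.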

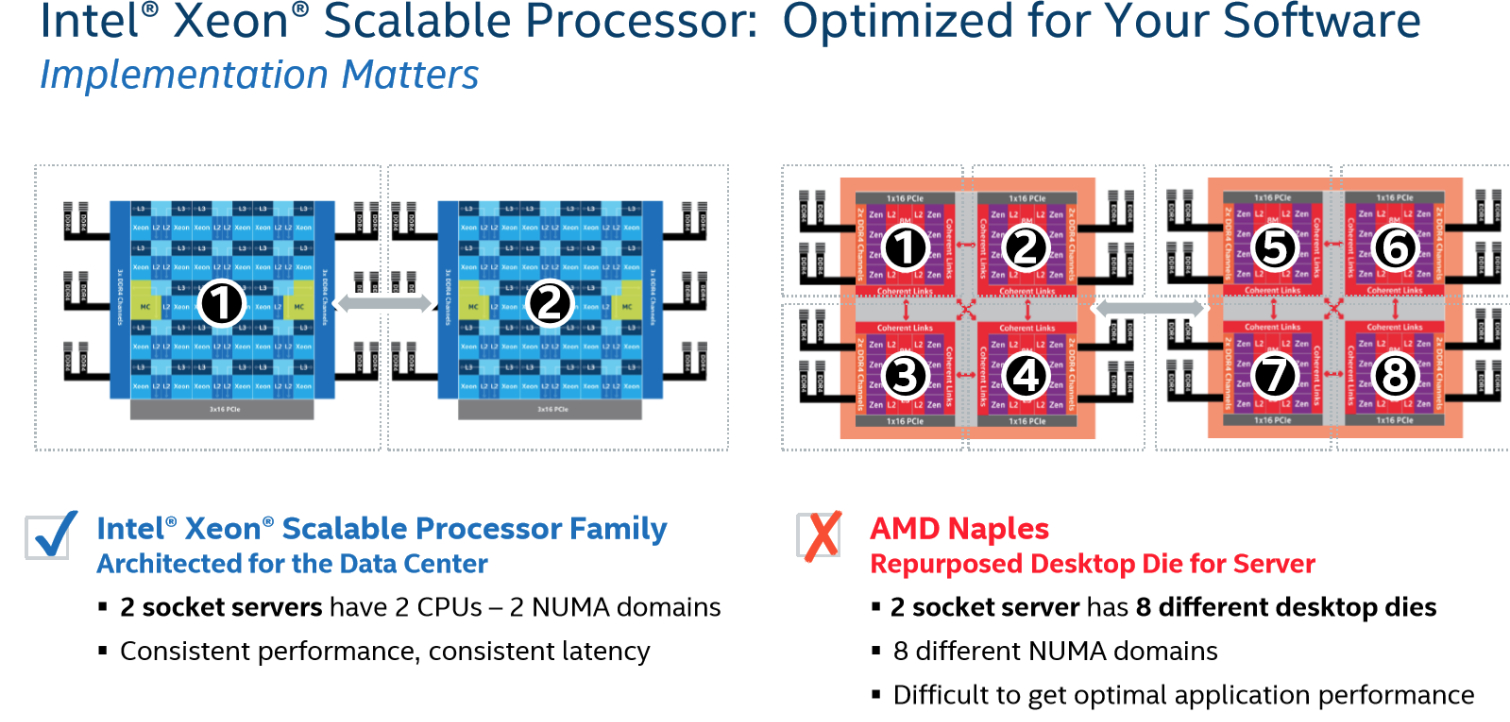

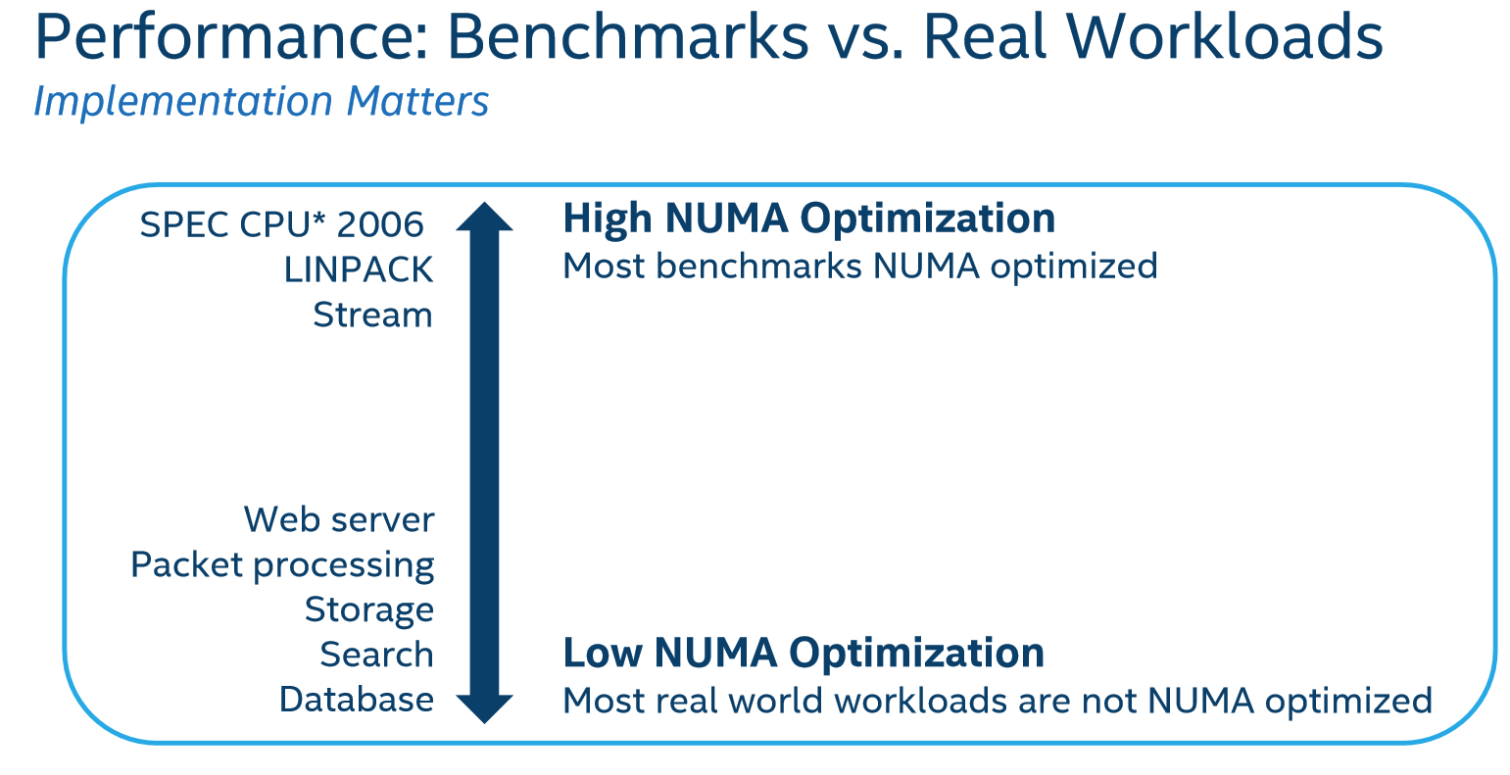

AMD offsets those penalties by establishing four different NUMA (Non-Uniform Memory Access) nodes per EPYC processor, or eight nodes per dual-socket server. During its workshop, Intel said that'll restrict the platform's scaling in many workloads. In contrast, Intel's mesh architecture provides direct access to all six memory channels, which is purportedly better suited to in-memory databases and HPC applications.
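To put the node counts in perspective, the sketch below works out what share of a dual-socket server's memory channels is local to any one NUMA node. It assumes every channel is populated evenly and that each Xeon socket is exposed as a single NUMA node; both are our simplifications, not something stated in Intel's slides.

```python
# Share of a dual-socket server's memory channels that are local to
# one NUMA node, using the channel and node counts from the article.
# Assumptions: evenly populated channels, one NUMA node per Xeon socket.
def local_channel_share(channels_per_socket, nodes_per_socket, sockets=2):
    """Fraction of all installed channels that are local to one node."""
    local = channels_per_socket / nodes_per_socket
    total = channels_per_socket * sockets
    return local / total

epyc_share = local_channel_share(channels_per_socket=8, nodes_per_socket=4)
xeon_share = local_channel_share(channels_per_socket=6, nodes_per_socket=1)

print(f"EPYC: {epyc_share:.1%} of channels are local to each of 8 nodes")
print(f"Xeon: {xeon_share:.1%} of channels are local to each of 2 nodes")
```

A smaller local share means a workload that is not NUMA-aware makes more of its memory accesses remotely, which is the crux of Intel's scaling argument.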

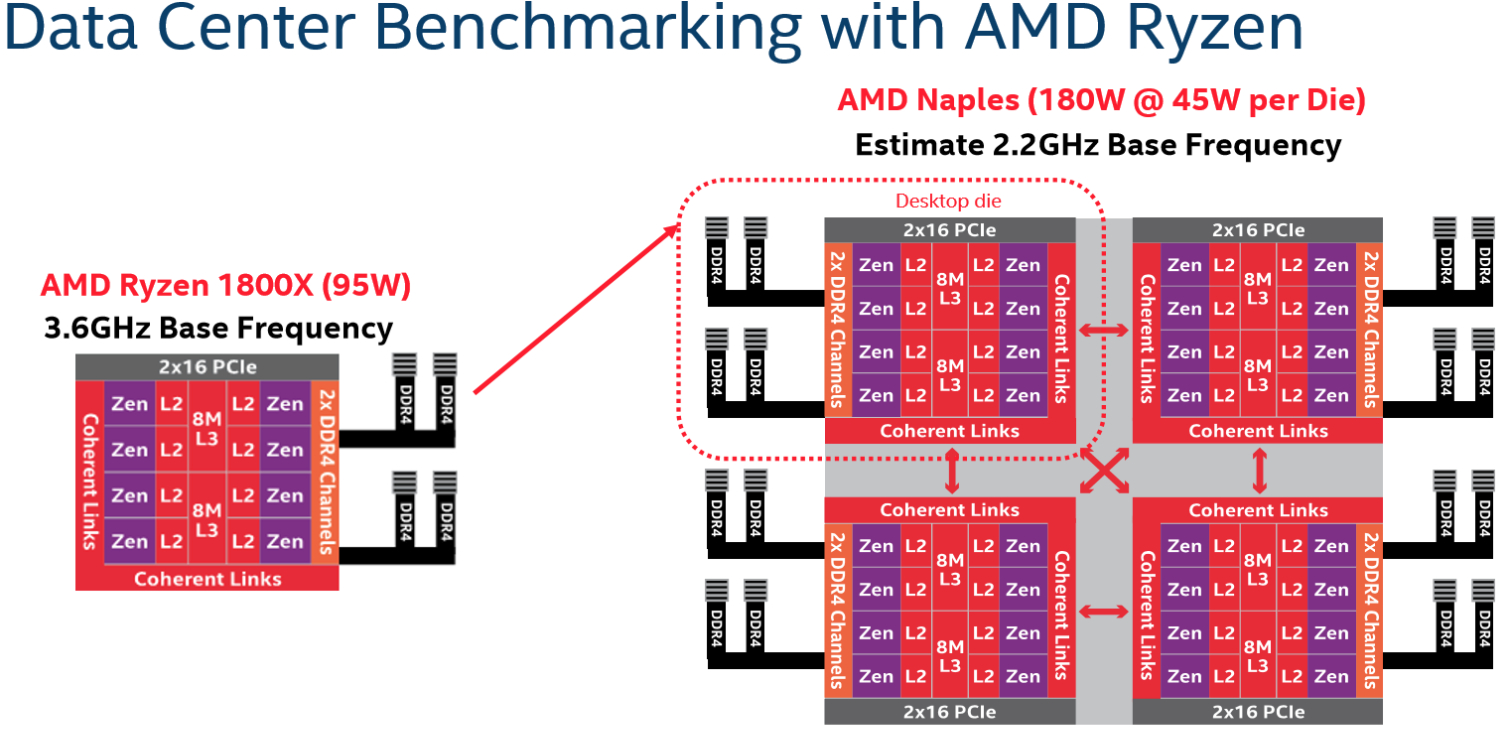

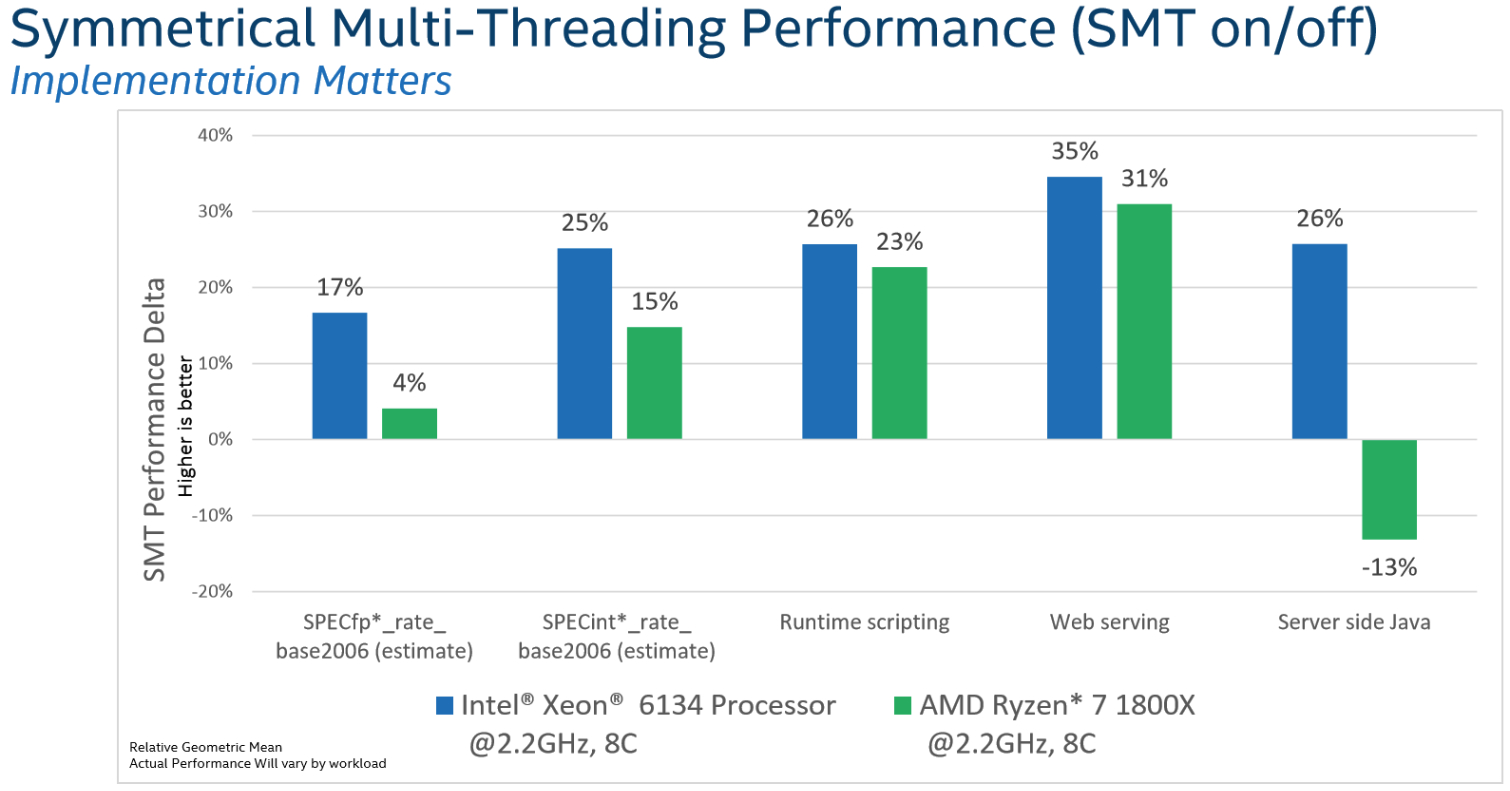

Intel doesn't have access to EPYC processors (though we're guessing it'll be one of AMD's first customers). Instead, the company used a 95W eight-core Ryzen 7 1800X to project AMD's base frequency for the 180W parts. Basic math dictates that AMD has to drop the per-die TDP to 45W to fit four of them into a 180W envelope. This led Intel to guess at a 2.2 GHz base frequency. AMD announced its product line-up after the workshop we attended, and Intel's frequency projections were close.
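The "basic math" is a straight division of the socket's power envelope. The sketch below reproduces only that step. It does not attempt to model how Intel turned a 45W budget into a 2.2 GHz estimate, because the slides discussed here do not describe that method.

```python
# Per-die power budget implied by fitting four dies into one socket.
SOCKET_TDP_W = 180        # EPYC top-bin TDP quoted in the article
DIES_PER_SOCKET = 4
RYZEN_1800X_TDP_W = 95    # the single-die desktop part Intel tested

per_die_w = SOCKET_TDP_W / DIES_PER_SOCKET
reduction = 1 - per_die_w / RYZEN_1800X_TDP_W

print(f"per-die budget: {per_die_w:.0f} W")
print(f"reduction vs. Ryzen 7 1800X: {reduction:.0%}")
```

Each die gets a little less than half the power of the desktop chip, which is why a lower base clock was a safe guess.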

Since it didn't take a stab at AMD's boost clock rates, Intel locked its own Skylake cores to a similar 2.2 GHz and conducted a series of memory/cache latency and memory/PCIe bandwidth tests. The company claims EPYC's access to "far" L3 cache will weigh in at roughly the same latency as going to DDR4, which our sister site AnandTech confirmed in its testing. Intel also pointed out that EPYC's "near" L3 access is actually faster than Skylake's. That might benefit some workloads.

Each Zeppelin die includes 32 PCIe 3.0 lanes. According to Intel, EPYC's per-core PCIe bandwidth outweighs what the memory controller can do, creating an imbalance when copying data into memory. Intel also believes the 50 GB/s interconnect bandwidth (which AMD officially specifies at 42.6 GB/s) creates contention and restricts EPYC's usable PCIe and memory bandwidth across different dies and sockets.
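A rough way to sanity-check the imbalance claim is to compare the theoretical bandwidth of 32 PCIe 3.0 lanes with that of two DDR4 channels at AMD's quoted 21.3 GB/s each. The sketch uses the standard PCIe 3.0 signaling rate (8 GT/s per lane with 128b/130b encoding). Whether Intel counted one direction or both is not stated, so that part is our assumption and the code prints both.

```python
# Theoretical PCIe 3.0 bandwidth of one die's lanes vs. its local memory.
# Assumption: Intel's comparison method is unknown, so both the
# one-direction and the bidirectional PCIe totals are shown.
GT_PER_SEC = 8e9          # PCIe 3.0 transfers per second per lane
ENCODING = 128 / 130      # 128b/130b line-code efficiency
LANES = 32                # PCIe lanes per Zeppelin die
MEM_CHANNEL_GBS = 21.3    # AMD's per-channel DDR4 figure
LOCAL_CHANNELS = 2

lane_gbs = GT_PER_SEC * ENCODING / 8 / 1e9   # bits -> bytes -> GB/s
pcie_one_way_gbs = lane_gbs * LANES
pcie_both_ways_gbs = 2 * pcie_one_way_gbs
local_memory_gbs = MEM_CHANNEL_GBS * LOCAL_CHANNELS

print(f"PCIe, one direction:   {pcie_one_way_gbs:.1f} GB/s")
print(f"PCIe, both directions: {pcie_both_ways_gbs:.1f} GB/s")
print(f"local memory:          {local_memory_gbs:.1f} GB/s")
```

On these theoretical numbers, PCIe exceeds local memory bandwidth only when traffic in both directions is counted, so the size of the imbalance depends heavily on the workload.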

Intel tested simultaneous multi-threading (SMT) by toggling the feature on a Ryzen 7 1800X and found that it didn't deliver as much of a boost as Hyper-Threading. The server-side Java test even slowed down, which Intel conceded could be due to a bug in the software or BIOS. By contrast, AnandTech recorded an SMT scaling advantage for the EPYC 7601 in the SPEC CPU2006 SMT integer performance test. The processor offered an average boost of 28% compared to Intel's 20%.


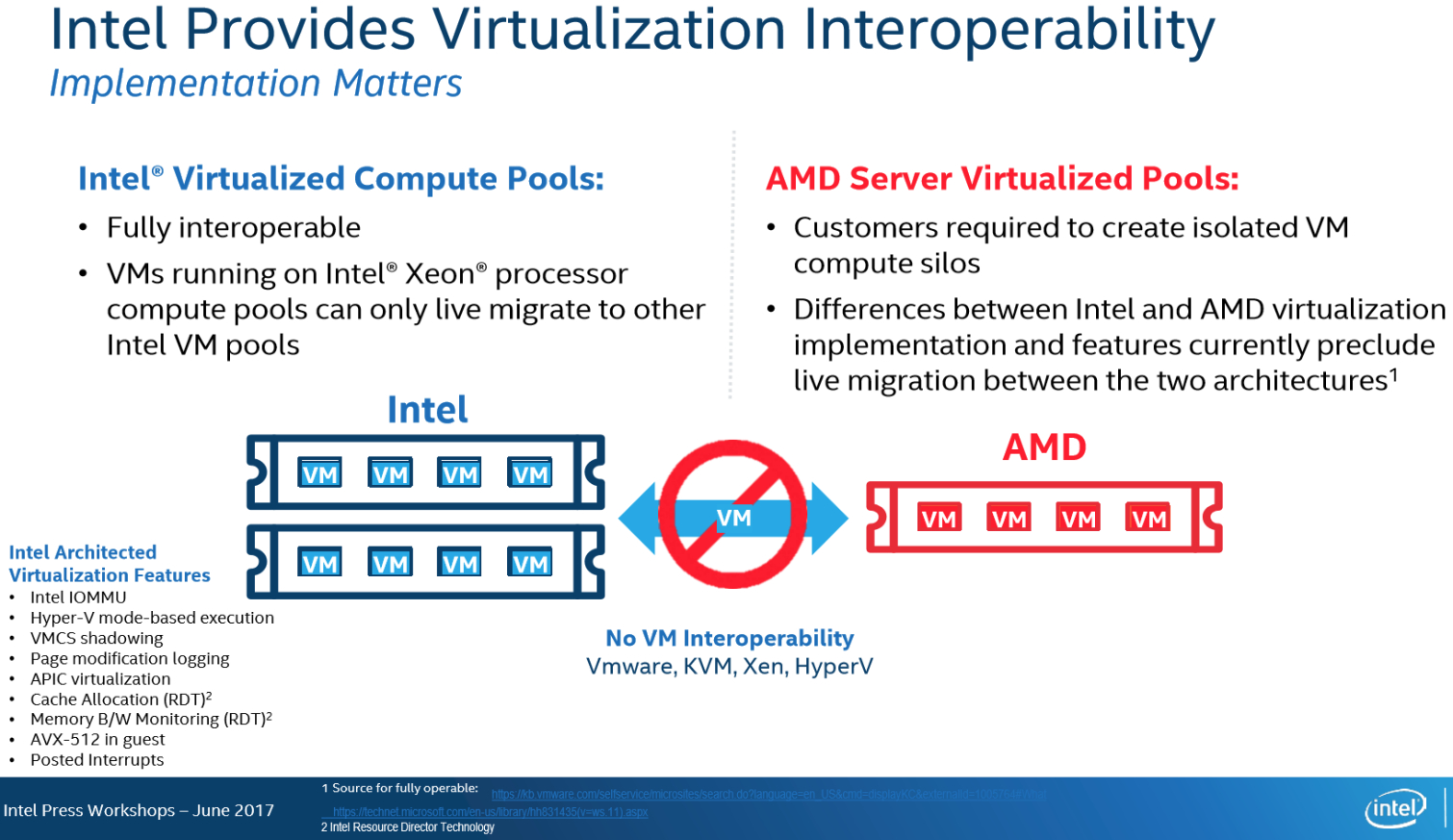

Gartner estimated in 2016 that many organizations had virtualized over 75% of their infrastructure, so VM performance is a key requirement for modern deployments. Intel claimed that EPYC has "No VM Interoperability" with VMware, KVM, Xen, or Hyper-V. After Intel's presentation, however, both VMware and Microsoft made an appearance at AMD's launch event, and both are listed, along with Xen and KVM, on AMD's hypervisor partner list.

Intel also pointed out there isn't a way to support live VM migration between Intel and AMD systems. Of course, the general idea is that it will be difficult to migrate from Intel's platforms to AMD's EPYC. It also creates an isolated VM compute silo if you use AMD processors. The work-around would be simply powering down the VM and migrating between platforms.

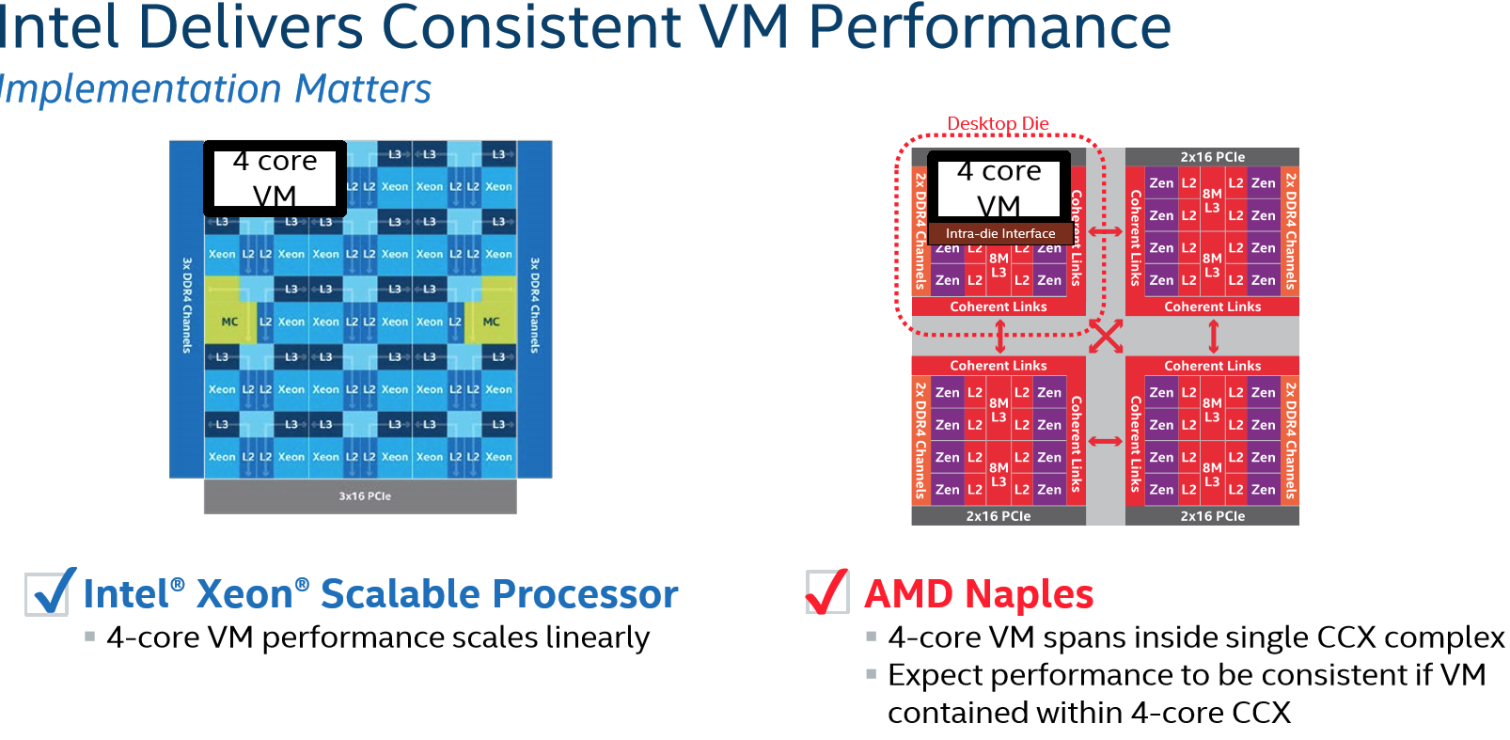

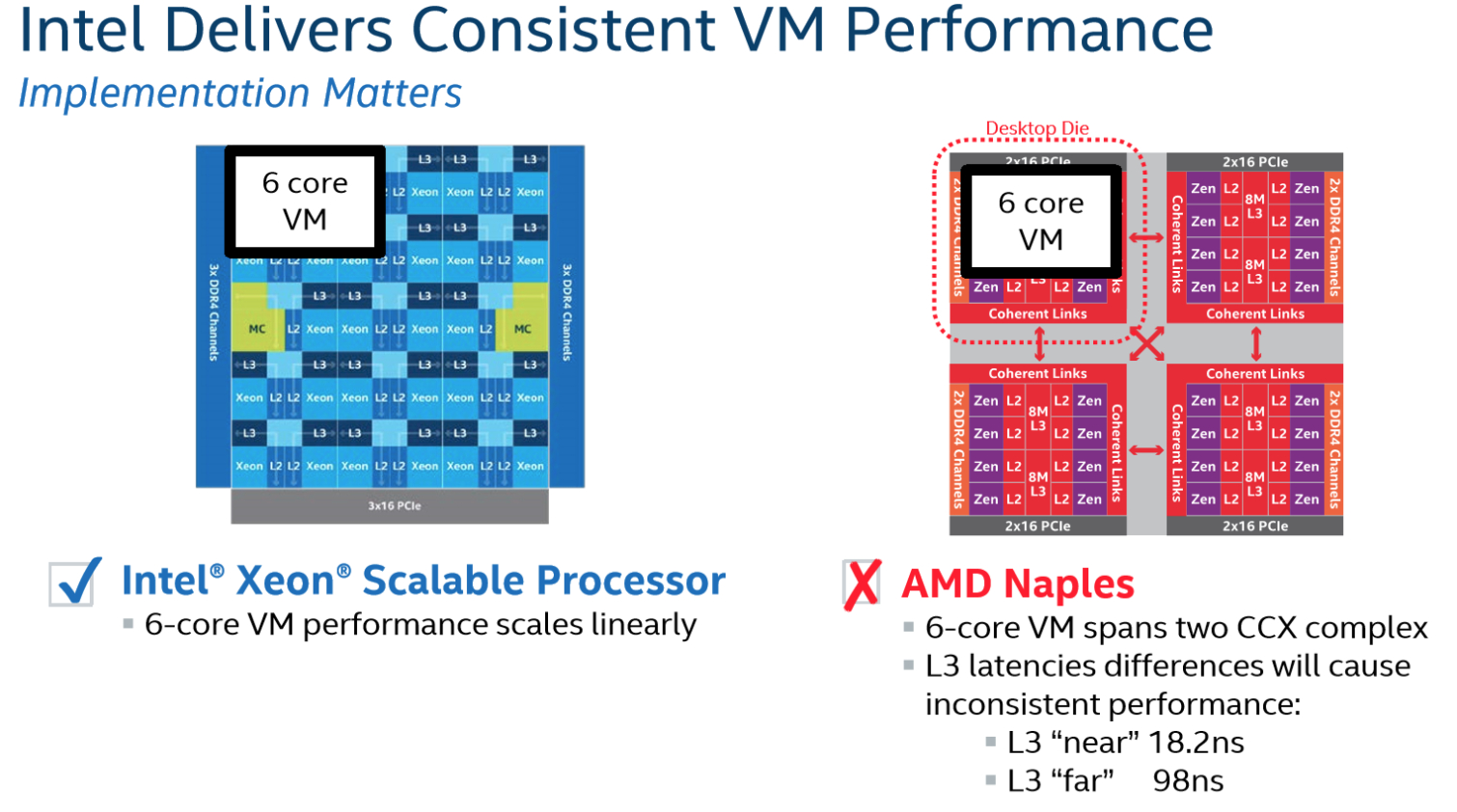

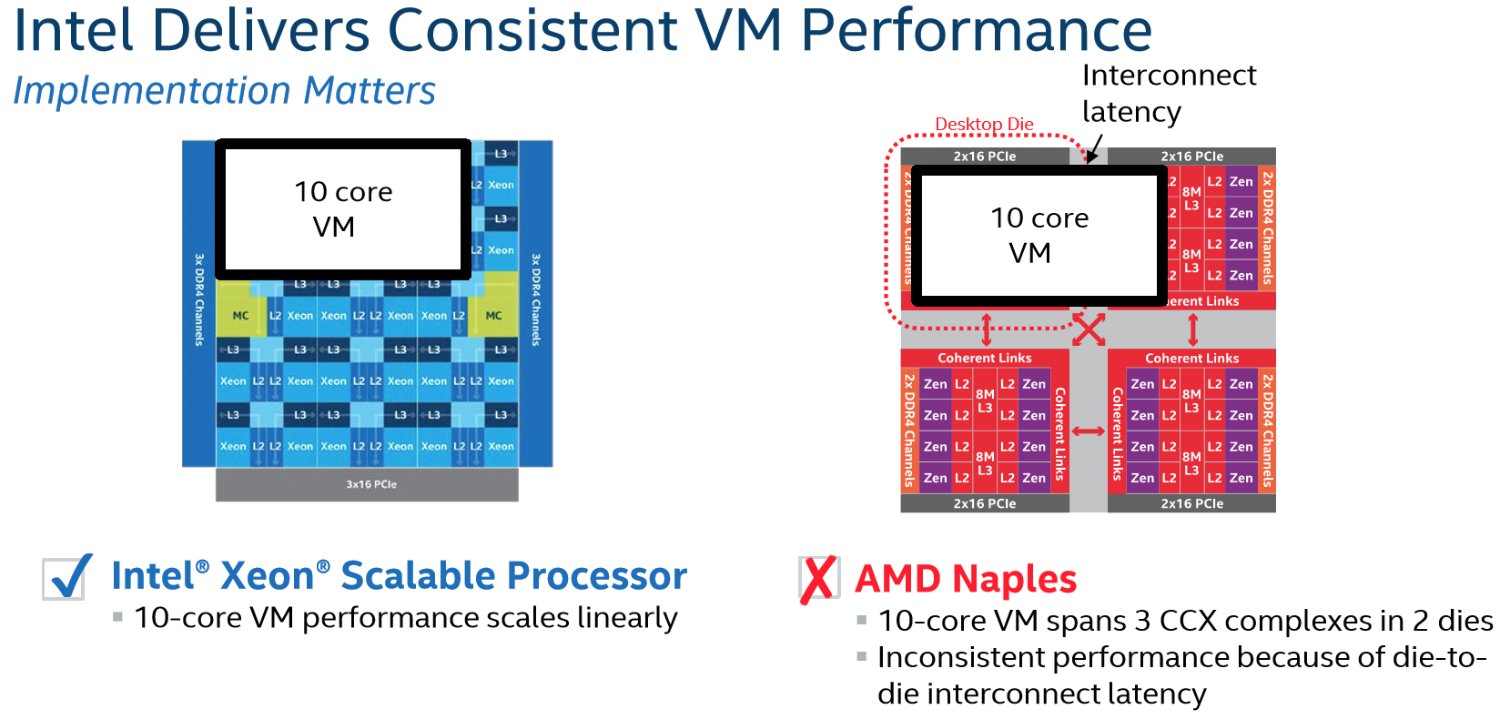

Doubling down on its opinion of EPYC for virtualized workloads, Intel argued that VMs spanning multiple CCXes, such as a six-core VM, would suffer inconsistent performance due to higher L3 latency. Moreover, moving out to 10 cores would span two dies, imposing even worse performance due to die-to-die interconnect latency. These theories do make technical sense. But without actual silicon, it's impossible to quantify the impact for certain.
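Intel's six-core and 10-core examples follow from the layout of the die: four cores per CCX and two CCXes (eight cores) per die. The sketch below computes the smallest number of CCXes and dies a VM can occupy, assuming one vCPU per physical core, all cores enabled, and ideal placement by the hypervisor (all our assumptions; real schedulers may spread a VM more widely).

```python
import math

CORES_PER_CCX = 4   # Zen Core Complex
CORES_PER_DIE = 8   # two CCXes per Zeppelin die

def min_span(vcpus):
    """Smallest number of CCXes and dies a VM of this size can occupy,
    assuming one vCPU per physical core and ideal placement."""
    ccxes = math.ceil(vcpus / CORES_PER_CCX)
    dies = math.ceil(vcpus / CORES_PER_DIE)
    return ccxes, dies

for vcpus in (4, 6, 10):
    ccxes, dies = min_span(vcpus)
    print(f"{vcpus}-core VM: at least {ccxes} CCX(es) on {dies} die(s)")
```

A four-core VM can stay inside one CCX, a six-core VM has to cross a CCX boundary, and a 10-core VM has to cross a die boundary, which is where Intel expects the largest penalties.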

Notice that the "Desktop Die" messaging pops up in these slides as well...


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Aspiring techie: This is something I'd expect from some run-of-the-mill company, not Chipzilla. Shame on you Intel.

bloodroses: Just like every political race, here comes the mudslinging. It very well could be true that Intel's Data Center is better than AMD's Naples, but there's no fact from what this article shows. Instead of trying to use buzzwords only like shown in the image, back it up. Until then, it sounds like AMD actually is onto something and Intel actually is scared. If AMD is onto something, then try to innovate to compete instead of just slamming.

redgarl: LOL... seriously... track record...? Track record of what? Track record of ripping off your customers Intel?

Phhh, your platform is getting trash in floating point calculation... 50%. And thanks for the thermal paste on your high end chips... no thermal problems involved.

InvalidError:

19950405 said: All I see is cheap-shots, kind of low for Intel.

To be fair, many of those "cheap shots" were fired before AMD announced or clarified the features Intel pointed fingers at.

That said, the number of features EPYC mysteriously gained over Ryzen and ThreadRipper show how much extra stuff got packed into the Zeppelin die. That explains why the CCXs only account for ~2/3 of the die size.

redgarl: To Intel, PCIe Lanes are important in today technology push... why?... because of discrete GPUs... something you don't do. AMD knows it, they knows that multi-GPU is the goal for AI, crypto and Neural Network. This is what happening when you don't expend your horizon.

It's taking us back to the old A64.

-Fran-: It's funny...

- They quote WTFBBQTech.

- Use the word "desktop die" all over the place without batting an eye on their own "extreme" platform being handicapped Xeons.

- No word on security features. I guess omission is also a "pass" in this case.

This reads more like a scare threat to all their customers out there instead of trying to sell a product. Miss Lisa Su is doing a good job it seems.

Cheers!

InvalidError:

19950713 said: - Use the word "desktop die" all over the place without batting an eye on their own "extreme" platform being handicapped Xeons.

The extra server-centric stuff (crypto superviser, the ability for PCIe lane to also handle SATA and die-to-die interconnect, the 16 extra PCIe lanes per die, etc.) in Zeppelin didn't magically appear when AMD put EPYC together... so technically, Ryzen chips are crippled EPYC/ThreadRipper dies.

-Fran-:

19950773 said: The extra server-centric stuff (crypto superviser, the ability for PCIe lane to also handle SATA and die-to-die interconnect, the 16 extra PCIe lanes per die, etc.) in Zeppelin didn't magically appear when AMD put EPYC together... so technically, Ryzen chips are crippled EPYC/ThreadRipper dies.

I don't know if you're agreeing or not... LOL.

Cheers!