MIT Researchers Animate Real Objects From Seconds Of Prerecorded Video Footage

The line between digital animation and real-world film has been getting blurrier for decades. As rendering and recording technology has improved, so have the visuals depicted on screen, but there’s still something awkward about a digital character interacting with a real environment. Hollywood studios get around this problem by adding digital environmental assets for the digital characters to interact with (example shown in the video below). This process can be costly and time-consuming, which rules it out for many applications.

A small team of researchers at MIT has developed a process that could change all of that, though.

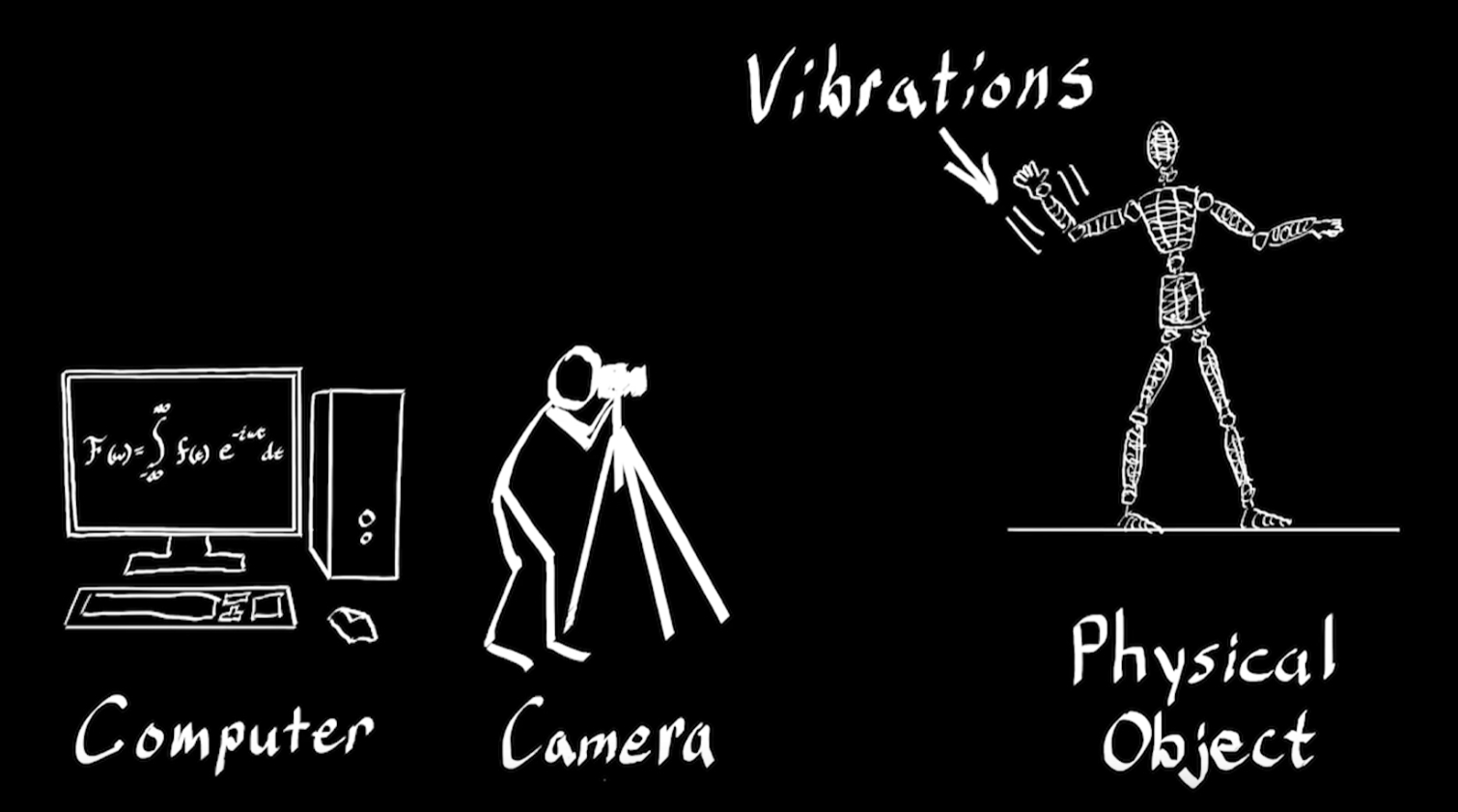

Interactive Dynamic Video is the result of a video analysis technique developed by Abe Davis, Justin G. Chen, and Fredo Durand at the MIT Dept. of Civil and Environmental Engineering. The process involves analyzing the vibration characteristics of objects and then using that information to create an interactive simulation of the object.

To accurately simulate how an object will react to various forces, Davis and his colleagues isolate different “vibration modes,” each of which is defined by a movement frequency and the shape the object takes as it vibrates at that frequency. The simulation model relies on this data to calculate how the object would react to being touched or pulled.
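As a rough illustration of the idea of a vibration mode (and not the researchers' actual algorithm), the sketch below estimates the dominant frequency of a tracked point's motion and then uses it to predict how a few points would move after a virtual poke. Everything in it is an assumption made for illustration: the synthetic 2 Hz motion track, the damping ratio, the three-point mode shape, and the helper names `dominant_frequency`, `impulse_response`, and `point_displacements`.

```python
import cmath
import math


def dominant_frequency(samples, fps):
    """Return the frequency (Hz) of the strongest non-DC bin of a naive DFT."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    best_bin, best_power = 1, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(centered)
        )
        power = abs(coeff) ** 2
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * fps / n


def impulse_response(freq_hz, damping_ratio, impulse, t):
    """Displacement of a unit-mass damped oscillator t seconds after an impulse."""
    omega = 2 * math.pi * freq_hz
    omega_d = omega * math.sqrt(1 - damping_ratio ** 2)
    decay = math.exp(-damping_ratio * omega * t)
    return (impulse / omega_d) * decay * math.sin(omega_d * t)


def point_displacements(mode_shape, modal_coordinate):
    """Scale the per-point mode shape by the current modal coordinate."""
    return [weight * modal_coordinate for weight in mode_shape]


# Hypothetical input: one tracked point swaying at 2 Hz, 5 seconds at 30 fps.
fps = 30
track = [math.sin(2 * math.pi * 2.0 * i / fps) for i in range(5 * fps)]
freq = dominant_frequency(track, fps)

# Hypothetical mode shape: the base stays put, the tip moves the most.
mode_shape = [0.0, 0.4, 1.0]
damping_ratio = 0.05  # assumed value, not measured from any video

# Response to a unit "poke" shortly after it happens and two seconds later.
early = impulse_response(freq, damping_ratio, 1.0, 0.125)
late = impulse_response(freq, damping_ratio, 1.0, 2.125)
print(freq, point_displacements(mode_shape, early))
```

A real object has many modes, and a fuller simulation would sum the responses of several of them, each with its own frequency, damping, and shape. The single-mode case is kept here only to show how a frequency and a shape together determine the motion.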

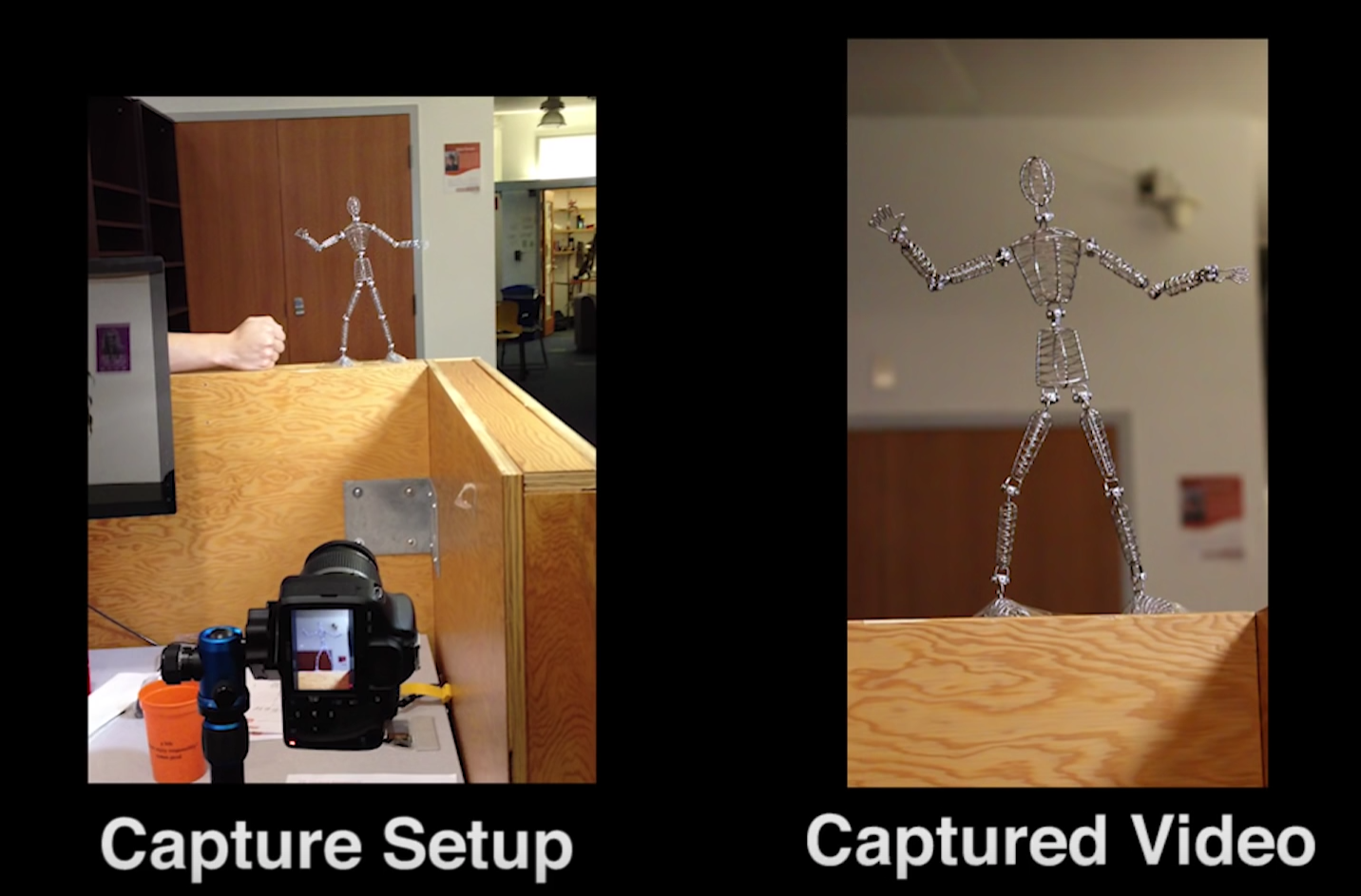

You don’t need a lot of footage to create a realistic simulation, either. The team was able to create a simulation of a wireframe figure using just five seconds of video footage. The wire figure wouldn’t be a difficult object to animate, but this process can be used on much more complex structures, such as a shrub. The team recorded less than a minute of video of a shrub blowing in the wind and was able to simulate its physical behavior with that information.

Davis said that there are a number of potential applications for such a technology. He suggested that Interactive Dynamic Video could be used for structural health monitoring for bridges and other infrastructure, but he expects that it will be used mostly for low-cost special effects. The technique can be used to add realistic animations to live-action film.

Interactive Dynamic Video was part of Abe Davis’s PhD dissertation at MIT. There are no current plans to create a commercial product based on this technique, but MIT will license the use of the patent. You can find more information on the project’s website.


Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.


turkey3_scratch For anybody who just read the article, you have to watch the attached video to really see how cool and groundbreaking this is.

Kalimni Okay, that's actually really cool. I wonder if this could be applied to video games to generate more realistic physics animations.

WFang and if your attention span is particularly short, skip to the fun part of the video at 3m1s:

https://youtu.be/4f09VdXex3A?t=3m1s

anbello262 This is really a groundbreaking development. I sincerely hope this doesn't die in the underground.

Best possible outcome would be to have a popular open source implementation of this, for indie developers and small studios.

This is particularly important for future AR, I believe. Having the digital and physical world interact is the basic principle of AR, after all.