Input latency is the all-too-frequently missing piece of framegen-enhanced gaming performance analysis

Low input latency is key to a good framegen experience. Let's (try to) measure it.

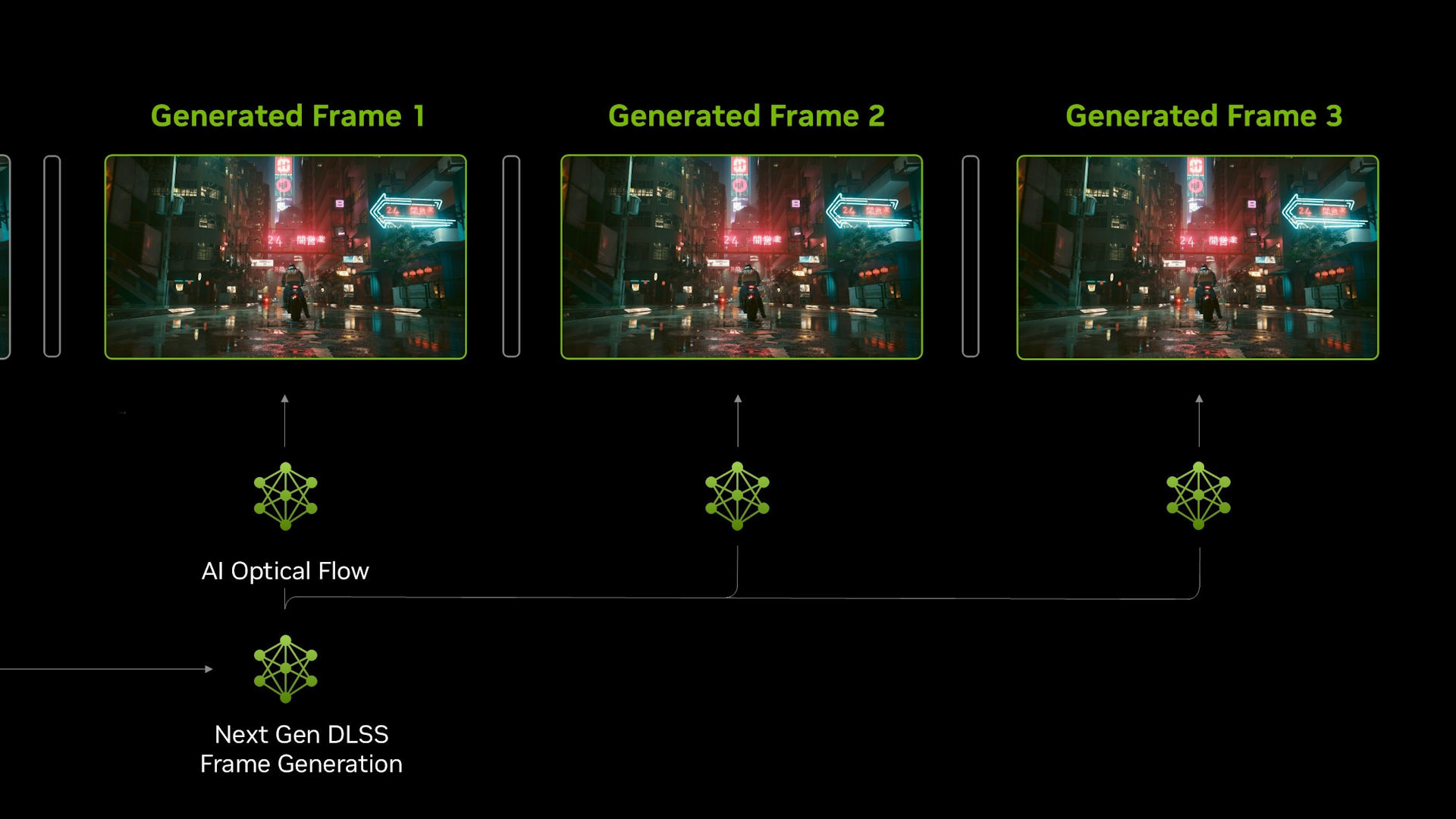

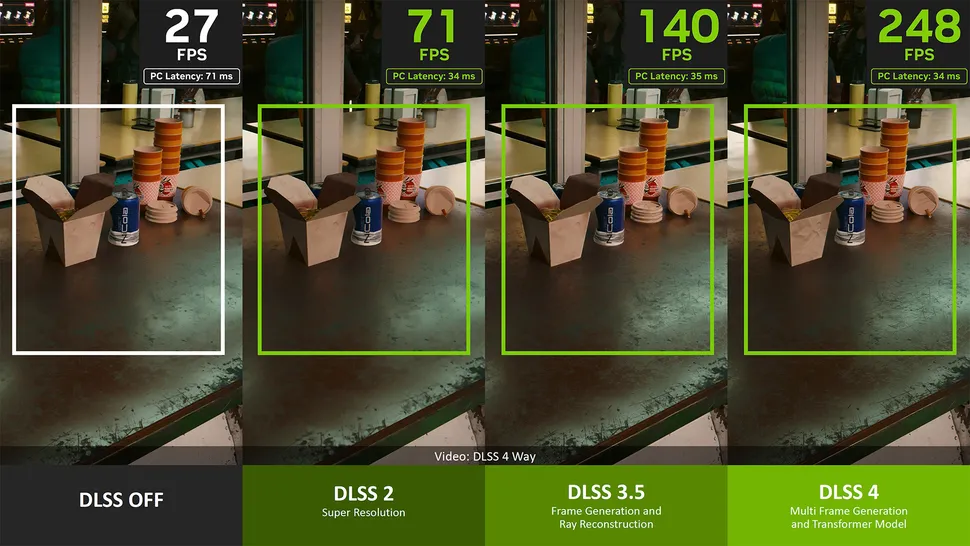

We live in rapidly changing times for PC graphics. More and more pixels are being generated by AI or “neural” rendering techniques. The DLSS, FSR, and XeSS upscalers let us boost frame rates at will in exchange for small dings to image quality. Frame generation techniques, especially Nvidia’s multi-frame generation tech on its Blackwell cards, let us boost them even higher still with the click of an in-game toggle.

When implemented well, we think MFG can be an improvement to the gameplay experience. Take crushing titles like Alan Wake II and Cyberpunk 2077. On a high-refresh-rate, variable-refresh-rate monitor, both games look pretty choppy at 45 or 50 FPS if you’re running them at demanding settings, even with an assist from a monitor's low framerate compensation.

Blackwell’s multi-frame generation can turn that choppy ride into a smooth experience by pushing output frame rates far higher up in a display’s refresh rate range. There are some cases where MFG’s seams are still visible, but we think they’re an acceptable tradeoff for the much smoother output.

There’s just one catch: a game with MFG can look smoother, but it might not feel better to interact with. That’s because its input lag remains tied to the speed at which the base game loop runs. DLSS and other upscalers can improve this latency by boosting baseline performance, but framegen and MFG don’t.

We’ve frequently, albeit informally, described this need for a good input lag baseline in past coverage. (See also our ruminations on framegen performance in Black Myth Wukong.) As framegen and MFG both seem poised to become a bigger and bigger part of PC gaming going forward, we think that there needs to be a more formal, empirical way of communicating this latency baseline.

Having an easy way to quantify both input lag and output frame rate lets us communicate better with everybody about what we're seeing. Exploring the relationship between baseline frame rate, input lag, and framegen-enhanced output frame rates can also help us work back from manufacturer info to determine whether claims were made with an acceptable input latency as a baseline.

We already have the tools to at least approximately capture this latency. Nvidia’s own FrameView application provides a “PC Latency” metric, while Intel’s PresentMon exposes a measurement called “All Input to Photon Latency,” which can be optionally captured as part of its overall performance logging.


We've found game and GPU vendor compatibility potholes with both of these applications, and we have some reservations about these entirely software-based metrics, but higher latency measurements from them correlate to a notably worse-feeling gameplay experience, and that’s good enough as a starting point.
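For readers who want to try this themselves, here is a minimal sketch of how a captured log could be reduced to a few summary numbers. It assumes the tool writes a CSV with one row per frame and one latency column in milliseconds. The column name used here, `MsPCLatency`, is our assumption for a FrameView log (a PresentMon capture would use its own name for the input-to-photon column), so check the header row of your own file. The tiny log in the example is made up purely to show the call shape and is not measured data.

```python
import csv
import io
import math
import statistics


def summarize_latency(csv_text, column):
    """Return (mean, median, worst) in ms for one latency column.

    Cells that are blank or non-numeric (for example "NA" on frames
    without a latency sample) are skipped rather than counted as zero.
    """
    samples = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        cell = (row.get(column) or "").strip()
        try:
            value = float(cell)
        except ValueError:
            continue  # not a number, ignore this frame
        if math.isfinite(value):
            samples.append(value)
    if not samples:
        raise ValueError(f"no numeric samples in column {column!r}")
    return statistics.mean(samples), statistics.median(samples), max(samples)


# Hypothetical four-frame log, only to demonstrate usage.
demo_log = (
    "MsBetweenPresents,MsPCLatency\n"
    "5.2,48.0\n"
    "5.1,NA\n"
    "5.3,52.0\n"
    "5.0,56.0\n"
)

mean_ms, median_ms, worst_ms = summarize_latency(demo_log, "MsPCLatency")
print(f"mean {mean_ms:.1f} ms, median {median_ms:.1f} ms, worst {worst_ms:.1f} ms")
```

Reporting the median and worst case alongside the mean is a design choice on our part: a handful of long-latency frames can be felt in play even when the average looks fine.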

Most importantly, communicating this measurement alongside framegen-enhanced output makes for more honest marketing. Nvidia apparently knows this, since it touted low PC latency as an advantage in Cyberpunk 2077 when it introduced MFG alongside the RTX 5090. In contrast, it’s conspicuously absent from the recently launched RTX 5050’s MFG-heavy official marketing materials.

In any case, if a graphics card isn't contributing to a good input-to-photon latency to begin with, pumping its output frame rate with framegen or MFG—and only communicating that figure—doesn’t show the complete picture.

We wanted to see if quantifying this latency provides a more grounded description of what it’s like to game in a future where output frame rates might be less about raw power and more about toggling in-game settings to taste.

Put your cards on the table

Our proposal here isn't particularly original, but it needs formalizing regardless: there is a maximum acceptable latency threshold with framegen enabled (call it the MALT) beyond which a given game starts to feel more and more uncoupled from user input. This unpleasant behavior presents itself in various ways, like "rubber banding" or "swimminess."

When talking about framegen performance, we believe companies and testers should include the MALT they considered “acceptable” when using those techniques to boost the output frame rate.

Lower MALTs will require higher baseline performance, whether through beefier hardware or lighter in-game settings. And there is a ceiling past which a MALT will be too high to ensure a good interactive experience.

What makes for a good MALT? It’s likely to vary by game and by game type. Our experience with Alan Wake II and MFG so far suggests that any input-to-photon latency below 30 ms is excellent, 30-45 ms feels great, 45-60 ms is usable, and anything too far above that will start to cause perceptible rubber banding or discontinuity between input and output.

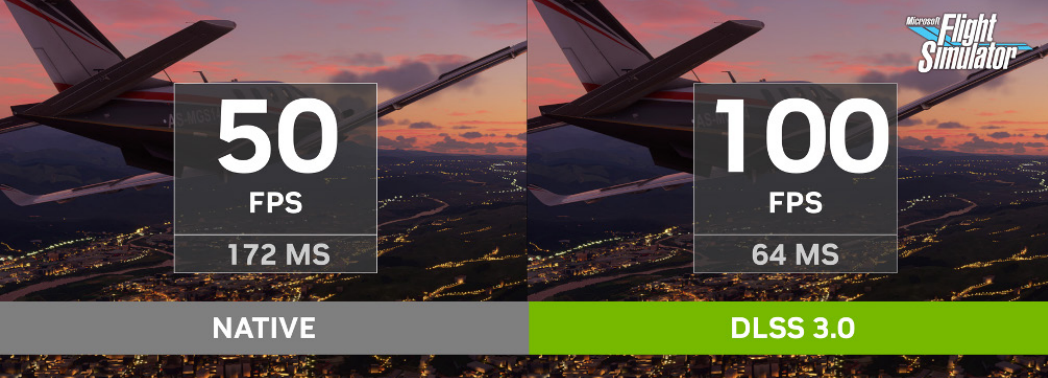
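Those bands are easy to express as a lookup, which can be handy when scanning a pile of captures. The sketch below is only an illustration of the thresholds described above. The function name and labels are ours, and how the exact boundary values (30, 45, and 60 ms) are assigned is an assumption, since the bands are subjective and were only judged in Alan Wake II.

```python
def latency_band(latency_ms):
    """Map an average input-to-photon latency (ms) to a rough feel label.

    Thresholds follow the Alan Wake II impressions in this article;
    the handling of the exact boundary values is an assumption.
    """
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms < 30:
        return "excellent"
    if latency_ms < 45:
        return "great"
    if latency_ms <= 60:
        return "usable"
    return "over budget"


# A few example latencies in milliseconds.
for sample_ms in (25, 39, 47, 55, 172):
    print(f"{sample_ms:>4} ms -> {latency_band(sample_ms)}")
```

Other games, and other players, will likely want different cutoffs, so treat the numbers as parameters to tune rather than constants.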

Why measure this latency directly instead of just using frame rates as a proxy (e.g., treating 60 FPS as a “good enough” foundation on which to enable MFG)? It’s probably an edge case, but Nvidia has highlighted at least one experience where a frame rate that intuitively seems “good enough” does not match up with an acceptable input latency. The company observed Microsoft Flight Simulator running at 50 FPS natively, but with an input latency of 172 ms.

In short: if you can measure it, measure it.

Achievable latency thresholds are also likely to vary from system to system, due to the huge array of peripherals, components, and monitors out there. Your tolerance for input lag may also be tighter or looser than ours. PresentMon and FrameView are free, so the only cost involved in testing this out for yourself is time.

Our testbed for this article uses the following components:

| Component | Model |
|---|---|
| CPU | Ryzen 9 7950X |
| Motherboard | ASUS TUF Gaming X670E-Plus WiFi |
| RAM | 32GB |
| SSD | Inland Gaming Performance Plus 4TB NVMe (PCIe 4.0) |
| Power supply | Corsair RM1000x |
| Monitor | ASUS ROG Strix XG27UCS (4K, 160 Hz) |
| Keyboard | HyperX Alloy Origins 65 |
| Mouse | Logitech G502 |

Exploring MALT-normalized performance

In a world where even the $329-ish RTX 5060 Ti 16GB can push 200 FPS at 1440p with DLSS and MFG 4X in Alan Wake II, we wanted to see what performance analysis might look like if we followed our own advice and normalized to a MALT. We picked 60 ms as our threshold and got to work seeing how we could tune settings on a range of modern GPUs to stay within that range.

Our results are fairly intuitive. The first data column shows the PC latency you get simply from moving up to a more powerful graphics card at the previous card's settings, and the second shows the higher settings we’re able to select within that headroom without going over our 60 ms input latency threshold. Read the chart in a Z pattern to understand the enhancements that a more powerful card gives you for the same MALT.

| Alan Wake II - performance normalized to 60 ms average input latency | FrameView PC latency at n-1 settings | Changes to in-game settings to reach ~60 ms MALT with MFG 4X (High raster preset) | Average output frame rate with MFG 4X and 60 ms MALT |
|---|---|---|---|
| RTX 5060 Ti 16GB | N/A – baseline | 2560x1440 target, DLSS Ultra Perf, no RT | 192 FPS |
| RTX 5070 | ~39 ms | 2560x1440 target, DLSS Performance, Low RT | 202 FPS |
| RTX 5070 Ti | ~47 ms | 2560x1440 target, DLSS Quality, Medium RT | 194 FPS |
| RTX 5080 | ~55 ms | 3840x2160 target, DLSS Ultra Perf, High RT | 190 FPS |
| RTX 5090 | ~42 ms | 3840x2160 target, DLSS Balanced, Ultra RT | 190 FPS |

Buying a more powerful GPU for Alan Wake II, at least, lets you enjoy either lower input latency and higher output frame rates at a given group of settings, or higher resolution and image quality settings at the same 60 ms threshold.

The point here is that if you don’t need the lowest possible input latency, as you might not in a slower-paced experience like Alan Wake II, putting more of your GPU’s resources toward higher-resolution output and richer visuals when you have the latency headroom to do so with framegen is just smart. Monitoring this figure also lets you dial back settings if your given choices result in an unpleasant experience.

One challenge in fine-tuning performance with MFG is that DLSS quality settings only offer us five coarse levels of adjustment today without the use of third-party tools. If we had more intermediate DLSS settings, we could potentially achieve lower input latencies (imagine a value between Ultra Performance and Performance, for example) than our 60 ms threshold while still maximizing image quality within the rendering resources of a given graphics card. We hope that Nvidia explores ways to natively expose finer DLSS granularity in the future to make the most of MFG.
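To see how coarse those steps are, it helps to work out the internal render resolution behind each mode. The sketch below uses the per-axis scale factors commonly cited for DLSS presets. Treat them as assumptions, since a given game can expose different modes or override the ratios, and note that we are counting DLAA (native-resolution input) as the fifth level.

```python
# Commonly cited per-axis render scales for DLSS modes (assumed defaults;
# individual games may differ).
DLSS_SCALE = {
    "DLAA": 1.0,
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(width, height, mode):
    """Return the (width, height) rendered before upscaling to the target."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


target_w, target_h = 3840, 2160  # the 4K target used on our test monitor
for mode in DLSS_SCALE:
    w, h = internal_resolution(target_w, target_h, mode)
    share = (w * h) / (target_w * target_h)
    print(f"{mode:<17} {w}x{h}  ({share:.0%} of output pixels)")
```

At a 4K target, Ultra Performance works out to 1280x720 and Performance to 1920x1080, so the step between them more than doubles the number of rendered pixels. That is the kind of gap an intermediate setting would fill.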

MALTed marketing

Considering latency and frame rates together also helps us analyze a bold question: does MFG let lower-end GeForce cards “beat” their higher-end predecessors? Nvidia infamously claimed the RTX 5070 could beat the RTX 4090 with the full suite of DLSS 4 features enabled. We didn’t test that exact claim, but we did pit the RTX 5080 versus the RTX 4090 to try and understand whether it had any merit.

If we normalize for a 60 ms MALT in Alan Wake II with high raster settings, ultra RT, and a 4K target resolution, the RTX 5080 has to use DLSS Ultra Performance to achieve this target input latency, whereas the Ada flagship can use DLSS Performance for a boost in image quality at the same latency.

The major difference, of course, is that MFG 4X lets the RTX 5080 output an enhanced 190 FPS with DLSS Ultra Performance, for a theoretically much smoother output than the RTX 4090’s 97 FPS with DLSS Performance. The difference in delivered smoothness isn’t as drastic to our eyes as the output frame rates might suggest, but our 160 Hz monitor might be concealing the full magnitude of this improvement.

Use DLSS Ultra Performance on both cards, though, and the RTX 4090’s PC latency drops 25%, to 46 ms on average, and the output frame rate rises to 133 FPS. Even though the RTX 4090’s 2X framegen output still can’t match the RTX 5080’s with MFG 4X at the same settings, we enjoy much lower overall input latency on the RTX 4090 than on the RTX 5080, and some might prefer that more responsive experience.

Looking at both input latency and output frame rates together, the RTX 5080 can deliver theoretically smoother output, but RTX 4090 owners probably shouldn’t go out and upgrade on this basis alone. And it certainly throws cold water on bold claims that even lower-end cards than the RTX 5080 can match or exceed the gaming experience of an RTX 4090 unless the output frame rate is all that you’re measuring.

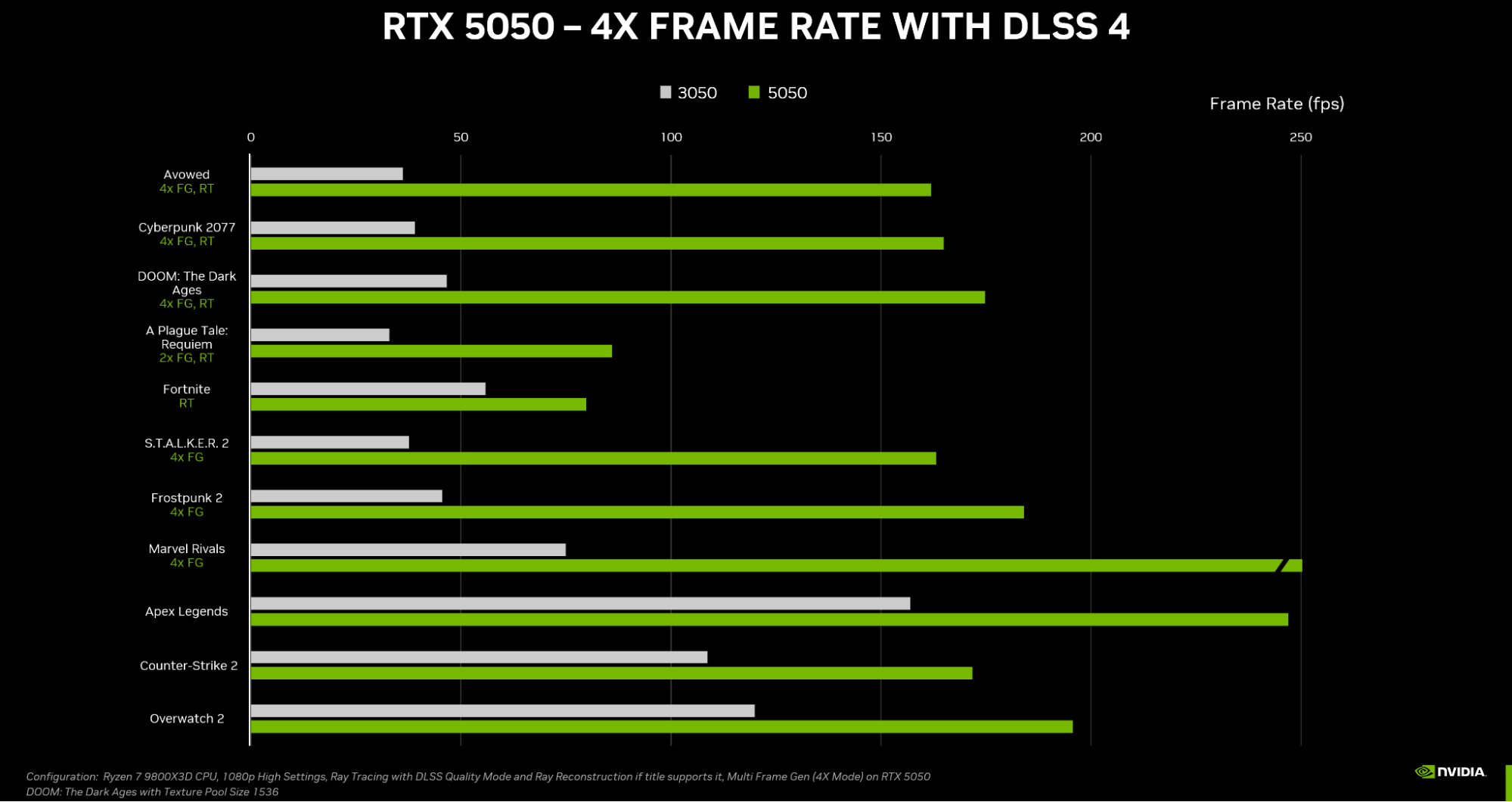

This leads us to Nvidia’s marketing materials for the recently launched RTX 5050. Eyeballing its charts, the company appears to have used a low baseline frame rate for the non-esports titles in its selection. Cyberpunk 2077 and Avowed both appear to cap out around 160 FPS with MFG 4X, implying a baseline of around 40 FPS on average.
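The back-of-the-envelope math here is simple enough to write down. This sketch assumes that each rendered frame is followed by a fixed number of generated frames, so the output rate is just the rendered rate times the framegen multiplier. It ignores frame rate caps and pacing, and the rendered rate with framegen active is generally not the same as the game's frame rate with framegen switched off, because generating frames has its own cost. The figures are the ones quoted in this article.

```python
def rendered_fps(output_fps, multiplier):
    """Estimate rendered (non-generated) frames per second.

    Assumes output = rendered * multiplier, i.e. every rendered frame
    is accompanied by (multiplier - 1) generated frames.
    """
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return output_fps / multiplier


# (label, output FPS, framegen multiplier), all taken from this article.
cases = [
    ("RTX 5050 marketing charts, MFG 4X", 160, 4),
    ("RTX 5080, MFG 4X, DLSS Ultra Performance", 190, 4),
    ("RTX 4090, FG 2X, DLSS Performance", 97, 2),
    ("RTX 4090, FG 2X, DLSS Ultra Performance", 133, 2),
]

for label, output, multiplier in cases:
    print(f"{label}: ~{rendered_fps(output, multiplier):.1f} rendered FPS")
```

Under that assumption, the RTX 5080 and RTX 4090 at our 60 ms MALT are both rendering a little under 50 frames per second, which fits with them landing at a similar latency despite very different output numbers.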

Again, we’ve already seen that intuition doesn’t necessarily serve us well when tying frame rates to a particular game’s input latency, and it’s entirely possible that Nvidia observed an acceptable latency threshold when it tuned settings to achieve that 40-ish FPS. But when you appear to be riding the line like this, it makes disclosing those assumptions all the more important.

Intel recommends a minimum of 40 FPS for use with its Xe Frame Generation tech, but that figure is for developers targeting a good experience on integrated graphics. For higher-end graphics cards, Intel suggests a 60 FPS baseline is ideal. AMD recommends 60 FPS as a performance target before using FSR 3 Frame Generation, period, and also cautions that “sub 30fps frame rate pre-interpolation should be absolutely avoided.”

Nvidia doesn’t have a recommended frame rate for use with MFG that we could find, but it’s also got a trick up its sleeve that Intel and AMD’s methods lack. Nvidia Reflex in DLSS 4 includes Frame Warp, a reprojection technique that we already know from VR. Frame Warp uses a range of data sources to shift output frames to more closely match the user’s most recent inputs, reducing perceived latency.

It’s possible that with Frame Warp and the perceptual latency reduction it purports to offer, MFG can tolerate input frame rates lower than XeFG and FSR—but again, these assumptions can be measured, and they should be communicated. After all, if Nvidia’s framegen solution can robustly tolerate a higher input latency and do more with less on lower-end hardware like the RTX 5050, that’s a competitive advantage over other framegen approaches.

The bottom line - for now

With all this in mind, how should we reason about GPU performance in a world where framegen is more and more common? MFG does not suddenly mean that more powerful GPUs are unnecessary or that lower-end parts can magically eclipse higher-end ones. It’s more of a cherry on top of the power you’re already buying than a leg up on the next card up the stack.

Higher-end GPUs can still give you better image quality at the same input latency or a much lower input latency for the same settings, and you still need their muscle to push demanding games at higher output resolutions, period, even with upscaling in the picture.

What’s also important to remember is that not all framegen techniques are the same, even from the same vendor. Framegen on Nvidia Ada cards looks a bit worse to our eyes than on Blackwell with MFG, and MFG in DLSS 4 includes technologies like reprojection that other vendors’ solutions lack. We didn't test FSR or XeSS frame generation for this article, but they lack the flexibility that MFG's 3X and 4X multipliers give you for better matching output frame rate to your monitor's refresh rate.

On that note, we think the best way to think of MFG is as a bridge to increasing gaming smoothness on today’s high-refresh-rate monitors, even with relatively attainable graphics cards. For just one example, 1440p 240Hz monitors used to be wildly expensive, but you can now grab one for less than $300. The cost of GPU power is obviously not going down at the same rate as those high-refresh-rate monitors.

Recall that the $329-and-up RTX 5060 Ti 16GB can push 200 FPS at 1440p with DLSS Ultra Performance and MFG 4X in Alan Wake II. To reach those same performance figures without MFG at the same settings, you need to use a $3000+ RTX 5090. We know, because we tried.

Unless you’re an esports pro who needs both the lowest possible input latency and high output frame rates, being able to use higher reaches of your monitor’s refresh rate range without spending $3000 on a commensurate GPU is pretty cool.

The worst way to think about MFG is as a route to achieving “playable” output frame rates. This is a false grail. A game running at an unplayable 20 FPS pre-framegen cannot magically be boosted to a “playable” 60 or 80 FPS using MFG, simply because the input latency at such a low native frame rate is likely to exceed any acceptable standard.
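A quick calculation shows why. The sketch below converts a rendered frame rate into the time spent on each rendered frame and compares it with our 60 ms MALT. Treating one frame time as a rough floor on input-to-photon latency is a simplifying assumption for illustration: real latency also includes input sampling, simulation, queuing, and display time, which is how a game can run at 50 FPS and still show far more latency than its frame time would suggest.

```python
MALT_MS = 60.0  # the threshold used in this article's testing


def frame_time_ms(fps):
    """Milliseconds spent per rendered frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Rendered (pre-framegen) frame rates to compare against the budget.
for fps in (20, 40, 60):
    ft = frame_time_ms(fps)
    print(f"{fps} FPS rendered: {ft:.1f} ms per frame, "
          f"{ft / MALT_MS:.0%} of a {MALT_MS:.0f} ms budget")
```

At 20 FPS, a single rendered frame already takes 50 ms, leaving almost nothing for the rest of the input-to-display chain before any generated frames enter the picture.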

Overall, our brief investigation suggests that in a future where framegen is more and more common, both vendors and media alike need to be clearer about the baseline input latency they’re assuming when talking about framegen-enhanced performance. It’s entirely possible for all camps to end up creating marketing materials and performance or image quality analyses that rest on an input frame rate that’s too low and an input latency that’s too high for the end user to actually enjoy.

As the Senior Analyst, Graphics at Tom's Hardware, Jeff Kampman covers everything to do with GPUs, gaming performance, and more. From integrated graphics processors to discrete graphics cards to the hyperscale installations powering our AI future, if it's got a GPU in it, Jeff is on it.


edzieba: Over a decade on from Inside the Second, and it seems the same lessons in GPU performance measurement have to keep being relearned time and time again. We've known for at least that long that measuring, or even plotting, FPS tells you only a fraction of the story on GPU performance.

Thou shalt plot frametimes, not framerates!

GenericUsername109: Yes, measure the time from a key press or a mouse click to in-game response and showing it on the screen. But this would not be only about the rendering pipeline, but also about the game engine itself (which can definitely make things even worse, beyond just a poor GPU/driver lag).

JarredWaltonGPU (replying to edzieba): It's the age-old problem of benchmarks. If sites make charts that only 5% of readers can understand, that's not a great solution. Putting in five charts instead of one doesn't work great either. People (well, most people...) want concise representations of data, not minutia overload. If you only plot frametimes, you still end up with the same problems as only plotting framerates. They're just reciprocals of each other: Frames per second versus seconds per frame. (FPS = 1000 / frametime in ms. Frametime in ms = 1000 / FPS.)

While we're on the subject, there's potential value in doing logarithmic scales of FPS. Because going from 30 to 60 is a much bigger deal than going from 60 to 120, or from 60 to 90. But again, there's nuance to discuss and more people will likely get confused by logarithmic charts. And what base do you use for these logarithmic charts? Because that can change the appearance of the graphs as well.

Measuring latency is helpful and good, but also doesn't tell the full story regardless. It's why all of my reviews for the past couple of years also included a table at the end that showed average FPS, average 1% lows, price, power, and — where reported by FrameView — latency. Like on the 9070 / 9070 XT article, bottom of page 8:

https://www.tomshardware.com/pc-components/gpus/amd-radeon-rx-9070-xt-review/8

(I didn't include that on the 9060 XT review because, frankly, I was no longer a full-time TH editor and didn't want to spend a bunch more time researching and updating prices, generating more charts, etc. 🤷♂️ )

It all ends up as somewhat fuzzy math. Some people will want sub-40ms for a "great" experience and are okay with 100 FPS as long as it gets them there. Others will be fine with sub-60ms or even sub-100ms with modest framerates. I will say, as noted in many reviews and articles, that MFG and framegen end up being hard to fully quantify. Alan Wake 2 can feel just fine with a lower base framerate, with MFG smoothing things out, and relatively high latency. Shooters generally need much lower latency to feel decent. Other games can fall in between.

And engines and other code really matter a lot. Unreal Engine 5 seems to have some really questionable stuff going on under the hood, and it's used in a lot of games. I know there was one game I was poking at (A Quiet Place: The Road Ahead) where even a "native" (non-MFG/non-FG, non-upscaled) 40~45 FPS actually felt very sluggish. Like, if I were to just play the game that way without looking at the performance or settings, I would have sworn that framegen was enabled and that the game was rendering at ~20 FPS and doubling that to 40. But it wasn't, the engine and game combination just ended up feeling very sluggish. Enabling framegen actually made the game feel much better! Weird stuff, but that's par for the course with UE5.

hotaru251: MFG "might" work on high end, but it shouldn't be used on any low end system.

Just like how DLSS was meant to let lower end systems run games better it was abused and is now expected for a min 60fps on those low systems.

FrameGen already shows us that devs will abuse any tech to cheap out on optimizing games and have "framegen on to get 60fps" as their min requirements.

This will be case with MFG if it gets adopted by both sides. It will make gaming an even more awful experience for many.

John Nemesh: tl;dr version: Fake frames are bad...if you want smoother graphics, upgrade your rig.

Nvidia's fake frames are a gimmick, nothing more, nothing less. If it makes the game "feel worse", you shouldn't be using it...AT ALL.

John Nemesh (replying to JarredWaltonGPU): You don't have to dive into minutia...just give RENDERED frame rates in reviews and don't use FAKE frames in them at all.

Alvar "Miles" Udell: The problem with "AI Generated Frames" is that in moderation they're great, especially when used with adaptive refresh rates (Freesync/G-Sync), doubly so for lower refresh rate monitors (not as many frames needed to hit it), but in the levels that they push for DLSS4 marketing there's just too many to make sense. They should be used to "fill in the gaps" to make a game smooth, not as a crutch to "make the game playable".

rluker5: I tried the Lossless Scaling FG to 60 fps just to handle the occasional dips to 45 fps with a second DGPU on my A750 and it looked really good.

But I was playing Expedition 33 and the variable input lag was killing my ability to dodge and parry.