Roundup: Three 16-Port Enterprise SAS Controllers

16-Port SAS RAID Cards Go Head-To-Head

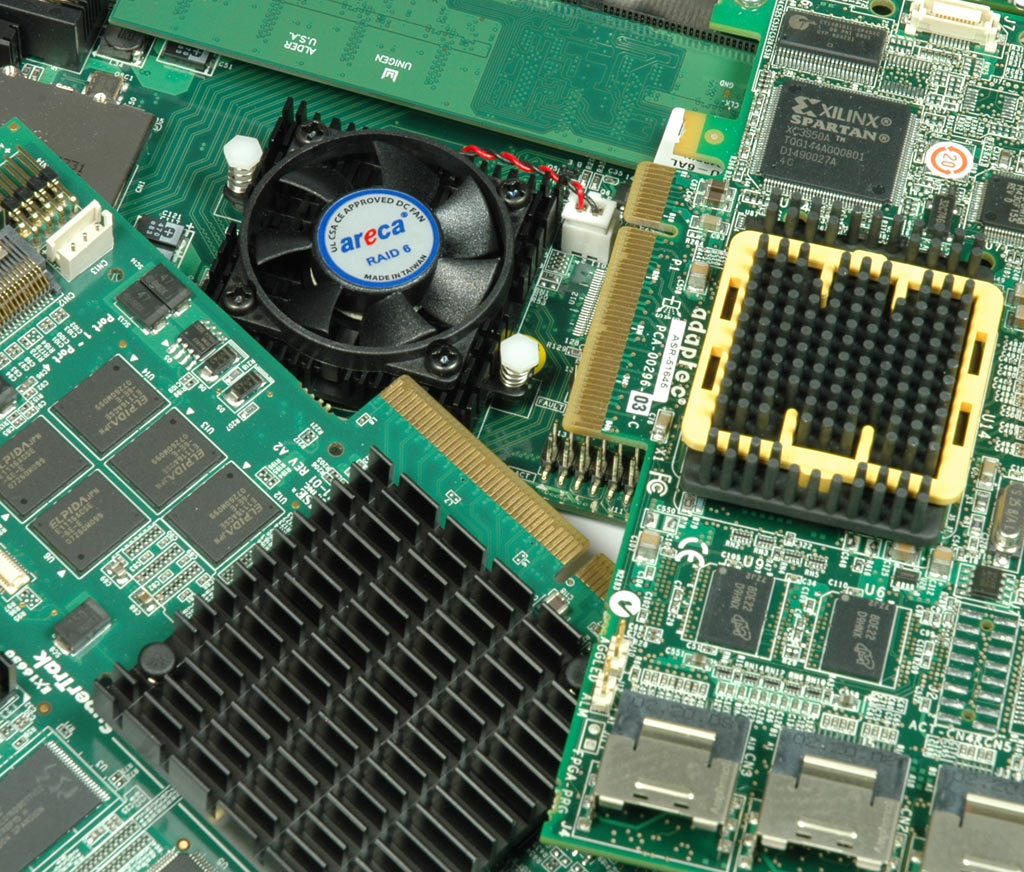

Even entry-level servers come with dual- or quad-core processors and many gigabytes of RAM. But a proper storage subsystem still depends on powerful and flexible host adapters, usually with RAID capabilities. We have three 16-port high-end SAS cards from Adaptec, Areca, and Promise in-house and are ready to put them through their paces in search of a winner.

What SAS is All About

While server storage used to center on adapters and drives employing the Small Computer System Interface (SCSI), today’s interface of choice for Direct Attached Storage (DAS) applications is SAS: Serial Attached SCSI. The parallel SCSI bus ran into insurmountable issues at higher speeds, such as signal skew (differing signal propagation times across the individual bus wires), which is why the industry moved to serial transmission. SAS is a serial point-to-point protocol that doesn’t require external bus termination. It uses 8b/10b encoding, so every 8 bits of data travel as 10 bits on the wire. Today’s implementation runs at 3 Gb/s, with 6 Gb/s coming this year, which translates into 300 MB/s and 600 MB/s of net throughput per port, respectively. On the surface, 300 MB/s may not appear faster than the 320 MB/s of Ultra320 SCSI, but SAS delivers that throughput to each connected device rather than sharing it among all devices on the bus.
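A quick way to check the per-port figures: with 8b/10b encoding only 8 of every 10 line bits carry data, so net throughput is the line rate × 0.8 ÷ 8. The minimal Python sketch below does that arithmetic and contrasts it with a 320 MB/s parallel bus shared by several drives. It assumes 1 MB = 10^6 bytes, ignores SAS framing and command overhead, and treats the shared bus as split evenly between active drives; the function names are illustrative only.

```python
def sas_net_throughput_mb_s(line_rate_gbps: float) -> float:
    """Net payload bandwidth of one SAS link in MB/s (assumes 1 MB = 10**6 bytes).

    8b/10b encoding sends 10 line bits for every 8 data bits, so only 80%
    of the raw line rate carries data; dividing by 8 converts bits to bytes.
    Framing and protocol overhead beyond 8b/10b are ignored.
    """
    data_bits_per_s = line_rate_gbps * 1e9 * 8 / 10
    return data_bits_per_s / 8 / 1e6


def per_device_share_mb_s(bus_mb_s: float, active_devices: int) -> float:
    """Best-case bandwidth per device when all devices share one bus evenly."""
    return bus_mb_s / active_devices


for gbps in (3, 6):
    print(f"SAS {gbps} Gb/s: {sas_net_throughput_mb_s(gbps):.0f} MB/s per port")

# Parallel SCSI at 320 MB/s is shared by every device on the bus,
# while each SAS device gets its own point-to-point link.
for n in (1, 4, 8):
    print(
        f"{n} drive(s) streaming: shared 320 MB/s bus <= "
        f"{per_device_share_mb_s(320, n):.0f} MB/s each, "
        f"SAS 3 Gb/s <= {sas_net_throughput_mb_s(3):.0f} MB/s each"
    )
```

With four drives streaming at once, the shared bus caps each drive at 80 MB/s in this idealized model, while every 3 Gb/s SAS port still offers up to 300 MB/s.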

SAS controllers (also known as initiators) use SSP, the Serial SCSI Protocol, to talk to end devices (known as targets). The Serial ATA Tunneled Protocol (STP) also lets them use Serial ATA drives, and the Serial Management Protocol (SMP) is used to manage expanders. SAS uses both fanout expanders and edge expanders, which can be compared to switches in the networking world. One SAS controller can work with up to two edge expanders, which in turn can run up to 128 drives. Fanout expanders allow even more edge expanders to be connected.
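To make the division of labor concrete, here is a hypothetical Python sketch of how an initiator could map each kind of device to its protocol and sanity-check a domain against the limits quoted above (two edge expanders, 128 drives, no fanout expander). `DeviceKind`, `PROTOCOL_FOR`, and `plan_domain` are illustrative names, not part of any controller API, and the model covers only the rules described in the text, not how real controller firmware performs discovery.

```python
from enum import Enum


class DeviceKind(Enum):
    """Hypothetical device categories an initiator may find in a SAS domain."""
    SAS_DRIVE = "SAS drive"
    SATA_DRIVE = "SATA drive"
    EXPANDER = "expander"


# Transport protocol the initiator (the controller) uses for each kind of device.
PROTOCOL_FOR = {
    DeviceKind.SAS_DRIVE: "SSP",   # SCSI commands to SAS targets
    DeviceKind.SATA_DRIVE: "STP",  # ATA commands tunneled to SATA drives
    DeviceKind.EXPANDER: "SMP",    # management of expanders
}

# Limits as stated in the text, for a domain without a fanout expander.
MAX_EDGE_EXPANDERS = 2
MAX_DRIVES = 128


def plan_domain(devices: list[DeviceKind]) -> dict[str, int]:
    """Count how many devices the initiator reaches over each protocol.

    Raises ValueError if the layout exceeds the edge-expander limits above.
    """
    expanders = devices.count(DeviceKind.EXPANDER)
    drives = len(devices) - expanders
    if expanders > MAX_EDGE_EXPANDERS:
        raise ValueError("more than two edge expanders needs a fanout expander")
    if drives > MAX_DRIVES:
        raise ValueError("more than 128 drives needs a fanout expander")
    counts: dict[str, int] = {}
    for dev in devices:
        proto = PROTOCOL_FOR[dev]
        counts[proto] = counts.get(proto, 0) + 1
    return counts


# One edge expander with eight SAS and eight SATA drives behind it.
mixed = [DeviceKind.EXPANDER] + [DeviceKind.SAS_DRIVE] * 8 + [DeviceKind.SATA_DRIVE] * 8
print(plan_domain(mixed))  # {'SMP': 1, 'SSP': 8, 'STP': 8}
```

The mixed list shows the point made in the text: SAS and SATA drives coexist in one domain, and the controller simply speaks a different protocol to each.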

The beauty of SAS is that it is extremely flexible and scalable. A single SAS domain can mix SAS and SATA drives in a variety of configurations to meet different performance and capacity requirements. The cards we looked at all cost around $1,000 and provide excellent features and performance for enterprise storage solutions.
