New PCIe adapters turn your x16 slot into a clown car of GPU and SSD connectivity

High-bandwidth connectivity for enterprise and enthusiast platforms alike

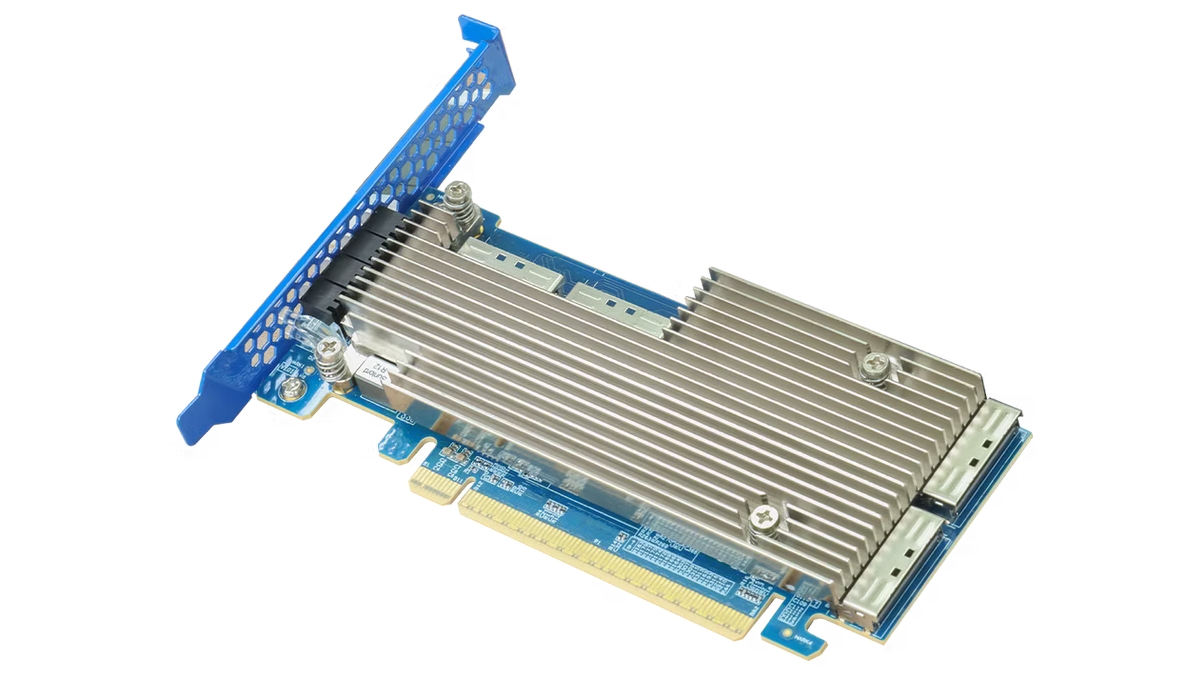

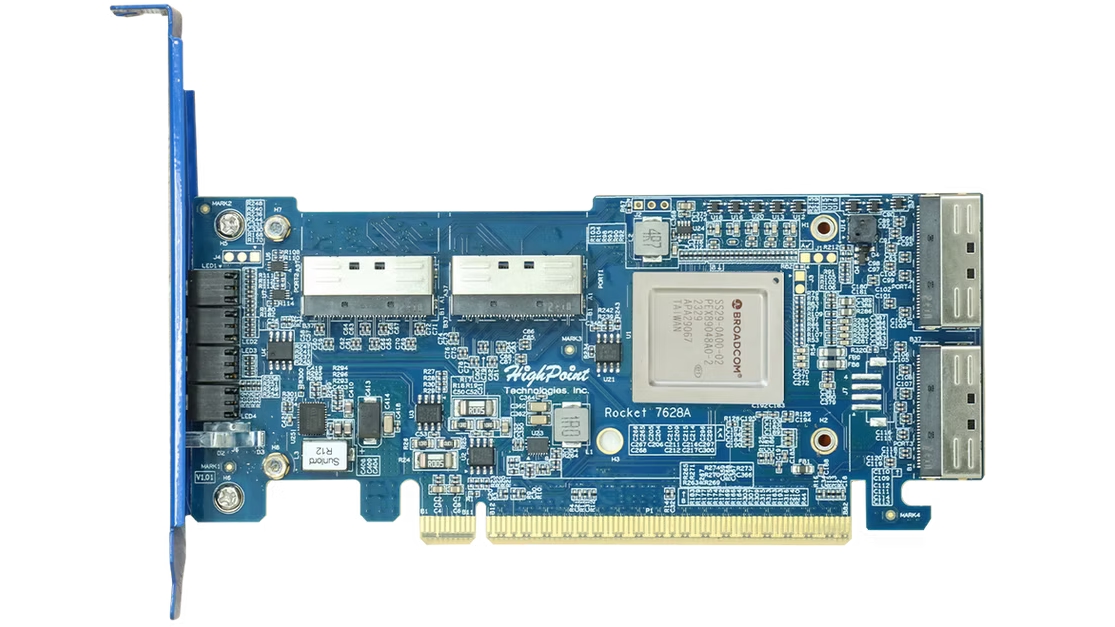

HighPoint Technologies has announced a new lineup of PCIe Gen 5 and Gen 4 x16 adapters featuring MCIO (Mini Cool Edge IO) and SlimSAS (Serial Attached SCSI) connectors, aimed at expanding the I/O capabilities of modern workstations and servers. These adapters improve GPU scalability and unlock high-bandwidth NVMe storage configurations on platforms where PCIe lanes are limited or require efficient redistribution.

The newly launched adapter series includes the PCIe Gen 5 x16 Rocket 1628A and PCIe Gen 4 x16 Rocket 1528D, each providing up to four MCIO x4 or SlimSAS x4 channels. This makes it possible to split a single PCIe x16 slot into multiple high-speed connections, which is ideal for connecting multiple NVMe SSDs, GPUs, or other devices without needing a separate slot for each one.
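A quick way to see how four x4 channels relate to one x16 slot is to work out the theoretical bandwidth of each link. The sketch below is a minimal Python illustration. It assumes the standard per-lane signaling rates (2.5, 5, 8, 16 and 32 GT/s for Gen 1 through Gen 5), 8b/10b encoding for Gen 1 and 2, and 128b/130b from Gen 3 on. It ignores packet overhead, so real-world throughput will be somewhat lower. `PCIE_GENS` and `link_bandwidth_gbs` are hypothetical names chosen for this example.

```python
# Sketch: theoretical one-direction PCIe bandwidth after line encoding.
# Assumption: protocol (TLP/DLLP) overhead is ignored, so real throughput is lower.

# generation -> (transfer rate in GT/s per lane, encoding efficiency)
PCIE_GENS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def link_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Return the theoretical one-direction bandwidth of a PCIe link in GB/s."""
    rate_gts, efficiency = PCIE_GENS[gen]
    # One bit per lane per transfer; divide by 8 to convert bits to bytes.
    return rate_gts * efficiency * lanes / 8


if __name__ == "__main__":
    full_slot = link_bandwidth_gbs(5, 16)
    one_port = link_bandwidth_gbs(5, 4)
    print(f"Gen 5 x16 slot: {full_slot:.1f} GB/s")
    print(f"Gen 5 x4 port:  {one_port:.1f} GB/s")
    print(f"Four x4 ports:  {4 * one_port:.1f} GB/s")
```

Splitting the slot does not add bandwidth. Four Gen 5 x4 ports of roughly 15.8 GB/s each add up to the same roughly 63 GB/s as the x16 slot they come from.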

HighPoint’s PCIe Gen 5 adapters, in particular, seem to be a compelling option for AI workloads, GPU-heavy setups, and enterprise-grade storage. MCIO, a fairly new connector standard designed for high-density PCIe connectivity, makes these adapters a forward-looking choice for data centers and high-performance desktop environments.

Each adapter is said to be designed with minimal signal loss in mind, using advanced PCB layouts and high-quality components to maintain signal integrity at Gen 5 speeds. The Gen 4 SlimSAS variants target platforms that haven’t yet moved to Gen 5 but still need high-throughput options for storage expansion.

HighPoint is positioning these cards as cost-effective and flexible, particularly for system builders and prosumers looking to maximize the utility of existing PCIe slots. While primarily aimed at OEMs and enterprise users, enthusiasts working with compact systems or unconventional GPU/storage layouts may also benefit from these adapters' modularity.

Last year, HighPoint made headlines by launching its SSD7540 RAID card, a PCIe Gen 4 x16 adapter capable of delivering transfer speeds up to 56 GB/s. That card featured eight M.2 NVMe slots and targeted high-performance storage arrays for data-intensive workloads. The new MCIO and SlimSAS adapters build on this legacy by offering even more flexible connectivity options for modern systems, especially as Gen 5 adoption accelerates.

Though pricing hasn't been announced, the new MCIO and SlimSAS x16 adapters will be available through HighPoint’s distribution channels soon. As more Gen 5-capable motherboards and processors enter the market, these innovative adapter solutions will become increasingly relevant for balancing bandwidth-hungry components in dense systems.

Kunal Khullar is a contributing writer at Tom’s Hardware. He is a long time technology journalist and reviewer specializing in PC components and peripherals, and welcomes any and every question around building a PC.

-

chaz_music It should be noted that the 1528D is a PCIe switch that does not require your motherboard to support bifurcation to function, which is quite handy. This is a switch and not a bifurcation card. I did not check the other card for the specs.

The 1528D breaks out into four 8-lane ports. I found it for $699 on Amazon, so you would really have to need it at that price. Just be aware that the bandwidth has to be shared among all of the ports, so in a four-card configuration each card gets 1/4 of the PCIe throughput when all four are busy at once.

I might look for a PCIe 3.0 version for a TrueNAS system. It shows up in their product list, but I could not find any product info on their website. Or just bite the bullet and get a 24-port IT-mode HBA. -
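chaz_music's point about shared bandwidth can be quantified by comparing the switch's upstream link with the sum of its downstream ports. The sketch below assumes the layout described in that comment (a PCIe 4.0 x16 uplink feeding four PCIe 4.0 x8 ports), which may not match HighPoint's own spec sheet, and it ignores protocol overhead. `bandwidth`, `oversubscription`, and `saturated_share` are illustrative helpers, not part of any HighPoint tool.

```python
# Sketch: static oversubscription of a PCIe switch uplink.
# Assumption: the example layout (Gen 4 x16 uplink, four Gen 4 x8 ports)
# follows the comment above, not a HighPoint specification.

# Theoretical one-direction GB/s per lane after 128b/130b encoding (Gen 3+).
PER_LANE_GBS = {gen: rate * 128 / 130 / 8 for gen, rate in {3: 8.0, 4: 16.0, 5: 32.0}.items()}

Link = tuple[int, int]  # (PCIe generation, lane count)


def bandwidth(link: Link) -> float:
    """Theoretical one-direction bandwidth of a link in GB/s."""
    gen, lanes = link
    return PER_LANE_GBS[gen] * lanes


def oversubscription(uplink: Link, ports: list[Link]) -> float:
    """Ratio of total downstream bandwidth to uplink bandwidth (1.0 = none)."""
    return sum(bandwidth(p) for p in ports) / bandwidth(uplink)


def saturated_share(uplink: Link, ports: list[Link]) -> float:
    """Per-port bandwidth if every port is busy and the uplink is split evenly.

    Only meaningful when each port's own link can carry at least this much.
    """
    return bandwidth(uplink) / len(ports)


if __name__ == "__main__":
    uplink = (4, 16)      # PCIe 4.0 x16 slot
    ports = [(4, 8)] * 4  # four PCIe 4.0 x8 downstream ports
    print(f"Uplink:           {bandwidth(uplink):.1f} GB/s")
    print(f"Oversubscription: {oversubscription(uplink, ports):.1f}:1")
    print(f"All four busy:    {saturated_share(uplink, ports):.2f} GB/s per port")
```

The even 1/4 split is the worst case, when all four ports are saturated at the same time. With only two ports busy, both can in theory run at full x8 speed, because together they exactly fill the x16 uplink.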

tamalero (replying to chaz_music) I wonder if there are switches capable of turning a PCIe 5.0 x16 slot into two PCIe 4.0 x16 slots or similar. -

Li Ken-un This is news? I’ve had this product since last year. I run it in a PCIe 5.0 ×8 slot, using the HighPoint adapter to get 32 PCIe lanes to my PCIe 3.0 and PCIe 4.0 U.2 SSDs. -

Nikolay Mihaylov (replying to tamalero) I've thought about something like that for a long time. It could almost turn an AM5 platform into an HEDT one. I actually imagine it as PCIe 5.0 x16 -> 3x PCIe 4.0 x16 directly on the motherboard. Then you could have three double-spaced x16 slots. You are unlikely to hit the maximum I/O on all three cards simultaneously. Plus, not all of the cards need to be GPUs; you might have storage or NIC cards.

Anyway, just daydreaming. -

Dav_Daddy How exactly are you supposed to run multiple GPUs with this thing? I even went to their website to look for a configuration that made sense for that application.

Not that I can remotely afford to buy something like this, I'm more curious than anything else. -

ravewulf I have a QNAP QM2-2P-384A, which uses a PCIe switch to go from PCIe 3.0 x8 to two PCIe 3.0 x4 NVMe M.2 slots. The neat thing is that I have it plugged into an old AM3+ board that only has PCIe 2.0, but internally the switch still runs at PCIe 3.0 speeds for the NVMe drives, with each drive seeing PCIe 3.0 x4 (verified with CrystalDiskInfo). Only the connection between the PCIe switch and the motherboard is limited to PCIe 2.0 speeds, and that bandwidth is distributed based on need at any given moment instead of an even 50/50 split or reducing lanes. So if I'm only accessing one of the drives at a time, that drive runs at its full PCIe 3.0 x4 bandwidth, and the PCIe switch actively converts it to PCIe 2.0 x8 for the motherboard with no loss in speed. -
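ravewulf's observation, that the uplink is shared according to demand rather than split 50/50, can be approximated with a max-min fair allocation: each busy port gets what it asks for unless that exceeds an even share of whatever bandwidth is left. This is a simplified model chosen for illustration, not a description of how the QNAP card's switch actually arbitrates traffic. Each busy drive's demand is assumed to equal its own link speed, and `link_gbs` and `max_min_share` are hypothetical helpers.

```python
# Sketch: demand-based sharing of a PCIe switch uplink, modelled as max-min
# fair allocation ("water-filling"). Simplifying assumption: real switches
# arbitrate packet by packet with flow-control credits, not with a solver.


def link_gbs(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s (only Gen 2 and Gen 3 here)."""
    rate_gts = {2: 5.0, 3: 8.0}[gen]
    efficiency = 8 / 10 if gen == 2 else 128 / 130  # 8b/10b vs 128b/130b encoding
    return rate_gts * efficiency * lanes / 8


def max_min_share(uplink: float, demands: dict[str, float]) -> dict[str, float]:
    """Split `uplink` GB/s among busy ports; no port gets more than it asks for."""
    alloc: dict[str, float] = {}
    remaining = uplink
    # Serve the smallest demand first so bandwidth it leaves unused goes to the others.
    pending = sorted(demands.items(), key=lambda item: item[1])
    while pending:
        fair = remaining / len(pending)
        port, want = pending[0]
        if want <= fair:
            alloc[port] = want
            remaining -= want
            pending.pop(0)
        else:
            # Every remaining demand is at least this large, so split evenly.
            for other, _ in pending:
                alloc[other] = fair
            break
    return alloc


if __name__ == "__main__":
    uplink = link_gbs(2, 8)  # PCIe 2.0 x8 slot on the old AM3+ board
    drive = link_gbs(3, 4)   # each M.2 drive's PCIe 3.0 x4 link behind the switch
    print(max_min_share(uplink, {"drive_a": drive}))
    print(max_min_share(uplink, {"drive_a": drive, "drive_b": drive}))
```

With one drive busy, the model grants it its full rate of about 3.94 GB/s, which fits inside the roughly 4 GB/s PCIe 2.0 x8 uplink, consistent with the "no loss in speed" observation. With both drives busy, each drops to 2 GB/s.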

tamalero (replying to Nikolay Mihaylov) This, or just split it for storage. There are lots of options for splitting that PCIe 5.0 slot into many SSDs or standard disks. -

8086 Devices like this SSD HBA are why I wish board makers would give us fewer M.2 slots and more PCIe x16 slots for real upgrades such as this.