Intel Plays Defense: Inside Its EPYC Slide Deck

Intel Responds

Intel, like many other vendors, holds press workshops before key product releases. The company invited several publications and analysts, Tom's Hardware among them, to its Jones Farm campus in Hillsboro, Oregon for two days of marathon briefings. These included 15 sessions, 10 slide decks, and 365 slides outlining nearly every detail of its new Xeon Scalable Processor family.

It was an almost overwhelming amount of information to sift through. Still, in our quest to cram as much relevant detail as possible into our coverage, we considered all of it much-needed.

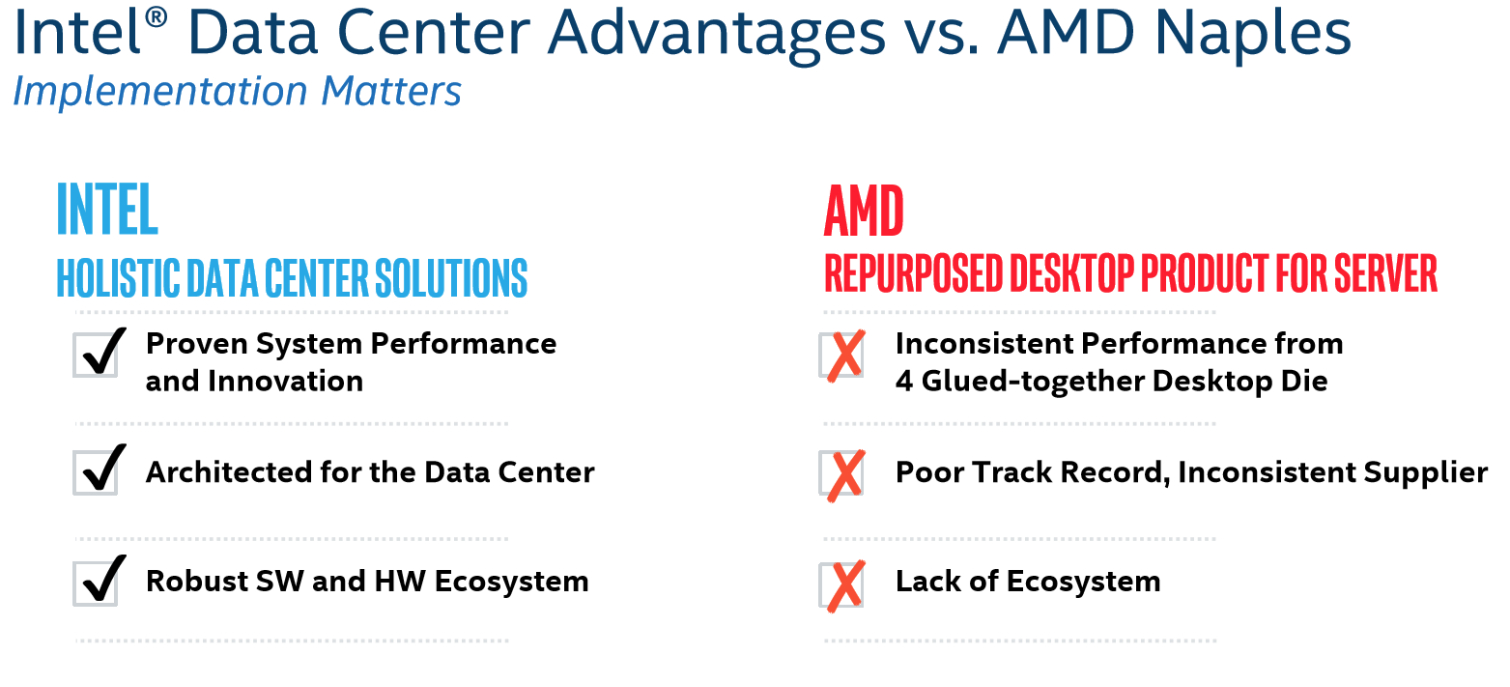

One presentation stuck out more than the rest. Intel presented a deck that outlined what it considers to be its advantages against AMD’s EPYC CPUs. The slides generated a lot of controversy over the last week, but they haven't been presented in context. We’re going to fix that. But first, some background:

Competition Heats Up

AMD was last competitive in the server space around five years ago, which allowed Intel to gobble up ~99.6% of the market. EPYC has the potential to change this by virtue of its strong performance, scalability, aggressive pricing, and less confusing segmentation than Intel's Xeon line-up.

Most analysts surmise that AMD’s latest and greatest poses little short-term threat to Intel’s data center dominance. The conservative enterprise is notoriously slow to adopt unproven designs, and that means the safe money is still on Xeon. It will take time for AMD to reclaim more than a single-digit share of the server space. The company knows this.

Aside from market share, AMD poses a larger threat to Intel’s margins, which can exceed 60%. By strategically snipping features from various models in the Xeon portfolio, Intel is able to maximize the profit it earns across its product stack. Core count, clock rates, memory handling, compute functionality, threading, scalability, and manageability are all used to create unique SKUs with price points to match the features that get turned on.

Intel’s MSRPs are largely irrelevant to its largest customers, some of which are commonly referred to as the Super Seven+1: Google, Facebook, Amazon, Microsoft, Baidu, Alibaba, Tencent, and AT&T. These companies purchase CPUs in high volume and often have access to new processors months in advance of the official launches. They also don’t pay Intel’s official prices. The same goes for other large customers, such as Dell/EMC and other OEMs.

Truth be told, it’s hard to negotiate with a company that essentially controls the world's data centers. Companies commonly hammer out press releases claiming they're rolling out alternative platforms, such as those powered by ARM processors. But many of these are ultimately regarded as a tactic to remind Intel there are other options. After all, while it is possible to switch to ARM, that architecture can't run x86 software without some sort of emulation, which presents significant technical challenges.

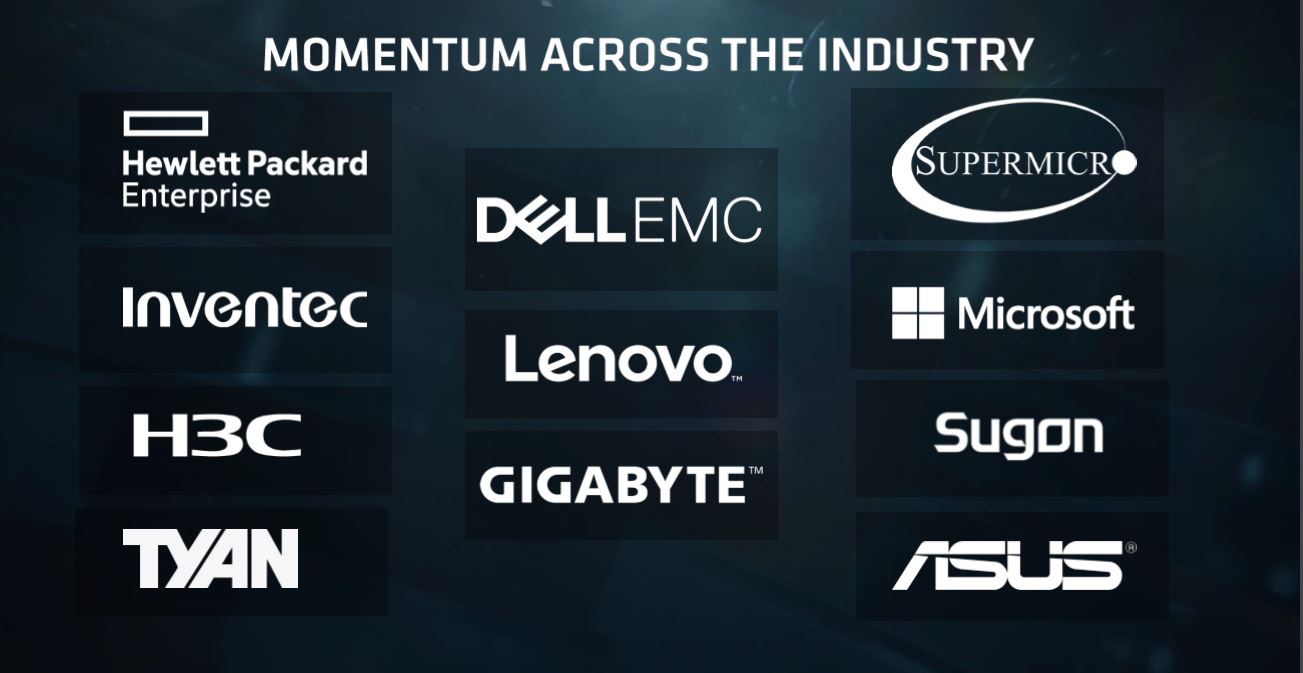

EPYC changes the game. During AMD's launch event, representatives from several major companies took to the stage and expressed support for the platform. Baidu, Microsoft, Supermicro, Dell, Xilinx, HPE, Dropbox, Samsung, and Mellanox were all there. Notice the Super Seven+1 members? Surely there are other high-profile names being courted behind the scenes, so we expect more partner announcements in the future. We can't overstate the importance of OEMs like Dell and HPE, but Sugon also clears the path to the burgeoning ODM market. Xilinx and Mellanox are key partners that might help offset Intel's goals with Purley's integrated networking and FPGA features, and the Azure tie-up portends penetration into cloud-based deployments.

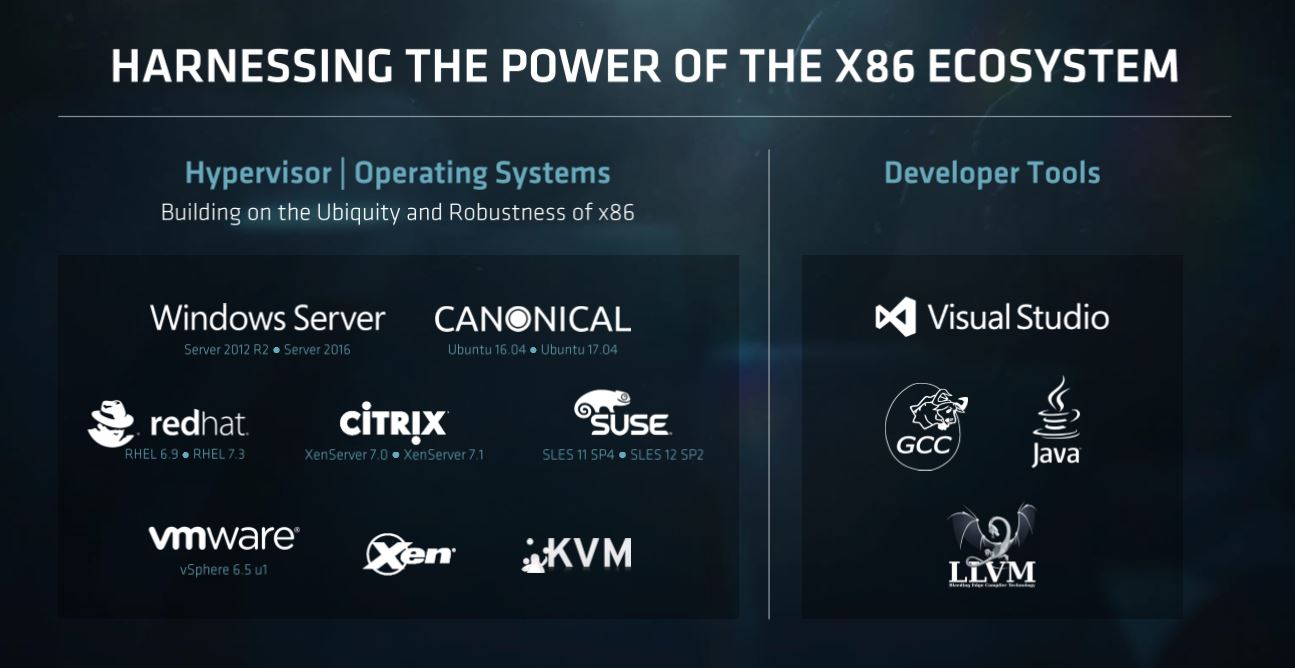

We also see that AMD specifically calls out "harnessing the power of the x86 ecosystem." To that effect, the company lined up a strong roster of hypervisor/operating system and developer tools partners. VMware, Microsoft's server division, and Red Hat also took to the stage at AMD's event.

EPYC also does away with some of Intel's segmentation practices. AMD only manipulates core count, clock rates, and multi-socket support to break up its portfolio. That means customers still get simultaneous multi-threading, along with all of the architecture's PCIe lanes and unaltered memory capacity/speed support, even from the least-expensive models. In short, EPYC offers more connectivity across the board and simpler (purportedly cheaper) motherboards. Instead of "buying up" with Intel for one crucial feature, there are now less expensive alternatives, some of which revolve around AMD's single-socket server strategy.

These CPUs are a threat to Intel's margins because they give Xeon customers another option. Consequently, Intel might have to get more price-competitive in key portions of its product stack, especially with high-volume customers. That means EPYC could affect Intel's bottom line, even if it doesn't gain significant market share.

There is little doubt that AMD's EPYC will find some measure of success in the data center, and Intel wants to get ahead of any potential adoption. Like most companies, Intel does its own research to gauge the positioning of competitors. Typically, though, the press isn't privy to such defensive documentation. But one of the slide decks we saw at Intel's recent press workshop outlined what Intel feels are the strengths of Xeon compared to the weaknesses of AMD's EPYC. This presentation is generating quite a bit of criticism online. So let's see what Intel had to say...

Paul Alcorn is the Managing Editor: News and Emerging Tech for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

Aspiring techie

This is something I'd expect from some run-of-the-mill company, not Chipzilla. Shame on you Intel.

bloodroses

Just like every political race, here comes the mudslinging. It very well could be true that Intel's Data Center is better than AMD's Naples, but there's no fact from what this article shows. Instead of trying to use buzzwords only like shown in the image, back it up. Until then, it sounds like AMD actually is onto something and Intel actually is scared. If AMD is onto something, then try to innovate to compete instead of just slamming.

redgarl

LOL... seriously... track record...? Track record of what? Track record of ripping off your customers Intel?

Phhh, your platform is getting trash in floating point calculation... 50%. And thanks for the thermal paste on your high end chips... no thermal problems involved.

InvalidError

19950405 said: All I see is cheap-shots, kind of low for Intel.

To be fair, many of those "cheap shots" were fired before AMD announced or clarified the features Intel pointed fingers at.

That said, the number of features EPYC mysteriously gained over Ryzen and ThreadRipper show how much extra stuff got packed into the Zeppelin die. That explains why the CCXs only account for ~2/3 of the die size.

redgarl

To Intel, PCIe Lanes are important in today technology push... why?... because of discrete GPUs... something you don't do. AMD knows it, they knows that multi-GPU is the goal for AI, crypto and Neural Network. This is what happening when you don't expend your horizon.

It's taking us back to the old A64.

-Fran-

It's funny...

- They quote WTFBBQTech.

- Use the word "desktop die" all over the place without batting an eye on their own "extreme" platform being handicapped Xeons.

- No word on security features. I guess omission is also a "pass" in this case.

This reads more like a scare threat to all their customers out there instead of trying to sell a product. Miss Lisa Su is doing a good job it seems.

Cheers!

InvalidError

19950713 said: - Use the word "desktop die" all over the place without batting an eye on their own "extreme" platform being handicapped Xeons.

The extra server-centric stuff (crypto superviser, the ability for PCIe lane to also handle SATA and die-to-die interconnect, the 16 extra PCIe lanes per die, etc.) in Zeppelin didn't magically appear when AMD put EPYC together... so technically, Ryzen chips are crippled EPYC/ThreadRipper dies.

-Fran-

19950773 said: The extra server-centric stuff (crypto superviser, the ability for PCIe lane to also handle SATA and die-to-die interconnect, the 16 extra PCIe lanes per die, etc.) in Zeppelin didn't magically appear when AMD put EPYC together... so technically, Ryzen chips are crippled EPYC/ThreadRipper dies.

I don't know if you're agreeing or not... LOL.

Cheers!