Samsung Goes 6 Gb/s: Is The 830-Series SSD King Of The Hill?

Samsung's 830 is a late entry to the 6 Gb/s SSD market, but the company claims impressive performance. Can it unseat the incumbent SandForce-based drives? Let's just say this new offering shakes up the SSD world in a major way. Other vendors, beware.

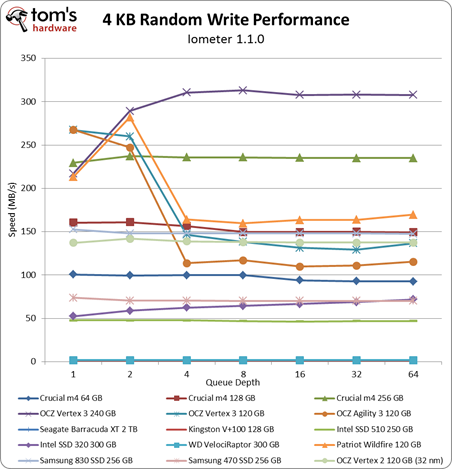

Benchmark Results: 4 KB Random Performance (Throughput)

Our Storage Bench v1.0 mixes random and sequential operations. However, it's still important to isolate 4 KB random performance because that's a large portion of what the drive sees on a day-to-day basis. Right after Storage Bench v1.0, we subject the drives to Iometer, testing random 4 KB performance. But why specifically 4 KB?

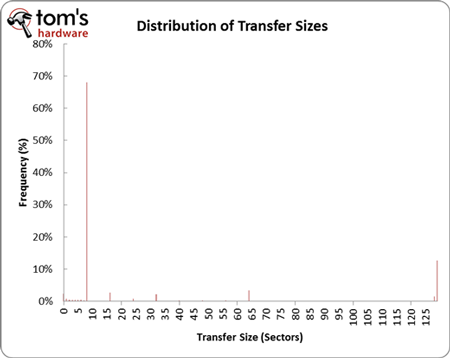

When you open Firefox, browse multiple webpages, and write a few documents, you're mostly performing small random read and write operations. The chart above comes from analyzing Storage Bench v1.0, but it epitomizes what you'll see when you analyze any trace from a desktop computer. Notice that close to 70% of all of our accesses are eight sectors in size (512 bytes per sector, thus 4 KB).

We're restricting Iometer to test an LBA space of 16 GB because a fresh install of a 64-bit version of Windows 7 takes up nearly that amount of space. In a way, this examines the performance that you would see from accessing various scattered file dependencies, caches, and temporary files.
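To put that 16 GB test span into the units a benchmark tool works with, here is a minimal sketch of the arithmetic. The text doesn't say whether "16 GB" is decimal (10^9 bytes) or binary (2^30 bytes), so both readings are shown; the helper names are ours, for illustration only, and the 512-byte sector size is taken from the discussion above.

```python
# Sketch: translate a test span into 512-byte sectors and 4 KB blocks.
# Both the decimal and binary readings of "16 GB" are assumptions.

SECTOR_BYTES = 512    # sector size quoted in the article
BLOCK_BYTES = 4096    # 4 KB transfer = eight sectors


def span_in_sectors(span_bytes: int) -> int:
    """Number of whole 512-byte sectors in the span."""
    return span_bytes // SECTOR_BYTES


def blocks_in_span(span_bytes: int) -> int:
    """Number of distinct aligned 4 KB blocks in the span."""
    return span_bytes // BLOCK_BYTES


DECIMAL_16GB = 16 * 10**9   # 16 GB as storage vendors count it
BINARY_16GIB = 16 * 2**30   # 16 GiB as Windows reports it

if __name__ == "__main__":
    for label, span in (("16 GB", DECIMAL_16GB), ("16 GiB", BINARY_16GIB)):
        print(f"{label}: {span_in_sectors(span):,} sectors, "
              f"{blocks_in_span(span):,} 4 KB blocks")
```

Either way, the random accesses are spread over roughly four million possible 4 KB locations, which is what makes the workload "scattered."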

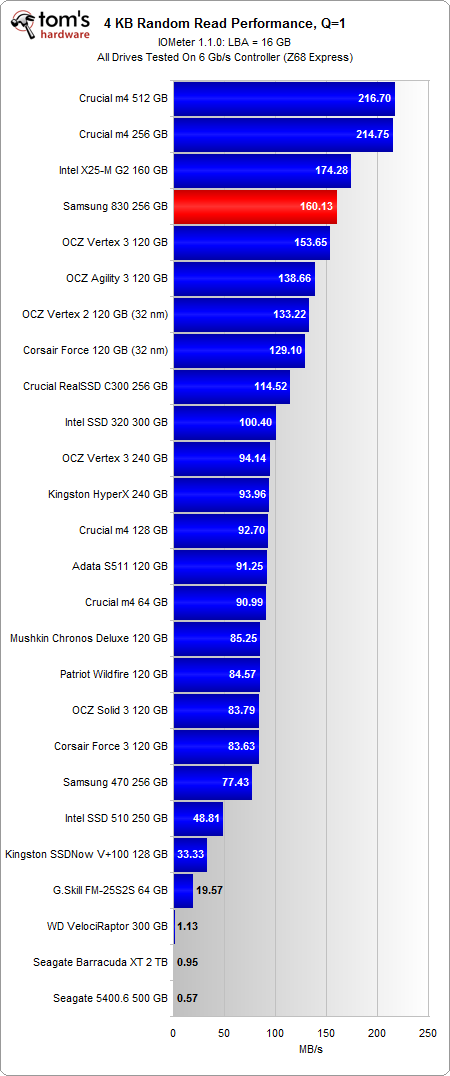

If you're a typical PC user, it's important to examine performance at a queue depth of one, because this is where the majority of your accesses are going to fall on a machine that isn't being hammered by I/O commands.

Before we get to the numbers, note that we're presenting random performance in MB/s instead of IOPS. There is a direct relationship between these two units, as average transfer size * IOPS = MB/s. Most workloads tend to be a mixture of different transfer sizes, which is why the ninjas in IT prefer IOPS. It reflects the number of transactions that occur per second. Since we're only testing with a single transfer size, it's more relevant to look at MB/s (it's also more intuitive for "the rest of us"). If you want to convert back to IOPS, just take the MB/s figure and divide by 0.004096 MB (remember your units: 4,096 bytes expressed in decimal megabytes) for the 4 KB transfer size.
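If you'd rather not do that division by hand, the conversion can be sketched in a few lines of Python. This is only an illustration of the formula above; the function names are ours, and it assumes decimal megabytes (1 MB = 1,000,000 bytes) and a fixed 4 KB transfer made up of eight 512-byte sectors.

```python
# Sketch: convert between MB/s and IOPS for a single, fixed transfer size.
# Assumes decimal megabytes (1 MB = 1,000,000 bytes).

SECTOR_BYTES = 512
SECTORS_PER_TRANSFER = 8
TRANSFER_BYTES = SECTOR_BYTES * SECTORS_PER_TRANSFER  # 4,096 bytes
BYTES_PER_MB = 1_000_000


def mbps_to_iops(mb_per_s: float, transfer_bytes: int = TRANSFER_BYTES) -> float:
    """IOPS = (MB/s) / (transfer size in MB)."""
    return mb_per_s * BYTES_PER_MB / transfer_bytes


def iops_to_mbps(iops: float, transfer_bytes: int = TRANSFER_BYTES) -> float:
    """MB/s = IOPS * (transfer size in MB)."""
    return iops * transfer_bytes / BYTES_PER_MB


if __name__ == "__main__":
    # 160 MB/s of 4 KB transfers works out to 39,062.5 IOPS.
    print(mbps_to_iops(160))
```

The same two functions work for other block sizes by passing a different `transfer_bytes`, but the result is only meaningful when every transfer in the test is that size.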

At a queue depth of one, Samsung's 830 starts to show its principal weakness. In random reads, the 256 GB 830-series drive pushes slightly more than 160 MB/s. The closest contender is OCZ's 120 GB Vertex 3, but it falls behind by less than 4.5% with a random read rate of 154 MB/s. Crucial's 256 GB and 512 GB m4s still reign supreme with speeds slightly above 210 MB/s.

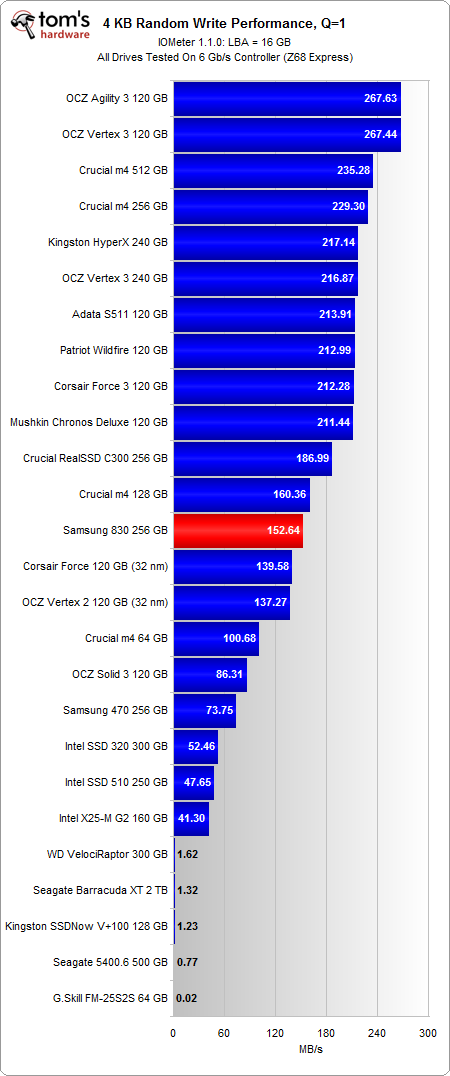

The 256 GB 830 drops even further down the chart in random write performance with a rather uninspiring result of 152 MB/s. This puts the 256 GB 830 very close to 120 GB SSDs employing first-gen SandForce hardware. Even Crucial's 128 GB m4 has a 5% lead on the 256 GB 830. Second-gen SandForce drives all deliver 40-50% greater performance in this discipline.


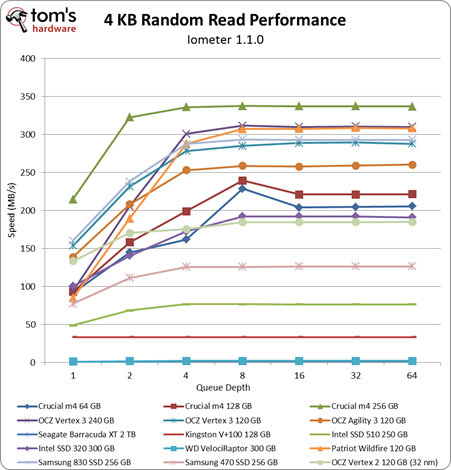

We've removed a few entries from our line graphs to make them more readable. Specifically, we pulled out some of the SandForce-based drives because many share the same performance profile. The key differentiator for SF-based SSD vendors is memory: ONFi 2.0 versus ONFi 1.0 versus Toggle-mode DDR. We're holding onto three SSDs to represent each unique class of SandForce drive:

- OCZ Vertex 3: second-gen SandForce-based SSD using synchronous ONFi 2.0 NAND.

- OCZ Agility 3: second-gen SandForce-based SSD using asynchronous ONFi 1.0 NAND.

- Patriot Wildfire: second-gen SandForce-based SSD using first-gen Toggle-mode DDR NAND.

When we start looking at performance across queue depths, we continue to see Samsung's 256 GB 830 shadow the 120 GB Vertex 3 in random reads. At queue depths lower than four, the 256 GB 830 outpaces the 240 GB Vertex 3 (ONFi 2.0) and the 120 GB Wildfire (Toggle-mode 1.0). However, at queue depths above four, the 240 GB Vertex 3 and 120 GB Wildfire edge ahead of the 256 GB 830 by roughly 10%.

In random writes, the 256 GB 830 is a middle-of-the-road performer, presenting speeds similar to 120 GB first-gen SandForce SSDs across all queue depths. Crucial's 256 GB m4 delivers almost 60% more performance, while OCZ's Vertex 3 offers speeds double that of the 256 GB 830. The 240 GB Vertex 3 actually manages to push past 300 MB/s once we hit queue depths above four.


pbrigido With all of these fast SSDs coming to market, I can only hope that the competition starts to drive down prices soon.

I still opt for the M4 in all the enthusiast builds I do!

It boils down to reliability: not one hiccup on the M4 yet (or any Crucial drive I've installed). 4/5 SandForce drives I have installed have had some form of callback problem to resolve once deployed, mostly requiring firmware updates, but a few failed drives as well!

Mind you, still better than the early Corsair Force Series I used, every single one failed! Stopped using them quick!

Am tempted by OCZ, once they have reliability on their side I will give them a go again!


mark_hamill Would love to see an article addressing SandForce controller problems people have been experiencing.

JamesSneed Looks like a really nice SSD. Samsung has one of the best validation processes along with Intel and Crucial so I really don't expect people to have issues like they do with OCZ drives. Now the real question: how much will it be on the egg?

I saw this quote below in the summary and laughed as nobody in their right mind would use a basic MLC drive in a database server. So Samsung tuned the drive for what it will be used in, desktops, good.

"Although we'd probably think twice before picking this as our first choice for a database server, it does just fine in an enthusiast's machine."

JohnnyLucky great review. now we just have to wait and see how the ssd will hold up over the long haul. If it is anything like the 470, then it should be problem free.

beenthere We'll see how this series of Samsung SSDs fares. The previous gen was a nightmare of problems so I don't think Samsung's validation process is any better than the rest of the SSD suppliers - which is sad when Samsung controls everything including NAND production. It's amazing that we still have SSDs NOT ready for Prime Time.

AppleBlowsDonkeyBalls (quoting beenthere): "We'll see how this series of Samsung SSDs fares. The previous gen was a nightmare of problems so I don't think Samsung's validation process is any better than the rest of the SSD suppliers - which is sad when Samsung controls everything including NAND production. It's amazing that we still have SSDs NOT ready for Prime Time."

Proof? I think you just pulled this out of your ass or from someone's that told you some story. The 470 series was VERY reliable.