Testing GPUs with AMD FSR3 and Avatar: Frontiers of Pandora — 16 graphics cards and hundreds of benchmarks

AMD's FSR3 finally feels ready for prime time.

Avatar: Frontiers of Pandora Overview

Avatar: Frontiers of Pandora launched earlier this month, and we've tested it to see how it runs. Except, it's not really about the game so much as it is the technology powering the graphics — specifically AMD's FSR 3 with Frame Generation. It's not the first game with FSR 3 support, but after some initial testing and some wonky results in Immortals of Aveum and Forspoken, I'm happy to say my experience was vastly improved with Avatar's implementation. We've grabbed a collection of sixteen of the best graphics cards and put them to the test in the game's built-in benchmark, including tests at native resolution, quality upscaling with FSR 3 and DLSS 2, and FSR 3 plus Frame Generation.

Something else that's somewhat interesting is Avatar's inclusion of an "Unobtanium" preset (unlocked by launching the game with "-unlockmaxsettings"), an ultra-demanding setting that can push even the fastest graphics cards to their limits and then some. But don't get too excited, because fundamentally the Unobtanium setting only looks slightly better than the Ultra preset, at least in my experience.

Avatar: Frontiers of Pandora Test Setup

AMD provided a code for Avatar: Frontiers of Pandora; it's an AMD-promoted game, just like Alan Wake 2 was an Nvidia-promoted game. It's also highly appropriate that the game deals with the Na'vi aliens, and some marketing guru at AMD must have felt it was a match made in heaven. Playing as a Na'vi on a Navi 31-based Radeon RX 7900 XTX? Excellent!

TOM'S HARDWARE TEST PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

Samsung Neo G8 32

GRAPHICS CARDS

Nvidia RTX 4090

Nvidia RTX 4080

Nvidia RTX 4070 Ti

Nvidia RTX 4070

Nvidia RTX 4060 Ti 16GB

Nvidia RTX 4060 Ti

Nvidia RTX 4060

Nvidia RTX 3080

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 7800 XT

AMD RX 7700 XT

AMD RX 7600

AMD RX 6800 XT

Intel Arc A770 16GB

Intel Arc A750

The game does include ray traced shadows and reflections, though it's not exactly clear when those are used over faster solutions like screen space reflections. Certainly, the RT effects aren't nearly as demanding or readily apparent as we've seen in other games that use the technology.

There are five presets (if you count Unobtanium), plus a large collection of 22 individual settings you can tweak as you see fit. Ultrawide monitors are supported, and the game itself is an open-world sandbox of sorts that feels a lot like other Ubisoft open-world games (Assassin's Creed, Far Cry, and Watch Dogs)... except with 15-foot tall blue alien cat-people.

For our testing, we've run the medium preset at 1080p, then the ultra preset at 1080p, 1440p, and 4K. We also tested the unobtanium preset on a handful of cards at 4K. For every GPU, we tested at native resolution, with FSR 3 or DLSS 2 quality mode upscaling, and with FSR 3 quality upscaling plus frame generation. That's twenty different settings and resolutions on cards like the RTX 4090, or fifteen on AMD's top GPUs like the RX 7900 XTX. We've used the built-in benchmark, capturing frametimes manually using FrameView.
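The run counts above follow directly from the test plan: five preset and resolution combinations, multiplied by four rendering modes on Nvidia cards (which also get DLSS 2) or three on AMD cards. A small Python sketch that enumerates the matrix makes the arithmetic explicit. The labels are our own shorthand, and it assumes a card runs every combination, which only applied to the fastest GPUs, since unobtanium was tested on just a handful of cards.

```python
from itertools import product

# The five preset/resolution combinations described in the text.
PRESET_RESOLUTIONS = [
    ("medium", "1080p"),
    ("ultra", "1080p"),
    ("ultra", "1440p"),
    ("ultra", "4K"),
    ("unobtanium", "4K"),
]

# Modes every card can run; DLSS 2 upscaling is only available on Nvidia RTX cards.
COMMON_MODES = ["native", "FSR 3 Quality", "FSR 3 Quality + frame generation"]
NVIDIA_ONLY_MODES = ["DLSS 2 Quality"]


def run_matrix(vendor: str) -> list[tuple[str, str, str]]:
    """Every (preset, resolution, mode) run for a top-end card from `vendor`."""
    modes = COMMON_MODES + (NVIDIA_ONLY_MODES if vendor == "nvidia" else [])
    return [(preset, res, mode) for (preset, res), mode in product(PRESET_RESOLUTIONS, modes)]


print(len(run_matrix("nvidia")))  # 20, e.g. the RTX 4090
print(len(run_matrix("amd")))     # 15, e.g. the RX 7900 XTX
```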

We used AMD's 23.12.1 drivers (Game Ready for Avatar), Intel 5074 drivers (also game ready — more recent 5122 came out after testing was done), and Nvidia 546.33 drivers (no mention of Avatar support for any of team green's recent drivers). Now, let's get to the benchmark results — image quality and additional discussion will be further down the page.

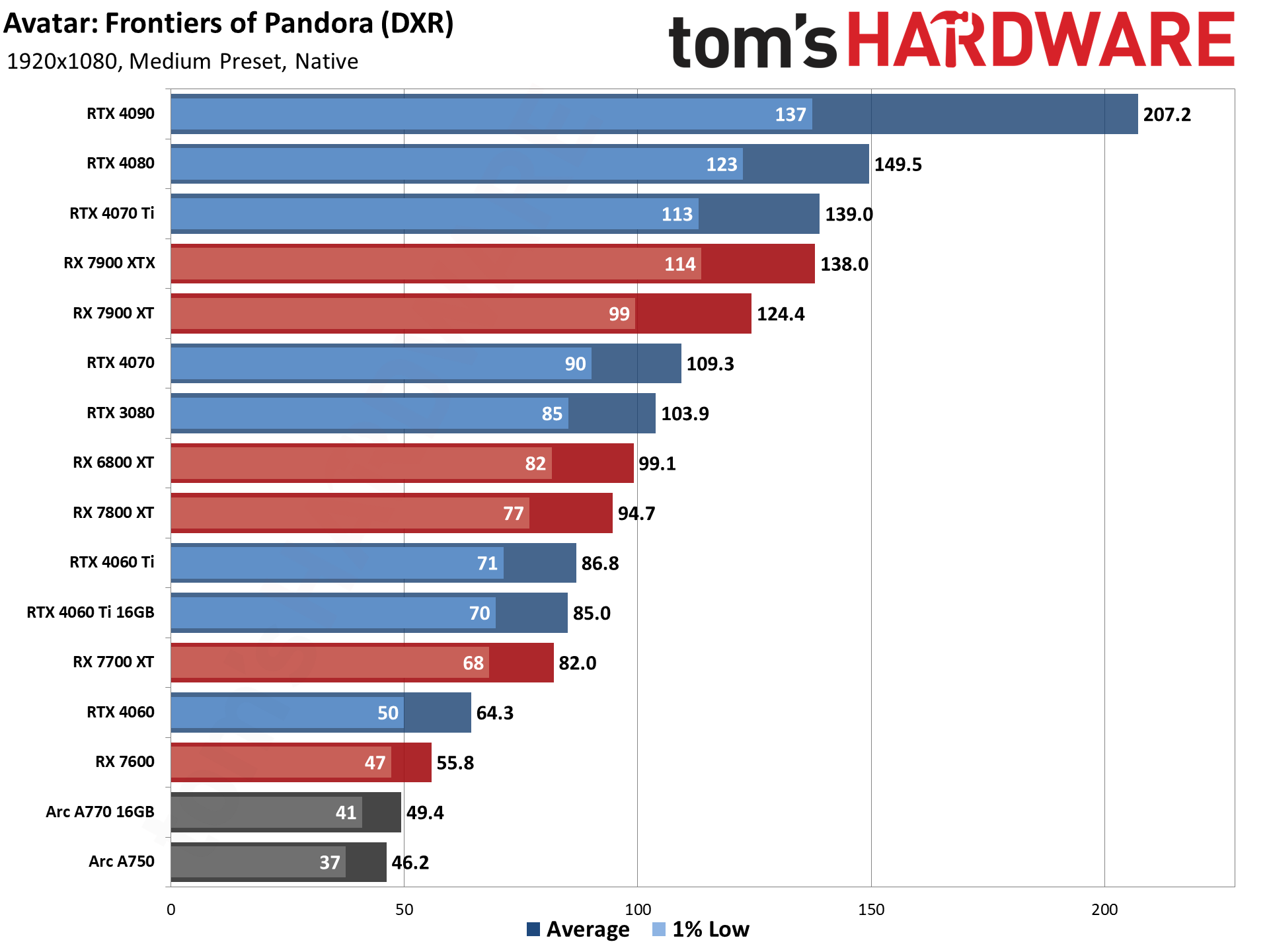

Avatar: Frontiers of Pandora 1080p GPU Performance

We'll start with the medium and ultra presets at 1080p. It's not entirely clear whether medium uses some ray tracing effects or not, but presumably the ultra preset at least uses RT for some of the shadows and reflections. Here are the native rendering results.

The RTX 4060 and above manage to clear 60 fps, though minimums fell below that mark on the 4060. AMD's RX 7600 comes up just a bit short of 60 fps, and the best Intel's Arc GPUs can manage is 45–50 fps.

It's also interesting to see the RTX 4070 Ti matching the normally faster RX 7900 XTX, with the RTX 4080 only slightly ahead of the pair. The RTX 4090, meanwhile, kicks things into high gear and breaks the 200 fps mark.

Previous-generation AMD and Nvidia GPUs also show some interesting behavior. The RTX 3080 just edges past the RX 6800 XT, indicating that even the medium preset probably uses a bit of ray tracing. In our GPU benchmarks hierarchy, the RX 6800 XT was 7% faster than the 3080 in rasterization games, while in RT games the tables turn and the 3080 was 55% faster. Also curious is that the RX 6800 XT came in slightly ahead of the normally faster RX 7800 XT. On the Nvidia side, the RTX 4070 was slightly faster than the RTX 3080.

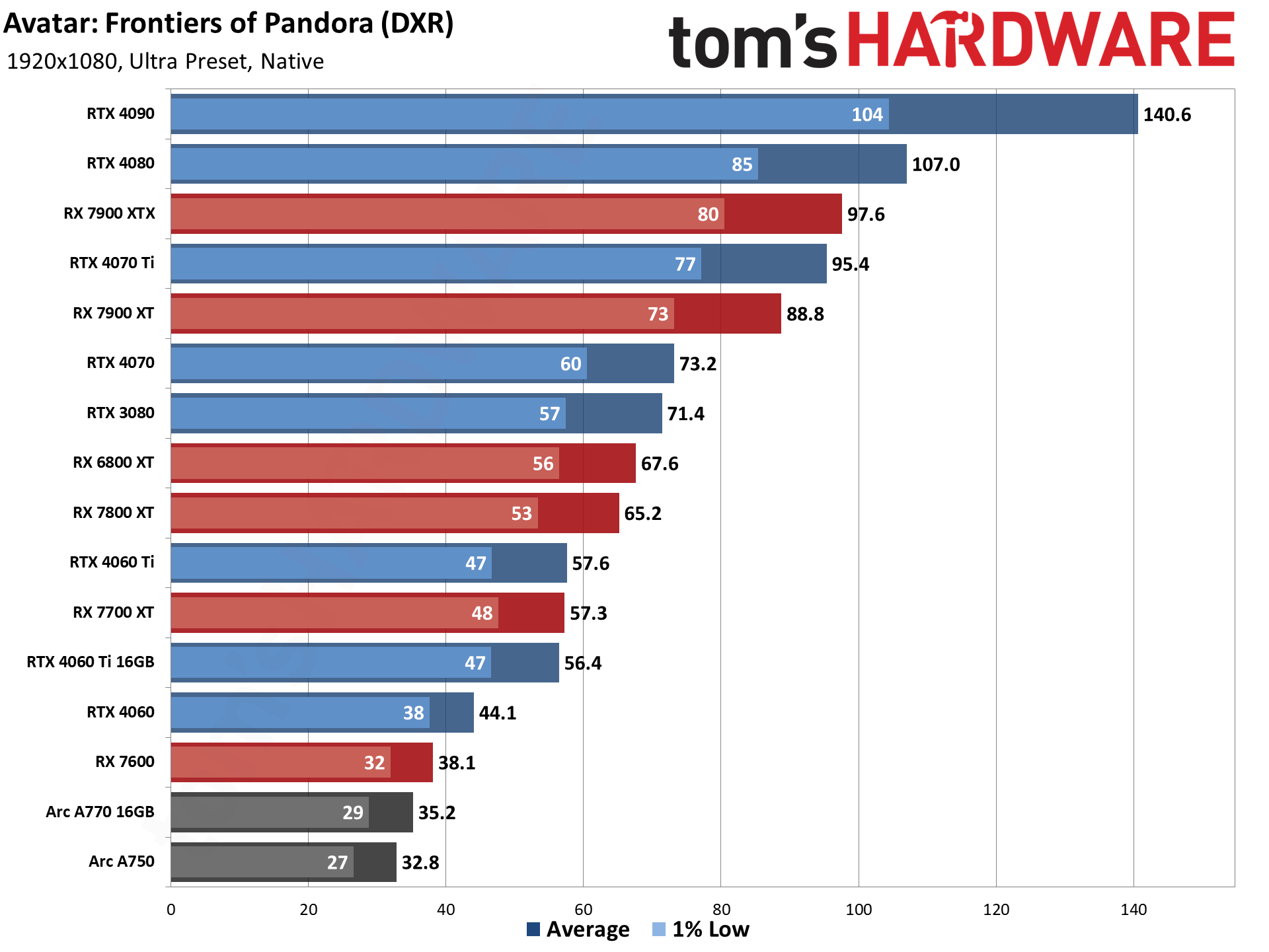

Moving to the ultra preset, there's a pretty big hit to performance across all GPUs, but the general positioning doesn't change too much. The 7900 XTX moves just ahead of the 4070 Ti now, and the RX 7700 XT matches the two 4060 Ti variants, but otherwise the positions and deltas are nearly the same.

Compared to the above medium testing, the RTX 4090 drops by 32%, the 4080 drops 28%, and the 3080 drops 31%. Even lower-spec GPUs like the 4060 with only 8GB on a 128-bit interface see a similar 31% dip. AMD's GPUs likewise see about a 30% reduction in fps at ultra settings, give or take ~2%, and Intel's Arc GPUs dip by 28–29 percent. In other words, there's a similar performance hit across the selection of GPUs.
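All the percentage deltas in this article are simple relative changes. One subtlety worth keeping in mind is that a drop and a gain are not symmetric: a 32% loss going from medium to ultra means medium is about 47% faster than ultra. The sketch below uses made-up fps values purely to illustrate the arithmetic; they are not measured results.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after` in percent; negative means a drop."""
    return (after - before) / before * 100.0


# Hypothetical numbers for illustration only, not benchmark data:
# a card averaging 100 fps at medium and 68 fps at ultra.
medium_fps, ultra_fps = 100.0, 68.0

drop = percent_change(medium_fps, ultra_fps)  # going from medium to ultra
gain = percent_change(ultra_fps, medium_fps)  # the same gap, seen from ultra
print(f"medium -> ultra: {drop:.1f}%")   # medium -> ultra: -32.0%
print(f"ultra -> medium: {gain:+.1f}%")  # ultra -> medium: +47.1%
```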

Hitting 60 fps becomes a lot more difficult, as you'd expect. The RX 7800 XT and above can break 60 fps, while the RTX 4070 and above are needed to get minimum fps above that mark. Only the RTX 4090 manages to keep minimums above 100 fps. Which is perhaps a big part of why the game supports upscaling...

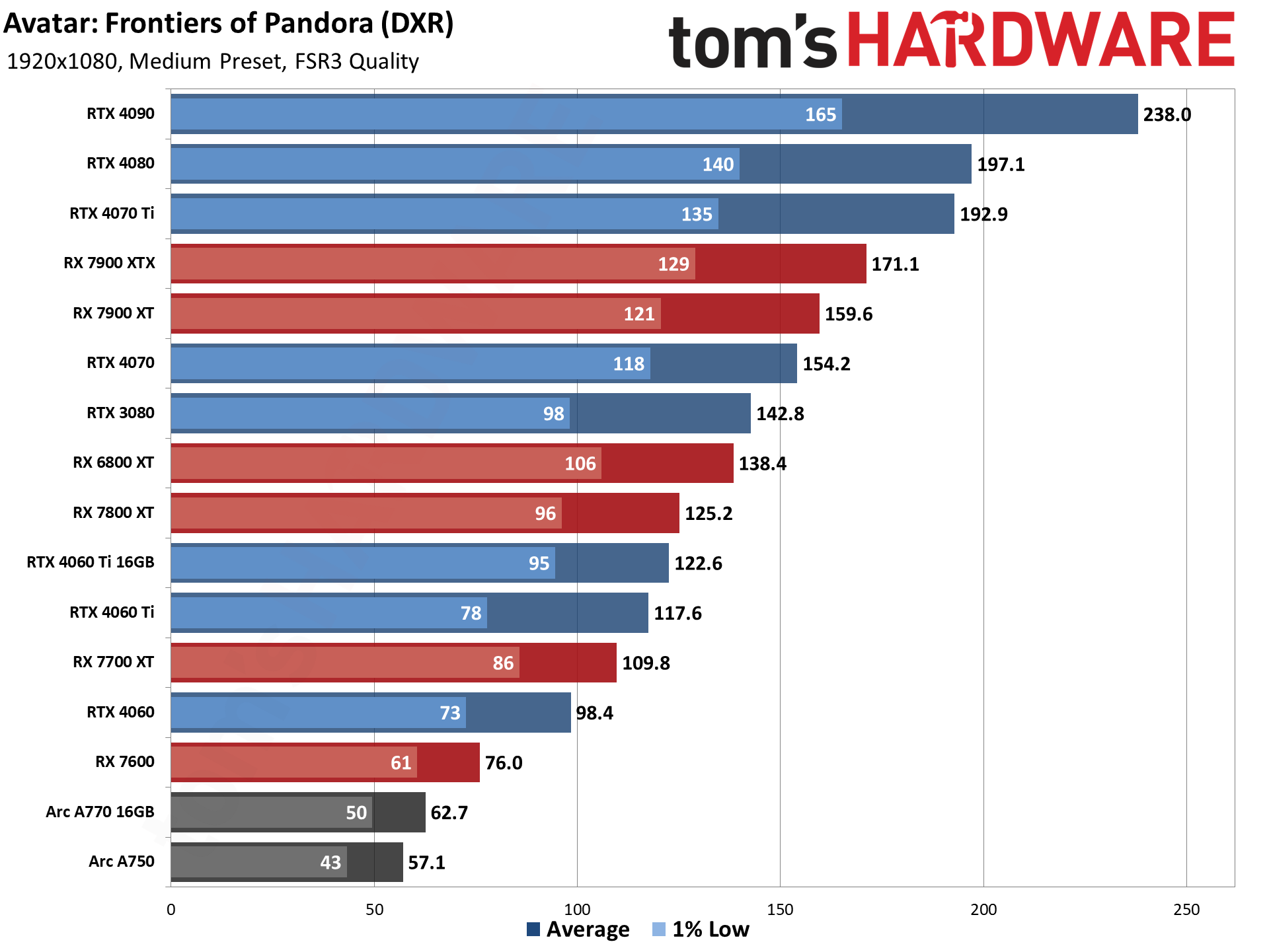

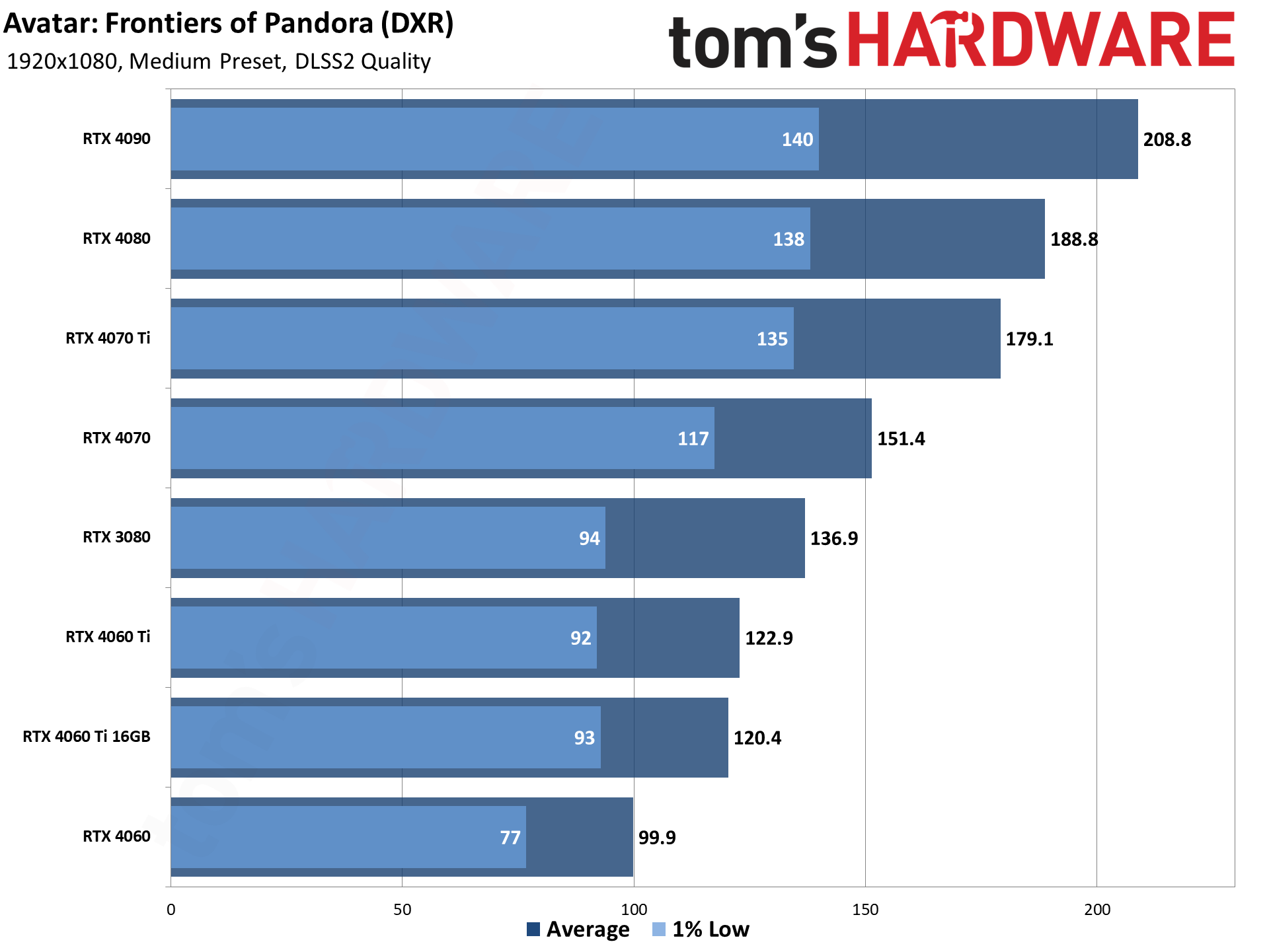

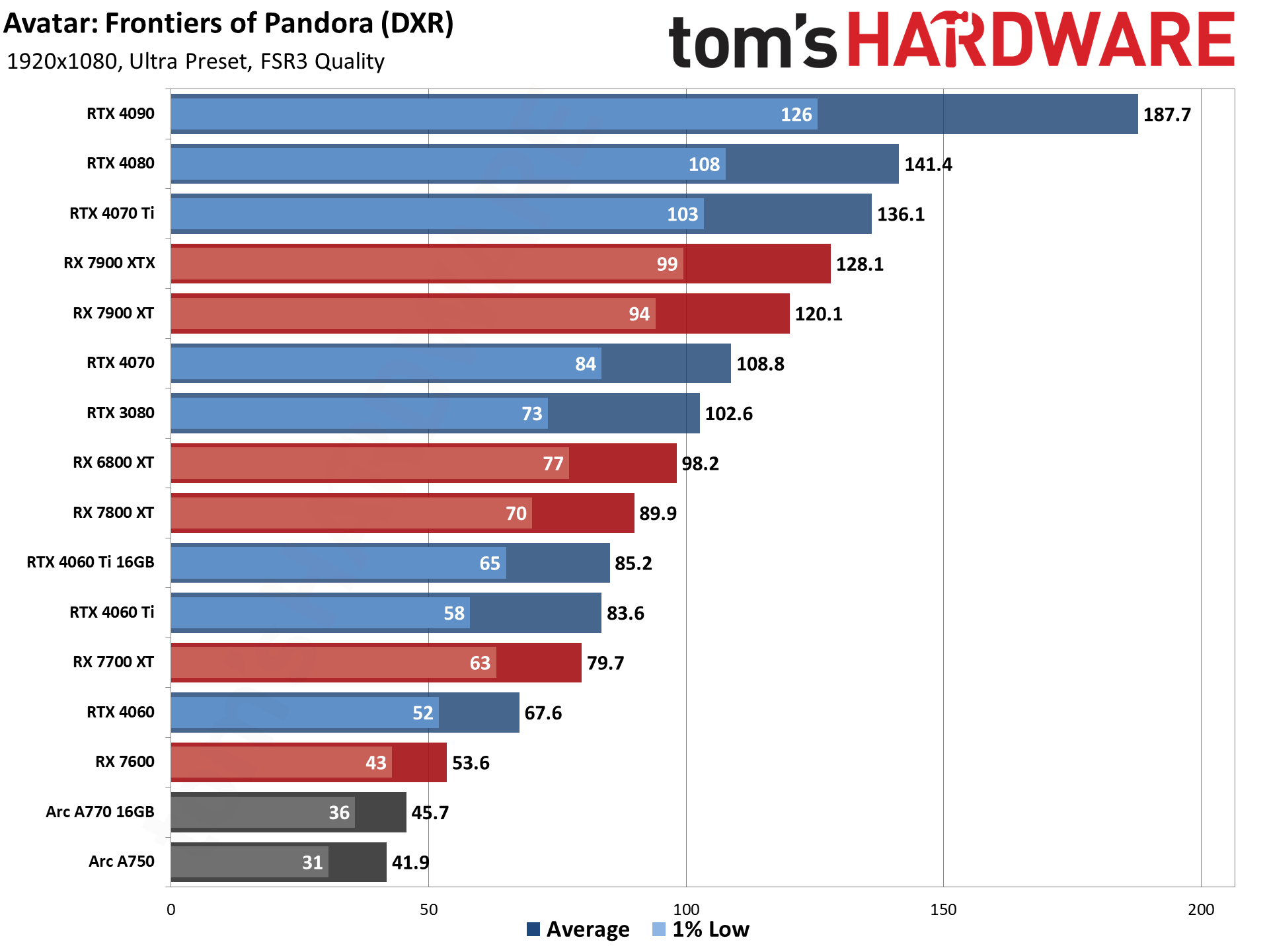

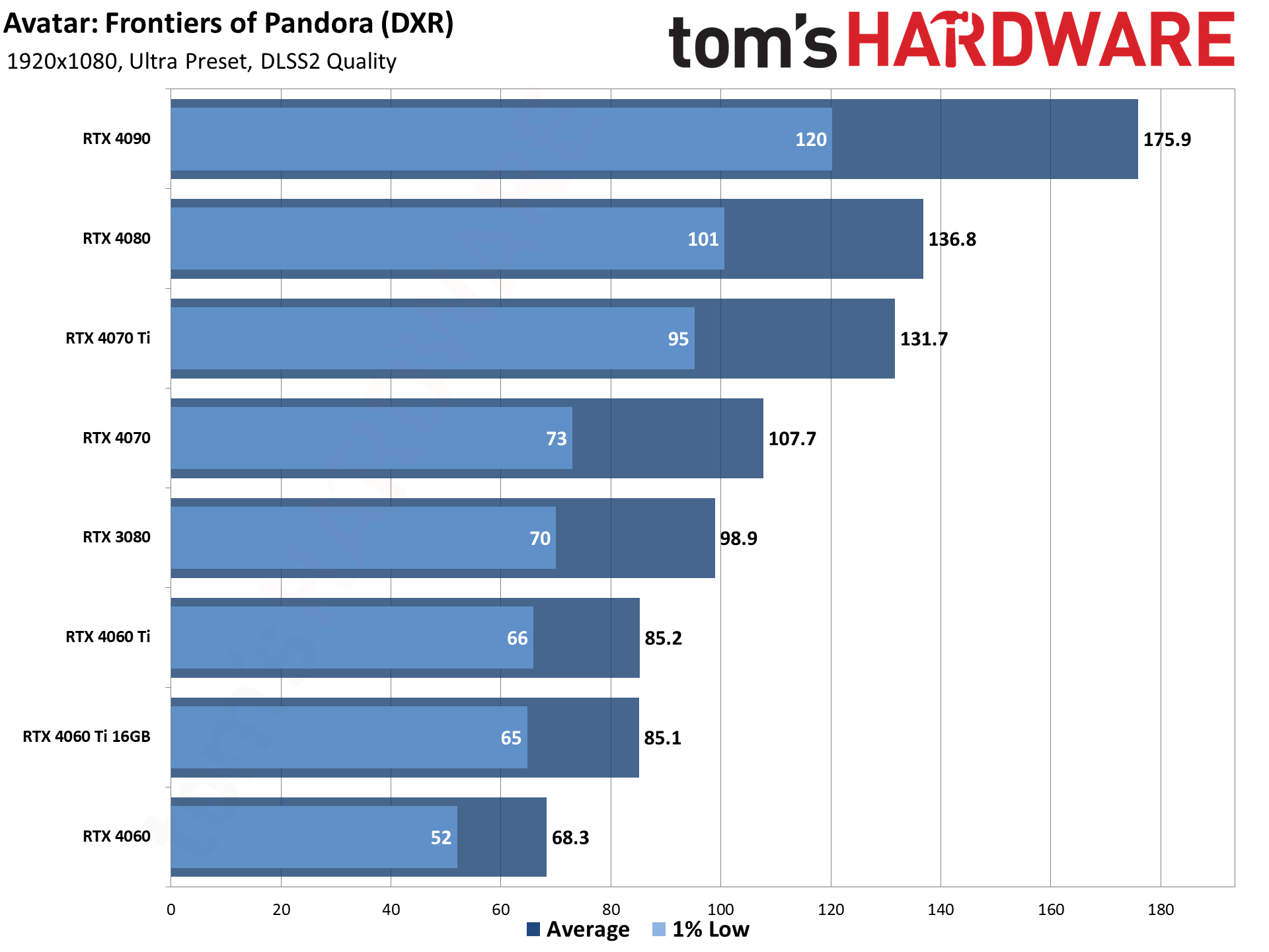

We've got two charts in each of the above galleries, the first showing all GPUs running with FSR 3 Quality mode upscaling, and the second showing the Nvidia cards using DLSS 2 Quality mode upscaling. Quality mode upscaling typically uses a 1.5X scaling factor on each axis, which works out to 2.25X the pixels (roughly a 2X upscaling factor), so in this case we'd expect the game to render at 1280x720. There's no indication of the actual rendering resolution, however, so we'll just have to take that on faith.
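The game doesn't report its internal resolution, but AMD documents fixed per-axis scale factors for FSR 2 and FSR 3 upscaling: 1.5X for Quality, 1.7X for Balanced, and 2.0X for Performance. Assuming Avatar uses those standard ratios, the expected render resolutions can be worked out as below. Truncating to whole pixels is our assumption about rounding.

```python
# Per-axis scale factors AMD documents for FSR 2 / FSR 3 upscaling modes.
# Assumption: Avatar uses these standard ratios (the game doesn't report them).
FSR_SCALE = {"quality": 1.5, "balanced": 1.7, "performance": 2.0}


def render_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Internal resolution for an output size, truncated to whole pixels (assumed)."""
    scale = FSR_SCALE[mode]
    return int(width / scale), int(height / scale)


def pixel_ratio(width: int, height: int, mode: str) -> float:
    """Output pixels per rendered pixel."""
    render_w, render_h = render_resolution(width, height, mode)
    return (width * height) / (render_w * render_h)


for w, h in [(1920, 1080), (2560, 1440), (3840, 2160)]:
    rw, rh = render_resolution(w, h, "quality")
    print(f"{w}x{h} quality -> {rw}x{rh} ({pixel_ratio(w, h, 'quality'):.2f}X pixels)")
```

At 1080p, Balanced mode works out to 1129x635 (about 2.9X fewer pixels) and Performance mode to 960x540 (exactly 4X fewer), which is where the rough 3X and 4X figures for those modes come from.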

Rendering fewer pixels and then upscaling should, in theory, mean higher framerates. However, CPU bottlenecks can start to become a factor. The RTX 4090 at 1080p medium, for example, sees a 15% improvement with FSR 3 Quality (FSR3Q), but only a 1% change with DLSS 2 Quality (DLSS2Q). As we've seen before, that means FSR upscaling in general is better at improving performance beyond a certain framerate threshold. Dropping down to the RTX 4060, meanwhile, yields a 53% improvement with FSR3Q and a 55% improvement with DLSS2Q.

AMD's GPUs show a range of gains as well. The 7900 XTX only improves by 24% at 1080p medium, and 31% at 1080p ultra. The RX 7600 meanwhile sees a 36% boost at medium and a 41% boost at ultra settings.

In other words, the more demanding it is to render a pixel, the greater the benefit you get from upscaling — at least in terms of performance. You can also elect to use higher upscaling factors, balanced being 3X upscaling and performance being 4X upscaling. At 1080p, however, higher levels of upscaling will start to show more of a loss of image fidelity.
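Why do the fastest cards gain so little from upscaling at 1080p? A simple bottleneck model helps: each frame costs some CPU time and some GPU time, and the slower of the two sets the frame rate, while upscaling only shrinks the GPU side. The sketch below assumes GPU time scales linearly with pixel count, that quality mode renders 1/2.25 of the output pixels, and uses hypothetical frame times. Real gains are smaller than this model suggests, because some GPU work doesn't scale with resolution and the upscaler itself takes time.

```python
def fps(cpu_ms: float, gpu_ms_native: float, pixel_fraction: float = 1.0) -> float:
    """Frame rate when the slower of CPU and GPU sets the pace.

    Assumes GPU time scales linearly with the fraction of pixels rendered.
    """
    gpu_ms = gpu_ms_native * pixel_fraction
    return 1000.0 / max(cpu_ms, gpu_ms)


def upscaling_gain(cpu_ms: float, gpu_ms_native: float, pixel_fraction: float) -> float:
    """Percent fps gain from rendering only `pixel_fraction` of the output pixels."""
    native = fps(cpu_ms, gpu_ms_native)
    upscaled = fps(cpu_ms, gpu_ms_native, pixel_fraction)
    return (upscaled / native - 1.0) * 100.0


QUALITY_FRACTION = 1 / 2.25  # quality mode renders about 44% of the output pixels

# Hypothetical frame times, not measurements: the same 5 ms CPU cost per frame,
# paired with a fast GPU (5.5 ms) and a much slower one (20 ms).
print(f"fast GPU: +{upscaling_gain(5.0, 5.5, QUALITY_FRACTION):.0f}%")   # fast GPU: +10%
print(f"slow GPU: +{upscaling_gain(5.0, 20.0, QUALITY_FRACTION):.0f}%")  # slow GPU: +125%
```

Once the GPU time drops below the CPU time, further reductions in rendered pixels buy nothing, which is the same pattern as the RTX 4090 at 1080p.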

Put another way, there appear to be limits to how far DLSS upscaling alone can take you, and that's probably part of why Nvidia created DLSS 3 Frame Generation. Except Avatar doesn't support DLSS 3. We'll see below that DLSS upscaling on the RTX cards starts to match or even slightly exceed (in performance) FSR 3 at higher resolutions.


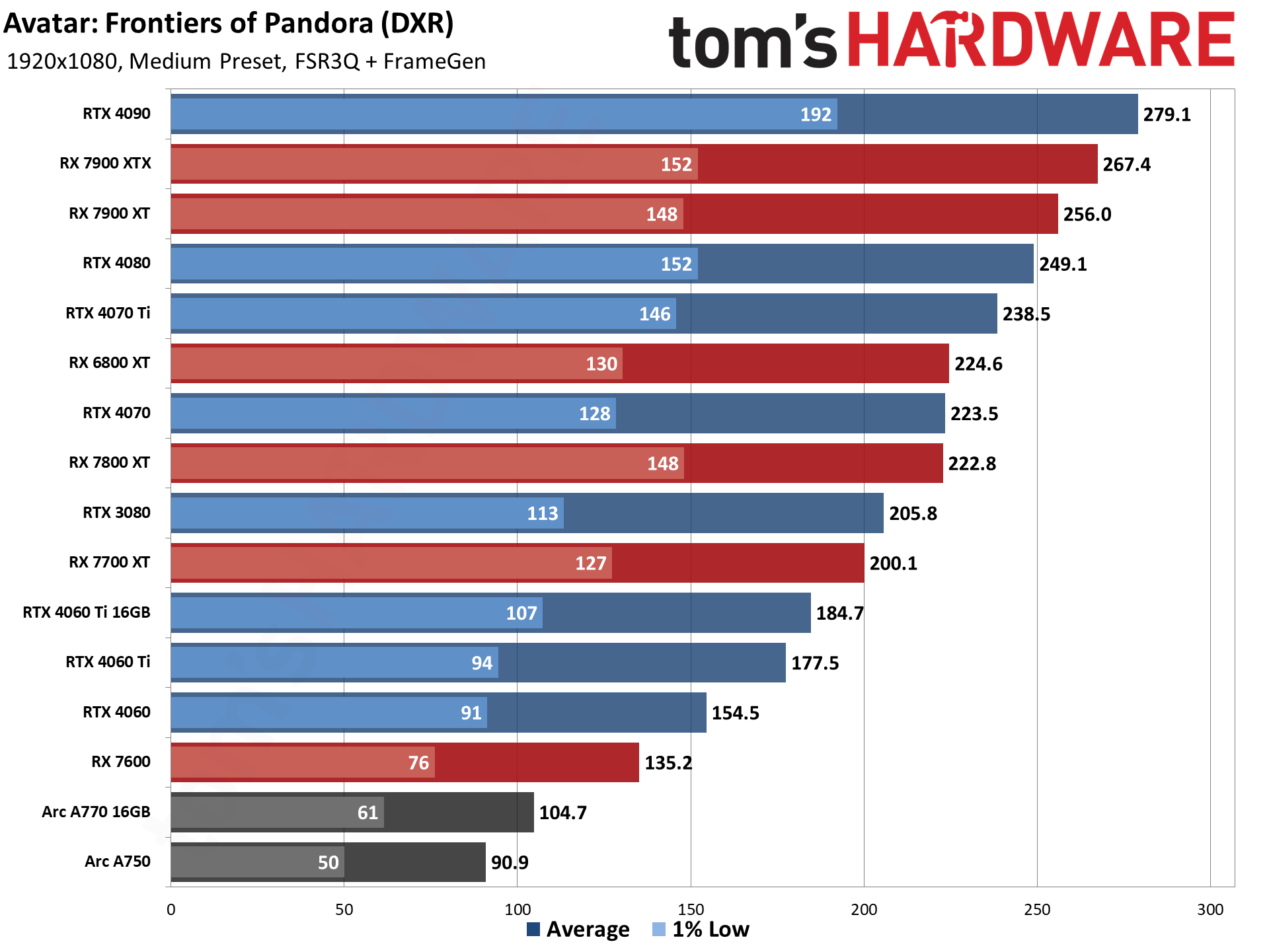

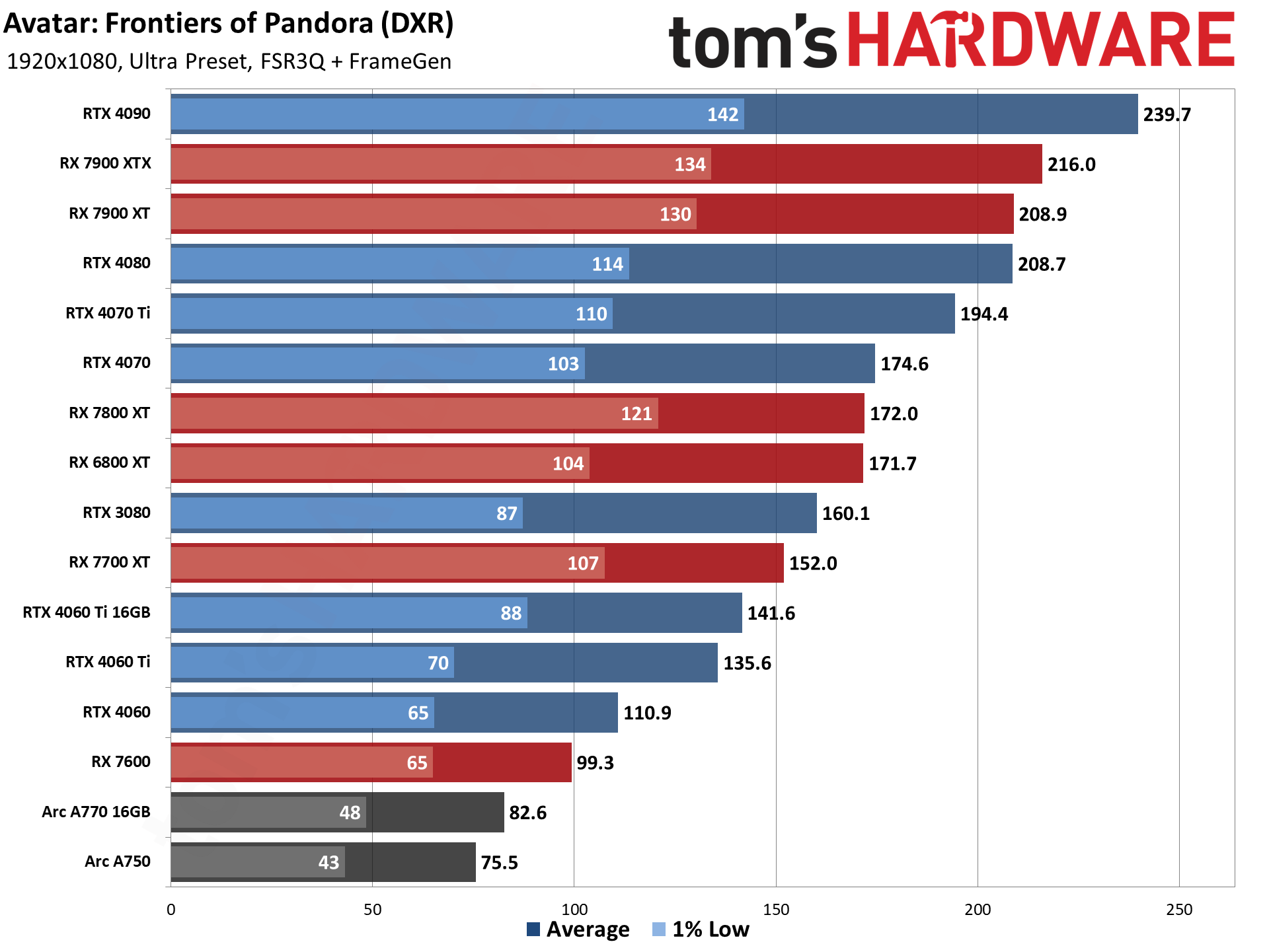

Wrapping up our 1080p testing, we have FSR 3 quality upscaling combined with frame generation. Avatar doesn't support DLSS 3 (there will probably be a mod for that shortly, if not already), nor does it support DLSS 3.5 ray reconstruction, so the only frame generation option is via FSR 3, and you can't use it concurrently with DLSS 2 upscaling. Regardless, the fundamental concept is the same: Take two existing frames and interpolate an intermediate frame.
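The core idea of frame generation, taking two rendered frames and inserting an in-between frame, can be sketched in a few lines. This is deliberately naive: it cross-fades pixel values, whereas FSR 3 and DLSS 3 use motion vectors and optical flow to move objects to their intermediate positions. It does show two key properties, though: the displayed frame count roughly doubles, and a generated frame can't be shown until the next real frame exists, which is where the added latency comes from.

```python
def interpolate(prev: list[float], nxt: list[float], t: float = 0.5) -> list[float]:
    """Naive per-pixel blend between two rendered frames (no motion estimation)."""
    return [(1 - t) * a + t * b for a, b in zip(prev, nxt)]


def with_generated_frames(rendered: list[list[float]]) -> list[list[float]]:
    """Display order with one generated frame between each pair of real frames.

    A generated frame can only be built once the *next* real frame exists, so
    each real frame is held back before display; that is the latency cost.
    """
    shown = []
    for prev, nxt in zip(rendered, rendered[1:]):
        shown.append(prev)
        shown.append(interpolate(prev, nxt))
    shown.append(rendered[-1])
    return shown


# Three tiny two-pixel "frames" of brightness values from the renderer.
rendered = [[0.0, 1.0], [0.5, 1.0], [1.0, 1.0]]
shown = with_generated_frames(rendered)
print(len(shown))  # 5 frames displayed for 3 rendered
print(shown[1])    # [0.25, 1.0], halfway between the first two frames
```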

Here's where things start to get interesting. First, while frame generation tends to be more prone to artifacts than upscaling alone, Avatar didn't seem to have too much trouble. There are issues with UI overlays, but the rest of the screen looked quite decent, at least short of capturing video and stepping through the individual frames. Perhaps more importantly, the potential fps gains from frame generation are much greater. With DLSS 3, we often add the caveat that it increases latency, and that applies to FSR 3 as well, but curiously, the impact didn't feel as noticeable, even at lower base fps (which we'll get to at higher resolutions).

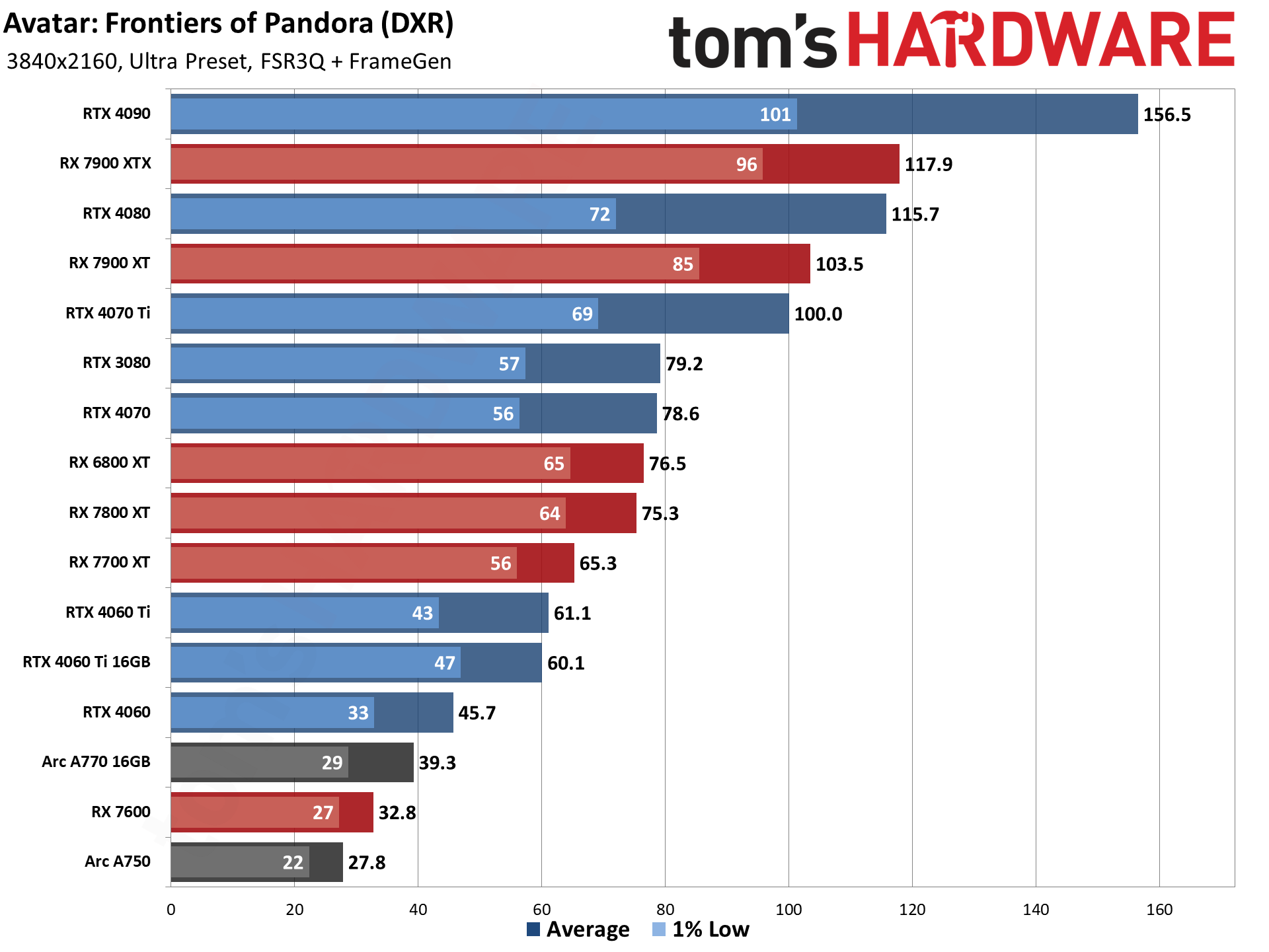

So, with FSR 3 upscaling and frame generation, everything now easily breaks 60 fps, and even the slowest GPUs in our test group are mostly pushing 100 fps and more. The Arc A750 is the sole exception, landing at 91 fps, but it was very much playable. At the top of the chart, the RTX 4090 gets 279 fps while the RX 7900 XTX gets 267 fps. CPU bottlenecks are likely still a factor, but AMD GPUs in general get a bigger boost from FSR 3 than their Nvidia counterparts.

Take the RTX 4070 Ti and RX 7900 XTX as an example. At 1080p medium and native resolution, they're basically tied. With just FSR 3 upscaling, the 4070 Ti is 13% faster, but then throw frame generation into the mix and the 7900 XTX takes the lead by 12%. Some of the difference likely stems from the added bandwidth offered by the 7900 XTX, and the potential gains vary across GPUs from the same family.

The RX 7600, for example, sees a 142% improvement at 1080p medium and 161% at 1080p ultra. The RX 7700 XT shows nearly the same gains (144% and 165%), and the 7800 XT improves by 135% and 164%, but things start to trail off from there. The 7900 XT shows a 106% and 135% improvement, and the 7900 XTX only gets 94% and 121%; again, CPU bottlenecks are a likely factor.

Looking at Intel's Arc GPUs, though, it's not just the CPU. The A770 shows a 112% improvement at 1080p medium and 134% at 1080p ultra, but the A750 only gets a 97% and 130% increase. And Nvidia? The RTX 4060 gets 140% and 152%, while the 4060 Ti only gets 104% and 136%, but the 4060 Ti 16GB gets 117% and 151%. But we'll have to see how things look at 1440p and 4K before coming to any strong conclusions.

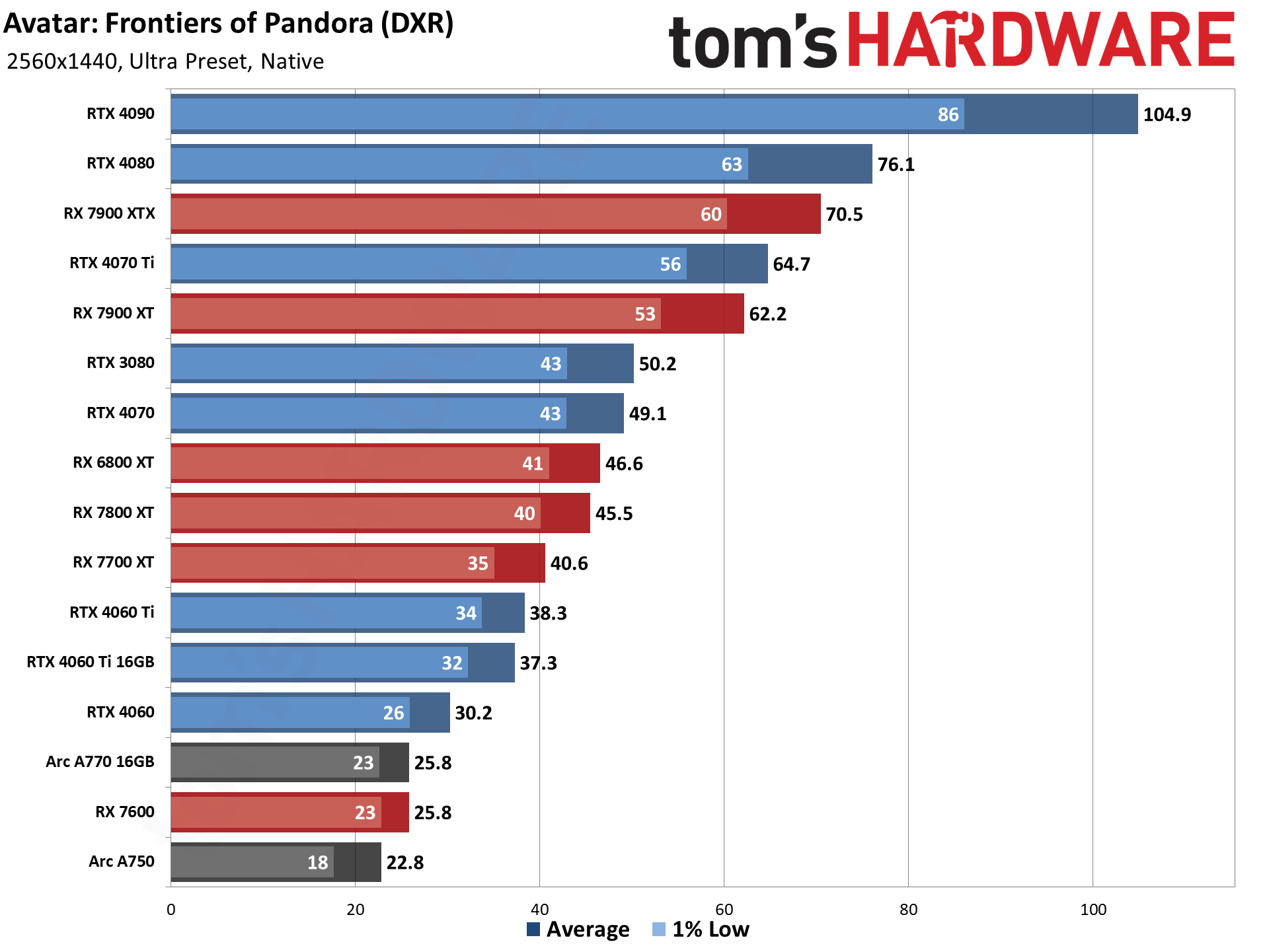

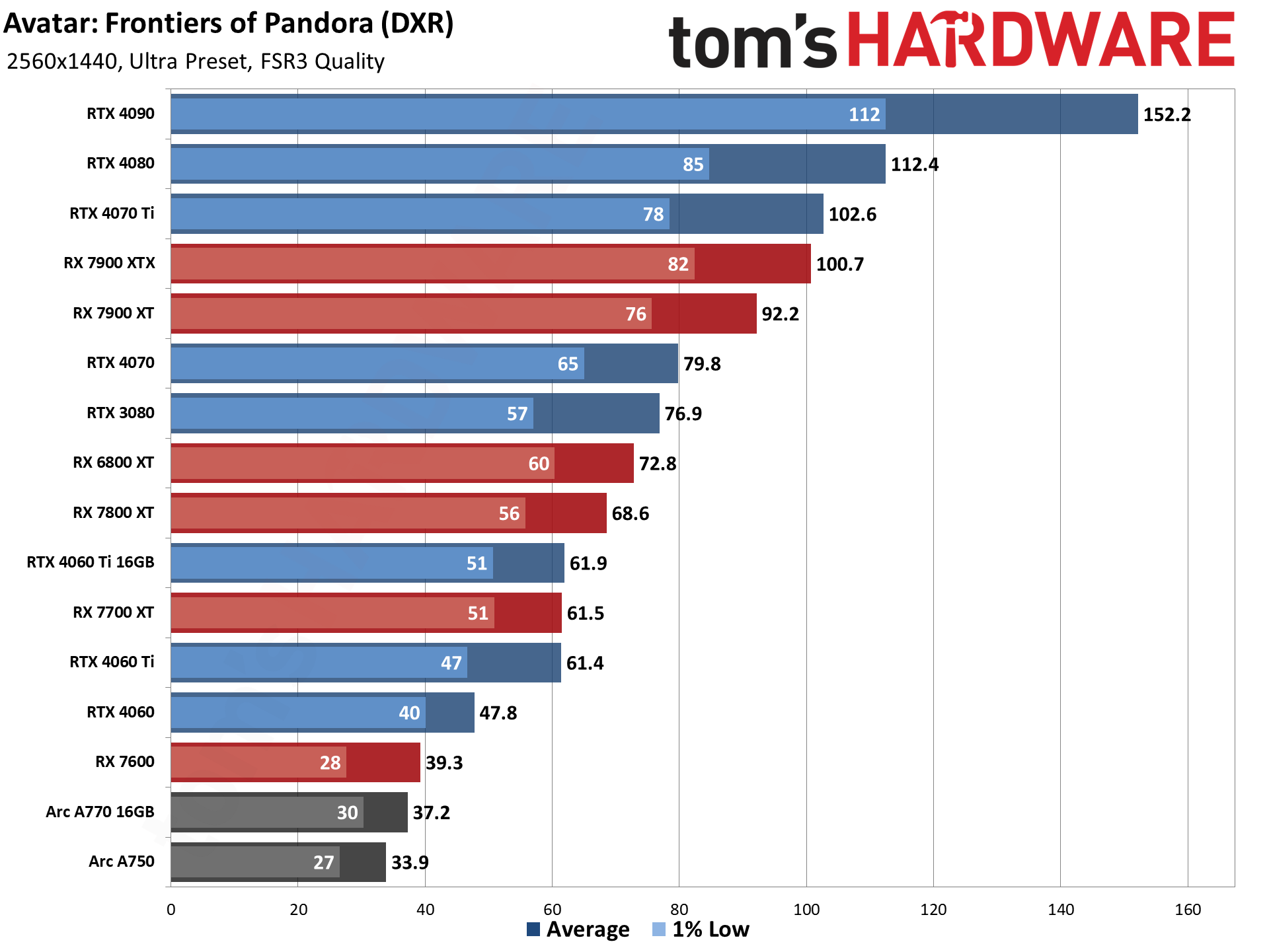

Avatar: Frontiers of Pandora 1440p GPU Performance

Avatar: Frontiers of Pandora starts to struggle at 1440p ultra, at least without upscaling. Now, only the RX 7900 XT and above break 60 fps, and the RTX 4060 technically manages 30 fps but really you'd want something faster.

VRAM utilization doesn't appear to be a critical issue, even on the 8GB cards, as evidenced by the relatively small difference between the average fps and the 1% low fps. For another data point, look at the 4060 Ti 8GB and 16GB: the 8GB card clocks higher, so unless VRAM is a limitation, it comes out ahead, as seen in our charts here. You might get the occasional stutter with an 8GB card running the ultra preset, but the base level of performance on such hardware is likely to be a bigger concern.
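Since we capture frametimes with FrameView, the average and 1% low figures are derived from a list of per-frame times. Below is a minimal sketch of one common way to compute them, assuming frametimes in milliseconds have already been pulled from the capture (parsing the log isn't shown). Here the 1% low is the average fps across the slowest 1% of frames; some outlets use the 99th-percentile frametime instead, so treat the exact definition as an assumption.

```python
from statistics import mean


def average_fps(frametimes_ms: list[float]) -> float:
    """Average fps = frames rendered / total time, i.e. 1000 / mean frametime."""
    return 1000.0 / mean(frametimes_ms)


def one_percent_low_fps(frametimes_ms: list[float]) -> float:
    """Average fps across the slowest 1% of frames (at least one frame)."""
    slowest = sorted(frametimes_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    return 1000.0 / mean(slowest[:count])


# Hypothetical capture: 990 smooth 10 ms frames plus 10 stutters at 25 ms.
capture = [10.0] * 990 + [25.0] * 10
print(f"average: {average_fps(capture):.1f} fps")         # average: 98.5 fps
print(f"1% low:  {one_percent_low_fps(capture):.1f} fps")  # 1% low:  40.0 fps
```

A handful of stutters barely moves the average but drags the 1% low down sharply, which is why a small gap between the two suggests VRAM isn't causing frequent hitches.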

The RTX 4070 Ti has now fallen a bit further off the pace of the RX 7900 XTX, though it still leads the 7900 XT. The RTX 3080 and 4070 likewise hold a slight lead over the RX 6800 XT and 7800 XT. And at the bottom of the chart, the Arc GPUs as well as the RX 7600 have all fallen below the 30 fps mark.

FSR 3 and DLSS 2 upscaling both improve performance a good amount in Avatar at 1440p ultra. With upscaling, everything we tested at least manages 30 fps or more, and the RTX 4060 Ti and above even manage to break 60 fps (though not on 1% lows). Of course, it's worth noting that we're starting with a minimum price of around $200 for the slowest GPU in the charts, the Arc A750.

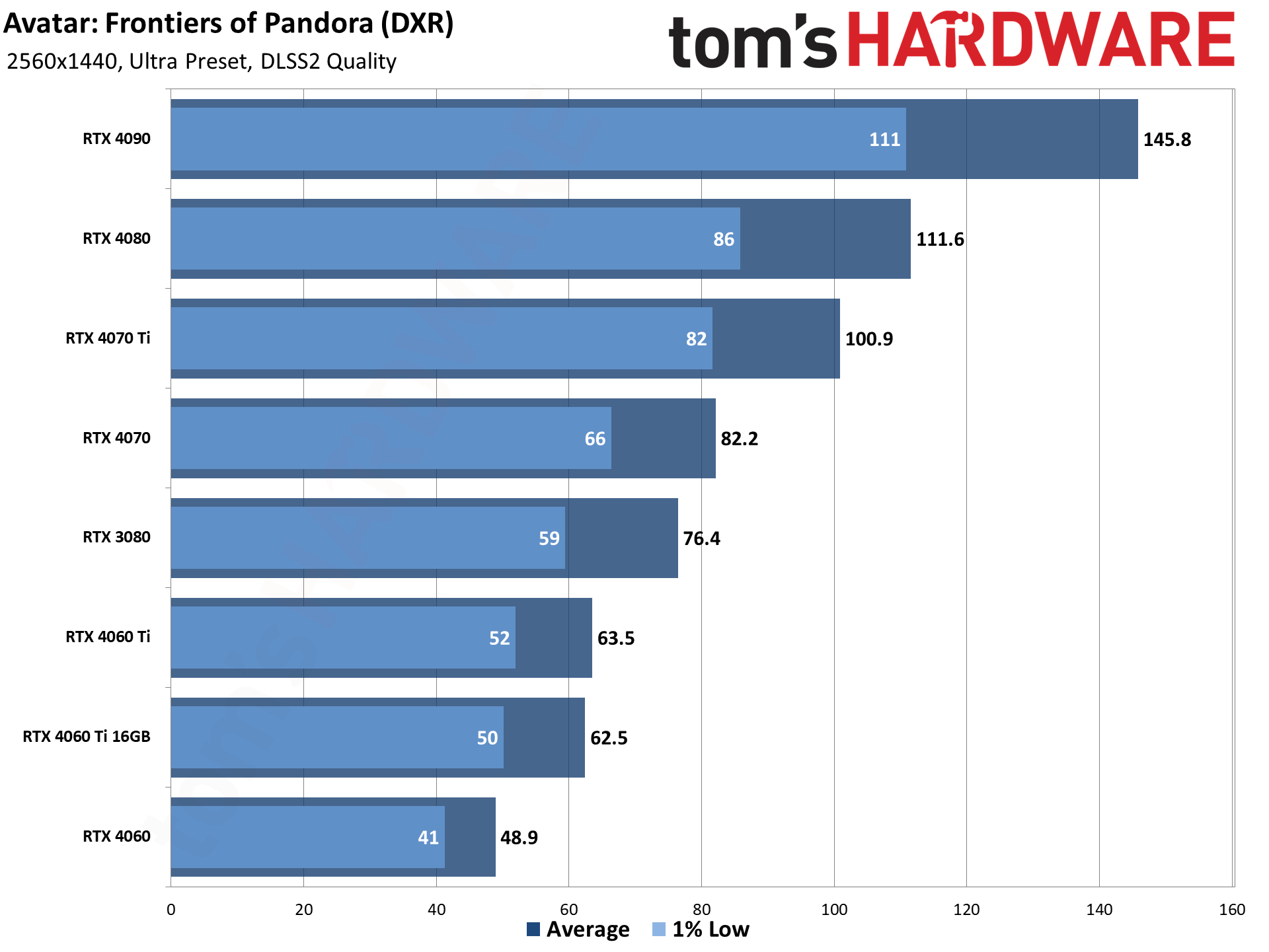

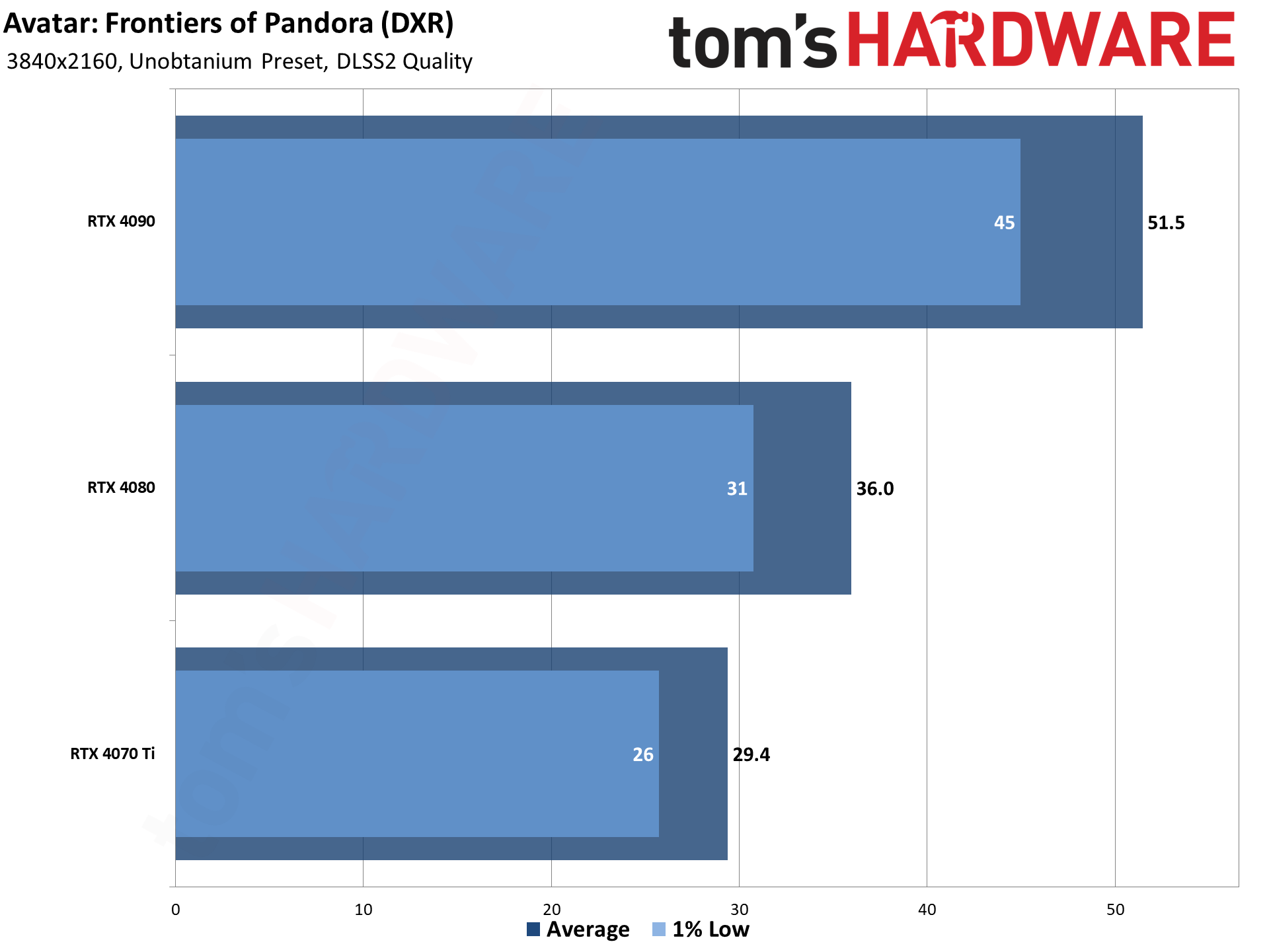

What about DLSS vs. FSR 3 upscaling? For the Nvidia GPUs, performance from FSR 3 is slightly better on the fastest cards (RTX 4070 Ti and above), but DLSS 2 takes the lead on the RTX 4070 and below. Image fidelity does favor DLSS still, in our opinion, so Nvidia users will mostly want to stick with DLSS if they're only going to use upscaling.

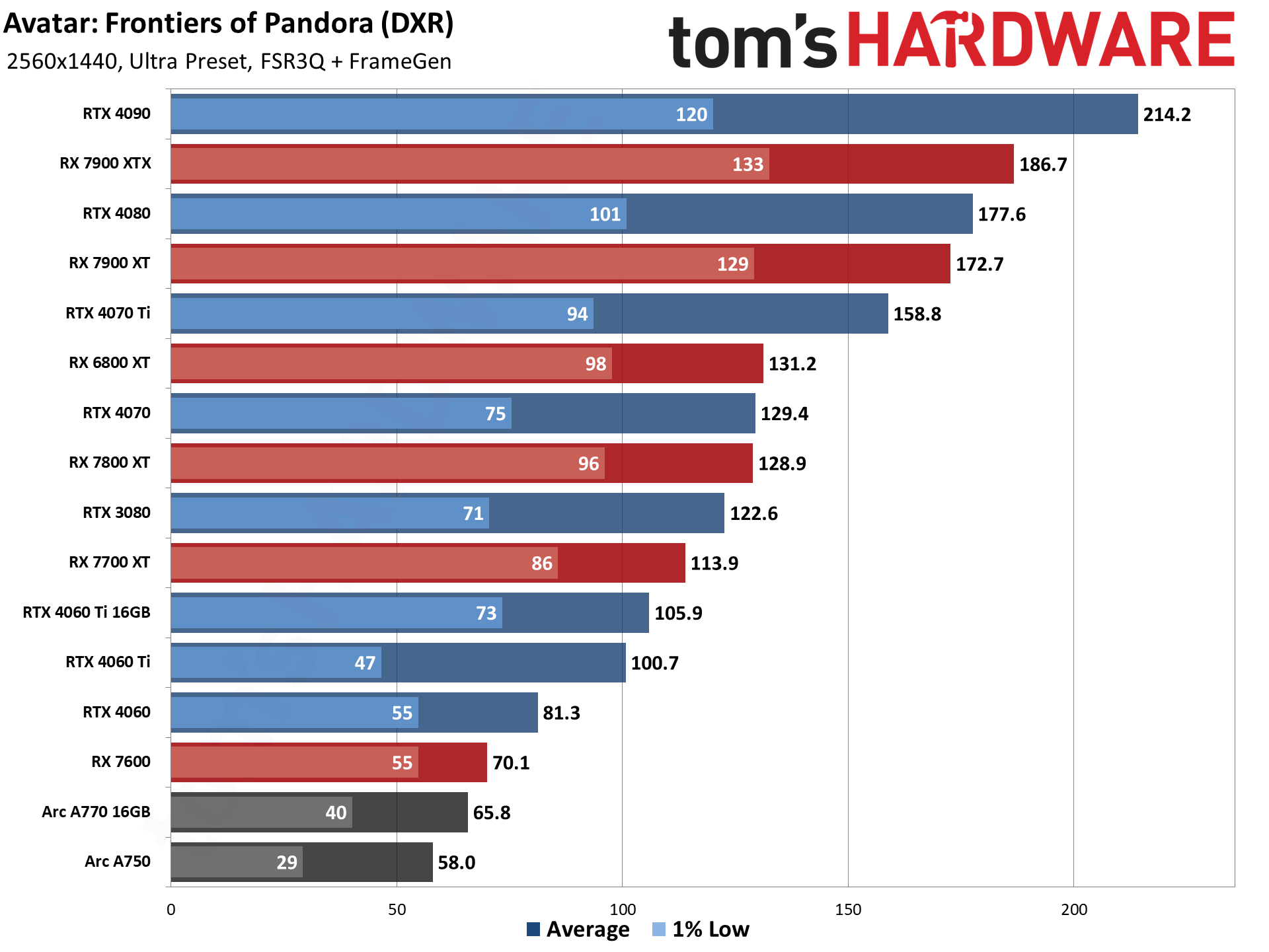

The problem is that, as noted earlier, FSR 3 frame generation is only allowed if you're using FSR 3 upscaling as well. If you want to maximize performance, frame generation again helps quite a lot.

Compared to native 1440p ultra, FSR 3 upscaling combined with frame generation at least doubles the framerate on every GPU we tested. The RTX 4090 sees a 104% boost, the 4080 gets 133%, and the 4070 Ti nets a 145% improvement. Nearly everything else from the green team gets a 160–170 percent improvement, the exception being the RTX 3080, which shows a 144% increase, in line with the 4070 Ti.

For AMD, the gains are even larger. The 'worst' results for team red are the RX 7600 at 171% and the 7900 XTX at 165%. Everything else shows around a 180% improvement from upscaling and frame generation, give or take. Intel's Arc GPUs meanwhile both show a 155% delta versus native 1440p.

We didn't test a bunch of older GPUs, but it does appear that the RTX 40-series in general benefits a bit more from FSR 3 than the prior generation hardware. That's probably because of the larger L2 caches, though other architectural changes may also play a role.

What's more interesting to me is the way the game feels with FSR 3 frame generation. Take the cards at the bottom of the chart, like the RX 7600 and below. Those all averaged 58–70 fps, which would mean a base framerate of 29–35 fps. With Nvidia's DLSS 3 frame generation, that tends to feel sluggish in practice — a game will look like it's running at more than 50 fps, but will feel like it's running at less than 30 fps.
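The base framerate math is straightforward: with one generated frame per rendered frame, the game only simulates and samples input at half the displayed rate. The sketch below applies that to displayed fps figures quoted in this article; the one-generated-per-rendered ratio matches how FSR 3 and DLSS 3 frame generation work here, and the function names are our own.

```python
def base_fps(displayed_fps: float, generated_per_rendered: int = 1) -> float:
    """Rendered (base) fps behind a frame-generated output rate."""
    return displayed_fps / (1 + generated_per_rendered)


def base_frame_time_ms(displayed_fps: float, generated_per_rendered: int = 1) -> float:
    """Time between rendered frames, i.e. how often the game samples input."""
    return 1000.0 / base_fps(displayed_fps, generated_per_rendered)


# Displayed fps figures quoted in this article (1440p ultra and 4K unobtanium).
for shown in (58, 70, 52):
    print(f"{shown} fps shown -> {base_fps(shown):.0f} fps rendered, "
          f"{base_frame_time_ms(shown):.1f} ms between rendered frames")
```

At 58 fps displayed, the game is only producing a new real frame roughly every 34 ms, which is why we'd normally expect it to feel sluggish.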

I didn't notice the same issue with FSR 3. In fact, I'd go so far as to say Avatar remained completely playable using these settings (1440p ultra with FSR 3 upscaling and framegen), where DLSS 3 games that I've played really need to be hitting 80 fps or more to feel okay. Which, honestly, doesn't make much sense to me.

FSR 3 with frame generation is still interpolating intermediate frames, which should add latency and shouldn't feel as smooth as the doubled fps would suggest. That's what I experienced with Forspoken and Immortals of Aveum as well. But whatever's going on in Avatar simply doesn't follow that same pattern, at least not in the testing and playing that I've done.

Avatar: Frontiers of Pandora 4K GPU Performance

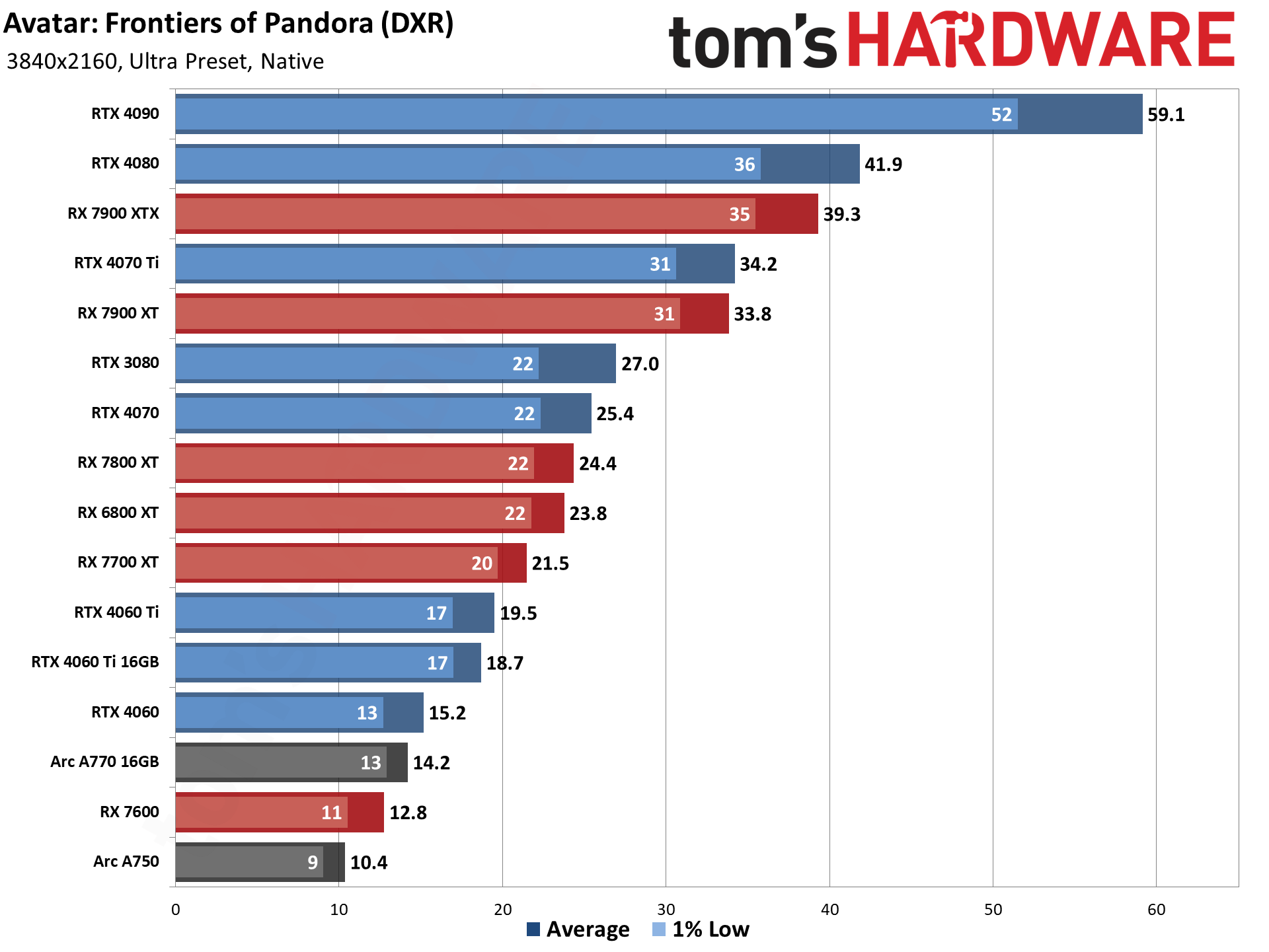

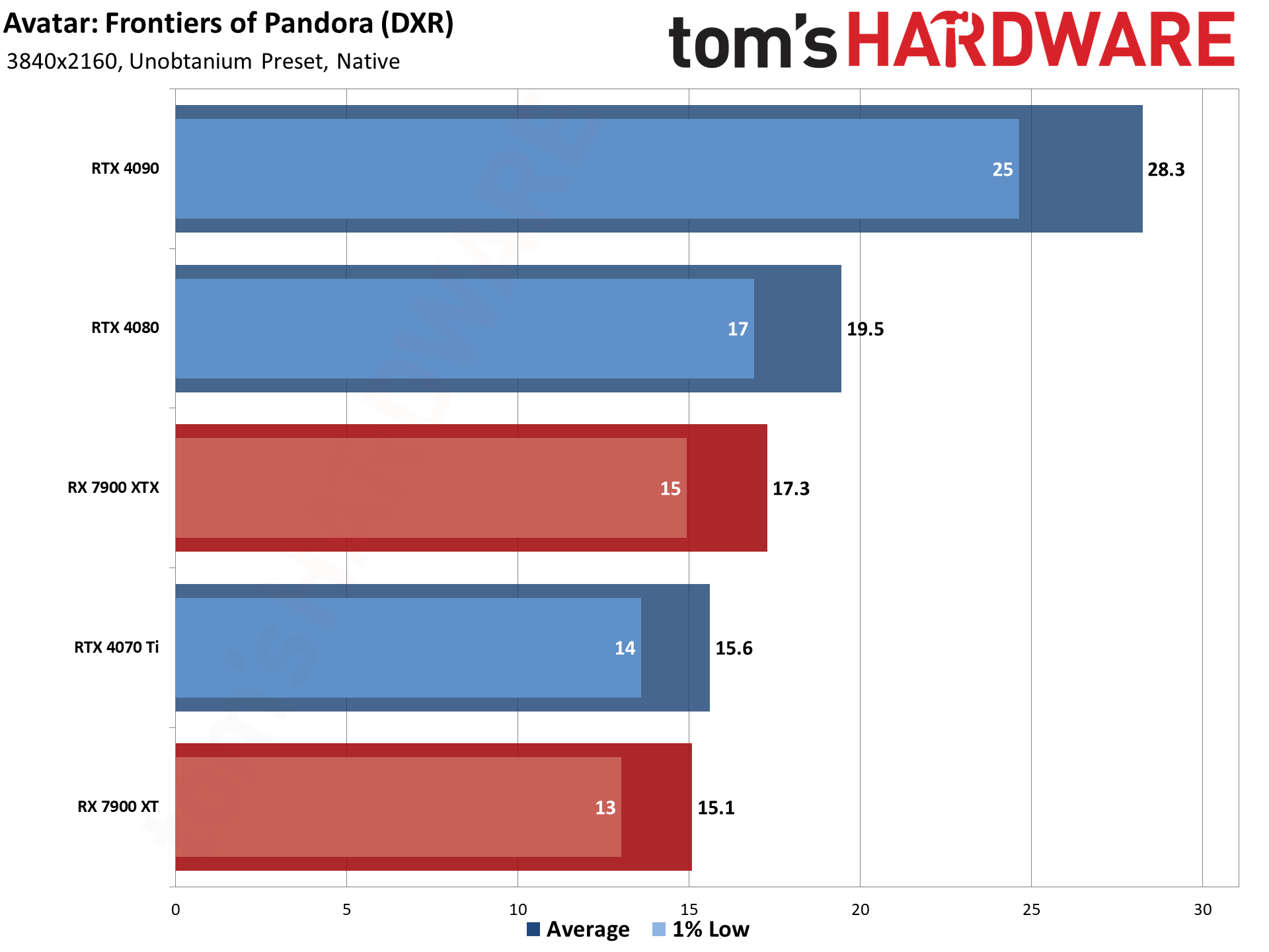

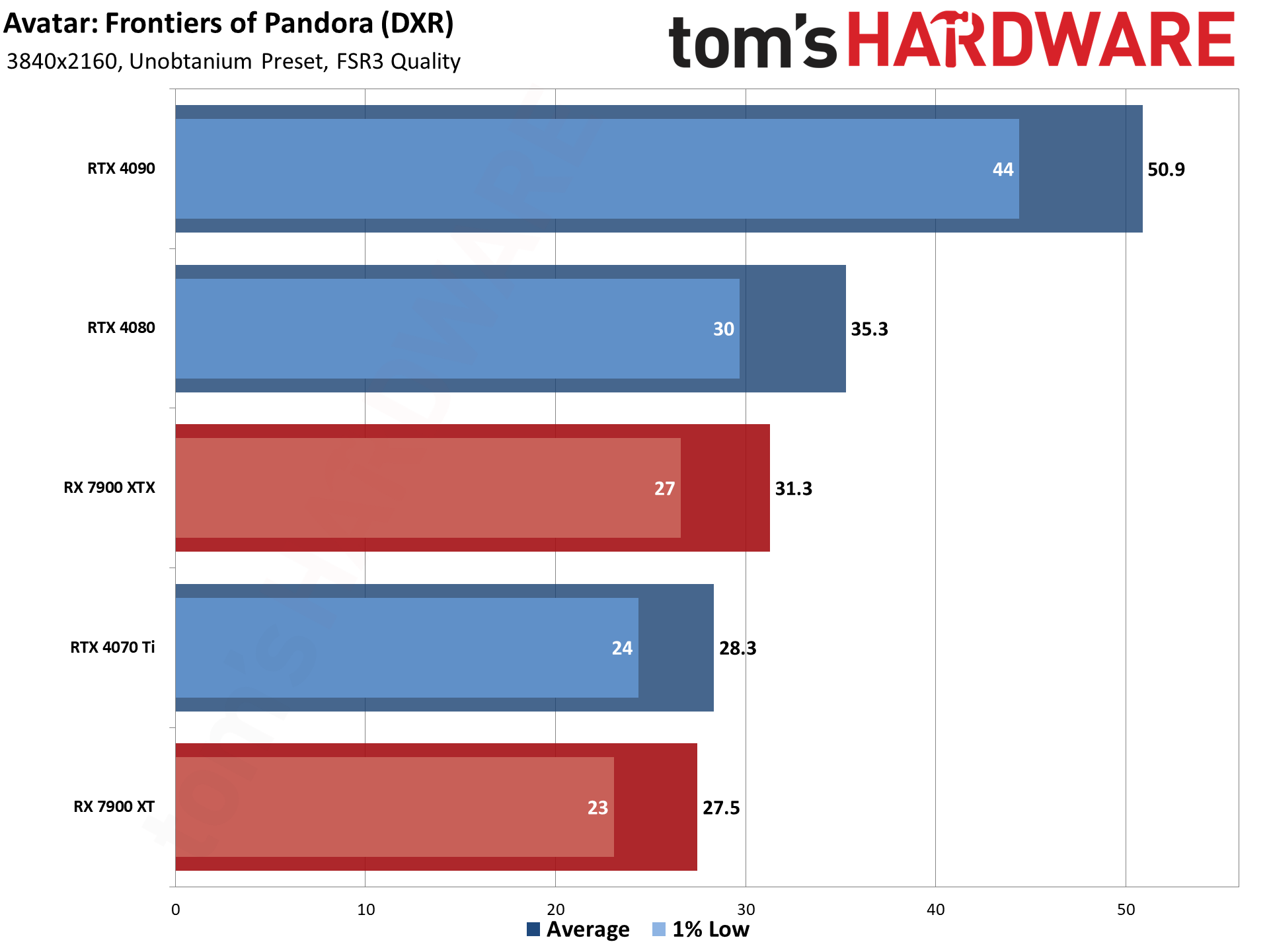

Wrapping things up at 4K, we now have ultra and unobtanium test results, though we only tested the 4070 Ti and above and the 7900 XT and above for the latter. You can see why in the charts, but let's hold off on that for a moment.

4K ultra at native rendering needs a lot of horsepower, so much that even the mighty RTX 4090 wasn't able to average more than 60 fps in our test sequence. There are plenty of areas of the game that are less demanding, but in firefights you'll see performance take a hit, and that's when you need the increased responsiveness most. Only five GPUs manage to break 30 fps, the same five GPUs that we ran at unobtanium, and nothing was really great.

What about 4K unobtanium? Well, if the goal is to render things in such a way as to bring even the fastest current GPUs to their knees, it succeeds admirably. The RTX 4090 almost gets to 30 fps, coming in two fps shy of that mark. The other four GPUs couldn't even break 20 fps. Ouch.

Does the unobtanium mode look significantly better, though? Not really. We'll compare images below, and there are differences, but in general it's just much more demanding for minor gains in image fidelity. The good news is that upscaling and frame generation become even more helpful at 4K.

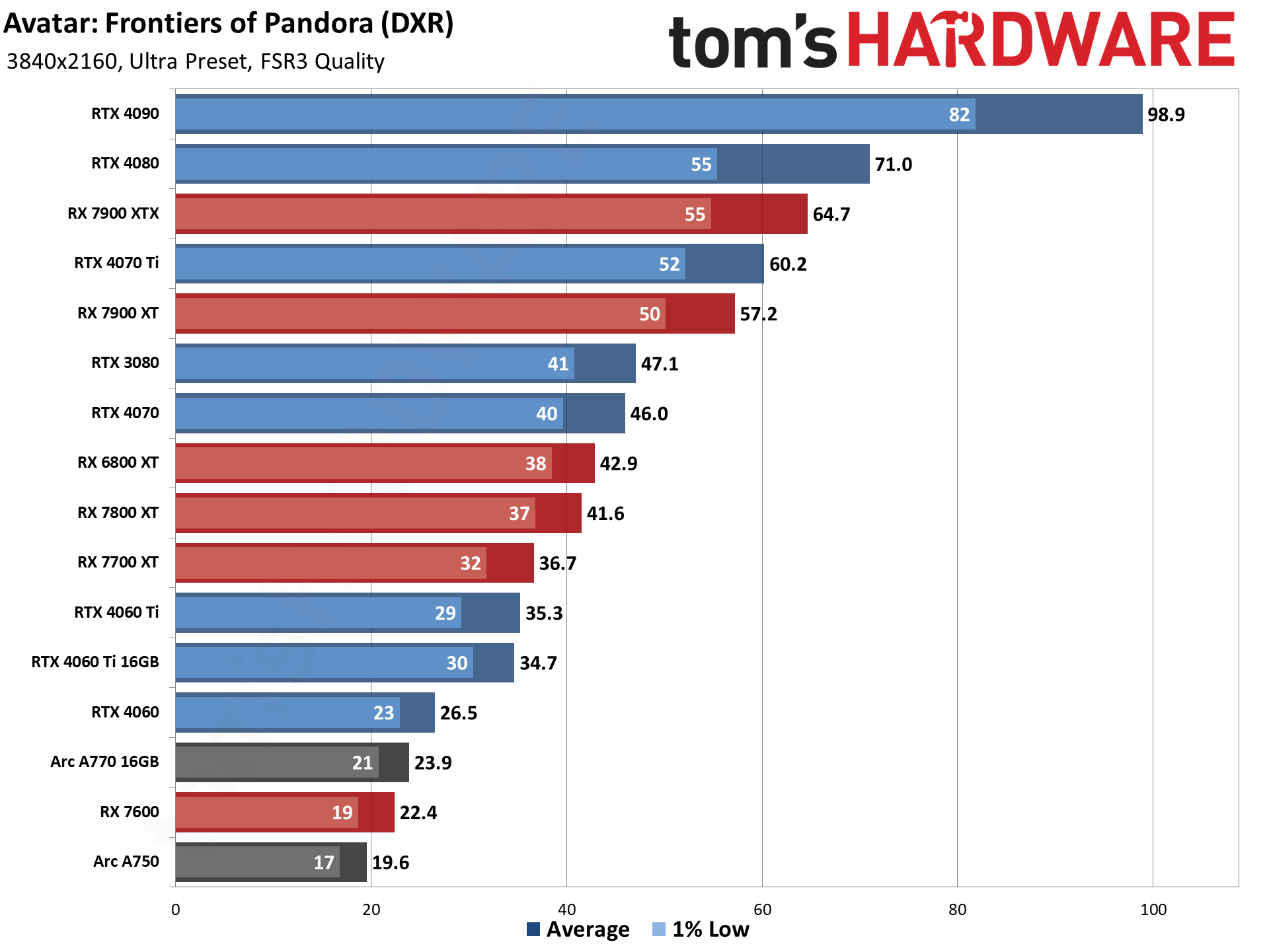

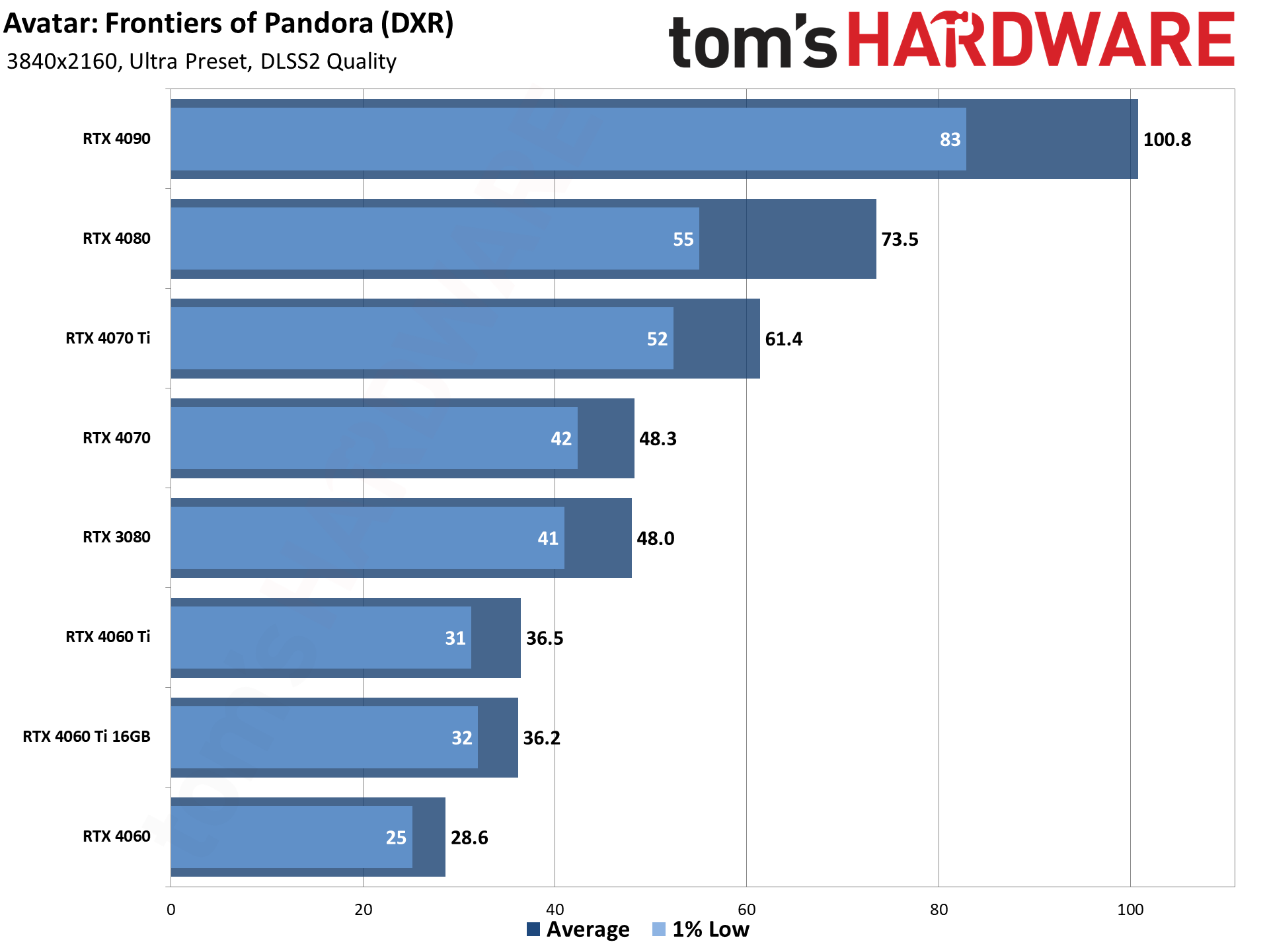

We've got both ultra and unobtanium results in the above gallery, showing FSR 3 and DLSS 2 quality mode upscaling. At 4K, DLSS finally takes a clear win over FSR 3 for performance (it was already leading in fidelity), with all the tested Nvidia cards doing slightly better in DLSS mode. It's not a massive difference (e.g., an 89% improvement with DLSS on the 4060 versus a 75% increase with FSR 3). If you're going to play at 4K on an Nvidia card, DLSS is the way to go: you can definitely make the argument that the image fidelity looks better, and you'll typically get about 80% more performance than native rendering.

The AMD and Intel GPUs only have FSR 3 upscaling as an option, with AMD seeing improvements via quality upscaling ranging from 65% (RX 7900 XTX at 4K ultra) to 75% (most other GPUs). 4K unobtanium gives a higher improvement of 81–82 percent on the two 7900-class cards, though — again, more complex pixel rendering means bigger gains from not having to render as many pixels.

The two Intel GPUs paint a slightly different picture, with the A770 16GB improving by 89% (the best result compared to native of all the tested GPUs) while the A750 'only' improves by 68%. The A750's smaller 8GB of VRAM is probably having an impact, even if that doesn't seem to be much of an issue on other 8GB GPUs. We can't say how XeSS might look in Avatar, but at least FSR 3 doesn't appear to be punishing non-AMD GPUs in any meaningful way.

While we're still dealing with a 2X upscaling ratio, the difference is that 4K has so many pixels that it can really bog down the hardware, especially with the ultra or unobtanium presets. At the same time, halving the number of pixels to render will only get you so far — the RTX 4060 and below all still fail to break 30 fps, with only the 4070 Ti and above able to break 60 fps.

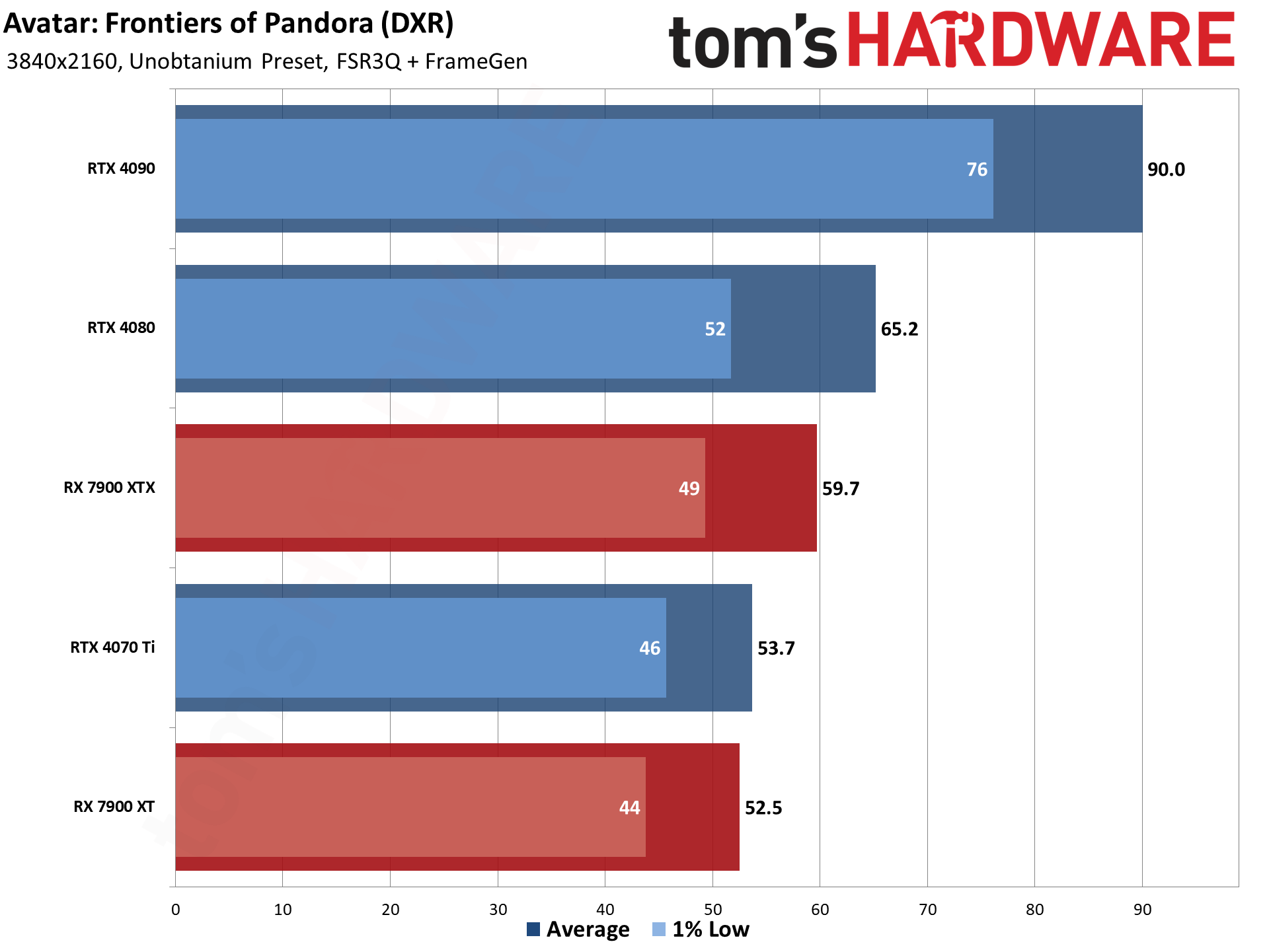

Oh, and that's for ultra settings. Unobtanium is certainly playable on the RTX 4090, and sort of playable on the RTX 4080. But the RX 7900 XTX barely breaks 30 fps and could still use a little more help. Which brings us to the final charts.

At 4K ultra with FSR 3 upscaling and frame generation, there's a bit more of a gap between the 1% lows and the average fps — less consistency in fps, in other words. However, it was quite remarkable to me just how playable most of the GPUs still felt.

The RX 7600 and Arc A750 struggled, but even the Arc A770 and RTX 4060 were viable, and the RTX 4060 Ti and above all felt fine. That's not what we would expect, given the A770 and RTX 4060 delivered 39 and 46 fps with frame generation, respectively. They should feel like they're running at ~19 and ~23 fps... but they don't, in our opinion.

We couldn't collect latency measurements, but I ran around areas of Pandora with multiple cards, just to verify that I wasn't missing anything. There's the occasional fps stutter while loading in new areas, but that's about the only hiccup I noticed. Some people might still prefer skipping frame generation, but it really wasn't a problem for me in this particular game. Hopefully, more games in the future can feel more like Avatar and less like... well, Forspoken, Immortals of Aveum, and a bunch of DLSS 3 games.

The same goes for 4K "unobtanium," which was actually quite attainable on the five GPUs we tested. Only the RTX 4080 and 4090 broke 60 fps, but with an adaptive sync display, the 50+ fps results on the other three GPUs would be fine. Again, running around the world of Pandora at these settings with all five GPUs felt a lot better than I was expecting.

Considering the RX 7900 XT should have a ~26 fps user sampling rate behind its 52 fps frames-to-screen rate, it ought to feel like 26 fps, but to me it felt more like 40 fps. This is why I really wish DLSS 3 had been included, because it would have given a second option to test. Maybe it's just the game itself that feels different, but I can't say for certain.

Avatar: Frontiers of Pandora Image Fidelity Comparisons

Avatar: Frontiers of Pandora is a nice looking game, with a vibrant and colorful world. But there are also some oddities at times with the rendering. There are a few items to discuss.

One big one concerns frame generation. Various UI elements at times cause the rendering around the HUD to flicker. This is most apparent at the top of the screen, where you normally see the compass. Turn in place and you'll see the content behind the compass overlay flicker a lot, as though it's using incorrect data. Turning frame generation off eliminates the problem.

This is one of the big differences between FSR 3 and DLSS 3 when it comes to frame generation. Nvidia opted to interpolate between the two frames on everything, including the UI and other overlay elements. That makes it easier to integrate support, as the Optical Flow Accelerator (OFA) is supposed to just magically handle the work. It can also lead to some rendering errors, since handling the UI is left up to the AI algorithm.

AMD's FSR 3, in contrast, doesn't try to interpolate the UI elements. That makes sense: how would the algorithm interpolate between a digital display that shows a 2 and then a 3, for example? This should normally work quite well, but in Avatar the problem may lie in defining what counts as an overlay. Mission log advice, as another example, shows up with a transparent background and would seem to be an overlay in my mind, but all the flickering around its bounding box suggests it's not being handled properly. This does feel like a bug, though, so hopefully it gets corrected.
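To see why UI that isn't treated as an overlay would flicker, consider a one-dimensional row of pixels while the camera pans. The generated frame is built by moving world content partway along its motion. If a HUD element is baked into the scene, it gets moved along with the world, so it jumps between positions on real and generated frames; if the UI is kept separate and drawn on afterward, it stays put. This is a conceptual sketch with made-up values and hypothetical helper names, not AMD's actual FSR 3 interface.

```python
from typing import Optional


def shift(row: list[float], dx: int, fill: float = 0.0) -> list[float]:
    """Move a 1-D row of pixels dx pixels to the right, filling the gap."""
    n = len(row)
    return [row[i - dx] if 0 <= i - dx < n else fill for i in range(n)]


def composite(scene: list[float], hud: list[Optional[float]]) -> list[float]:
    """Draw opaque HUD pixels on top of the scene (None = no HUD there)."""
    return [s if h is None else h for s, h in zip(scene, hud)]


world = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
hud = [None, None, None, 9.0, None, None, None, None]  # compass marker at pixel 3
pan = 2  # hypothetical: world content moves 2 pixels per rendered frame

# Generated frame = previous frame moved halfway along the motion (1 pixel).
baked = shift(composite(world, hud), pan // 2)     # HUD drawn into the scene first
separate = composite(shift(world, pan // 2), hud)  # HUD kept out of interpolation

print(baked.index(9.0))     # 4: the marker jumped a pixel, so it flickers
print(separate.index(9.0))  # 3: the marker stays where the real frames put it
```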

Another issue I noticed was with thin objects against a high-contrast background. This isn't an FSR 3 problem, as the anomaly persists with no upscaling, with temporal AA, or with DLSS. It's not super annoying, but certain objects definitely stand out as having a bit of a 'halo' around them. I've seen similar stuff in Spider-Man: Miles Morales / Remastered as well as Far Cry 6, so it's likely just part of the way the game engine handles certain things.

There are other aspects of the rendering to discuss as well. I think a big part of why ray tracing doesn't cause a massive performance hit is that the engine accumulates ray-traced data over multiple frames. If you're moving around, things will generally appear mostly as if they're rendered without RT, or at least without too many rays. Stop moving and over the next second or so you'll see surfaces "fill in" with additional detail.

That also applies to anti-aliasing, from what I could tell. Moving around shows plenty of jaggies if you're looking for them — like on RDA vehicles and machinery. Stop moving and over the next several frames all the jaggies will fade out. This happens in other games to varying degrees, but I certainly noticed the effect a bit more in Avatar.
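The "fill in" effect when you stop moving is characteristic of temporal accumulation, where each new frame's noisy or aliased result is blended into a running history, and the history is thrown out when the camera moves. The sketch below assumes a simple exponential blend with a hypothetical weight of 0.1, a common approach in temporal AA; Avatar's actual implementation isn't documented, so this only illustrates the general behavior.

```python
def accumulate(samples: list[float], alpha: float = 0.1,
               resets: frozenset = frozenset()) -> list[float]:
    """Exponential history blend; history is discarded on frames listed in `resets`."""
    history = None
    output = []
    for i, sample in enumerate(samples):
        if history is None or i in resets:
            history = sample  # no usable history (first frame, or the camera moved)
        else:
            history = (1 - alpha) * history + alpha * sample
        output.append(history)
    return output


# Hypothetical noisy per-frame estimate of a pixel whose true value is 0.5:
# each frame on its own is off by 0.4 in alternating directions.
noisy = [0.9 if i % 2 == 0 else 0.1 for i in range(120)]
still = accumulate(noisy)                           # standing still the whole time
moving = accumulate(noisy, resets=frozenset({61}))  # camera moves on frame 61

print(round(abs(still[0] - 0.5), 3))    # 0.4: the first frame is as noisy as its input
print(abs(still[60] - 0.5) < 0.03)      # True: small error after ~1 second at 60 fps
print(round(abs(moving[61] - 0.5), 3))  # 0.4: history thrown away, the noise is back
```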

Anyway, potential rendering oddities aside, let's take a look at the game and how the various presets affect the overall image quality. We're using the five presets for these screenshots, and since we're not moving anywhere, the anti-aliasing, reflections and other effects have the opportunity to look their best.

Image Fidelity Screenshots

(Three screenshot galleries, each showing the Low, Medium, High, Ultra, and Unobtanium presets.)

The indoor setting (first five images) is easiest for picking out differences, in part because there's no rain, fog, or other particle effects going on. Most of the scene is static, so we can spot the differences in shadows and other areas.

Toggling between the low and medium settings, there are a lot of differences that are easily apparent. There are a lot more ambient occlusion effects (look at the stairs for example), and the vending machine on the middle-left casts some additional light. You can also see more light and detail in other areas, though it's obvious if you think about the way that light behaves in the real world that neither result is fully accurate.

Kicking up from medium to high, you get more shadow and lighting changes. Curiously, some areas seem to revert a bit in the way they look, which means the low preset might be 'more accurate' than the medium preset in some ways. But then going from high to ultra, there are yet again changes to the lighting and shadows! I'm reasonably sure the ultra view uses ray tracing for the lighting and shadows, and in general it does look better than the lower presets, but there's no simple toggle to turn RT on or off.

What about the unobtanium preset? Besides cutting performance in half (or more), it looks like there are higher resolution textures, more geometry, and improved lighting and shadows — again. It's probably casting more rays as well. Look at the vertical walls in the above gallery as an example. There are details that become a lot more noticeable at the maxed out setting. Never mind that it's basically impractical on all but the very fastest GPUs, and only then with frame generation; unobtanium does improve the look of the game a bit — but like path tracing, probably isn't worth the performance hit unless you're reading this five years in the future on an RX 9900 XTX or RTX 7090 or whatever.

What about the other screenshots? Honestly, there's so much rain and fog swirling around in the second and third sets of images that it's hard to say what exactly is changing due to the quality settings, and what's just swirling smoke and flashing lightning. I had hoped the water reflections would show clear differences between the settings, but not even the unobtanium setting convinces me that there's a lot of extra ray tracing or anything else happening.

Frankly, you could play Avatar on its lowest settings and probably not notice the reduction in image fidelity all that much. There are certainly differences if you look for them, but nothing that would make or break the game in my view.

Avatar: Frontiers of Pandora Closing Thoughts

Avatar: Frontiers of Pandora gives me a lot more hope for frame generation, particularly AMD's version of the algorithm. If it had done so without any obvious rendering issues, I'd be a lot happier, but assuming those are bugs that can be ironed out, it allowed for a playable experience even at framerates that I'd normally expect to be problematic. Does it look better than DLSS 3 frame generation? The jury is still out, and the fact that the developers didn't add native support for Nvidia's framegen is unfortunate.

As for upscaling, Avatar, like Alan Wake 2, is another game showing that upscaling is basically here to stay. Sure, some elements will look noticeably worse with upscaling, whether DLSS or FSR. But when you're just playing a game and not specifically pixel peeping to try to spot the differences? Console gamers have been playing upscaled games since the PS4 and Xbox One without much in the way of complaints.

AMD made some noise when Avatar first launched about how every RX 7000-series GPU could break 100 fps. What it didn't really dig into is just how much those cards need upscaling and frame generation to get there. Running at native 1080p with the medium preset, the RX 7600 couldn't even hit 60 fps, and quality mode upscaling alone wouldn't get it to 100 fps either. But upscaling plus frame generation? Yes, that's enough to get the 7600 up to 135 fps. It's also enough to get many other GPUs well past the 100 fps mark; it all depends on the settings you choose to run.

Besides the performance requirements, there's also the question of the game itself. Is it any good? Reviews in general are grouping around the "pretty good" mark, depending on how you want to define that. There are plenty of 7/10 scores, with 9/10 and 5/10 being more on the fringe. Naturally, anyone who already loves the Avatar movies will probably find a lot to like here, though personally it all comes across a bit heavy-handed and stilted.

As a showcase for AMD FSR 3, the game does better. I didn't encounter any issues with vsync on (or off), which was a bit of a problem in Forspoken and Immortals of Aveum. Others have gone into far more detail trying to capture videos showing the various settings in action. Personally, the best I can say is that, outside of the rendering issues noted earlier, I was able to just get on with playing the game and not worry so much about the particular settings being used, and that's enough for a lot of gamers.

Now that AMD has released the FSR 3 source code, it's a safe bet we'll see upscaling and frame generation continue to proliferate. If you're on the "fake frames" bandwagon, that's going to be a problem, but I don't think "neural rendering" or other algorithms are really all that different from the approximations we've been seeing in games for decades. If a technique looks good and lets more people have fun playing games, I'm not going to turn my nose up at it.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Bikki

I feel extremely grateful for what AMD did. We the consumers are the main beneficiary. With an open-source approach and decent quality, expect FSR to become a modders' playground, and even Apple to join the game.

Avro Arrow

Ok, so, yeah....WTH? This game is clearly broken somehow if not even the absurdly-expensive RTX 4090 can manage 60FPS average at 4K. It's like AMD and nVidia are trying to outdo each other with the question being "Who can sponsor a title that breaks video cards better?".

The opening salvo came from nVidia with CP2077 (which crushes video cards with its insane RT implementation) and now AMD answers with AFOP (which crushes video cards with its insane rasterisation requirements). Meanwhile, we as consumers are caught in the crossfire (which is pretty apt because games like this make me really miss Crossfire).

"Does the unobtanium mode look significantly better, though? Not really. We'll compare images below, and there are a few differences, but in general it's just much more demanding for extremely minor gains in image fidelity."

^^^^I'm actually chuckling IRL at this because it's exactly how I describe ray-tracing.^^^^ :giggle:

JarredWaltonGPU

Pretty sure Unobtanium turns on more ray tracing along with higher resolution textures. Problem is that there's no clear description of the various settings and when they do / don't use ray tracing. Or maybe Unobtanium increases the cutoff distance for RT calculations?

Anyway, I'm a firm believer that RT can improve image quality when done properly, but doing it properly requires a lot more RT than just doing some shadows, AO, or even reflections. Doing full global illumination (meaning all the lighting and such calculated via RT, i.e., what Nvidia calls path tracing) probably requires at least 500 rays per pixel, and even that isn't really sufficient. (RT in Hollywood movies is probably closer to ~10K or more rays per pixel, and they're doing that at 8K these days.)

There are lots of things in CP77 where the difference between path tracing and even RT ultra is quite substantial, if you know what you're looking for. It doesn't fundamentally alter the game, but it does look better / more accurate. Same for Alan Wake 2. Avatar isn't doing full RT by any stretch, even at Unobtanium, but it does more shader calculations per pixel for sure. A lot of those calculations are just going to be the same as the faster approximations done at ultra, though.

JarredWaltonGPU

AloofBrit said: Nitpick - which version of 3080 was tested?

The 10GB, which is the default version. The 3080 12GB will always show as "RTX 3080 12GB" in my charts and tests, and it was a relatively rare beast compared to the 10GB variant. I included the 6800 XT and 3080 10GB, as those were the two most representative high-end cards of the previous generation IMO.

@JarredWaltonGPU

I'm asking this as a 4090 owner who would love to try this at 4K Unobtanium: in your experience, is the frame rate situation likely to improve even slightly with newer drivers and/or game updates?

Avro Arrow

Oh, there's no question about that. What I've always believed is that while RT and/or PT are the future, PC tech isn't advanced enough to use them properly yet. When it's advanced enough to run RT and/or PT smoothly at 60 FPS at 1440p or 2160p, then (and only then) will it be worth it to me to turn it on. This is because I honestly believe that games are already beautiful without it, so it's not like anybody's suffering if RT/PT isn't turned on.

Hell, growing up, I gamed on an Atari, ColecoVision, Intellivision, TRS-80, C64, NES, Genesis, N64, PS2, PS4 and PC. I guess that since I've been gaming so long and have experienced pretty much every level of graphics, what matters most to me is the game's content. I don't think I'm unique in that regard when you consider how popular mobile games and online MMORPGs are despite the fact that they don't even come close to matching the graphical fidelity of modern AAA titles. A game is either fun or it's not, regardless of how pretty the graphics are.

I absolutely agree with you, but like I said, our tech isn't there yet, so it's horrifically expensive and its performance generally sucks buttocks. Just think of how many people foolishly spent over $2,000 on an RTX 2080 Ti mere months before the launch of the RTX 30-series cards. At the time, the RTX 2080 Ti was "the pinnacle of RT performance" but, looking at it now, it still sucked, despite the insane price.

When the RTX 20-series was first released, Jensen Huang said his (in)famous words:

"IT JUST WORKS!"

Here we are, five years later (which, as you know, is an eternity in PC tech), and it's still not true. How he managed to convince so many just blows my mind.

vehekos

I will never play this game, because I'm absolutely creeped out at being forced to play the game as a woman in first person.

I watched YouTube videos, and there is something in the sound design that provokes a deep feeling of disgust in me.

Sleepy_Hollowed

Is there a problem as to how women are treated, even in fiction?

This is a rhetorical question.

It's quite insane that the games did not feel sluggish.

I guess the tech would have its uses in slower games.