AMD Introduces Radeon Instinct Accelerators, One Based On Vega 10

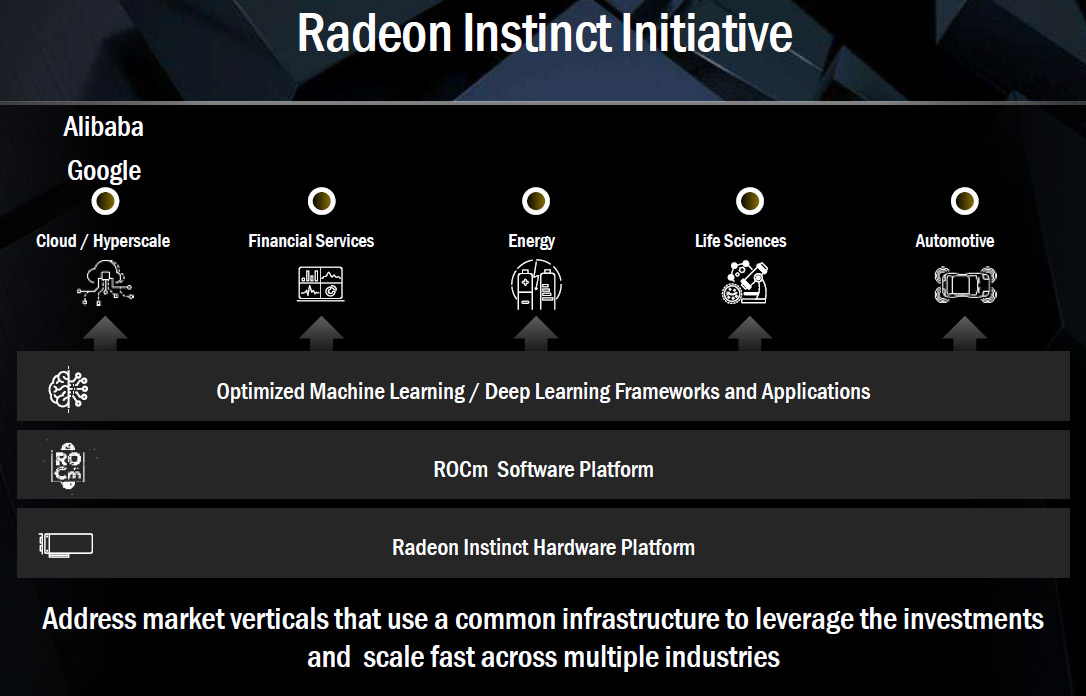

The Tom’s Hardware U.S. and German teams are still unpacking their bags from spending two days in Sonoma, California with AMD’s CEO, Dr. Lisa Su, CTO Mark Papermaster, Radeon Technologies Group chief architect Raja Koduri, and other company leaders. We naturally covered several topics during this Technology Summit (yes, including Vega 10 and Zen), and they’ll be dissected in the days and weeks to come. First, though, the company wanted to announce a trio of add-in cards designed to accelerate deep learning inference and training, along with an open-source library tuned to exploit their abilities. Nvidia has a big head start in the GPU-accelerated machine intelligence market, so AMD has no time to waste here.

Meet Radeon Instinct

All three cards belong to a new family of products called Radeon Instinct. Despite what’s written on their shrouds, though, the underlying hardware we’re talking about is largely familiar to the Tom’s Hardware audience. The entry-level model, Radeon Instinct MI6, employs a Polaris GPU, the middle MI8 is based on the Radeon R9 Fury Nano’s Fiji processor, and the flagship MI25 boasts an upcoming Vega-based chip. AMD said the entire lineup is passively cooled, but that’s a little disingenuous. Each model is going to have its own airflow requirement in a rack-mounted chassis, and those fans are anything but quiet.

The Radeon Instinct MI6 is specified to include 16GB of memory and hit the same 150W board power figure as AMD’s desktop-class Radeon RX 480. A claimed peak FP16 rate of 5.7 TFLOPS allows us to work backward to a frequency of around 1,240MHz, because the Ellesmere processor handles half-precision FP16 at the same rate as FP32. Further, a memory bandwidth specification of 224GB/s corresponds exactly to the 7Gb/s GDDR5 found on the 4GB Radeon RX 480.

Like Polaris, the Fiji processor has a 1:1 ratio of FP16 to FP32, so the MI8 card’s 8.2 TFLOPS half-precision compute rate is identical to Radeon R9 Fury Nano’s single-precision spec at a 1,000 MHz core frequency with all 4,096 of its shaders enabled. Of course, that card is limited to four stacks of first-gen HBM, so the Radeon Instinct MI8 inherits a 4GB capacity ceiling as well.
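The back-of-envelope figures for the MI6 and MI8 can be reproduced with two simple formulas. This is only a sketch: the function names are illustrative, and it assumes published specifications that the text above does not spell out, namely 2,304 shaders and a 256-bit GDDR5 bus for the Polaris part, a 4,096-bit HBM interface at 1Gb/s per pin for Fiji, and two floating-point operations per shader per clock (one fused multiply-add).

```python
def peak_tflops(shaders: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak rate: shaders x clock x FLOPs per shader per clock."""
    return shaders * clock_mhz * 1e6 * flops_per_clock / 1e12


def clock_mhz_from_tflops(tflops: float, shaders: int, flops_per_clock: int = 2) -> float:
    """Invert peak_tflops to recover the clock implied by a quoted peak rate."""
    return tflops * 1e12 / (shaders * flops_per_clock) / 1e6


def bandwidth_gb_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Memory bandwidth in GB/s: bus width x per-pin data rate / 8 bits per byte."""
    return bus_width_bits * gbit_per_pin / 8


# MI6 (Polaris): assumed 2,304 shaders and a 256-bit bus with 7Gb/s GDDR5.
mi6_clock = clock_mhz_from_tflops(5.7, shaders=2304)
mi6_bandwidth = bandwidth_gb_s(256, 7.0)

# MI8 (Fiji): 4,096 shaders at 1,000MHz; assumed 4,096-bit HBM at 1Gb/s per pin.
mi8_rate = peak_tflops(4096, 1000)
mi8_bandwidth = bandwidth_gb_s(4096, 1.0)

print(f"MI6 implied clock: {mi6_clock:.0f} MHz, bandwidth: {mi6_bandwidth:.0f} GB/s")
print(f"MI8 peak rate: {mi8_rate:.2f} TFLOPS, bandwidth: {mi8_bandwidth:.0f} GB/s")
```

Because both chips process FP16 at the FP32 rate, the same formula serves for either precision here.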

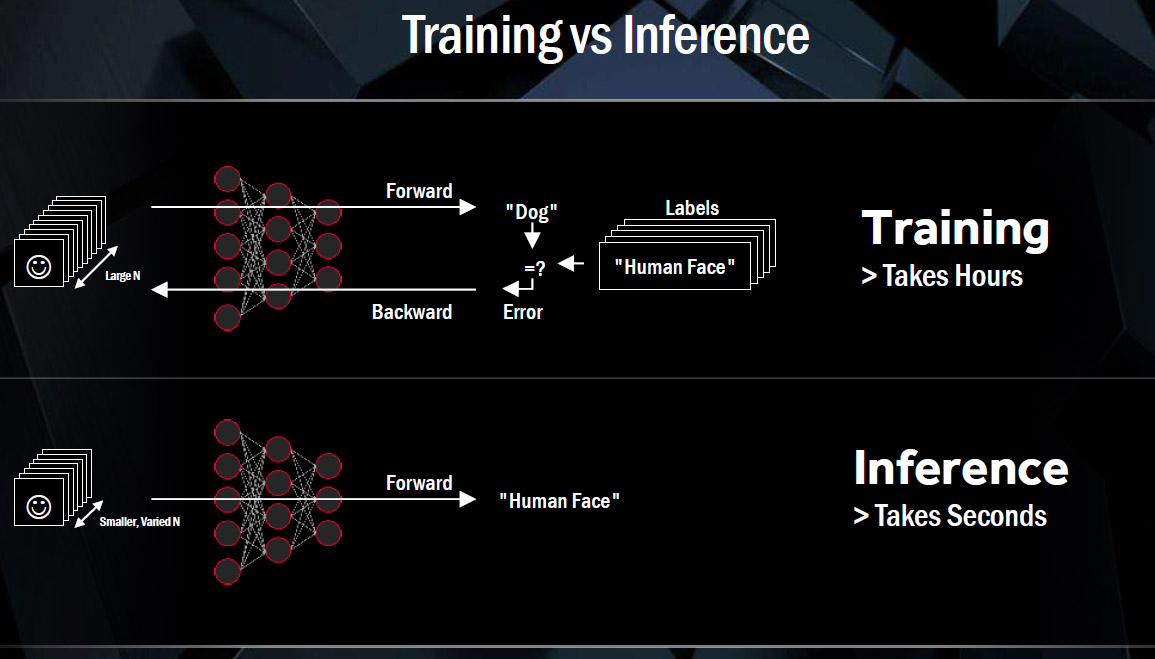

AMD said the MI6 and MI8 are ideal for GPU-accelerated inference, which is what a neural network does after it has gone through its more intensive training stage. Inference often involves fewer inputs than training in order to yield the best response time possible. So, it’s possible that MI8’s comparatively small complement of HBM won’t hamstring performance, particularly given the card’s 512 GB/s of memory bandwidth.

Of course, the flagship MI25 is going to garner the most attention. It hosts a still-unannounced Vega-based GPU, so speeds and feeds are scarce. But as you likely saw from the previous two models, AMD’s Instinct names correlate to Float16 performance, which means we’re looking at up to 25 TFLOPS. This is enabled by a more flexible mixed-precision engine that accommodates two 16-bit operations through the architecture’s 32-bit pipelines. Sony’s PlayStation 4 Pro recently received the same capability, and you can bet other upcoming graphics products from AMD will include it, as well. Dramatically increased compute throughput, and presumably lots of on-package HBM2, make the card well suited to training workloads.

Why is AMD emphasizing FP16 performance at all? Academic research has shown that half-precision achieves classification accuracy similar to FP32’s in deep learning workloads. Storing data as FP16 naturally relieves a lot of pressure on the memory subsystem in bandwidth-limited tasks. But in an architecture like Vega’s, the throughput of FP16 math also theoretically doubles, again with minimal accuracy loss compared to FP32.
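The storage side of that argument is easy to see in a few lines of Python. The sketch below uses the standard library's `struct` module, whose `e` format code is IEEE 754 half precision. It shows that two FP16 values occupy the same 32 bits as one FP32 value, and that each value loses at most about 0.05% to rounding. The weights are made-up numbers for illustration only. It says nothing about how Vega executes packed math or about the accuracy of any real network.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a float to the nearest IEEE 754 half-precision value and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def relative_error(value: float) -> float:
    """Relative rounding error introduced by storing `value` as FP16."""
    return abs(to_fp16(value) - value) / abs(value)


# Hypothetical weights, chosen only for illustration.
weights = [0.1, -1.5, 3.14159, 1024.5]

fp32_bytes = struct.pack(f"<{len(weights)}f", *weights)  # 4 bytes per value
fp16_bytes = struct.pack(f"<{len(weights)}e", *weights)  # 2 bytes per value

# Two half-precision values fit in the footprint of one 32-bit value.
packed_pair = struct.pack("<2e", weights[0], weights[1])

worst_error = max(relative_error(w) for w in weights)

print(f"FP32 storage: {len(fp32_bytes)} bytes, FP16 storage: {len(fp16_bytes)} bytes")
print(f"Two FP16 values occupy {len(packed_pair) * 8} bits")
print(f"Worst relative rounding error: {worst_error:.6f}")
```

For values inside FP16's normal range, the rounding error is bounded by 2^-11. Very large or very small magnitudes overflow or lose further precision, which is one reason mixed-precision schemes keep some quantities in FP32.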


It’s no wonder AMD wanted to get this information out as quickly as possible: Nvidia’s GP100 also supports mixed precision, with up to 21.2 TFLOPS of half-precision throughput at the GPU’s Boost clock rate. Further, Nvidia is beating the drum hard on CUDA 8. Although we’re still months away from seeing Vega-based hardware, AMD can at least tell the software side of its story to get developers thinking about programming for these deep learning accelerators.

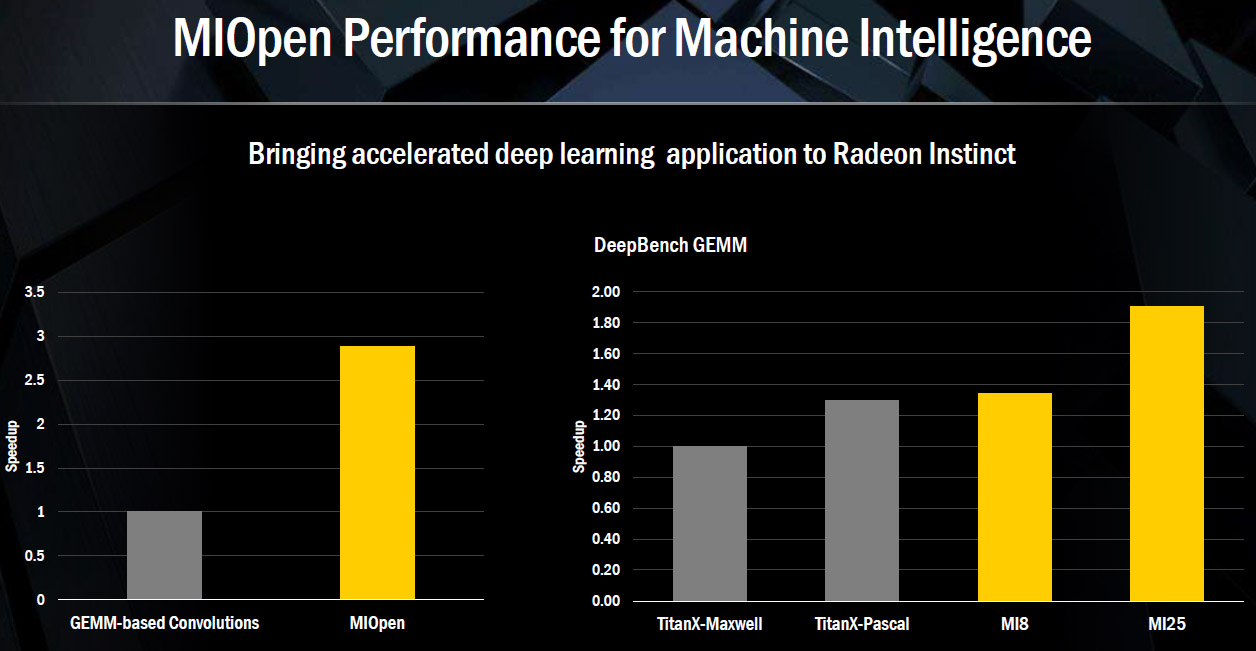

Leveraging The Hardware Through MIOpen

Thus, to go along with its hardware disclosure, AMD also took the wraps off of a GPU-accelerated software library for its Radeon Instinct cards called MIOpen, which should become available in Q1’17, and showed some preliminary benchmark results generated by Baidu Research’s DeepBench tool. For those unfamiliar, DeepBench tests training performance using 32-bit floating-point arithmetic. AMD is reporting only one of the test’s operations (GEMM), so the outcome is fairly cherry-picked. Then again, this is also very early hardware.
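For readers who have not worked with GEMM benchmarks, the sketch below shows the general idea of turning a timed matrix multiply into a throughput figure. It is not DeepBench's code and has nothing to do with MIOpen. It is a deliberately naive pure-Python multiply, with hypothetical function names, that relies on the standard convention of counting 2·M·N·K floating-point operations for an M×K by K×N product.

```python
import time


def gemm_flops(m: int, n: int, k: int) -> int:
    """FLOPs for C[m x n] = A[m x k] * B[k x n]: one multiply and one add per term."""
    return 2 * m * n * k


def naive_gemm(a, b):
    """Reference matrix multiply on lists of lists (slow, for illustration only)."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for i in range(m):
        row_c = c[i]
        for p in range(k):
            a_ip = a[i][p]
            row_b = b[p]
            for j in range(n):
                row_c[j] += a_ip * row_b[j]
    return c


def measure_gflops(m: int, n: int, k: int) -> float:
    """Time one GEMM and convert the elapsed time to GFLOPS."""
    a = [[1.0] * k for _ in range(m)]
    b = [[2.0] * n for _ in range(k)]
    start = time.perf_counter()
    naive_gemm(a, b)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero reading
    return gemm_flops(m, n, k) / elapsed / 1e9


print(f"Naive Python GEMM (64x64x64): {measure_gflops(64, 64, 64):.4f} GFLOPS")
```

A tuned GPU library reaches a large fraction of the hardware's theoretical peak on this operation, which is why GEMM results say more about library quality than a spec sheet does.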

AMD is using a GeForce GTX Titan X (Maxwell) as its baseline. That card has a peak FP32 rate of 6.14 TFLOPS using its base frequency. The Titan X (Pascal) jumps to 10.2 TFLOPS, thanks mostly to a significantly higher GPU clock rate. Using its MIOpen library, AMD showed the 8.2 TFLOPS Radeon Instinct MI8 beating Nvidia’s flagship. Meanwhile, the MI25 appears almost 50% faster than Nvidia’s Pascal-based Titan and 90% quicker than the previous-gen Titan X. Based on what we know about Vega’s mixed-precision handling, we can surmise that MI25 has an FP32 rate around 12 TFLOPS. Clearly, to achieve the advantage AMD is already reporting, MIOpen has to be really well optimized for the hardware.
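To see why the library would have to be well optimized, compare the claimed speedups with the ratio of theoretical peaks. The sketch below takes the FP32 figures quoted above, assumes the MI25's FP32 rate is exactly half of its 25 TFLOPS FP16 rate (AMD has not published it), and rounds AMD's "almost 50%" and "90%" claims to 1.5x and 1.9x. The names and rounded inputs are illustrative.

```python
# Peak FP32 rates in TFLOPS, as quoted in the text.
PEAK_FP32_TFLOPS = {
    "Titan X (Maxwell)": 6.14,
    "Titan X (Pascal)": 10.2,
    "MI25 (estimated)": 25.0 / 2,  # assumption: FP32 runs at half the FP16 rate
}

# Approximate speedups read off AMD's chart (rounded, not exact figures).
CLAIMED_SPEEDUP = {
    "Titan X (Maxwell)": 1.9,
    "Titan X (Pascal)": 1.5,
}


def implied_efficiency_ratio(baseline: str) -> float:
    """How much better the MI25 must use its peak than the baseline uses its own.

    A value above 1.0 means the claimed speedup exceeds the ratio of theoretical
    peaks, so software efficiency has to make up the difference.
    """
    peak_ratio = PEAK_FP32_TFLOPS["MI25 (estimated)"] / PEAK_FP32_TFLOPS[baseline]
    return CLAIMED_SPEEDUP[baseline] / peak_ratio


for card in CLAIMED_SPEEDUP:
    print(f"{card}: implied efficiency ratio {implied_efficiency_ratio(card):.2f}")
```

Under these assumptions the ratio comes out at roughly 1.22 against the Pascal-based Titan X and roughly 0.93 against the Maxwell card. In other words, the claimed lead over Pascal is larger than the estimated gap in raw peak throughput alone would explain.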

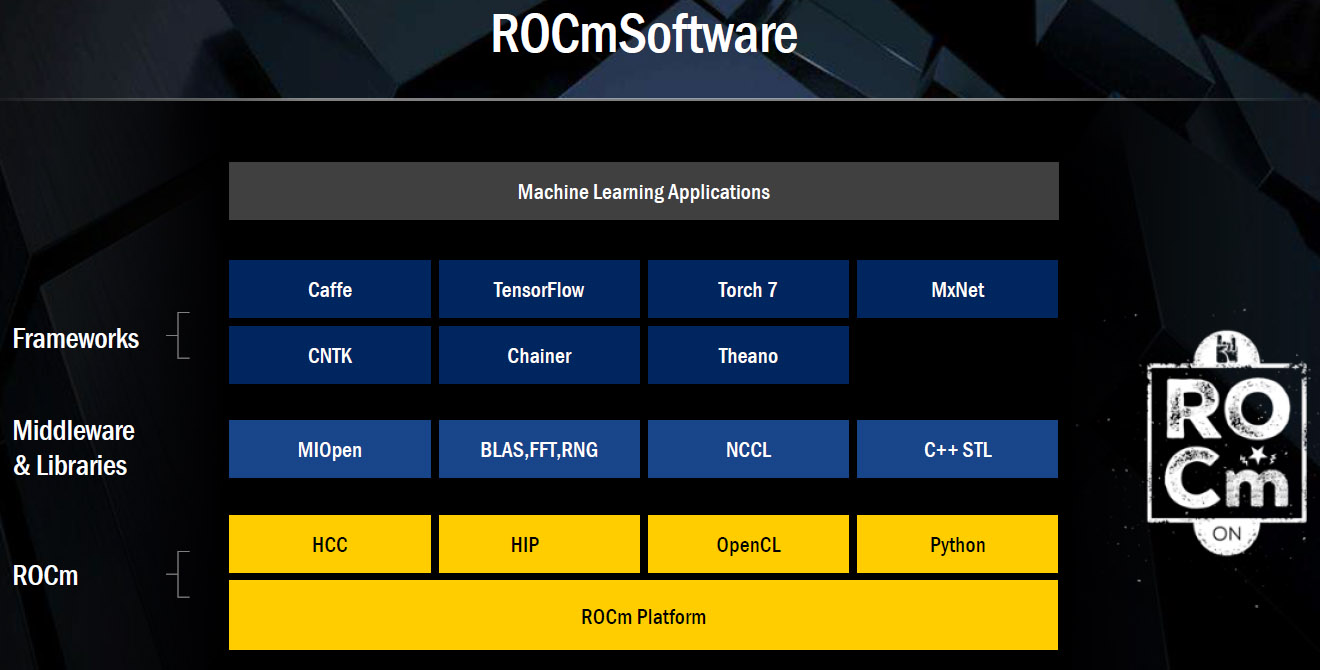

The MIOpen library is but one part of AMD’s software strategy. It sits above the open-source Radeon Open Compute platform (ROCm), which supports the Heterogeneous Compute Compiler, HIP (for converting CUDA code to portable C++), OpenCL, and Python. ROCm also incorporates support for NCCL, a library of collective communication routines for multi-GPU topologies, all of the relevant math libraries, and the C++ standard template library.

Early in 2016, AMD introduced its FirePro S-series cards with Multi-User GPU (MxGPU) technology based on the Single Root I/O Virtualization (SR-IOV) standard. This granted VMs direct access to the graphics hardware, and was apparently popular for cloud gaming. AMD is exposing MxGPU on its Radeon Instinct boards for machine intelligence applications, as well, and was quick to point out that Nvidia is much more stringent about segregating its features into separate products.

Putting It All Together

Beyond merely announcing its Radeon Instinct accelerators, AMD also invited a handful of integrators to show off their plans for the cards. At one end of the spectrum, Supermicro populated its existing SuperServer 1028GQ-TFT—a dual-socket Xeon E5-based 1U machine—with four MI25 cards, yielding up to 100 TFLOPS of FP16 performance. Optional Xeon Phi cards don’t even support half-precision math, and the Tesla P100 add-in cards on Supermicro’s qualification list are slower than the mezzanine version, topping out at 18.7 TFLOPS each.

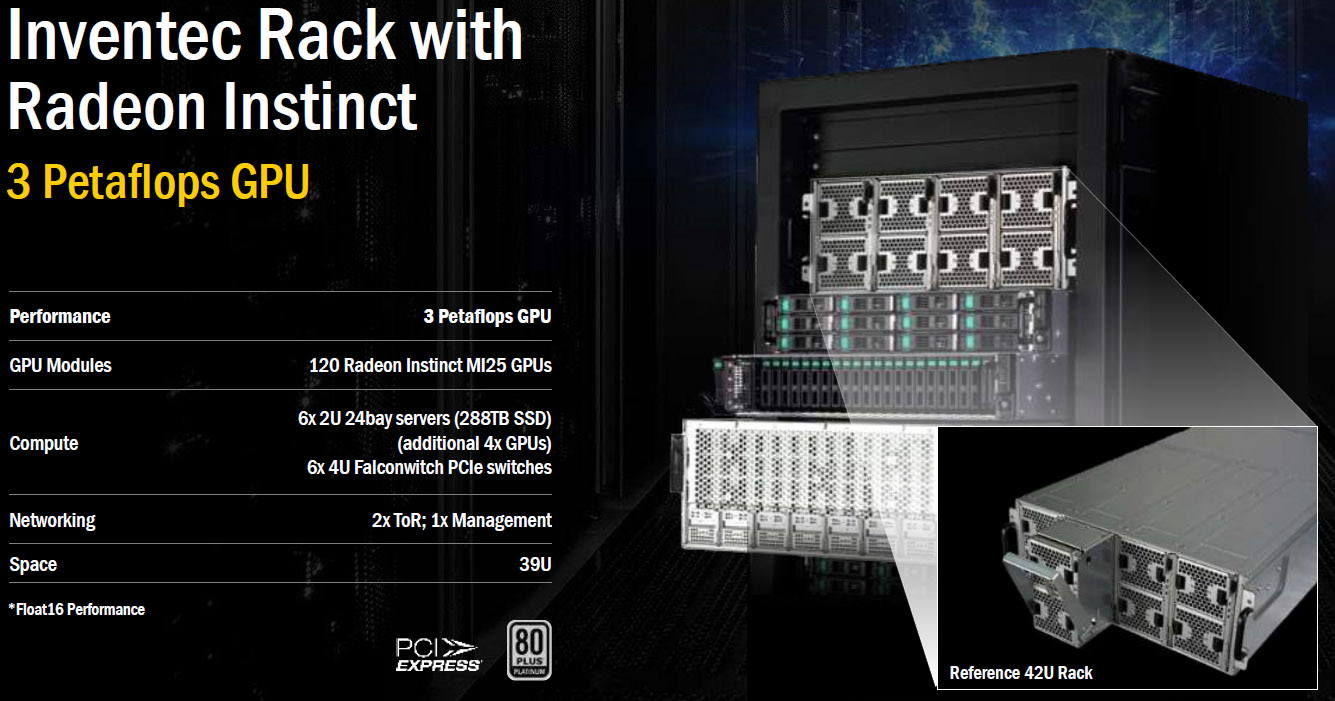

At the other end, Inventec discussed a 39U platform with 120 MI25 GPUs, six 2U 24-bay servers, and six 4U PCIe switches delivering up to 3 PFLOPS.
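Both headline numbers are simple sums of per-card peak rates, as the short sketch below shows. It uses only figures from the text (25 TFLOPS per MI25, 18.7 TFLOPS per Tesla P100 add-in card). The helper name is illustrative, and the totals ignore interconnect, scaling, and utilization losses that any real multi-GPU system would see.

```python
MI25_FP16_TFLOPS = 25.0       # per-card peak implied by the MI25 name
P100_PCIE_FP16_TFLOPS = 18.7  # per-card peak quoted for the Tesla P100 add-in card


def aggregate_tflops(cards: int, per_card_tflops: float = MI25_FP16_TFLOPS) -> float:
    """Sum of theoretical per-card peaks (no scaling losses modeled)."""
    return cards * per_card_tflops


supermicro_mi25 = aggregate_tflops(4)
supermicro_p100 = aggregate_tflops(4, P100_PCIE_FP16_TFLOPS)
inventec_pflops = aggregate_tflops(120) / 1000  # 1 PFLOPS = 1,000 TFLOPS

print(f"Supermicro 1U, 4x MI25: {supermicro_mi25:.0f} TFLOPS")
print(f"Supermicro 1U, 4x P100 add-in: {supermicro_p100:.1f} TFLOPS")
print(f"Inventec rack, 120x MI25: {inventec_pflops:.0f} PFLOPS")
```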

Naturally, AMD couldn’t help but tease an imminent future when its Zen CPUs and Radeon Instinct accelerators operate together in a heterogeneous compute environment. However, we’re at least six months away from the realization of such a combination, so speculation about its potential will have to wait.

-

IceMyth Wow, I think AMD is now on the right track to beat NVidia and stand on top (AMD fanboy comment). Deep learning and neural networks are the kind of things companies should strive for, whether NVidia, Intel, or AMD. I would love to see a comparison of what the coming AMD GPUs will bring to the public in terms of gaming, design, and all other fields, but as the article said, that still needs some time.

AMD's CEO Lisa Su seems to really know what she's doing. It should be a model example of why sometimes you need an engineer CEO instead of a sales type.

-

captaincharisma AMD has turned into a company that's all talk, but as always the results will be lackluster. But hey, it makes the shareholders happy.

falchard But AMD has been in this market for years, how are they late when they are several years early? They were even competing with nVidia on the contracts they got recently.

JakeWearingKhakis JABER2 - No, it's just that the way GPUs are made to calculate, they happen to be very good at neural networks and things like bitcoin mining. I believe they will be (and already are) helping the CPU when it needs the help and the GPU is not being used for graphics processing at that time.

CAPTAINCHARISMA - They are not all talk. They just went the wrong way with CPUs for a while. Which is why hyperthreading is coming back with ZEN. They aren't all talk with GPUs either. What you've seen this year is their low-mid range line up. once VEGA and the 490X come, there is no doubt it will be wonderful tech.

Nvidia doesn't make CPUs (other than Tegra?) and AMD being the smaller company can keep up without stealing technology and keeping it open source.

Intel is a mega corporation, and AMD having less than I think 10k employees is on their ass. AMD gets way less credit than it deserves.

falchard It's amazing how much changed in the last couple months with the RX 480 and GTX 1060. Before you could say the 1060 will perform better in DX11 and worse in Vulkan/DX12. Now it's the RX 480 will perform the same in DX11 and better in Vulkan/DX12. I guess this means the actual competition for the RX 480 8GB was the GTX 1070.

alidan 18993940 said: Wow, I think AMD is now on the right track to beat NVidia and stand on top (AMD fanboy comment). Deep learning and neural networks are the kind of things companies should strive for, whether NVidia, Intel, or AMD. I would love to see a comparison of what the coming AMD GPUs will bring to the public in terms of gaming, design, and all other fields, but as the article said, that still needs some time.

tomorrow should be the stream for zen, given they also showed off vega gpu getting 70-100fps at 4k ultra in doom, may see vega there too.

18996427 said: It's amazing how much changed in the last couple months with the RX 480 and GTX 1060. Before you could say the 1060 will perform better in DX11 and worse in Vulkan/DX12. Now it's the RX 480 will perform the same in DX11 and better in Vulkan/DX12. I guess this means the actual competition for the RX 480 8GB was the GTX 1070.

nah, the 1070 is too far ahead hardware-wise. There is only so much ground to make up in drivers, but the 480 being between a 1060 and 1070 is about right. To beat a 1070 they would need to refine the process and make more of the silicon higher end; some chips can get damn near a 1500MHz clock rate while some can barely go above base. If the process gets improved and they can put out more higher-end parts, it could compete with a 1070, but that likely won't happen unless they rebrand for navi.

omikronsc "AMD said the MI6 and MI8 are ideal for GPU-accelerated inference, which is what a neural network does after it has gone through its more intensive training stage."

So MI6 and MI8 is a crap. Amdahls law points that the best speed up you get from speeding up the LONGEST operation in a process. Image shows that training takes hours (days in fact) and inference takes seconds (milliseconds in fact) and AMD is best in speeding up inference part.

I'm wondering if this is what AMD really presented or author of this article messed it up.

problematiq 18997563 said: "AMD said the MI6 and MI8 are ideal for GPU-accelerated inference, which is what a neural network does after it has gone through its more intensive training stage."

So MI6 and MI8 is a crap. Amdahls law points that the best speed up you get from speeding up the LONGEST operation in a process. Image shows that training takes hours (days in fact) and inference takes seconds (milliseconds in fact) and AMD is best in speeding up inference part.

I'm wondering if this is what AMD really presented or author of this article messed it up.

The problem with benching the training portion is that it's heavily dependent on what you are training. It also depends on your training style. My brother and I trained the same thing (face recognition) on two boxes with identical hardware, yet it took his box a whole day and a half longer to train than mine. (His performed the task better in the end, though.)