Robotic Arm Controlled With Muscle Movement via EMG Signals

If you’ve dreamt of the ultimate mecha-powered future with giant robots and mecha suits controlled by the human body, you’re sure to get excited about this project from Ultimate Robotics. The team has created a robotic arm that can be controlled by muscle movement thanks to its EMG signal sensor PCB, the uMyo. It also leverages one of our favorite microelectronics boards: the Arduino.

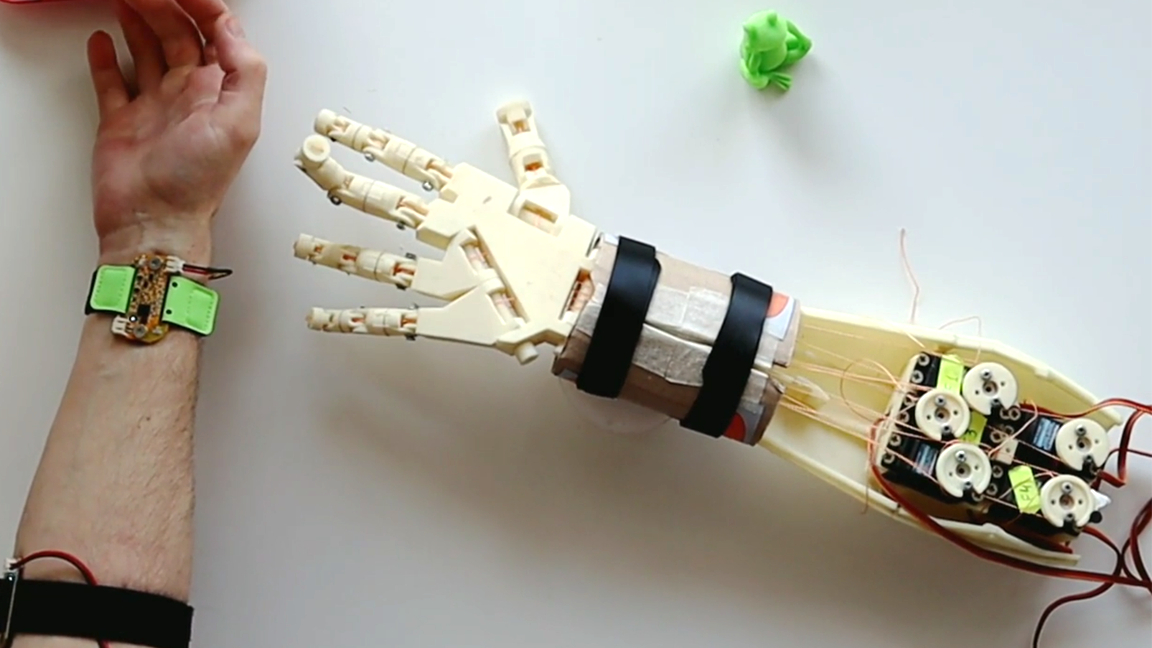

This project was designed as a simple demonstration of what the uMyo sensor module can achieve. The setup uses three separate uMyo PCBs to accurately detect the wearer's muscle movements. Each finger on the robotic arm has two tendons, both connected to a wheel driven by a servo. The servo's rotation determines whether the finger curls or uncurls.
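As a rough illustration of the two-tendon arrangement, the Python sketch below makes an assumption that is ours and not a detail published by Ultimate Robotics. It assumes both tendons wrap the servo wheel in opposite directions. Turning the wheel by an angle θ then reels in one tendon by the arc length r·θ and pays out the other by the same amount. The 180-degree servo range and the 10 mm wheel radius are hypothetical values.

```python
import math

# Assumption (not from the source): both tendons wrap the same servo wheel in
# opposite directions, so one is reeled in while the other is paid out.
SERVO_RANGE_DEG = 180.0  # hypothetical servo travel


def curl_to_servo_deg(curl: float) -> float:
    """Map a curl fraction (0 = open, 1 = fully curled) to a servo angle."""
    curl = min(max(curl, 0.0), 1.0)  # clamp out-of-range requests
    return curl * SERVO_RANGE_DEG


def tendon_travel_mm(servo_deg: float, wheel_radius_mm: float) -> tuple[float, float]:
    """Return (flexor, extensor) travel in mm; positive means reeled in.

    Each tendon moves by the arc length r * theta, in opposite directions.
    """
    arc = wheel_radius_mm * math.radians(servo_deg)
    return arc, -arc


angle = curl_to_servo_deg(0.5)
flexor, extensor = tendon_travel_mm(angle, wheel_radius_mm=10.0)  # hypothetical 10 mm wheel
print(f"servo {angle:.0f} deg: flexor {flexor:+.2f} mm, extensor {extensor:+.2f} mm")
```

With a 10 mm wheel, a half curl (90 degrees) moves each tendon about 15.7 mm in opposite directions. A real build would scale the servo range to the finger's actual travel.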

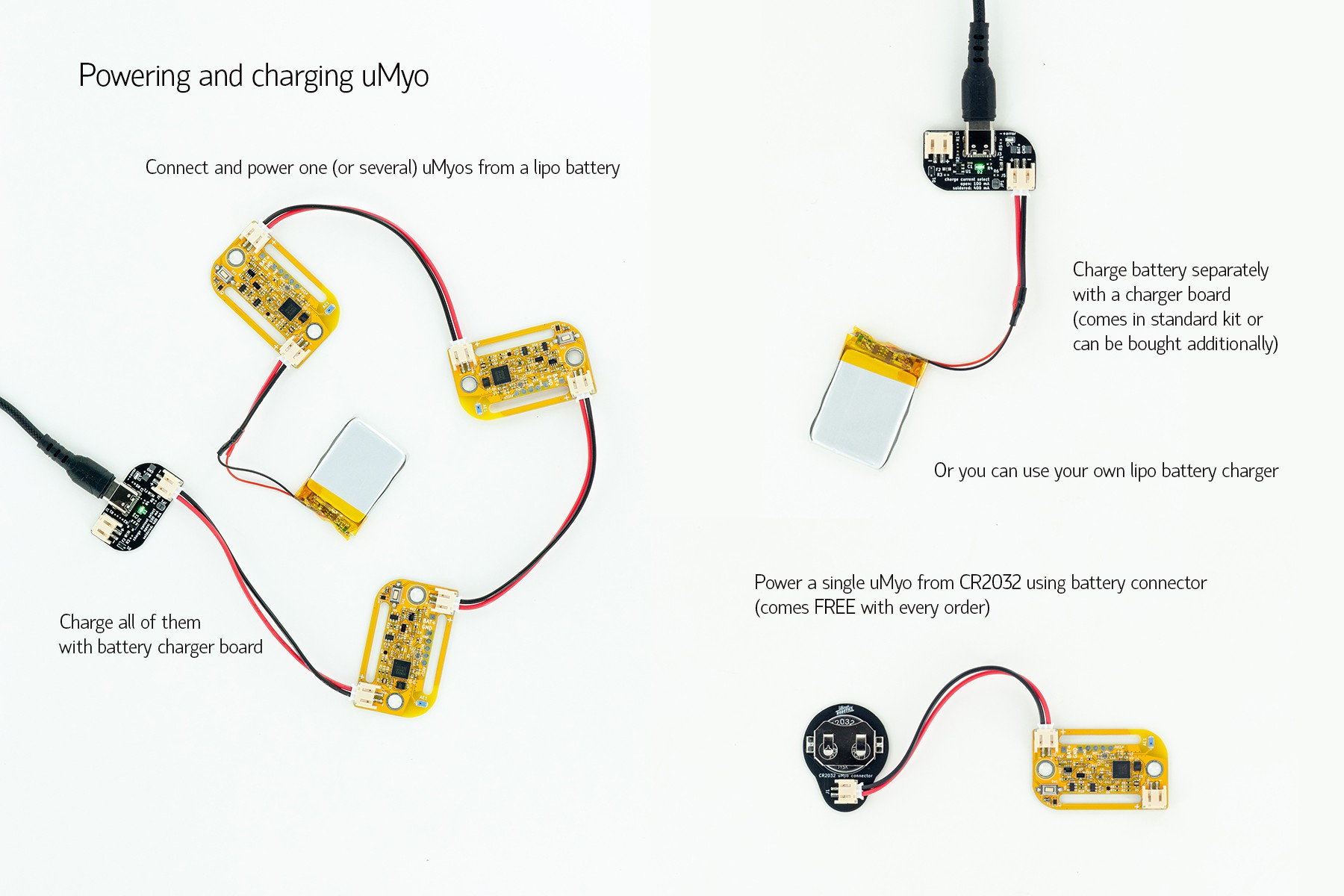

The shining gem of this creation is the uMyo sensor, an open-source device designed to be worn for user input. It transmits data wirelessly, so the wearer isn't tethered to the output device by cables. According to Ultimate Robotics, the uMyo can detect signals from various muscle groups: arm muscles, as in this project, as well as leg, facial, and torso muscles.

Two uMyo sensors are placed at the elbow to monitor the muscles that move the fingers, and a third sensor at the wrist monitors thumb movement. The sensors transmit their signals wirelessly to an Arduino, which receives them through an nRF24 module. The Arduino then processes the input and sends commands to the servos through a PCA9685 driver board, causing the robotic arm to move in response.
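The receive-process-drive loop described above can be sketched as follows. This is a hypothetical Python model, not the project's Arduino code. It assumes each uMyo reading arrives as a muscle-activity level normalized to 0..1 and smooths it with an exponential moving average. It then converts the level into a PCA9685 tick count for a servo that expects 1 to 2 ms pulses at 50 Hz. The channel names, smoothing factor, and pulse range are illustrative assumptions. Only the PCA9685's 12-bit (4096-step) PWM resolution is a property of the chip itself.

```python
# Hypothetical model of the Arduino's processing step, written in Python for
# readability. Assumptions: readings are muscle-activity levels already
# normalized to 0..1, and each servo expects 1-2 ms pulses at 50 Hz.
PCA9685_STEPS = 4096                   # the PCA9685 has 12-bit PWM resolution
PWM_FREQ_HZ = 50                       # typical hobby-servo refresh rate (assumption)
MIN_PULSE_MS, MAX_PULSE_MS = 1.0, 2.0  # assumed servo pulse range


def smooth(prev: float, sample: float, alpha: float = 0.2) -> float:
    """Exponential moving average to damp noisy EMG levels."""
    return prev + alpha * (sample - prev)


def level_to_ticks(level: float) -> int:
    """Convert a 0..1 activity level to the tick at which the pulse ends,
    assuming the pulse starts at tick 0 of each PWM period."""
    level = min(max(level, 0.0), 1.0)  # clamp out-of-range levels
    pulse_ms = MIN_PULSE_MS + level * (MAX_PULSE_MS - MIN_PULSE_MS)
    period_ms = 1000.0 / PWM_FREQ_HZ
    return round(pulse_ms / period_ms * PCA9685_STEPS)


# Hypothetical channel map: two elbow sensors drive finger servos, and the
# wrist sensor drives the thumb servo. The readings below are made up.
channels = {"fingers_a": 0.0, "fingers_b": 0.0, "thumb": 0.0}
incoming = {"fingers_a": 0.9, "fingers_b": 0.1, "thumb": 0.5}

for name, sample in incoming.items():
    channels[name] = smooth(channels[name], sample)
    print(name, level_to_ticks(channels[name]))
```

At 50 Hz each PWM period is 20 ms. A 1 ms pulse therefore corresponds to about 205 of the 4096 steps, and a 2 ms pulse to about 410. On real hardware, that count would be written to the servo's PCA9685 channel over I2C, which this sketch does not show.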

Not only is the uMyo sensor open source, but so is the software used in this robotic arm project. The team was kind enough to share everything on GitHub for anyone interested in perusing the source code.

To get a closer look at this project, check out the official uMyo breakdown posted by Ultimate Robotics on Hackaday. The team shared plenty of details about how it works and what goes into the PCB. You can find more information on the robotic arm on Reddit and see it in action on YouTube.


Ash Hill is a contributing writer for Tom's Hardware with a wealth of experience in hobby electronics, 3D printing, and PCs. She manages the Pi projects of the month and much of our daily Raspberry Pi reporting while also finding the best coupons and deals on all tech.


punkncat I have been reading a bit about similar (or the same) tech in relation to amputees. The study had a couple of below-elbow amputees picking up Coke cans and doing other simple tasks with the device. It would be super duper cool to see this technology (along with 3D printing) evolve prosthetics to a whole new level of functionality. I would absolutely love to have an articulating ankle again...

edzieba If I had a penny every time someone stuck some myoelectric sensors on a forearm to use as an input device, I'd probably be able to buy a nice meal by now.

Like with head-mounted EEG sensors, the major problem is the precision of electrode placement required to get repeatable results. In a lab or clinical environment, where trained technicians can place the electrodes (or bespoke fitted hard mounts can be used), it can be viable, but for self-donning, not really. Whilst we're a few decades past the transition from "train humans to the interface" to "train the interface to user intent", it's still no good if you need to retrain every time the interface is donned. Think of the inconvenience as akin to your keyboard randomising its key layout every time you sit down at your desk.